- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Preparing an Analytical Data Model for HANA Cloud ...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Motivation

Sometimes, Its like so What if .. I 've got this data here.

What can I do with it. - Perhaps a legacy model, some technical data, some business data. The application is running since a while and well used. But the maintenance becomes to expensive, not efficiently used, old school user interface. Applications where data gets created may be exchanged. In many companies exist a lot of little tools, reports, written by an application developer, perhaps external, perhaps retired already, but left some nice footprint, used by departments.

In a change ahead - we still have this app there. It is serving us with these reports ad hoc on this and that topic. Its GUI of course is some old school but getting some information that is exactly the way we need. Perhaps you can modernize it a bit but leave the underlying system as is? (and please cheaper and later to some more capabilities to be added.. as usual)

Sound familiar?

I was once in a situation of finding a little application that made its job for almost 3 years continuously. Built by a programmer on a physical Linux box running inside a shielded network area collecting production machine data (Operating Time, Maintenance Time, Issues, downtimes, and create nice reports and alerting persons) taken from a fault tolerant (never downtime) hardware monitoring all the production machinery like certain robots, packaging, filling, pressing, molding. . At the end the little box should be upgraded and no one could find it. - Where was the true physical location of the machine? - It took almost a month to find it, it was an old minitower that was already written off, scheduled to be scrapped already and was only in place for some testing purposes. People found it convenient and so kept using it. No test to production process. At the time that happened security topics were not really considered like now all happened in early 90 when IT was somewhat like a wild west adventure.

Still the app was needed but ported to a modern environment. So I was asked to rebuild /port this in an at that time more modern environment. In recent time "move to cloud" I was told by customers on similar business cases. - "How can we port this app and this capability to cloud?"

One Remark - some of the pictures and examples contain German language. The data I used in this sample show case were of German demo origin. As the content however is of no concern just the process. It takes a lot of effort to translate both field names and test data and does not contribute to the entire process.

The Beginning

The showcase here is exactly based on such a foundation. My manager came to me and asked me, we need to showcase something of typical everyday business issue. A sales slip from a next door branch of a chemist's store /drugstore reminded me of a business case I did a while around working with sales slip analysis.

Of course, anything of shopping can be done now online. But supporting the store next door is also a social topic.

I was confronted with several questions like:

- What data is available?

- What data is permissible to be used and not violating data protection laws?

- What of the permissible data provided sufficient information?

- What would you like to know if you do retail ?

As a particular challenge, my background is C++, data modeling, SQL, less often used in cloud environment where XML, scripting is preferred. Also I intended to reuse as much as possible and challenge the low code / no-code approach of SAP BTP. Can I do this without any scripting in BTP except of the SQL topics I always do?

This is a beginners view of SAP BTP Cloud development. What it will show is, transformation and reuse of an on premise data model

originating from another Database, adopting it to the showcase and for SAP HANA, load data into HANA and make it visible / accessible to a BTP application

that later provides Calculation Views that can be accessed by SAP Analytics Cloud and presented to the user.

Sounds simple? And it is if all requirements are fulfilled and sufficient privileges are granted. In fact all problems I faced while creating the showcase were caused by insufficient privileges. Later on I will describe on what to pay attention.

It is assumed the reader knows how to create a calculation view specific capabilities of HANA SQL or SAP Analytics Cloud.

Also the reader is familiar with tools like SAP PowerDesigner, SAP BTP Business Application Studio and 3rd party applications like DBeaver

The showcase shall fulfill:

reuse and transform an existing custom data model to cloud

put some analytical application on top.

allow in a later phase addition of further extensions such as interactive maintenance of data etc.

Use best capability of each layer to increase user experience.

Live model and no data shall be loaded to SAP Analytics Cloud.

Data shall stay in one place only.

No duplication

No preaggregation shall occur.

The focus of this blogpost is to find a most efficient development. process

So lets look in the cookbooks of SAP HANA Cloud & SAP Business Technology Platform what is needed to assemble.

Recipe of a small analytic SAP HANA application

Ingredients

Data Model

Of course a database application has some data to store. In the showcase I used a classic star model representing a sales slip analysis application that was simplified. It contains one fact table and some dimensions such as products, branches, managers. If you have a database application that should be put to SAP HANA Cloud, just take that one!

Test data

Some related test data to prove Queries, Views and reports should be available of course.

Queries as topping

For the showcase I was interested in what was the content of a typical shopping basket.

What cashier was used, how long was the time to check out, in relation to number of items processed and revenue.

Dashboard decoration

For the showcase a SAP Analytics Cloud dashboard was chosen, simply to illustrate the queries in an easy to consume form. The showcase ends on connection to SAP Analytics Cloud. The topic of this blogpost is to prepare SAP HANA for use with the SAP Analytical Cloud Layer (or e.g. another BTP Application Layer)

Tools

- SAP PowerDesigner, optional to easy convert model in SAP HANA Cloud format and to deploy

- Hana Utilities / ODBC / JDBC drivers, required to allow client side connections and to connect any tools used. To be downloaded from [2]

- MS Visual Studio Code with HANA Plugins. optional to locally edit some of the major scripting and to test some basic views. Alternative to SAP Business Application studio if Browser based development is undesired.

- SAP Business Application Studio in Web, during development the Web based development was the preferred method. It is like MS Visual Studio Code in Web but messaging, direct connection to Cloud Foundry and git sync was far more comfortable.

- DBeaver Community edition supports SAP HANA Cloud Edition out of the box and allows an easy local testing of queries, views, loading of data from client side and anything that's needed to do data manipulation.

- git Somewhere the code needs to be stored in a version controlled development manner. Simply go git and connect from both MS Visual Studio and SAP Business Application Studio in Web as you go.

Foundation

- SAP HANA Cloud, This is the core system on the showcase. The goal was how simple is it, to move a legacy reporting application from a conventional database to cloud and put modern analytical and presentation layers on top.

- SAP Business Technology Platform and in particular SAP Business Application Studio was used to configure layer with minimum or no code approach. Indeed there is no code but some SQL functions used.

- SAP Analytics Cloud The presentation layer we connect to.

Process

The process is best described as a little BPMN diagram.

Process Diagram

The roughly necessary steps described as tasks in the process or rather processes are followed in this blog post. The advantage is - you could always go back an any step and continue hence the development process is rather evolutionary. Only very few dependencies exist. Adjusting the model and testing queries / views may result in adjustment or refinement of the model. -All other steps are rather independent.

Required Privileges

Account to SAP HANA Cloud .(right to create objects, Create, Read, Update, Insert, Load, Delete.)

Privilege ROLE ADMIN,

CREATE ROLE "<schemaname>::external_access_g";

CREATE ROLE "<schemaname>::external_access";

GRANT "<schemaname>::external_access_g", "<schemaname>::external_access" TO SCHEMA_GRANTOR_USER WITH ADMIN OPTION;

GRANT SELECT, SELECT METADATA ON SCHEMA <schemaname> TO "<schemaname>::external_access_g" WITH GRANT OPTION;

GRANT SELECT, SELECT METADATA ON SCHEMA <schemaname> TO "<schemaname>::external_access";In this case the

<schemaname>is called BONDATEN (Sales Slip Data) like so:

CREATE ROLE "BONDATEN::external_access_g";

CREATE ROLE "BONDATEN::external_access";

GRANT "BONDATEN::external_access_g", "BONDATEN::external_access" TO SCHEMA_GRANTOR_USER WITH ADMIN OPTION;

GRANT SELECT, SELECT METADATA ON SCHEMA BONDATEN TO "BONDATEN::external_access_g" WITH GRANT OPTION;

GRANT SELECT, SELECT METADATA ON SCHEMA BONDATEN TO "BONDATEN::external_access";

The privileges defined like so are required to perform live connections and to allow definition of calculation views and creation of layers such as BTP apps. For later use of data manipulation / display the admin privileges are not required. End users would not even be near the database but need to login on application level only.

There are 2 users important.

Your own email you registered with SAP Business Technology platform and Cloud Foundry in example: developer@company.com

A database user: DBUSER

These we will use in the next steps.

Some remark. In a real business case the external visibility of schemas will not be managed by the usual developer user but by the DB-Admin of your SAP HANA Cloud. So the DB Admin will provide the roles and foundations to provide the user provided service instance in cloud foundry.

Creating connections

The instance we are working in SAP Business Technology Platform and SAP Business Application Studio is named "SSA" as short for SalesSlipAnalysis

Once users and privileges are set, it is needed to make the database visible to SAP Business Technology Platform and SAP Business Application Studio:

Log into cloud foundry using Terminal inside business application studio with your user and password

on command prompt (replace the user with your respective email address you are registered with. and your password)

user: SSA $ cf login

API endpoint https://api.cf.eu10.hana.ondemand.com

Email: developeruser@company.com

Password:The cloud foundry should respond with

Authenticating...

OK

Targeted org sharedservices.

Targeted space HANACloud.

API endpoint: https://api.cf.eu10.hana.ondemand.com

API version: 3.115.0

user: developeruser@company.com

org: sharedservices

space: HANACloud

user SSA$

Now, once authenticated is is required to set the user store:

Set the Hana Cloud User Store (Password and certain connection data are altered for security reasons)

From terminal console in SAP Business Application Studio we need to create an user provided service for SSA instance

In this case we call it UPS4SSA

cat db/.env | cut -d "=" -f 2- -z \

| ~/bin/jq -r -c '.hana[] | select(.tags[]==["mta-resource-name:hdi_db"]) .credentials | \

{host: .host, port: .port, driver: .driver, certificate: .certificate, user: "DBUSER", password: "SomePassword", schema: "BONDATEN", tags: "hana"}' \

| ~/bin/jq > /tmp/ups4ssa_credentials.json

user: misc $ cf create-user-provided-service UPS4SSA -p ./ups4ssa_credentials.json

Creating user provided service UPS4SSA in org sharedservices / space HANACloud as developeruser@company.com...

OK

user: misc $ cf service UPS4SSA

Showing info of service UPS4SSA in org sharedservices / space HANACloud as developeruser@company.com...

name: UPS4SSA

service: user-provided

tags:

There are no bound apps for this service.In case there is no admin preparing a role for user provided services then the entire task of exposing the user defined service need to be done by loading a SAP HANA client to Cloud Foundry:

~/sap/hdbclient/hdbuserstore -i SET HANACloud_SSA_DBAdmin a*******-****-****-****-*****x.hana.prod-eu10.hanacloud.ondemand.com:443 DBAdmin

Password = SomePa22w0r9

user: ssabase $ ~/sap/hdbclient/hdbuserstore LIST

DATA FILE : /home/user/.hdb/workspaces-ws-r2lsd/SSFS_HDB.DAT

KEY FILE : /home/user/.hdb/workspaces-ws-r2lsd/SSFS_HDB.KEY

KEY HANACLOUD_SSA_DBADMIN

ENV : a*******-****-****-****-*****x.hana.prod-eu10.hanacloud.ondemand.com:443

USER: DBAdmin

Operation succeed.

~/sap/hdbclient/hdbuserstore -i SET HANACloud_SSA_DBUSER a*******-****-****-****-*****x.hana.prod-eu10.hanacloud.ondemand.com:443 DBUSERAccess to SAP HANA Cloud from SAP Business Application Studio

There should be a file AccessTo<ProjectName>.hdbgrants in JSON format defining the external access configuration.

In this showcase it is called AccessToSSA.hdbgrants and located in path SSA/db/src:

{

"external-access": {

"object_owner": {

"system_privileges" : [ "SYSTEM PRIVILEGE 1", "SYSTEM PRIVILEGE 2" ],

"global_roles" : [

{

"roles" : [ "SSA_USER", "SSA_REPORT" ],

"roles_with_admin_option" : [ "SSA_ADM" ]

}

],

"schema_privileges" : [

{

"privileges" : [ "INSERT", "UPDATE" ],

"privileges_with_grant_option" : [ "SELECT" ]

}

],

"schema_roles" : [

{

"roles" : [ "BONDATEN_SSA_USER", "BONDATEN_SSA_REPORT" ],

"roles_with_admin_option" : [ "BONDATEN_SSA_ADM"]

}

],

"object_privileges" : [

{

"name": "SALESSLIP",

"privileges": [ "INSERT", "UPDATE" ],

"privileges_with_grant_option" : [ "SELECT" ]

}

],

"global_object_privileges" : [

{

"name" : "BONDATEN",

"type" : "REMOTE SOURCE",

"privileges" : [ "CREATE VIRTUAL TABLE" ],

"privileges_with_grant_option" : [ "CREATE VIRTUAL PROCEDURE" ]

}

]

},

"application_user": {

"system_privileges": [{""}],

"global_roles": [{""}],

"schema_privileges": [{""}],

"schema_roles": [{""}],

"object_privileges": [{""}],

"global_object_privileges": [{""}]

}

}

}If there was any inhibiting factor in this little showcase then it was "missing privileges" so getting the admin to grant me the necessary privilege was a bit time consuming. That is a human topic. This is the main reason why I spend so much effort in documenting this here. If there is any topic not showing / not working it might be a missing role or privilege or security configuration.

Further connections required

Client Side Access

Connecting any ODBC, DBeaver, SAP PowerDesigner and MS Visual Studio Code is simple and occurs the very same way to SAP HANA Cloud:

Example for DBeaver:

DBeaver connection configuration

For ODBC make sure to download from [Ref.2] a most recent SAP HANA Client supporting SAP HANA Cloud.

The destination should be like so - data base type has to be SAP HANA Cloud and port 443.

odbc configuration with port 443

Preparation

This step considers the 2nd and 3rd swim lane.

The Data Model

The original data model

Relational Datamodel from OnPremise

The original data model as reverse engineered from a row based relational database with referential integrity on db-level. For simplicity the model was generated to SAP HANA Cloud, adopted compatible data types and classic referential integrity was taken away. Instead HANA type associations were added to allow performance and permit loading of data w/o issues or additional QS checks. If the app is perhaps later enhanced from just reporting to modifying capability then referential integrity can be added later or performed on application level. For now - we intend a reporting / querying application only.

SAP HANA Cloud Classic Datamodel

The tables as generated to SAP HANA Cloud were generated to SQL by SAP PowerDesigner and then deployed to the database.

Preparing Basic Views and Calculated Columns

Now once the model is deployed we re rather in lane 3 and test data shall be loaded and verified first. This topic is not covered here. load test data can be performed using web console - this is the tutorial other like dataglider or (as I did) DBeaver.

As often - there are many parameters that can calculated / derived using SAP HANA SQL capabilities directly in the database - thus reducing I/O and use capabilities at origin. Once Test Data os

Example Utilization - of turnover and amount of goods per operating hour at cashiers

HANA Resolution by Hour

CREATE VIEW BONDATEN.V_KPI_UTILIZATION AS

SELECT COUNT(PD_BON_NR) Durchsatz_Stunde, --Number of Sales Slips

SUM(PD_SUMME) AS Umsatz_Stunde, --Sum (total value of goods incl VAT)

SUM(PD_MENGE) AS Ausstoss_Stunde, --Sum (total amount of goods that went through cashier)

hour(PD_ZEIT) AS stunde, -- operating hour

PD_LFD_POS AS PoS, --identifier of Point of Sales / Cashier

PD_FILIALE AS FILIALE -- branch / location

FROM BONDATEN.POS_DATA_WIDE pdw

WHERE PD_ZEIT IS NOT NULL AND

PD_SATZART = 'F' -- Record type

GROUP BY hour(PD_ZEIT), PD_LFD_POS, PD_FILIALE;Example KPI What time es required to process a checkout of a customer

The goal is simply to provide some HANA functionality like HOUR, like SECONDS_BETWEEN to be exposed as a calculated column and provide this to presentation level.

CREATE VIEW BONDATEN.V_KPI_POS AS

SELECT pdwa.PD_FILIALE AS FILIALE,

pdwa.PD_LFD_POS AS POS,

pdwa.PD_BON_NR AS BON_NR,

pdwa.PD_BON_NR AS BON_NR_M,

pdwb.PD_SUMME AS BON_SUMME,

pdwb.PD_MENGE AS BON_MENGE,

HOUR(pdwa.PD_ZEIT) AS OEFFNUNGSSTUNDE,

SECONDS_BETWEEN(pdwa.PD_ZEIT,pdwb.PD_ZEIT) AS BEARB_DAUER_SEC,

SECONDS_BETWEEN(pdwa.PD_ZEIT,pdwb.PD_ZEIT)/60 AS BEARB_DAUER_MIN

FROM BONDATEN.POS_DATA_WIDE pdwa,

BONDATEN.POS_DATA_WIDE pdwb

WHERE pdwa.PD_BON_NR = pdwb.PD_BON_NR

AND pdwa.PD_FILIALE = pdwb.PD_FILIALE

AND pdwa.PD_LFD_POS = pdwb.PD_LFD_POS

AND pdwa.PD_ZEIT IS NOT NULL

AND pdwb.PD_ZEIT IS NOT NULL

AND pdwb.PD_ZEIT IS NOT NULL

AND pdwa.PD_SATZART = 'H' -- Identify start of record

AND pdwb.PD_SATZART = 'F';Of course - the reader may have created far more complex views and calculation - it is just a little reminder to both resolution and performance - reduce the client side calculation, allow for data base functionality and simplify calculation. Also here "Murphy's law" is applicable - never change a view that is proven to work and produces expected result. So if there is one that exists - try to use it as is on SAP HANA Cloud. Adapt and optimize after transformation.

View Result

This step may need to be repeated to adjust view and query to meet demand.

Once basic data model and basic views are complete the access level to data consuming applications need to be defined.

Intermediate Access Layer to Consuming Applications

Now this blog post considers the 4th swimlane and creation of the calculation view as abstraction layer that will serve as the access level for SAP Analyics Cloud applications an presentation layer.

Let's create an application in SAP Business Application Studio assuming the reader is familiar to the operating.

Start from Template for Cloud Application

In this case I did choose a template to create a cloud application to permit a later extension and adding enhancements on demand.

Now after login to database and cloud foundry it should be possible to see the database schema:

Data Model in SAP Business Application Studio

The association are added and we like to have a look how the schema meta data is defined as SQL code:

Entities in SQL

Now the abstraction layer needs to be created

Preparation of Synonyms

In SAP Business Application Studio create a folder synonyms and use either graphical or scripting editor to create synonyms for each entity.

Creation of Synonyms

Creating the calculation view

The calculation view creation and deployment is the last step in this layer - and likely be complex. Depending on business requirements additional calculated or transformed columns may be defined.

This blogpost shows how to setup a calculation view in Business Application studio

There are 2 methods of creating calculation views - either using the graphic editor as shown above or direct SQL - rather complex.

CalculationView Editor

CREATE CALCULATION SCENARIO "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE" USING '[{"__CalculationNode__": true,"name": "ssadb::V_KPI_UTILIZATION","operation": {"__AnyDSNodeData__": true,"source": {"__IndexName__": true,"schema": "BONDATEN_3","name": "ssadb::V_KPI_UTILIZATION"}},"attributeVec": [{"__Attribute__": true,"name": "DURCHSATZ_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 66,"length": 18,"sqlType": 4,"sqlLength": 19},"attributeType": 0},{"__Attribute__": true,"name": "UMSATZ_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 66,"length": 18,"scale": 2,"sqlType": 34,"sqlLength": 18},"attributeType": 0},{"__Attribute__": true,"name": "AUSSTOSS_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "POS","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "FILIALE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0}]},{"__CalculationNode__": true,"name": "Projection_1","inputVec": [{"__Input__": true,"name": "ssadb::V_KPI_UTILIZATION","mappingVec": [{"__Mapping__": true,"type": 1,"target": "SALES_STUNDE","source": "DURCHSATZ_STUNDE","length": 0},{"__Mapping__": true,"type": 1,"target": "MENGE_STUNDE","source": "AUSSTOSS_STUNDE","length": 0}]}],"operation": {"__ProjectionOpNodeData__": true},"attributeVec": [{"__Attribute__": true,"name": "FILIALE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "POS","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0},{"__Attribute__": true,"name": "SALES_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 66,"length": 18,"sqlType": 4,"sqlLength": 19},"attributeType": 0},{"__Attribute__": true,"name": "UMSATZ_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 66,"length": 18,"scale": 2,"sqlType": 34,"sqlLength": 18},"attributeType": 0},{"__Attribute__": true,"name": "MENGE_STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"attributeType": 0}],"debugNodeDataInfo" : {"__DebugNodeDataInfo__": true,"nodeName": "Projection_1"}},{"__CalculationNode__": true,"name": "finalNode","isDefaultNode": true,"inputVec": [{"__Input__": true,"name": "Projection_1","mappingVec": [{"__Mapping__": true,"type": 1,"target": "FILIALE","source": "FILIALE","length": 0},{"__Mapping__": true,"type": 1,"target": "POS","source": "POS","length": 0},{"__Mapping__": true,"type": 1,"target": "STUNDE","source": "STUNDE","length": 0},{"__Mapping__": true,"type": 1,"target": "SALES_STUNDE","source": "SALES_STUNDE","length": 0},{"__Mapping__": true,"type": 1,"target": "UMSATZ_STUNDE","source": "UMSATZ_STUNDE","length": 0},{"__Mapping__": true,"type": 1,"target": "MENGE_STUNDE","source": "MENGE_STUNDE","length": 0}]}],"operation": {"__SemanticQueryOpNodeData__": true},"attributeVec": [{"__Attribute__": true,"name": "FILIALE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"description": "FILIALE","attributeType": 0},{"__Attribute__": true,"name": "POS","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"description": "POS","attributeType": 0},{"__Attribute__": true,"name": "STUNDE","role": 1,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"description": "STUNDE","attributeType": 0},{"__Attribute__": true,"name": "SALES_STUNDE","role": 2,"datatype": {"__DataType__": true,"type": 66,"length": 18,"sqlType": 4,"sqlLength": 19},"description": "DURCHSATZ_STUNDE","kfAggregationType": 1,"attributeType": 0},{"__Attribute__": true,"name": "UMSATZ_STUNDE","role": 2,"datatype": {"__DataType__": true,"type": 66,"length": 18,"scale": 2,"sqlType": 34,"sqlLength": 18},"description": "UMSATZ_STUNDE","kfAggregationType": 1,"attributeType": 0},{"__Attribute__": true,"name": "MENGE_STUNDE","role": 2,"datatype": {"__DataType__": true,"type": 73,"sqlType": 3,"sqlLength": 10},"description": "AUSSTOSS_STUNDE","kfAggregationType": 1,"attributeType": 0},{"__Attribute__": true,"name": "row.count","role": 2,"datatype": {"__DataType__": true,"type": 66,"length": 18,"sqlType": 4},"isVisible": false,"kfAggregationType": 2,"attributeType": 4,"specialAttrType": 4}],"debugNodeDataInfo" : {"__DebugNodeDataInfo__": true,"nodeName": "Aggregation"}},{"__Variable__": true,"name": "$$client$$","typeMask": 512,"usage": 0,"isGlobal": true},{"__Variable__": true,"name": "$$language$$","typeMask": 512,"usage": 0,"isGlobal": true}]' WITH PARAMETERS ( 'FLAGS'='128' );

CREATE COLUMN VIEW "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE" WITH PARAMETERS (indexType=11,

'PARENTCALCINDEXSCHEMA'='BONDATEN_3','PARENTCALCINDEX'='ssadb::CV_KPIUTIL_CUBE','PARENTCALCNODE'='finalNode');

COMMENT ON VIEW "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE" is 'CV_KPIUTIL_CUBE.hdbcalculationview';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."FILIALE" is 'FILIALE';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."POS" is 'POS';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."STUNDE" is 'STUNDE';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."SALES_STUNDE" is 'DURCHSATZ_STUNDE';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."UMSATZ_STUNDE" is 'UMSATZ_STUNDE';

COMMENT ON COLUMN "BONDATEN_3"."ssadb::CV_KPIUTIL_CUBE"."MENGE_STUNDE" is 'AUSSTOSS_STUNDE'Generated Output is obviously complex.

This is how it will look like in the HANA Cloud Web Console

Deployed Code in Business Application Studio HANA Plugin

Testing the calculation view

Testing the Calculation Views after deployment shows any inconsistent data - or if ranks and hierarchies are used on what level field and elements are. Now the abstraction layer is complete and the next step is the final and last focus: the presentation layer and what will be presented to users.

SAP Analytics Cloud

Connecting SAP Analytics Cloud to SAP HANA Cloud.

It is required and assumed a SAP Analytics Cloud connection is available and the user is permitted to create connections and develop stories and dashboards.

SAC HomePage

All icons are supported by tool tips - and navigate to the one in this picture circled in red to create a connection.

Connections list

The connections list is now showing up and assuming not yet created one - navigate to the "+" sign again circled in red to create a new connection.

Follow the steps to create a new connection and complete. Please bear in mind a live connection will be configured - This means no data will be stored SAP Analytics Cloud. When data is read - it will be on demand on the most recent active data set.

So we select a live data source from the selection of data sources offered:

DataSources

Do not click of source type or category - SAP HANA is all that is required for now.

Now the connection details are to be defined:

Connection Details

Select SAP HANA Cloud now from available connection types

Connection Type Drop Box

Continue to add authentication.

Connection Details continued..

Here it will be defined its a SAP HANA Cloud connection. Once finished click on OK to complete.

Well done.

Now the connection should appear in the list of connection available and if required to edit - use the check box on the left and the edit & deletion icons previously greyed will become now available.

Connection Defined

Refining the Data Model in SAP Analytics Cloud

So now the database can be accessed by SAP Analytics cloud connection. The data model will now become a foundation for the analytical model in SAP Analytics Cloud.

Select now the Modeler in the side menu.

SAP Analytics Cloud Modeler

The modeler is identified by the cube symbol in the icon bar. In the picture the models I created are already defined. To create a new model for a live data connection select the icon Live Data Connection to access the assistant.

Create A Model from Live Connection

The assistant shows the system type for selection, the connection we just defined and now we need to select the data source.

The data source in this case are the calculation views that were created. Only cube type calculation views can be used as a data source.

Calculation View as Data Source

Lets select a data source and create a model.

Access the Calculation View from Modeler

Now in the modeler all dimensions and measures defined in the calculation view can be accessed and further calculations may be defined

The Modeler

Now as connection and model are defined - its time to create a story for the dashboard.

Navigate to Stories and open

Story Workbench

The story workbench supports to create a consumable dashboard and further calculations and graphical presentation from scratch or template. It is not topic of this blogpost to show creation of a story but the workflow from data to presentation.

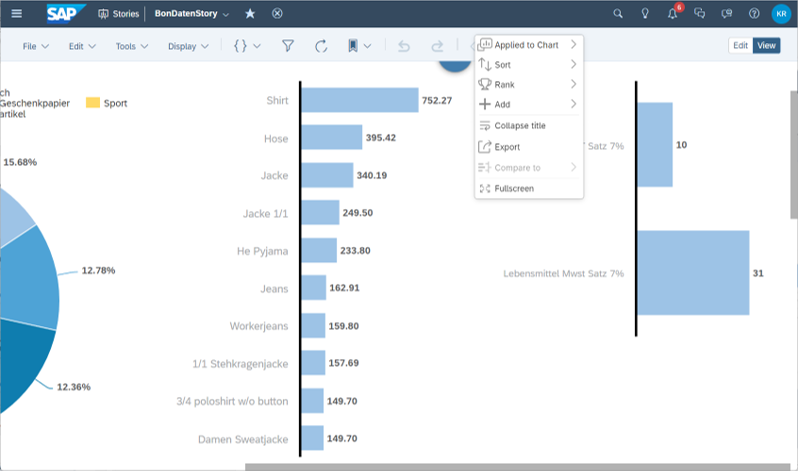

Creating a Story

At the end - finally - a complete story can be published and now consumed by users.

Summary

A recipe and a workflow to complete transformation from a previous local - entirely non cloud legacy local analytics report to SAP HANA cloud database and SAP Business Technology Platform service to be consumed via SAP Analytics Cloud . With just some SQL. Summarizing up - When I did this - the problems I encountered were connection and access rights to acquire. Once the administrator provided me rights and connection information - all steps were straight forward. So access your data live and present it in a modern and easy to use SAP Analytics Cloud Story.

References

1 Creating and user provided service instance in Cloud Foundry

https://help.sap.com/docs/BTP/65de2977205c403bbc107264b8eccf4b/a44355e200b44b968d98ddaa42f07c3a.html

2. Development Tools for SAP HANA Cloud

https://tools.eu1.hana.ondemand.com/#hanatools

- SAP Managed Tags:

- SAP Analytics Cloud,

- SAP HANA Cloud,

- SAP PowerDesigner,

- SAP Business Technology Platform

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

93 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

67 -

Expert

1 -

Expert Insights

177 -

Expert Insights

301 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

346 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

430 -

Workload Fluctuations

1

- Getting Analytical Data on different Dates in one CDS Cube in Technology Blogs by SAP

- SAP Sustainability Footprint Management: Q1-24 Updates & Highlights in Technology Blogs by SAP

- Custom data as table, CDS, Domain, Business object and all that jazz... in Technology Blogs by SAP

- SAP Datasphere - Space, Data Integration, and Data Modeling Best Practices in Technology Blogs by SAP

- Create an xP&A Business Flow with SAP Analytics Cloud, SAP Datasphere & SAP Cloud Integration in Technology Blogs by SAP

| User | Count |

|---|---|

| 29 | |

| 17 | |

| 15 | |

| 13 | |

| 11 | |

| 9 | |

| 8 | |

| 8 | |

| 8 | |

| 7 |