- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- SAP Datasphere - Space, Data Integration, and Data...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Motivation:

SAP Datasphere is a powerful all-in-one business data platform. It provides the technology foundation for creating a business data fabric, delivering meaningful data with context and logic to every user. It combines a flexible data fabric architecture with a robust platform for integrating multiple data sources, performing complex analysis, and modeling.

While these capabilities offer valuable insights, the complexity of data integration and modeling can affect user experience. However, strategic design choices can ensure that SAP Datasphere performs optimally. This article shares best practices and tips to maintain smooth data flow, prevent performance problems, and enhance efficiency. It helps you set up your SAP Datasphere space, integrate data, and model effectively while encouraging creativity as a data engineer, modeler, or architect.

1. Managing Your Datasphere Spaces:

What is the Space and What are the benefits?

In SAP Datasphere, a space is a secure area set up by an administrator where members can handle data. It functions like a self-contained data warehouse, residing within the SAP HANA Cloud database, tailored for specific departments, Line of Business (LOB) units, data domains, project teams, or individuals. Administrators are responsible for creating one or more spaces, managing memory and disk storage allocation, assigning members (users), setting priorities and statement limits, establishing source connections, and acquiring data and models. In SAP Datasphere, everything happens within these spaces to keep things safe. Data and models in space stay private unless shared with other spaces or made available for use by BI tools or other systems.

What are the Space types and Important Configuration Parameters?

- Space Type: SAP Datasphere: Required Space type and the Administrator can only create spaces of type SAP Datasphere. Multiple spaces can be created and configured.

- Optional Space Type: SAP Datasphere(Embedded Data Lake Access): The Administrator must select a space connecting to the embedded HANA data lake. Checkbox setting: Use This Space to Access the Data Lake. Only one space can have access and can only write data to the embedded SAP HANA data lake.

- Optional Space Type: SAP BW bridge: SAP BW bridge provisioning generates a dedicated space for exposing BW bridge objects as remote tables to other SAP Datasphere spaces, allowing the connection of a single SAP BW bridge system to SAP Datasphere.

Important Configurations Parameters

Enable Space Quota Disk(GB) and Memory(GB) Allocate disk storage (GB) and memory(GB) allocation for the space. There is no best practice it is based on the requirement/demand. however generally Disk: Memory(RAM) ratio can be 100:40-50

- Disk 256 GB : Memory(RAM) 120 GB

- Disk 1024 GB : Memory(RAM) 480 GB

- Disk 4096 GB : Memory(RAM) 1800 GB

Memory storage value cannot exceed the amount of disk storage space limit.

Default: Enabled

A disabled option lets space consume all available disk and memory resources in your SAP Datasphere tenant. (Not recommended)Lock or Unlock Your Space SAP Datasphere locks spaces exceeding memory or disk storage limits to prevent resource overload. Users can then free up space by deleting data, or an administrator can assign additional storage. Space Priority (1-low, 8-high) The setting helps SAP Datasphere to prioritize space execution (1-low, 8-high).In situations where spaces are competing for available threads, those with higher priorities have their statements run before those of spaces with lower priorities. e.g. Space gets higher priority on which you have live and operational reports compared to historical reports. Workload Configuration (Custom): Total Statement Thread Limit

Set thread limit between 1% and 70% (or equivalent number) of total tenant threads. Too low impacts statement performance, and too high impacts other spaces. Workload Configuration (Custom): Total Statement Memory Limit To avoid OOM statement errors. Define a memory limit (between 0 for no limit and the total available tenant memory) for statements running in this space. This setting balances performance for this space with a potential impact on memory available for other spaces in your SAP Datasphere tenant.

To prevent a space from encountering a general out-of-memory situation, you can establish a memory limit for the space, such as 90%. This prevents a statement within the space from causing a global out-of-memory issue and interrupting other processes.

Expose for Consumption by Default Allows default exposure of all new space views (Graphical, SQL, Analytical Model) while modelers can adjust individual view consumption via the "Expose for Consumption" switch Time Data

Time Tables and DimensionsIt creates date-time tables and dimensions. You can generate in one space and create required foundation dimension date-time models and share them with other spaces.

Space Organization Scenarios/Examples:

1. Line of Business (LoBs)

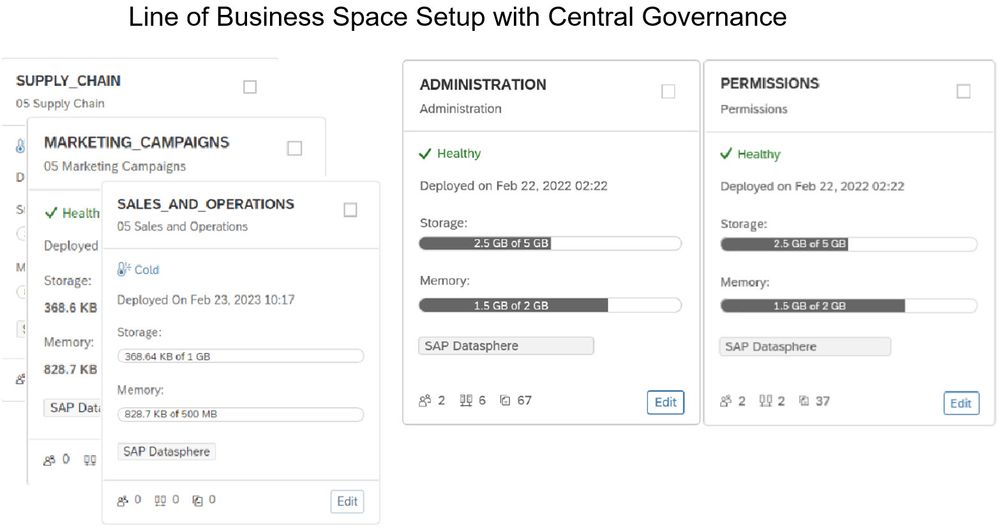

Multiple spaces can be created like Sales, Marketing, Supply Chain, Finance, HR, Service, Analytics, IT Management, etc. To follow Central governance, separate space for Admin and Permissions for Data security (Data Access Control) is recommended.

Administration Space:

- Only admin users will be members for authorization and monitoring.

- Auditor to access audit logs and tenant activities.

- Admin/Security/Developer creates DACs and assigns them to data builder views to enable data security (row level security).

- Specific restricted views are shared with the LOBs spaces (e.g. Sales, Marketing). That way only specific business data with row-level security is available to consume for the business users.

- Benefits:

- Granular Control: Limits access to authorized personnel (e.g., Business or Security admins) who manage specific values.

- Developer Flexibility: Enables developers to work efficiently without authorization restrictions.

- Secure Business Consumption: Provides secure access for users who only need to consume data, ensuring they see only relevant information.

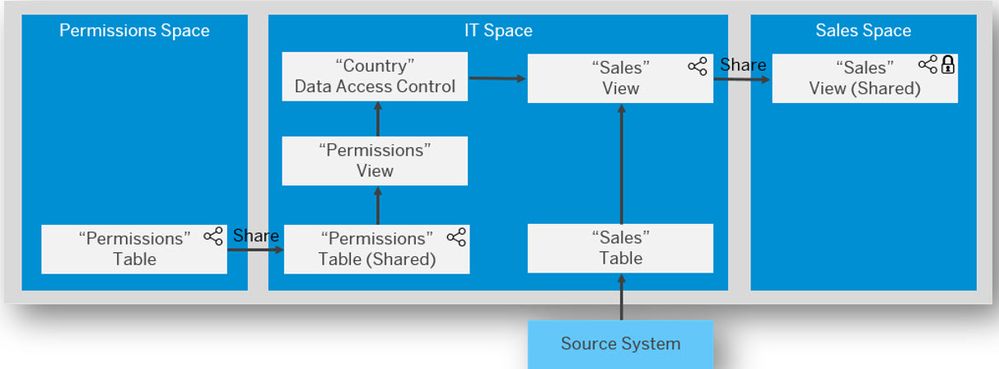

Permissions Space:

- A centralized approach with only assigned users will manage the authorization values table for the data access control for all LOB spaces.

- Protection against unauthorized access and value maintenance from common users.

- Share and expose authorization value tables/views to Administration or IT Space.

Centrazlied IT Space:

- A centralized IT space can be created with connection to multiple sources SAP S/4HANA, SAP BW, SAP SuccessFactors, SAP Concur, Salesforce, Google BQ, etc.

- Data is made available as remote tables or replicated tables and modeling using a data builder.

- Consume the Permission space shared table and create the permission views. Create a Data Access Control(DAC) object and Assign it to view (e.g. Sales view).

- Share the views with different LOB spaces like the Sales view with the Sales department Space.

2. Departments

Similar to LOBs space concept with centralized governance. Create multiple spaces for the key departments in your organization like IT, Finance, Marketing, Operations Management, HR, Sales, Purchasing, etc., and a dedicated Permission space for data security.

3. Data Domains

Create spaces for different data segments like Customer Data, Product Data, Sales Data, Financial Planning & Analysis (FP&A), Social Data, Streaming Data, Financial Data, HR Data, and Manufacturing Data. Enable data sharing among these spaces to encourage reuse while ensuring sensitive data is protected using methods like data masking and authorization. The PERMISSION space authorization table, managed by designated security and administrative users, controls access rights for sharing data across these spaces.

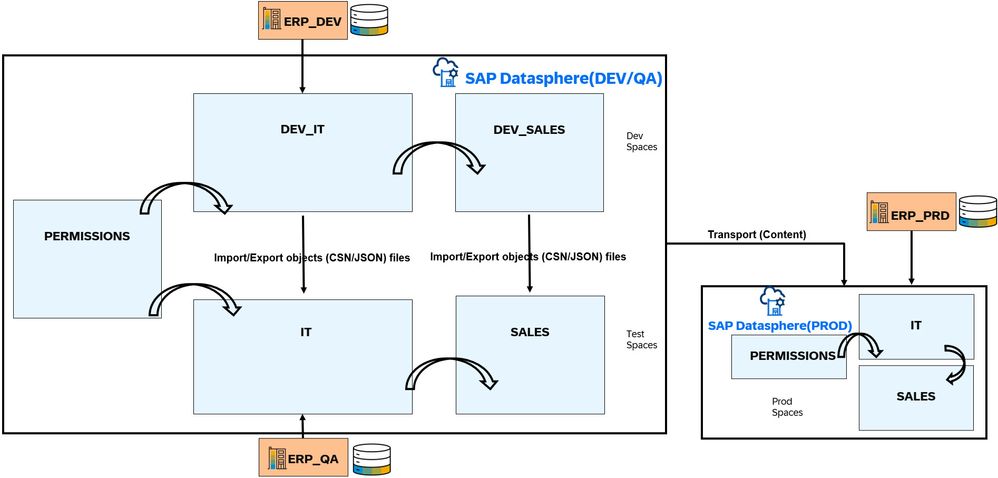

4. Single SAP Datasphere tenant for development and testing using the Space concept.

Leveraging the Spaces concept makes it feasible to utilize a single SAP Datasphere tenant for both development and testing (Dev/Test) purposes. However, it's advisable to adopt a three-tier tenant landscape for Development, Testing, and Production scenarios. Using at least two distinct SAP Datasphere tenants for your data landscape is recommended. This strategy ensures a distinct segregation between your development and testing (Dev/Test) environment and your production environment. The Dev/Test tenant provides a dedicated platform for constructing and validating data models and transformations, safeguarding your production data integrity as the ultimate source of truth.

- It can used for small deployments and the initial phase of the project.

- Not an ideal approach from the object transport and lifecycle management perspective.

- Manual import/export of the objects (CSN/JSON) files across the spaces.

- From SAP Datasphere Dev/QA tenant to PROD tenant, transport content network is used.

Example:

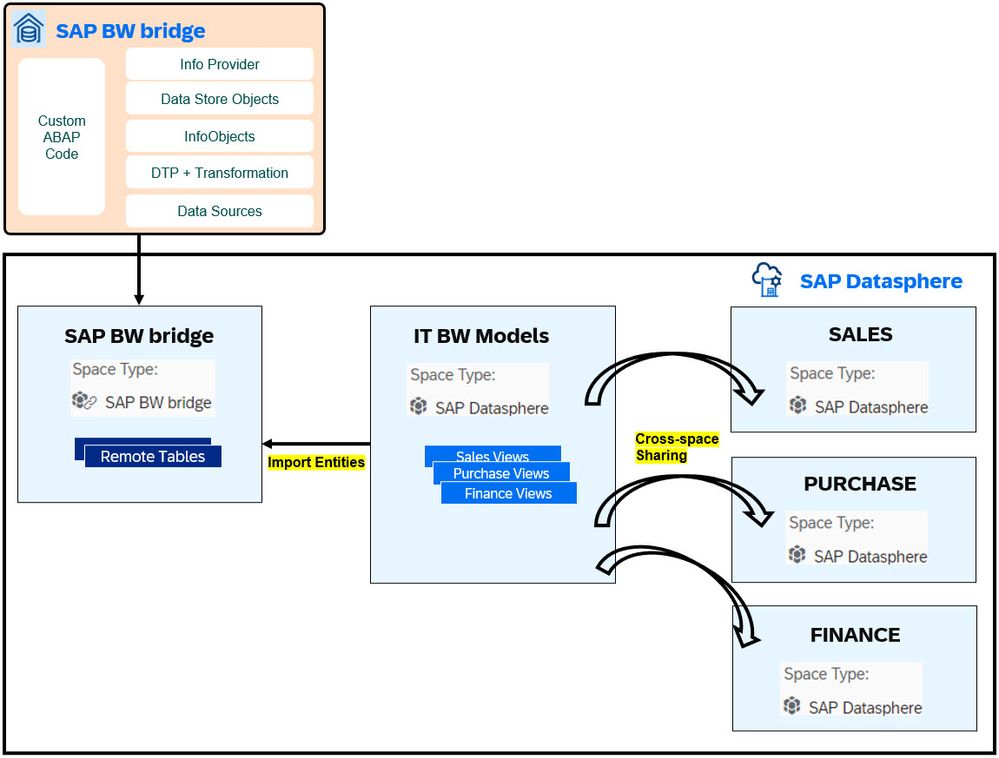

5. With the SAP BW bridge

Customers with investments in SAP BW and BW/4HANA who aim to harness the advantages of cloud-based data warehousing can opt for the SAP BW bridge feature. Enabling these optional features in SAP Datasphere results in the creation of a dedicated space within the tenant for importing BW bridge objects as remote tables, which can then be shared with other spaces. Unlike the standard SAP Datasphere space type, creating data builder objects in the SAP Datasphere BW Bridge Space is not permitted. Importing remote tables is allowed in the BW bridge space, and it's advisable to maintain a separate space from a governance perspective. In this setup, space IT BW Models automatically generate SAP Datasphere objects through the entities import feature for corresponding BW queries, Infoproviders, aDSOs, etc. The created objects can be shared with the target Line of Business (LOB) space via cross-space sharing capabilities. Consequently, original BW bridge objects belong to the BW bridge space type, while created objects are part of the IT BW Model managed space.

Example:

General Space Guidelines:

Multiple spaces: It is advisable to create multiple spaces within your tenant for efficient organization of data and resources.

Segregate Access with Dedicated Spaces: Establish separate spaces for administrator, IT, and security permissions, along with multiple spaces for departments, projects, Lines of Business (LOBs), data domains, etc. This practice aids in controlling access to sensitive data and maintaining stability across various environments for distinct purposes.

Maximize Cross-Space Sharing for Reusable Data and Models: SAP Datasphere facilitates sharing tables, views, and models across spaces within the same tenant, fostering reusability and mitigating data duplication. For instance, refrain from accessing or replicating the same SAP S/4HANA CDS view or tables like ACDOCA into multiple SAP Datasphere spaces, as this can strain your SAP S/4HANA system and lead to data duplication within SAP Datasphere. Instead, adopt a strategy of replicating once and consuming N times across spaces

Considerations for Space Restrictions: Direct sharing of data source connections, data flows, transformation flows, replication flows, and task chains between spaces is not supported. If necessary, these elements must be recreated within each space individually. Additionally, objects cannot be copied or pasted from one space to another. Importing and Exporting data builder objects in CSN/JSON files.

- Naming Conventions for Spaces are Crucial:

- Space name can be changed at any time, however, Space ID cannot be changed later.

- Avoid using reserved keywords(Refer to Rules for Technical Names) such as SYS, PUBLIC, CREATE, SYSTEM, DBADMIN, PAL_STEM_TFIDF, SAP_PA_APL, Datasphere_USER_OWNER, Datasphere_TENANT_OWNER, Datasphere_AUDIT_READER, Datasphere_GLOBAL, and Datasphere_GLOBAL_LOG. Additionally, refrain from using the prefix SYS or prefixes like Datasphere and SAP_ in space IDs, unless explicitly advised. Moreover, keywords reserved for the SAP HANA database cannot be utilized in a space ID. (Refer to Reserved Words help document)

- Examples(Space ID: Space Name): MASTER_DATA: Master Data, SUPPLY_CHAIN: Supply Chain, LEGAL: Legal, SALES_AND_OPERATIONS: Sales and Operations, CENTRAL_IT_SPACE: Central IT Space, PERMISSIONS: Permissions, HUMAN_RESOURCES: Human Resources, ADMINISTRATION: Administration, GOVERNANCE: Governance, SPEND_ANALYSIS: Spend Analysis, FINANCE: Finance, MARKETING: Marketing, CUSTOMER_DATA: Customer Data, CONFIDENTIAL: Confidential, *_DATALAKE: * Data Lake, OPERATIONAL_ANALYTICS: Operational Analytics etc.

- Cross-space sharing scenario: Problem: When sharing models between spaces A and B, users in space B may not see the shared model in the model list. Instead, it appears as a table or view in the data modeling editors, losing context and affecting user decisions. This can be problematic if the shared model includes a calculated column and relies on data restrictions for detailed reporting. When a modeler in space B uses the restricted column in a selection criterion, the calculation needs to happen before the restriction, potentially causing severe performance issues and memory consumption. Solution: To avoid this, consider naming calculated measures with "CM_" prefix and calculated dimensions with "CD_" prefix to indicate their nature to other developers.

2. Data Integration:

SAP Datasphere offers both remote and replication connections to various source systems. Comprehensive details on supported connection types are available in the SAP Help documentation. Not covering in-depth the data connectivity and configuration as it's not the focus of this article, providing quick high-level guide reference and then shifting to the data integration best practices.

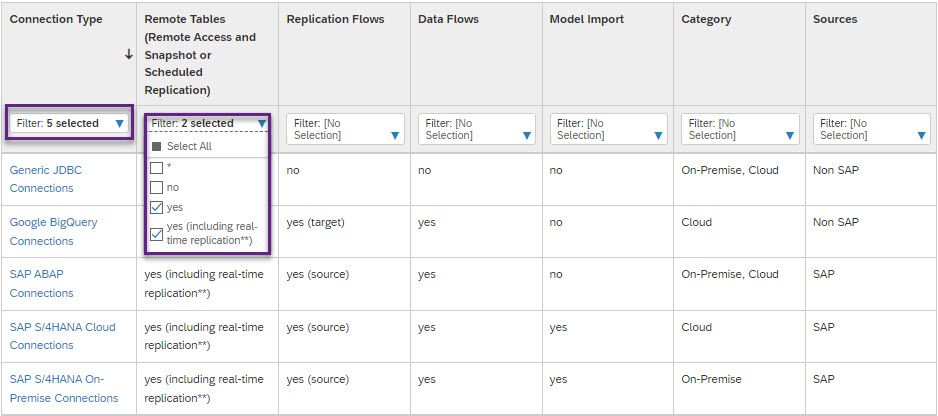

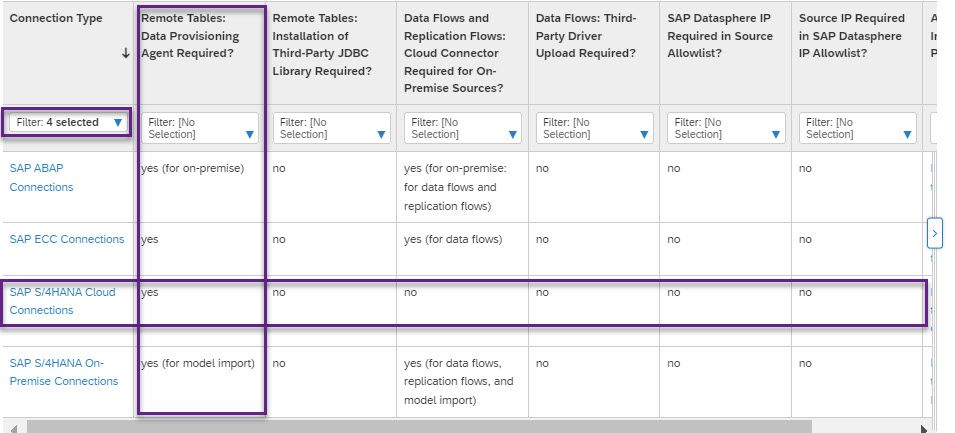

- Connection Types Overview: The overview table provides a quick reference for key functionalities supported by various source connection types in SAP Datasphere. Information is focused on functionalities relevant to data access and manipulations. Use the required column filter to select the Connection Type, Remote Tables, Replication Flows, Model Import, etc. Below example: Selected connection types Generic JDBC, Google BigQuery, ABAP, and S4 and next filtered the column Remote Table(yes). Likewise based on the source connection type, this table will provide the information if the source is supported with a remote table (yes, no), Replication Flows (source, target), Data Flows(yes, no), and model import (yes, no).

- Preparing and Configuration Connections: The overview lists table clarifies prerequisites for the connection types indicating if a specific connection requires a middleware component like the DP Agent (Data Provisioning Agent) or Cloud Connector (CC) for communication or supports direct connection (without any additional software component). Below example: To enable data integration features such as Remote Table, Replication Flow, or Data Flow, specific connection types require different configurations. For ABAP, SAP S/4HANA, and ECC connections, installation and configuration of DP Agent are necessary. However, for utilizing the Replication Flow with SAP S/4HANA Cloud connections, a Cloud Connector (CC) isn't necessary. Nonetheless, for other connection types, it's crucial, as indicated in the image below. This table serves as a convenient guide to pinpoint the software prerequisites essential for various data integration functionalities.

Data Integration Options and Recommendations:

| Integration Feature | Remote or Replicate Data | Use cases, Recommendations |

| Remote Table | Remote |

|

| Remote Table | Replicate(Snapshot, Real-time replication) |

|

| Replication Flow | Replicate |

|

| Data Flow | Replicate |

|

| Persistent View | Replicate |

|

| ETL tools | Replicate |

|

Choosing Between Remote Tables and Data Replication in SAP Datasphere:

The decision between using remote tables (data federation) and data replication in SAP Datasphere depends on several factors. Here's a breakdown to help you choose the best approach for your operational reporting needs:

Use Remote Tables (Data Federation) When:

- Limited Data Pull: You only need a small subset of data from the source system (HANA Calc. Views or S/4HANA Analytic Queries).

- Pre-Aggregated Data: The data in the source system is already aggregated to reduce network traffic and latency.

- Real-Time Reporting: Business needs reports with the most up-to-date data. Remote tables provide near real-time access.

- Filter and Variable Usage: You can restrict data retrieval using filters and variables in your reports, further reducing the amount of data transferred.

- Performance Considerations:

- Location: The data center location of both the source and Datasphere can impact performance. Geographically close locations minimize latency.

- Column Selection: Selecting fewer columns reduces data transfer size.

- Aggregated Data: More the aggregated data is, the better the performance.

- Filtering: Specifically on the columns like date, company code, sales org, plant. Avoid filtering on calculated dimension columns.

- Join, Union, Order By Use the join condition on the ID/key columns rather than a description, and specify columns in Order By with ASC, DESC explicitly.

- Data Volume Size (Rows and Columns): A smaller number of records retrieved improves performance.

- Optimize Queries at the Source: When creating views, and queries in S/4HANA, BW, HANA, Hyperscaler, etc. optimal performance on the source side helps overall performance.

Use Data Replication When:

- Modeling and Operations: Datasphere needs to perform modeling, transformations, joins, unions, or create layered data marts. Data needs to be persisted for these operations.

- Intensive Logic Processing: Business logic requires complex calculations or transformations best done within Datasphere.

- Performance vs. Freshness Trade-Off: While replicated data might be slightly delayed compared to remote tables, report performance can be optimized.

- Operational Business Needs: Consider the criticality of real-time data vs. performance for reports.

General Guidelines:

- Start with Remote Tables: For quick operational reports and less data transfer, use remote tables. They're real-time and reduce network traffic.

- Assess for Replication: If reports need complex logic or performance is key, think about replicating data to Datasphere.

- Use ABAP CDS Views Instead of Direct Tables: Access data efficiently from SAP S/4HANA systems with ABAP CDS views. They offer a standard way to expose data and work with real-time integration via Change Data Capture (CDC).

- Prioritize ABAP CDS views with data extraction and CDC over ODP Data Sources: Use direct connection via ABAP pipeline engine and delta handing using Change Data Capture engine. Here SDI ABAP Adapter (DP Agent) is not required.

- Consider Data Profiling: Profile your source data to understand its structure and quality before integrating data. This helps prevent issues during integration.

- Secure Sensitive Data: Protect sensitive data during integration by masking or anonymizing it, and following security best practices

- Remember: The key is to balance data freshness, report performance, and business requirements.

3. Data Builder Modeling:

SAP Datasphere offers functionalities for data modeling, and here are some best practices to follow for optimal performance, maintainability, and reusability:

General Recommendations:

- SAP HANA Cloud Modeling Best Practices Apply to Datasphere as well: Since HANA Cloud powers SAP Datasphere, established best practices for HANA data modeling are directly applicable.

- Embrace Reusability and Avoid Giant Views: Instead of monolithic views, create a library of smaller, well-defined basic, intermediate reusable views. These reusable pieces can be put together to make more complicated views, making it easier to work with and maintain them. Large, single views can be cumbersome and impact performance. Design smaller, focused views that cater to specific purposes.

- Example: create separate reusable views with Semantic Type: Dimensions for the master data, Text for the master data text, Hierarchies data, Fact data, etc.

- Use space-cross sharing and re-use the views with other spaces.

- Strategic Filtering: Instead of using inner joins for filtering, apply WHERE clause filters on joined datasets. This reduces unnecessary data processing.

- Simplify Logic: Keep your data model logic as simple as possible. Complex transformations might be better handled in separate transformation steps within transformation flows or data flows.

- Hierarchical Modeling: Utilize hierarchies within your data model to represent relationships between master data elements. This improves data governance and simplifies queries.

- Independent Associations: Model dimension, and text views that are associated with master data independently. This allows for reusability across different functional areas.

- SQL vs. Graphical Views: For complex business logic, consider using SQL views. Graphical views might be more suitable for simpler models or improved user experience in reporting tools.

- Clear and Consistent Naming Conventions are Crucial: Guide.

Layered Modeling Approach for Better Performance, Organization and Management:

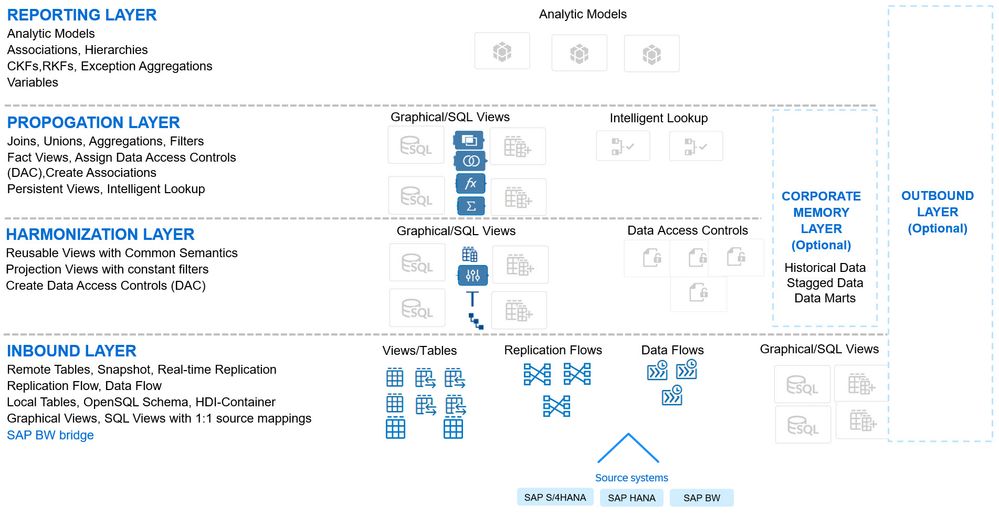

Don't underestimate the power of a well-defined modeling approach in SAP Datasphere! Layering your data architecture from the start (even though it's a familiar concept in data warehousing) lays a solid foundation for efficient data integration, reusability, and long-term maintainability. This approach organizes data into distinct layers (inbound, harmonization, optional propagation, and reporting) with specific purposes, simplifying data management and minimizing complexity in the long run. Here's a breakdown of the layers:

Layers | Purpose | Best Practices |

| Inbound Layer (Remote/Acquire Data, Data Projection ) | Ensure data accessibility through federated or replicated formats. Utilize data integration functionalities such as Replication Flow, Data Flow, or alternative tools to ingest data into SAP Datasphere's OpenSQL Schema. Employ methods like remote table snapshots, real-time replication, and scheduling via task chains. Develop inbound layer views with 1:1 mapping from the source, implementing filters and projection only as needed while refraining from any unnecessary data manipulation |

|

| Harmonization Layer (Reusable Views & Data Standardization) | Use layer to create reusable views on top of the inbound layer views. Standardize the inbound data with common semantics. Projection views with distinct column renaming (BUKRS, Comp_code, 0COMP_CODE), and constant filters. Additionally, you can create a Data Access Control (DAC) to limit the specific dataset or use it in the next layer.

|

|

| Propagation Layer (Joins, Combines and Aggregates) | Utilize objects from the Inbound and Harmonization layers for standardized and consistent business data. Create views using joins, unions, calculated columns, aggregations, and dynamic filters as needed. Model dimensions, text, and hierarchical views. For optimized performance, persist complex views or materialize them into separate data marts for specific analytics. Access them through Analytic models or expose them via public OData API for third-party BI clients, tools, and apps, or ODBC if the Expose for Consumption switch is enabled |

|

| Reporting Layer ( Business Consumption Views) | Leverage Fact views from the Propagation layer to create unified consumption entities like Analytic Models(THE go-to analytic consumption entity), the main choice for analytics. Use them in SAC or Excel Add-in. For other tools, make the OData service available for Analytic Models. Include features like RKF, CKF, and exception aggregation. Support source/filter variables, time-dependent dimensions, texts, currency conversion, and analytical preview. The HANA engine optimizes dimension association through pruning, saving time during the JOIN execution. Apply DAC to add extra reporting restrictions based on user access. |

|

Corporate Memory Layer(Optional, Preserving Historical Data for Future Use):

The Corporate Memory Layer(CML) can be designed for long-term, persistent storage of the complete historical record of loaded transactional data. This layer offers several benefits:

- Controlled Independent Updates: CML filled independently of updates to architected data marts, ensuring a complete historical record is preserved.

- Reconstruction Source: CML historical data can be used to reconstruct specific data points or entire data sets without reaccessing the source systems. This is particularly valuable when:

- Source system data is deleted or archived or the system is to be retired.

- Data is needed for AI/ML use cases that often require historical trends.

- Historical business reporting.

- Cost-Effective Storage: Often utilizes more cost-effective storage solutions like Embedded SAP HANA Cloud Data Lake to manage large volumes of historical data efficiently.

Outbound Layer(Optional, A Flexible Data Delivery Hub):

SAP Datasphere offers an optional outbound data layer that acts as a versatile data delivery hub. This layer provides temporary storage for data intended for external consumption. By leveraging the outbound data layer strategically, you can enhance data accessibility, streamline data exchange with external systems, and efficiently manage the data lifecycle within your SAP Datasphere environment. Key characteristics include:

- Flexible Access (OpenSQL Schema ODBC/JDBC, OData, etc.): Data can be directly accessed by both internal and third-party tools, promoting broader data utilization.

- SAP FedML (Data Federation): A scenario for training business data using hyperscalart ML capabilities.

- External Delivery (Replication Flow): Data can be pushed to various external destinations, facilitating seamless data exchange with other systems.

- Provisioning and Management: This layer acts as the data staging area when provisioning data from Datasphere to requesting target systems.

- Data Lifecycle Management: Data can be efficiently deleted from this layer once it's no longer needed to supply the target system, optimizing storage usage.

- Reconciliation Support: Timestamping records upon replication to external systems simplifies data reconciliation efforts, ensuring data consistency across systems.

Summary:

Following established best practices acts as a roadmap for efficient data modeling in SAP Datasphere. These practices, like those in SAP BW and HANA, maximize resource usage and performance. While this guide emphasizes tried-and-tested methods, SAP Datasphere's flexibility encourages exploring new functionalities for various modeling approaches.

References:

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

95 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

67 -

Expert

1 -

Expert Insights

177 -

Expert Insights

308 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,576 -

Product Updates

353 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

440 -

Workload Fluctuations

1

- The 2024 Developer Insights Survey: The Report in Technology Blogs by SAP

- SAP Datasphere + SAP S/4HANA: Your Guide to Seamless Data Integration in Technology Blogs by SAP

- What’s New in SAP Datasphere Version 2024.9 — Apr 23, 2024 in Technology Blogs by Members

- Hybrid Architectures: A Modern Approach for SAP Data Integration in Technology Blogs by SAP

- What’s New in SAP Analytics Cloud Release 2024.09 in Technology Blogs by SAP

| User | Count |

|---|---|

| 22 | |

| 13 | |

| 13 | |

| 11 | |

| 10 | |

| 10 | |

| 9 | |

| 9 | |

| 9 | |

| 8 |