Technology Blogs by SAP

10-05-2021 11:54 PM

In this series of blog posts, I will cover some of the options available in SAP Landscape Management (LaMa) for performing a database refresh when HANA is the database. Part 1 focuses on Azure, performing a database refresh across the US West and US East regions. In addition, the source and target are in different subscriptions and use HANA MDC (multitenant database containers).


LaMa refresh options fall into three categories:

- Leveraging storage integration (e.g. with the cloud adapters for AWS, Azure and GCP)

- Using HANA replication

- Restore based refresh

Option 1 is not applicable because none of the above cloud adapters supports cross-region provisioning; all three support provisioning only within the same region. The Azure connector does support cross-subscription provisioning, but since we need to demonstrate both cases (cross-region and cross-subscription), option 1 is not covered.

Options 2 and 3 are both applicable, and example scenarios for each are covered here in part 1 of the blog series. Subsequent posts will cover other IaaS providers, refresh across IaaS providers, and other related scenarios.


Update: The second and third parts of the series have been published:

Part 2: Refresh Across Sites (HANA Single Tenant Database Replication)

Part 3: Refresh Using Backint Agents for AWS and GCP

Lab Environment

As shown in the diagram above, subscription A contains a distributed ABAP system on HANA in the Azure US West region. Subscription B contains a similar system in the US East region. Each region has its own Azure Virtual Network (VNet) with one subnet: the address space is 10.1.0.0/24 for US West and 10.2.0.0/24 for US East.

LaMa runs in subscription A in US West. At this point there is no network connectivity between the two environments.

All of the IP addresses used for intersystem communication are in the private address space.
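Peered VNets must not have overlapping address spaces (an Azure VNet peering requirement), so it is worth checking the two ranges before setting up the peering. A minimal Python sketch using only the standard `ipaddress` module; the `VNETS` dict and the `check_peering_prereqs` helper are illustrative names, not part of LaMa or Azure.

```python
import ipaddress

# Address spaces from this lab: US West (subscription A) and US East (subscription B).
VNETS = {
    "uswest": ipaddress.ip_network("10.1.0.0/24"),
    "useast": ipaddress.ip_network("10.2.0.0/24"),
}

def check_peering_prereqs(vnets):
    """Return a list of problems: non-private ranges or overlapping address spaces."""
    problems = []
    for name, net in vnets.items():
        if not net.is_private:
            problems.append(f"{name}: {net} is not a private address range")
    names = sorted(vnets)
    # Compare every pair of VNets once.
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if vnets[a].overlaps(vnets[b]):
                problems.append(f"{a} ({vnets[a]}) overlaps {b} ({vnets[b]})")
    return problems

print(check_peering_prereqs(VNETS))  # → []
```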

The HANA database in US West has two tenant databases, AH1 and DB1. AH1 is used by system DEQ. On the US East side, HANA has a single tenant database (AH1).

Note: NEQ was previously in the US West region. I moved it to the US East region as well as to a different subscription.

- HANA version 2.00.052

- NetWeaver ABAP Release 7.52

- SUSE Linux Enterprise 12

- LaMa 3.0 Enterprise edition SP20

- SAP Host Agent Patch 50

- SAP Adaptive Extensions 1.0 Patch 60

| Type | SID/HANA SID | VM Hostname | Virtual Hostname |
|---|---|---|---|
| ASCS (uswest) | DEQ | deq-xscs-0 | deq-ascs |
| HANA Tenant DB (uswest) | AH1 | deq-db-0 | ah1-db |
| PAS (uswest) | DEQ | deq-app-0 | deq-app-1 |
| LaMa (uswest) | J2E | lama-azure | n/a |
| ASCS (useast) | NEQ | neq-app-0 | neq-ascs |
| HANA Tenant DB (useast) | AH1 | neq-db-0 | neq-db-c |
| PAS (useast) | NEQ | neq-xscs-0 | neq-app-c |

Note that LaMa has a 13-character limit for hostnames.
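The limit is easy to check up front. A minimal sketch, assuming the limit applies to the short hostname (the part before the first dot), which is consistent with the fully qualified target name db1-db-c.dummy.nodomain used later for the tenant copy; `too_long` and `HOSTNAMES` are hypothetical names.

```python
# VM and virtual hostnames from the lab table above.
HOSTNAMES = [
    "deq-xscs-0", "deq-ascs", "deq-db-0", "ah1-db", "deq-app-0", "deq-app-1",
    "lama-azure", "neq-app-0", "neq-ascs", "neq-db-0", "neq-db-c",
    "neq-xscs-0", "neq-app-c",
]

MAX_HOSTNAME_LEN = 13  # limit stated for LaMa

def too_long(hostnames, limit=MAX_HOSTNAME_LEN):
    """Return hostnames whose short name (before the first dot) exceeds the limit."""
    return [h for h in hostnames if len(h.split(".", 1)[0]) > limit]

print(too_long(HOSTNAMES))  # → []
```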

Connectivity

We will use Azure VNet peering to establish connectivity between the two environments. Since the VNets are in different subscriptions, the person performing this task must either have permissions in both subscriptions or work with the owner of the other subscription to set up the peering.

Note that this traffic does not go over the public internet; it uses Azure's own private backbone network.

The high-level steps are:

- As userA, grant the Network Contributor role to userB (scoped to VNet A only)

- As userB, grant the Network Contributor role to userA (scoped to VNet B only)

- As either user, add peering between the two VNets

Since this blog is not about VNet peering itself, please refer to this Microsoft document for details on how to set it up.

Similar peering is available in AWS and GCP, where it is called VPC (Virtual Private Cloud) peering instead of VNet peering.

Test Connectivity

Now that peering is established, perform a simple test from the LaMa OS prompt against the SSH port of each of the three systems in VNet B.

LaMa# telnet <ip address of NEQ system> 22

Assuming SSH connections are not blocked, you should see a successful connection message.
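On hosts without telnet installed, the same reachability check can be done with Python's standard `socket` module. A minimal sketch; `port_open` is a hypothetical helper, and since the post does not list the NEQ IP addresses, you would substitute your own addresses from 10.2.0.0/24.

```python
import socket

def port_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, loop over the three NEQ addresses and print `port_open(ip)` for each; every result should be `True` once peering works and SSH is not blocked.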

LaMa Configuration

Note that the configuration of LaMa outlined in this section only covers what is needed for the scenarios in this blog.

Discover/Configure Systems in LaMa

Engine Setting

- Go to Setup -> Settings -> Engine

- Enable “Automatic Mountpoint Creation”

- Disable "Backup Application Servers During Database Refresh", since storage integration cannot be used across regions.

Pool Configuration

- Configure Pool: Configuration -> Pools

Network Configuration

- Add Network: Infrastructure -> Network Components -> Network -> Add Network

- Mandatory fields should be enough for this exercise. Example:

- Name: Azure-net (can be any name)

- Subnet Mask: 255.255.255.0

- Broadcast Address: 10.1.0.255


- Add network assignment: Infrastructure -> Network Components -> Network -> Assignment

- Repeat for US East (Azure-East)

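The Subnet Mask and Broadcast Address fields follow directly from each VNet's address space. A minimal sketch using Python's `ipaddress` module; `lama_network_fields` is a hypothetical helper, not a LaMa API.

```python
import ipaddress

def lama_network_fields(cidr):
    """Derive the Subnet Mask and Broadcast Address values for a LaMa network entry."""
    net = ipaddress.ip_network(cidr)
    return str(net.netmask), str(net.broadcast_address)

print(lama_network_fields("10.1.0.0/24"))  # → ('255.255.255.0', '10.1.0.255')
print(lama_network_fields("10.2.0.0/24"))  # → ('255.255.255.0', '10.2.0.255')
```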

Repository Creation

- Create Repository for Software Provisioning Manager

- First, ensure that your NFS server has the directory structure below. The important parts are the two sub-directories InstMaster and Archives; the parent directory can have any name. In my case it is /Repository.

- /Repository/InstMaster

- /Repository/Archives

- Although a dedicated NFS server is recommended for repositories, in this lab environment I used my ASCS host (deq-xscs-0) as the NFS server.

- My /etc/exports file has the following entry for /Repository:


/Repository *(rw,no_root_squash,sync,no_subtree_check)

- The NFS server is enabled on deq-xscs-0, and the command below exports the entries in /etc/exports:

# exportfs -a

- Mount deq-xscs-0:/Repository on /repo of the ASCS server (a4h-vm1)

- Populate InstMaster with latest SWPM (e.g. SAPCAR -xvf SWPM10SP29_2-20009701.SAR -R /repo/InstMaster)

- Populate Archives with the SAP Kernel and NetWeaver Installation Export for your ABAP application server

- It is a good idea to also add the SAPHOSTAGENT and SAP Adaptive Extensions archives

- Infrastructure -> Repositories -> Add Repository -> Software Repositories -> Add Repository

- Enter the host name of the ASCS server that has the NFS mount point. This gives LaMa the NFS server details it needs to mount the repository on the target servers.

- Select the mount point from the list.

- In the end you will have something like this:

- The repository is now created. Next, create its configuration: highlight the repository, click "Add Configuration", and fill in the information as below.
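Before adding the repository in LaMa, you can confirm that the InstMaster and Archives sub-directories exist under the repository root. A minimal sketch; `missing_repo_dirs` is a hypothetical helper, and the path you pass depends on where you run it (/Repository on the NFS server or /repo on the mounting host in this lab).

```python
from pathlib import Path

# Sub-directories named above as the important ones; the root name itself is free.
REQUIRED_SUBDIRS = ("InstMaster", "Archives")

def missing_repo_dirs(root):
    """Return the required sub-directories that do not exist under root."""
    base = Path(root)
    return [name for name in REQUIRED_SUBDIRS if not (base / name).is_dir()]

# An empty list means the layout is in place.
print(missing_repo_dirs("/Repository"))
```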

Discovery

- Discover hosts and assign to pool

- Detect using host agent and account sapadm

- Mark the US West hosts as AC-Enabled

- Mark the US East hosts as both AC-Enabled and Isolation Ready

- Isolation Ready means the host can be fenced (firewalled off)


- In LaMa Operations -> Hosts you should see something like below

- Discover Instances (for source system - US West)

- Detect using <sid>adm for ASCS and Application Server

- Detect using ah1adm for HANA and SYSTEM for database

- For HANA, select the option below if you have only a single tenant (needed for operations intended for a tenant database)


- Repeat discovery in US East

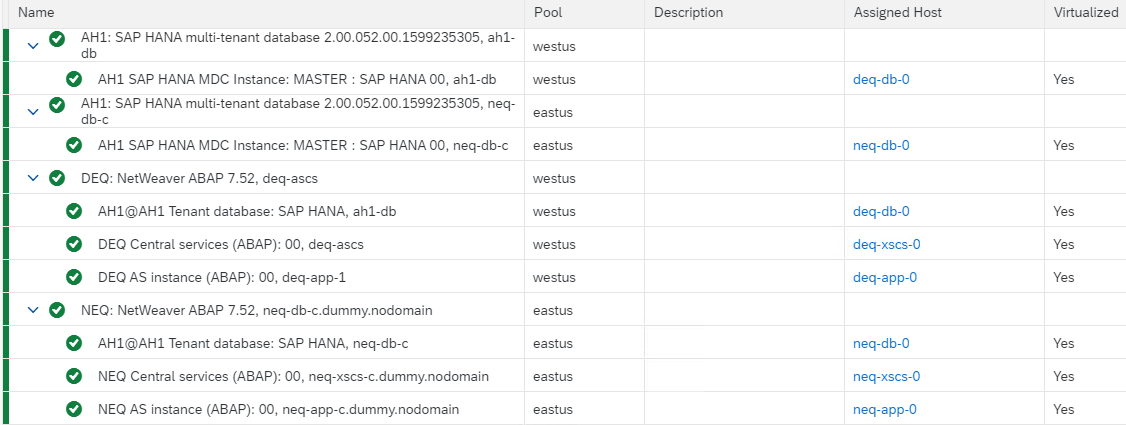

- Your operations view in LaMa should now show the topology below. Note that there are two entries for the AH1 master, one for US West and one for US East, with different assigned hosts and pools.

- HANA MDC systems need this topology to be managed by LaMa: the HANA master is a separate system, while each tenant DB belongs to the managed system that uses it.

- A basic test is to stop and start the HANA tenant database. If the topology is wrong, this will likely fail.


- Go to Configuration, edit the ASCS instance, and under instance properties ensure AC-Enabled is selected and that the virtual hostname is used for communication

- Next, go to Mount Point, uncheck "Automounter", and click "Retrieve Mount List". Keep only the following:

- Repeat for the PAS instance. This time you will get an NFS list (Storage Type = NETFS).

- Repeat for the HANA tenant database instance. There are no mount points to configure here.

- Repeat the configuration above for the master database instance (AH1)

Configure Source System Provisioning & RFC

- Set up the source system for copy, clone, rename and application server installation (plus anything else you want to use it for, such as HANA replication)

- Once you have configured the RFC destination, you should retrieve the version of the system in step 1.

Configure Target System Provisioning & RFC

- Repeat the above for the target system, marking it as a copy of the source. This is required to enable the refresh option.

- Make sure ahead of time that the release versions match

- The support package level can differ within the same SAP_BASIS release

- This applies if the following prerequisites are met:

- Source and target are on the same SAP_BASIS release, e.g. 754

- PCA task content is on the same build level
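The prerequisites above can be expressed as a simple comparison. A minimal sketch under stated assumptions: the component data is entered by hand (for example, read from each system's component information), the dictionary keys are hypothetical, and the build values are placeholders rather than real PCA build levels.

```python
def pca_refresh_prereq_problems(source, target):
    """Compare the listed prerequisites; an empty list means they are met.

    The support package level may differ, so it is deliberately not compared.
    """
    problems = []
    if source["sap_basis_release"] != target["sap_basis_release"]:
        problems.append("SAP_BASIS releases differ")
    if source["pca_build"] != target["pca_build"]:
        problems.append("PCA task content build levels differ")
    return problems

# Hypothetical component data; read the real values from each system.
DEQ = {"sap_basis_release": "752", "sp_level": "0004", "pca_build": "build-A"}
NEQ = {"sap_basis_release": "752", "sp_level": "0006", "pca_build": "build-A"}

print(pca_refresh_prereq_problems(DEQ, NEQ))  # → []
```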

PCA Activation

- Install PCA License on both systems

- Operations -> Systems Tab -> System -> Install ABAP PCA License


Configure HANA backup share

- Create the mount point on the HANA server (source)

# mkdir -p /mnt/hanabackups

# chown <sid>adm:sapsys /mnt/hanabackups

- Edit /etc/exports so that the current HANA backup directory is exported for NFS mounts. Your directory may differ from mine.

/hana/backup/data/DB_AH1 *(rw,no_root_squash,sync,no_subtree_check)

- Ensure the NFS server is enabled on the HANA server. Then issue the command below to export the directory above.

# exportfs -a

- Edit /etc/fstab so the mount happens automatically:

deq-db-0:/hana/backup/data/DB_AH1 /mnt/hanabackups nfs defaults 0 0

- Run the mount command:

# mount deq-db-0:/hana/backup/data/DB_AH1 /mnt/hanabackups

- Add the following profile parameter to the SAP Host Agent profile, located at /usr/sap/hostctrl/exe/host_profile. For more information, see SAP Note 2628497.

acosprep/nfs_paths=/hana/backup/data/DB_AH1

- Restart the host agent:

# /usr/sap/hostctrl/exe/saphostexec -restart

- Configure the "Transfer Mount Configuration for System Provisioning" in LaMa

- Configuration -> Systems -> DEQ -> Edit -> Provisioning & RFC

- Enter source host (deq-db-0) and click 'Retrieve Mount List'

- Remove all except /mnt/hanabackups

- Set Usage Type to HDB Backup Share

- Click save
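The export entry, the fstab entry and the Host Agent parameter above must all refer to the same backup directory, so it can help to generate them from one place. A minimal sketch; `backup_share_config` is a hypothetical helper, and the export and mount options are copied from the steps above rather than being a recommendation.

```python
def backup_share_config(db_host, export_path, mount_point):
    """Build the three configuration lines used for the HANA backup share."""
    exports = f"{export_path} *(rw,no_root_squash,sync,no_subtree_check)"
    fstab = f"{db_host}:{export_path} {mount_point} nfs defaults 0 0"
    host_profile = f"acosprep/nfs_paths={export_path}"
    return {"/etc/exports": exports, "/etc/fstab": fstab, "host_profile": host_profile}

cfg = backup_share_config("deq-db-0", "/hana/backup/data/DB_AH1", "/mnt/hanabackups")
for target_file, line in cfg.items():
    print(f"{target_file}: {line}")
```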

HANA Configuration

To use HANA replication, you have to configure HANA to meet its security requirements. The recommended way is to secure communication between source and target with SSL. In a lab environment you may decide to disable SSL, although this is not recommended.

Enable Secure Communication

The SSL configuration required is documented in the "Preparing to Copy or Move a Tenant Database" section of the HANA administration guide.

Steps:

- Verify TLS/SSL Configuration of Internal Communication Channels

In both the source system and the target system, verify that TLS/SSL is enabled on internal communication channels on the basis of the system public key infrastructure (system PKI).

- Set Up Trust Relationship Between Target and Source Systems

Create a certificate collection in the system database of the target system and add either the public-key certificate of the system database of the source system, or the root certificate of the source system. This certificate is used to secure communication between the systems via external SQL connections.

- Open Communication From Target to Source System

Open communication from the target system to the source system by enabling services in the source system to listen on all network interfaces.

- Create Credential for Authenticated Access to Source System

Create a credential to enable authenticated access to the source system for the purpose of copying or moving a tenant database.

- Back Up Tenant Database

Back up the tenant database of the source system.

Optional: Disable Secure Network Communication

This is not recommended and should only be used for lab testing without sensitive data. Although the cross-region peering traffic does not go over the public internet, both SAP and Azure still recommend secure communication.

Steps:

On both source and target SYSTEMDB issue the following SQL commands:

alter system alter configuration ('global.ini','SYSTEM') set ('communication','ssl') ='off' with reconfigure;

alter system alter configuration ('global.ini','SYSTEM') set ('multidb','database_isolation') ='low' with reconfigure;

alter system alter configuration ('global.ini','SYSTEM') set ('multidb','enforce_ssl_database_replication') ='off' with reconfigure;

alter system alter configuration ('global.ini','SYSTEM') unset ('persistence','log_mode') with reconfigure;

alter system alter configuration ('global.ini','SYSTEM') set ('communication','listeninterface') = '.global' with reconfigure;

Restart both source and target HANA systems.
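The same five settings have to be applied to the SYSTEMDB of both source and target, so generating them from a single list keeps the two systems identical. A minimal sketch; `alter_config` and `LAB_ONLY_SETTINGS` are hypothetical names, the output is meant to be pasted into hdbsql, and, as stated above, these settings are for lab use only.

```python
def alter_config(section, key, value=None, file="global.ini", layer="SYSTEM"):
    """Render an ALTER SYSTEM ALTER CONFIGURATION statement; value=None renders an UNSET."""
    head = f"alter system alter configuration ('{file}','{layer}')"
    if value is None:
        return f"{head} unset ('{section}','{key}') with reconfigure;"
    return f"{head} set ('{section}','{key}') ='{value}' with reconfigure;"

# (section, key, value) triples matching the statements above; None means unset.
LAB_ONLY_SETTINGS = [
    ("communication", "ssl", "off"),
    ("multidb", "database_isolation", "low"),
    ("multidb", "enforce_ssl_database_replication", "off"),
    ("persistence", "log_mode", None),
    ("communication", "listeninterface", ".global"),
]

for section, key, value in LAB_ONLY_SETTINGS:
    print(alter_config(section, key, value))
```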

Tenant Copy

Before the database refresh, we can optionally perform a tenant copy to make sure the environment is set up correctly for replication. I could copy the tenant DB of DEQ (AH1) under a different name, e.g. AH2.

Alternatively, I could copy a tenant DB that is used by another existing system, SEQ. The master HANA has tenant DB AH1 for system DEQ and tenant DB DB1 for system SEQ. SEQ is configured in LaMa the same way as DEQ (e.g. provided by 'Installation') and can be used for copying.

LaMa topology with SEQ:

Execute Copy Tenant DB

On the source HANA (US West) we have an additional tenant database, DB1, which we will copy to the target HANA (US East).

- Provisioning -> System SEQ -> Copy Tenant DB

- Change target pool to 'Azure Container > eastus'

- Select target MDC System (AH1: neq-db-c) and Tenant DB name (DB1). The tenant DB name does not need to match the source.

- Provide username and password (the defaults can be kept)

- Specify db1-db-c.dummy.nodomain as the target host name

- You may get a warning about a virtual hostname assigned to a non-adaptive instance. You can ignore it.

- Click Execute

- In LaMa, tenant DB DB1 now also appears in the eastus pool

- SQL validation

hdbsql=> \c -n db1-db-c:30013 -u SYSTEM

Password:

Connected to AH1@db1-db-c:30013

hdbsql SYSTEMDB=> select * from M_DATABASES

DATABASE_NAME,DESCRIPTION,ACTIVE_STATUS,ACTIVE_STATUS_DETAILS,OS_USER,OS_GROUP,RESTART_MODE,FALLBACK_SNAPSHOT_CREATE_TIME

"SYSTEMDB","SystemDB-AH1-00","YES","","","","DEFAULT",?

"AH1","","YES","","","","DEFAULT",?

"DB1","","YES","","","","DEFAULT",?

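The validation above can also be scripted. A minimal sketch, assuming the hdbsql output has been captured as text in the CSV-like format shown (header row, quoted values, `?` for NULL); `active_databases` and `systemdb_sql_port` are hypothetical helpers. The port helper reflects the standard MDC convention that the SYSTEMDB listens for SQL on 3&lt;instance&gt;13, which matches the 30013 used here for instance 00.

```python
import csv
import io

def systemdb_sql_port(instance_number):
    """SYSTEMDB SQL port in a HANA MDC system: 3<NN>13 for instance number NN."""
    return int(f"3{instance_number:02d}13")

def active_databases(hdbsql_output):
    """Return DATABASE_NAME for every M_DATABASES row whose ACTIVE_STATUS is YES."""
    reader = csv.DictReader(io.StringIO(hdbsql_output.strip()))
    return [row["DATABASE_NAME"] for row in reader if row["ACTIVE_STATUS"] == "YES"]

# Output captured from the validation query above (blank lines removed).
OUTPUT = """\
DATABASE_NAME,DESCRIPTION,ACTIVE_STATUS,ACTIVE_STATUS_DETAILS,OS_USER,OS_GROUP,RESTART_MODE,FALLBACK_SNAPSHOT_CREATE_TIME
"SYSTEMDB","SystemDB-AH1-00","YES","","","","DEFAULT",?
"AH1","","YES","","","","DEFAULT",?
"DB1","","YES","","","","DEFAULT",?
"""

print(systemdb_sql_port(0))      # → 30013
print(active_databases(OUTPUT))  # → ['SYSTEMDB', 'AH1', 'DB1']
```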
Destroy Tenant DB

After the successful tenant copy test, you can destroy the tenant DB.

- First the tenant DB must be stopped

- Operations View -> DB1@AH1 (eastus pool) -> Operations Menu -> Stop

- Provisioning -> AH1 (eastus pool) -> Tenant Processes -> Destroy Tenant DB

- Source Tenant DB = DB1

- Enter Credentials

- Execute

Refresh Database with HANA Replication

In this exercise we will refresh NEQ from DEQ. Since the mapping in LaMa is already done with DEQ as source for NEQ, we do not need to specify the source when refreshing NEQ.

As in the tenant copy, the database refresh will use HANA replication.

Note that this option only appears in the provisioning view if you have marked NEQ as a copy of DEQ in LaMa. This does not mean NEQ was actually copied, but the mapping is required. Refer to the section "Configure Target System Provisioning & RFC".

- Provisioning View -> NEQ -> Refresh Processes -> Refresh Database

- Enter Master Password for OS and DB Users

- If passwords differ then they can be changed in one of the subsequent steps

- In Tenant Details, accept defaults for DB credentials or change if needed

- There is nothing to select for the Hosts as we unchecked "Backup Application Servers During Database Refresh" in the LaMa engine settings.

- In the Host Names screen, verify that the entries displayed are correct and if not change them

- In the Users screen, accept the defaults, as the users already exist.

- In the Rename screen, either accept the defaults or, if passwords differ from the master password, change them accordingly

- In the Isolation screen, select the default network access control list. These default rules are defined during the initial configuration of LaMa; if you need to correct them, you can do so here.

- Select "Unfence target system with confirmation". You may also select one of the other two options, depending on your scenario. If you choose to unfence without a prompt, you take full responsibility for unfencing the target.

- For PCA, you can add any task list variant you have created as well as any other task list you would like to execute, for example the BDLS task list with its improvements. In this example we kept the default.

- The activity should now run, and the progress bar will eventually reach close to 100% before waiting for confirmation

- Make sure to respond to the prompt for unfencing the target in the Monitoring view. From the actions menu select 'Continue'

Restore-Based Refresh

In this exercise we will do a restore-based refresh using files. This assumes the backup files are stored in a filesystem (local or on another NFS server) and are made available to the target system via an NFS mount.

The other option is the Azure Backup service, which is SAP-certified for Backint. However, this option is not feasible when performing the refresh across subscriptions, because Azure currently has no mechanism to allow access to backup vaults from another subscription.

Azure Backup can restore the HANA backup files to a filesystem, and technically LaMa could then use them to perform the refresh. However, this would be a custom process and is outside the scope of this blog (perhaps a topic for another post). We will therefore not use Backint.

With NFSv3, using NFS over long distances was always a bad idea because the latency resulted in very poor network performance. NFSv4 handles this much better and can achieve decent performance. It is still not the best way to move files from one region to another, but it does the job. NFSv4 can be tuned further for high-latency networks, but we will stick with the defaults.
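Why distance hurts file transfers can be illustrated with the bandwidth-delay product (bandwidth × round-trip time), the amount of data that must be in flight to keep a link busy; if less than that is outstanding, throughput is capped at roughly window / RTT. A minimal worked sketch with hypothetical figures (not measured between US West and US East in this lab), and not a tuning recommendation for NFSv4.

```python
def bdp_bytes(bandwidth_bits_per_s, rtt_ms):
    """Bandwidth-delay product: bytes in flight needed to keep the link fully used."""
    return bandwidth_bits_per_s * rtt_ms // 1000 // 8

def max_throughput_bytes_per_s(window_bytes, rtt_ms):
    """Upper bound on throughput when at most window_bytes are unacknowledged per round trip."""
    return window_bytes * 1000 // rtt_ms

# Hypothetical figures, not measured in this lab: 1 Gbit/s link, 70 ms round-trip time.
print(bdp_bytes(1_000_000_000, 70))            # → 8750000 (about 8.75 MB)
print(max_throughput_bytes_per_s(65_536, 70))  # → 936228 (under 1 MB/s)
```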

- Provisioning View -> NEQ -> Provisioning -> Refresh Processes -> Restore-based Refresh

- Basic screen: Enter master password

- Hosts screen: nothing to enter

- Host Names screen: Verify host names and IP addresses

- User screen: accept the defaults, as the accounts already exist

- Database Restore screen: select the backup you want to use

- The backup is visible to the target host (neq-db-c) because the HANA backup share was mounted via NFS

- You will get a warning which you can ignore (but do take note of it)

- Tenant Details screen: enter SYSTEMDB credentials

- Rename screen: either accept defaults or if passwords differ from the Master then change them accordingly

- Ignore the warning

- Isolation screen: Check radio button for "Unfence target system with confirmation". See section on replication based refresh for details about the other options.

- ABAP PCA screen: Accept default. See section on replication based refresh for details about the other options.

- Summary screen: Click on Execute

- There are 28 steps, and when the refresh succeeds you should see a 100% completion status

