- Whatever happens in an Azure and SAP private linky...


This post is part of a series sharing service implementation experience and possible applications of SAP Private Link Service on Azure. Find the table of contents and my curated news regarding series updates here. Looking for part 2? Find the associated GitHub repos here.

🛈 Note:

- Nov 2022: SAP released Azure Application Gateway for SAP Private Link. It simplifies your architecture further and increases security with a managed web application firewall compared to the standard load balancer setup described in this post.

- Oct 2022: SAP added guidance for "bring your own domain and certificate" for the SAP Private Link. In addition to that you can obtain the certificate from well-known certificate authorities automatically via Azure Key Vault like so.

- 22nd of June 2022: SAP announced General Availability of the service. References to the Beta state no longer apply!

- 24th of Nov 2021: SAP introduced the hostname feature for PLS. Going forward, host names are used instead of private IPs. Not all screenshots below have been updated!

Dear community,

With the release of the Beta of SAP Private Link Service (PLS) exciting times dawned upon us. We finally get a managed solution to securely connect from our Apps running on BTP (deployed on Azure) to any IaaS workload running on Azure without even traversing the internet. The first options that come to mind would be SAP WebDispatcher, ECC, S4, HANA, SAP CAR, anyDB, Jenkins, Apache or HPC cluster to name a few.

Before Private Link Service, you would typically have deployed an SAP Cloud Connector (reverse connect tunnel over the public internet) or sophisticated internet-facing setups involving gateway components with web application firewalls to allow inbound traffic. Often this required two separate VMs (primary + shadow instance) or at least additional processing power on the primary application server, web dispatcher or the like.

In addition, you needed to open outbound ports for the Cloud Connector to reach the public BTP endpoints and initiate the reverse connect. These outbound ports to the public internet are often a no-go for customers and have only been tolerated for lack of a suitable alternative.

If you feel brave enough for the Beta and your focus is layer 4 connectivity, those days are gone 😊 See part 8 of the series for a deep dive on Cloud Connector vs. PLS.

I am referring to layer 4 and layer 7 of the OSI model throughout the post. Layer 4 is the transport layer (for example TCP), while layer 7 covers application protocols such as HTTP and RFC.
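To make the layer distinction tangible, here is a minimal sketch of a pure layer 4 check: it only tests whether a TCP connection can be opened and knows nothing about HTTP or RFC on top. The function name `tcp_reachable` and any host or port you pass in are illustrative assumptions, not part of the setup in this post.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection (layer 4) to host:port can be opened.

    Nothing is sent over the connection, so this says nothing about
    whether the application protocol on top (layer 7) works.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False
```

A check like this can help to separate "the tunnel is not there" from "the tunnel works but the application protocol or certificate setup is wrong".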

Fig.1 pinkies “swearing”

The first scenario we are going to look at in this series is the consumption of an OData service living on my S4 system, which is locked up in an Azure private virtual network (VNet). My BTP workloads are provisioned in an Azure-based subaccount in West Europe (Amsterdam) and my S4 is based in North Europe (Dublin).

The beta release covers one-way scenarios from BTP to Azure VMs for now. Check philipp.becker's post on the next planned steps.

SAP’s docs and developer tutorial focus on the CF CLI commands. In my blog I will show the process with the BTP UI instead.

Fig.2 architecture overview

Let’s look at the moving parts

To get started we need to identify our VM, its location, and the VNet where it is contained.

Fig.3 S/4Hana VM properties

We can see the system has no public IP. Furthermore, my Network Security Group on the mentioned subnet is set to allow inbound from my VNets and my P2S VPN but not from the Internet. This reflects common setups. So, my way in to reach my S4 from BTP will be the private link service.

Next, I deployed a standard load balancer within the same resource group as my S4 and configured it to target my two SAP web dispatchers. Make sure you choose NIC instead of IP Address for your backend pool configuration. The load balancer distributes incoming connections across the dispatchers (Azure uses a hash-based distribution by default rather than strict round-robin), which improves throughput and addresses high availability to some extent.

Fig.4 Screenshot of Load balancer configuration

I pointed my health probes at the SSL port that is configured in backend transaction SMICM. SAP NetWeaver exposes two “pingable” endpoints. You can verify them in backend transaction SICF.

- /sap/bc/ping needs authentication and

- /sap/public/ping, which is open to be called by everyone in line of sight of the system

Fig.5 Screenshot of Load balancer health endpoint configuration

Finally, the load balancing rule ties everything together and establishes the route.

Fig.6 Screenshot of Load balancer rule configuration

Using this rule, I receive HTTPS traffic on the standard port 443 and pass it on to the HTTPS port my web dispatchers are listening on. Usually that is 443 followed by the two-digit SAP instance number (for example 44300 for instance 00).
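The port convention can be sketched as a tiny helper. It assumes the common default where the HTTPS port is the digits 443 followed by the two-digit instance number; your landscape may use different ports, so treat the helper name `sap_https_port` and the convention itself as assumptions to verify in SMICM.

```python
def sap_https_port(instance_number: int) -> int:
    """Derive the conventional HTTPS port 443NN for instance number NN.

    This only encodes the usual default; the real port is whatever is
    configured on your web dispatcher or application server.
    """
    if not 0 <= instance_number <= 99:
        raise ValueError("SAP instance numbers range from 00 to 99")
    return 44300 + instance_number
```

For instance number 00 the helper returns 44300, which would be the backend port of the load balancing rule in that case.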

Note: Introducing an internal standard load balancer into your subnet will compromise your outbound connectivity (e.g. to the internet) when there is no additional routing mechanism like a user-defined routing table, a NAT Gateway or a public load balancer in place. The Azure NAT Gateway gets you a fully managed solution for outbound-only internet connectivity (no inbound considerations needed). An additional public load balancer is the less costly alternative but needs to be locked down in terms of inbound connectivity. Find more details on the process in the Azure docs, and the Azure CLI command to add the required outbound rule to the load balancer below.

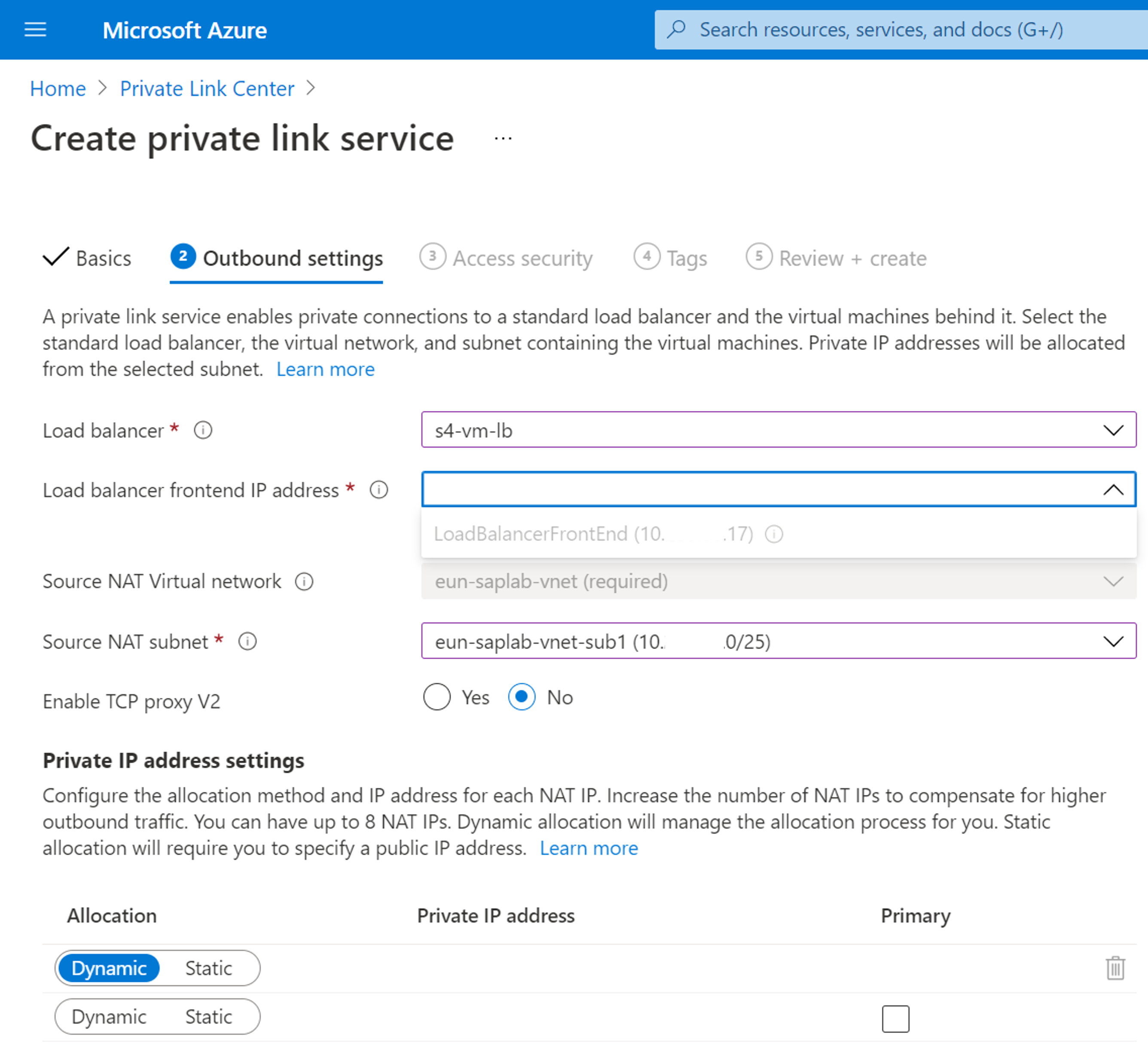

    az network lb outbound-rule create --address-pool <your public lb backend pool> --frontend-ip-configs <plb-pip-config> --idle-timeout 30 --lb-name <your public lb name> --name MyOutBoundRules --outbound-ports 10000 --enable-tcp-reset true --protocol All --resource-group <your resource group> --subscription <your azure subscription>

Now, we are all set to create the Private Link Service on Azure using the VNet info from the VM and the load balancer config.

Fig.7 Screenshot of Private Link Service Deployment settings

On the access security tab, I chose “Role-based access control only” but you can adapt to your needs.

Once the deployment finishes navigate to the Properties pane (under Settings) of the Private Link Service on Azure and retrieve the Resource ID. You will need it to complete the process on the BTP side.
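Before pasting the Resource ID into BTP, it can be worth a quick sanity check that you copied the ID of the Private Link Service and not of the load balancer or the VM. The sketch below validates the general Azure resource ID shape for a Private Link Service; the function name and the sample values in the usage note are made up for illustration.

```python
import re

# General shape of an Azure resource ID for a Private Link Service.
_PLS_ID = re.compile(
    r"^/subscriptions/(?P<subscription>[^/]+)"
    r"/resourceGroups/(?P<resource_group>[^/]+)"
    r"/providers/Microsoft\.Network/privateLinkServices/(?P<name>[^/]+)$",
    re.IGNORECASE,
)


def parse_pls_resource_id(resource_id: str) -> dict:
    """Split a Private Link Service resource ID into its parts.

    Raises ValueError if the string points to another resource type.
    """
    match = _PLS_ID.match(resource_id.strip())
    if match is None:
        raise ValueError("not a Private Link Service resource ID")
    return match.groupdict()
```

Calling it with a placeholder ID such as `/subscriptions/<id>/resourceGroups/my-rg/providers/Microsoft.Network/privateLinkServices/my-pls` returns the subscription, resource group and service name as a dictionary.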

Fig.8 Screenshot of Private Link Service Deployment settings

With that we move over to BTP. Open your subaccount, ensure that you assigned the Private Link service (Beta) on your entitlements and create the service. Use the name az-private-link in case you want to plug & play with my examples. Type a message to ensure you can identify the connection request on the Azure side. This is useful if there are multiple requests on the same service and you need to be able to act on them separately.
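If you prefer to script the service creation instead of using the UI, the two inputs from the wizard end up in a small JSON parameter document. A minimal sketch follows; `resourceId` is the name used in this post, while the key `requestMessage` for the message is my assumption, so check the SAP docs for the exact parameter names before relying on it.

```python
import json


def private_link_parameters(resource_id: str, request_message: str = "") -> str:
    """Build the JSON parameters for creating the private link service instance.

    'requestMessage' is an assumed key name for the approval message.
    """
    params = {"resourceId": resource_id}
    if request_message:
        # The message helps to tell requests apart on the Azure side.
        params["requestMessage"] = request_message
    return json.dumps(params)
```

The resulting string is the kind of document you would hand to the service creation call as its configuration parameters.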

Fig.9 Screenshot of Private Link Service deployment wizard on BTP

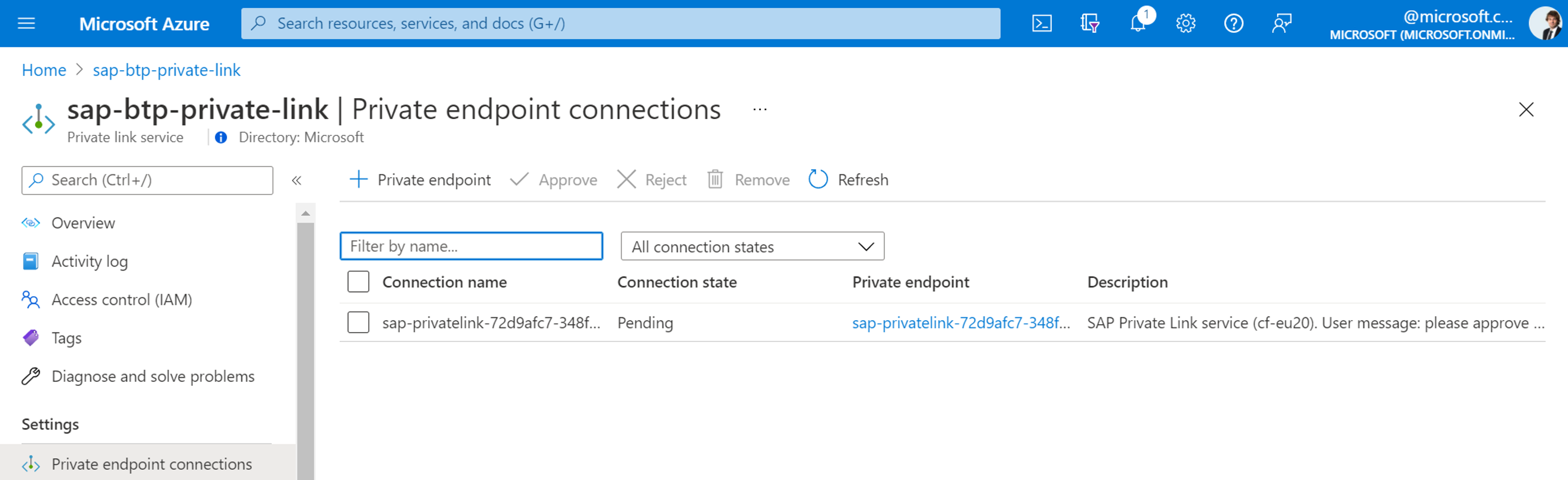

Once you hit submit, an approval request gets forwarded to the Azure Private Link Service associated with the resourceId you supplied before. Mark the request and hit approve.

Fig.10 Screenshot from approval request on Azure portal

So far so good. Finally, we need to bind this new SAP Private Link Service to any app to be able to send http calls through that tunnel and see the private IP on the BTP side. Without that first binding it won’t be generated. Re-use my naming to be able to run my Java or CAP project right away.
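Once the binding exists, a Cloud Foundry app can read the generated address from its `VCAP_SERVICES` environment variable. The sketch below walks that JSON and returns the host for the instance named az-private-link. The credential key `hostname` is an assumption on my side; inspect your own binding to confirm what it actually contains.

```python
import json


def private_link_host(vcap_services_json: str,
                      instance_name: str = "az-private-link") -> str:
    """Return the host exposed by the private link binding.

    VCAP_SERVICES maps each service label to a list of bindings; the
    'hostname' credential key used here is an assumption.
    """
    services = json.loads(vcap_services_json)
    for bindings in services.values():
        for binding in bindings:
            if binding.get("name") == instance_name:
                return binding["credentials"]["hostname"]
    raise LookupError(f"no binding named {instance_name!r} found")
```

Inside a running app you would pass `os.environ["VCAP_SERVICES"]` to the function.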

Note: On 24th of November 2021 SAP introduced generated host names (instead of plain private IPs). I kept the screenshots using IPs anyway, because part 7 of the blog series discusses this feature upgrade in detail.

Going forward, I will reference my Java app using the SAP Cloud SDK, but you could do with any other BTP supported runtime. Harut Ter-Minasyan provided another nice CAP example targeting the Business Partner OData service tested against an S4 CAL deployment (Be aware you might need to change/delete the public IP when using CAL).

But wait, plain http calls on code level? We have destinations to abstract away the configuration and authentication complexity.

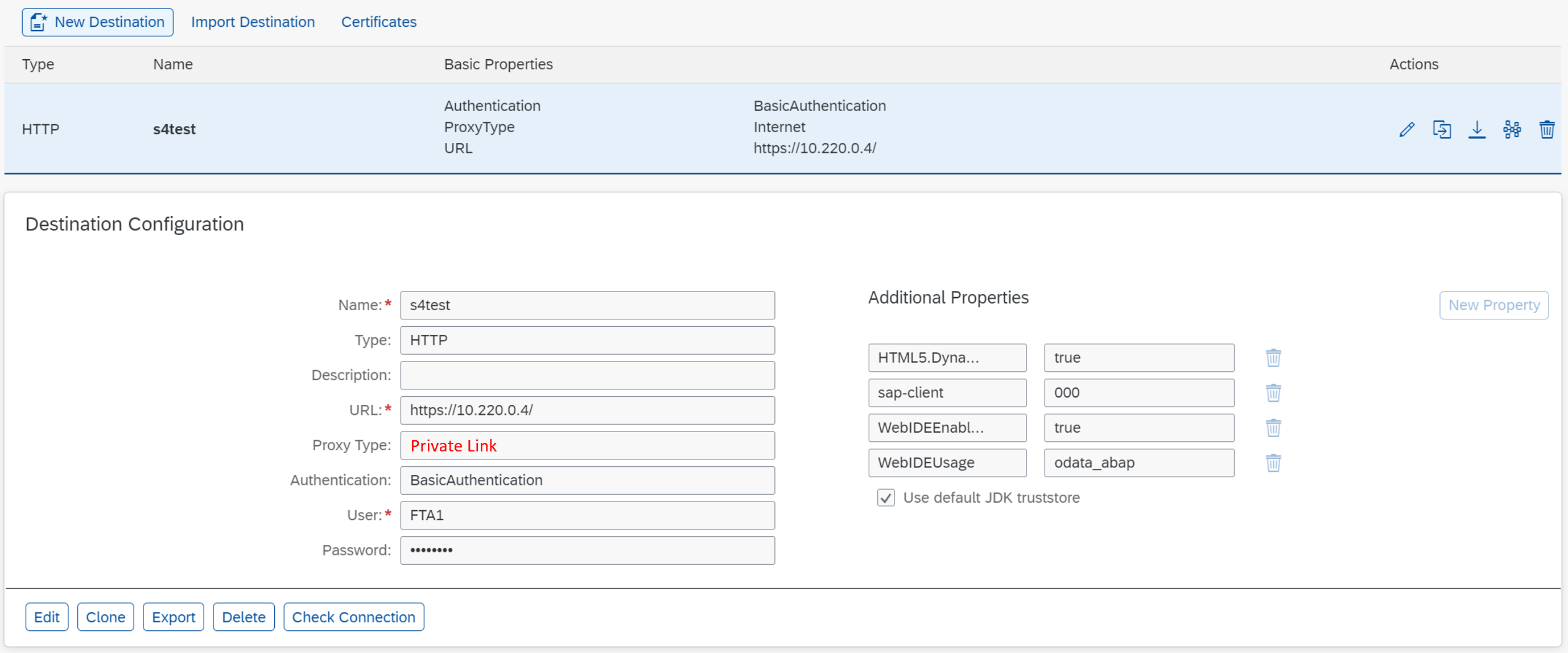

So, let’s create the destination service “az-destinations” on our dev space to cater for that. We maintain the connection to our S4 using the private IP we got from “az-private-link”. Eventually you need to bind the destination service to your app too. With my implementations that will happen automatically on deployment, because they are listed as required on the mta.yaml.

Fig.11 Destination config on CF space dev for private link service

The additional properties make it available to SAP Business Application Studio and set the sap-client. In my case that is 000.
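As a rough sketch, the destination can be thought of as a small set of key-value pairs. The destination name below is hypothetical, and authentication and proxy settings are deliberately left out because they depend on your setup; only the two additional properties for Business Application Studio and the sap-client are taken from the description above.

```python
def s4_destination(host: str, sap_client: str = "000") -> dict:
    """Assemble illustrative destination properties for the S4 backend.

    'host' is the private IP or host name obtained from az-private-link.
    Authentication and proxy settings are intentionally omitted.
    """
    return {
        "Name": "s4-private-link",  # hypothetical name for illustration
        "Type": "HTTP",
        "URL": f"https://{host}",
        # Additional properties
        "WebIDEEnabled": "true",    # makes it usable from Business Application Studio
        "sap-client": sap_client,   # backend client to log on to
    }
```

Keeping the host in one place like this makes the later switch from private IPs to generated host names a one-line change.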

Ok great, let’s test this!

Fig.12 Screenshot from Java app start page

I open the Java app and follow the link to the Servlet as highlighted above. Aaaand private linky linky link don’t break our swear! …

Let’s check on the application log what happened.

Fig.13 SSL error message from Java app via private link service

Ah ok, fair enough. The SSL handshake checks whether the response originates from the responder we expect. Since our app on BTP “sees” 10.220.0.4, there is a mismatch with the received server certificate. My S4 sits behind the Azure load balancer and a pool of web dispatchers, which send a certificate that doesn’t mention 10.220.0.4. Mhm, what now? There are various options to tackle this. Here are a few.

- Override the SSL peer verification process in your code with the private IP of the private link service. Check my BTPAzureProxyServletIgnoreSSL.java class for more details.

- Change the Destination config from https to plain http.

- Add property “TrustAll” to your Destination.

- Use a dedicated SSL config like Server Name Indication (SNI) on your web dispatcher or NetWeaver setup and import the associated certificate into the trust store of your BTP destination.

- Or the most desirable: bring your own domain and certificate. See this post to learn more how to achieve that.

The first three options relax the end-to-end trust verification. For maximum security in such a shared environment as BTP you would want to tackle this properly. The fourth option requires you to generate an additional Personal Security Environment (PSE) and configure the Internet Communication Manager (ICM) parameters, so that requests coming from BTP will be answered with the expected certificate. That allows you to keep your existing trust setup on the SAP backend untouched. Have a look at part 7 for more details.
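The mismatch behind the SSL error can be illustrated with a simplified version of the name check a TLS client performs: the address the client calls must appear among the names the certificate lists. This is a sketch of the idea only, not the logic of any particular TLS library, and the host names in the usage note are invented.

```python
import ipaddress


def certificate_covers(target: str, san_dns: list[str], san_ips: list[str]) -> bool:
    """Simplified check whether a certificate's alternative names cover 'target'.

    IP targets must match an IP entry; host names must match a DNS entry,
    where a leading '*.' stands for exactly one left-most label.
    """
    try:
        target_ip = ipaddress.ip_address(target)
    except ValueError:
        target_ip = None
    if target_ip is not None:
        return any(ipaddress.ip_address(ip) == target_ip for ip in san_ips)

    host = target.lower().rstrip(".")
    for name in san_dns:
        name = name.lower().rstrip(".")
        if name == host:
            return True
        if name.startswith("*.") and "." in host:
            if host.split(".", 1)[1] == name[2:]:
                return True
    return False
```

With a certificate that only lists a DNS name such as `s4.example.internal`, a call to 10.220.0.4 is not covered, which is the situation described above. Calling the backend by a name the certificate does list, as with the SNI or bring-your-own-domain options, makes the check pass.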

If you don't want to dive into a full-blown SSL setup to start with, TrustAll is your friend.

For productive purposes I highly recommend a managed certificate from a well-known certificate authority, issued through Azure Key Vault, and a CNAME mapping for the SAP BTP internal SAP Private Link hostname. Read more about that here.

Consuming OData via the private linky app is a piece of cake now

From here on all implementation topics like app roles, XSUAA, logging, monitoring, staging, scaling etc. stay the same as if you were using any CloudFoundry implementation. For simplicity I exposed the private linky app via another destination and created a Fiori app based on that.

Fig.14 Destination config for consuming app

Fig.15 Fiori app consuming OData via private linky app

The complete feature set of that OData service is available. We only created connectivity using this new beta service after all 😉

Restricting access to exposed SAP backend services further

If you need to lock down the SAP backend services (ICF nodes) that are visible from the SAP Private Link Service, you can do so with a proxy component. SAP offers custom SAP Web Dispatcher URL filters, for example. You can specify the source IP (in our case the standard load balancer behind the Azure private link service) and your desired OData service paths, for instance. This is analogous to what the Cloud Connector provides, with the exception that the web dispatcher acts on HTTP protocol level. This approach doesn't work for RFC connections. Have a look at post 6 of the series to learn about securing RFCs in detail.

    # We allow access to the "ping" service, but only if
    # accessed from IP of PLS load balancer and only via https
    P /sap/public/ping * * * 192.168.100.35/32
    S /sap/public/ping * * * 192.168.100.35/32

One could argue that this approach would be extra cautious, because the traffic went through Azure VNets only and entered your private tunnel directly behind your app on BTP. The SAP backend authorizations for the "calling" user will take care of what to show or hide. However, exercising zero-trust efforts would demand such filtering.

These URL filters act as an extra layer of governance, as a fail-safe for overly broad authorizations and as a safety net to mitigate the shared-tenant nature of BTP. You can apply any proxy (e.g. Apache, NGINX etc.) for the filtering. Just install it on a VM behind the standard load balancer to inspect traffic and forward it to your SAP backend system as per your allowed configuration. The SAP Web Dispatcher would be the SAP-native approach to implement this.
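The filtering idea can be modelled in a few lines, whichever proxy you pick. The sketch below is a deliberately simplified model with first-match evaluation, prefix matching on the path and a default deny. It is not the matching logic of the SAP Web Dispatcher or any other product; the rule letters follow my reading of the filter file above (P permit, S permit over HTTPS only, D deny).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str  # "P" permit, "S" permit over HTTPS only, "D" deny
    prefix: str  # URL path prefix the rule applies to
    source: str  # allowed client network in CIDR notation


def is_allowed(rules: list[Rule], path: str, client_ip: str, https: bool) -> bool:
    """Evaluate rules top-down; the first matching rule decides."""
    client = ipaddress.ip_address(client_ip)
    for rule in rules:
        if not path.startswith(rule.prefix):
            continue
        if client not in ipaddress.ip_network(rule.source):
            continue
        if rule.action == "D":
            return False
        if rule.action == "S":
            return https
        return True
    # Nothing matched: deny by default (an assumption of this sketch).
    return False
```

With a single rule `Rule("S", "/sap/public/ping", "192.168.100.35/32")`, only HTTPS calls to the ping service coming from the load balancer address pass; everything else is rejected.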

Thoughts on production readiness

The connectivity components of the setup are managed by Microsoft or SAP for enterprise-grade apps. The development best-practices by SAP are not touched. You code your apps without any need to know of the private link service.

All your traffic stays on the Microsoft backbone, it is private and you get rid of the additional infrastructure components overhead mentioned at the beginning. No outbound ports need to be opened, which makes life easier for deployments such as HEC for instance. This kind of simplification speeds up roll-out and increases resiliency.

The Cloud Connector continues to play a role for layer 7 functionalities like audit logging and dedicated allow-listing of proprietary interfaces such as RFCs in one place. With PLS acting on layer 4, many of those concerns are shifted into the SAP WebDispatcher (e.g. Access Control Lists), the Azure Network Security Group or even the SAP ERP backend. This can pose a challenge for existing landscapes that relied on the isolation of the Cloud Connector. Read more about that in part 6 of the series.

A self-signed certificate is not optimal. You may consider bringing your own domain and certificate as described by SAP here. Furthermore, you might favor trusting the root or intermediate certificate of your Certificate Authority in BTP. That way there is no need for populating the certificate anymore.

See here, how to create a certificate with a well-known certificate authority like DigiCert or GlobalSign automatically from Azure Key Vault.

Cloud Connector and PLS can co-exist. However, you need to make sure that you close the interfaces exposed to PLS on the network that you want to restrict to the Cloud Connector only. Otherwise the Cloud Connector could simply be bypassed.

Further Reading

- Getting Started with SAP Private Link Service for Azure

- SAP docs for Private Link Service for Azure

- Developer Tutorial for Private Links Service

- SAP's official blog post for the new service

- Microsoft docs for Azure Private Link Service

- SAP Cloud SDK docs

- SAP Web Dispatcher URL filtering

Final Words

Linky swears are not to be taken lightly. I believe SAP is making good use of the Azure portfolio, creating another integration scenario that will become popular going forward. Today we saw the setup process for this new private link service that keeps your BTP traffic private and on the Microsoft backbone, drilled a little into the security configuration with destinations, and verified with a standard Fiori app that the usual development approach can be applied to apps routing through the private link service. As they say “trust is good, control is better” 😉

In part two of this series, we will look at applying this approach with SAP Integration Suite. Any other topic you would like to be discussed in that regard? Just reach out via GitHub or in the comments section below.

Find the mentioned Java, CAP and Fiori projects on my GitHub repos here. Find your way back to the table of contents of the series here.

As always feel free to ask lots of follow-up questions.

Best Regards

Martin
