
The SAP Sustainability Control Tower (SCT) is SAP’s solution for holistic environmental, social and governance (ESG) reporting. With the SAP SCT, recording, reporting and acting on your company’s ESG data becomes easy!

The SCT offers many predefined metrics based on legal regulations such as ESRS and the EU Taxonomy. To calculate these metrics within the SCT, you of course have to provide the data, which makes uploading data into the SCT one of the key steps. While this can be done via manual file upload, automatic integrations are the better solution; these are offered for SAP Sustainability Footprint Management or SAP Datasphere, for example. But there is also another way to upload data into the SCT: the Inbound API. Follow along for a comprehensive tutorial on how to use this API and learn how you can connect any system to the SCT yourself!

Using one example data record, we will guide you through the different API calls that you can and have to make in order to publish this record in the SCT. The code examples are presented in Python, but the information for the API requests can of course be used universally.

Requirements

In order to start using the SCT API, you need to make sure that you are subscribed to the API service.

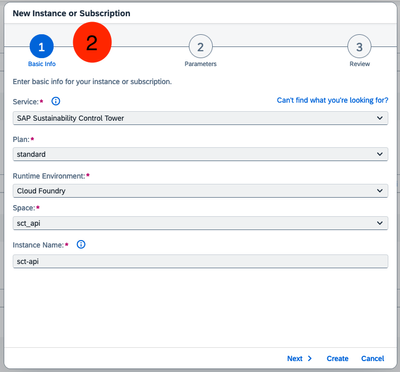

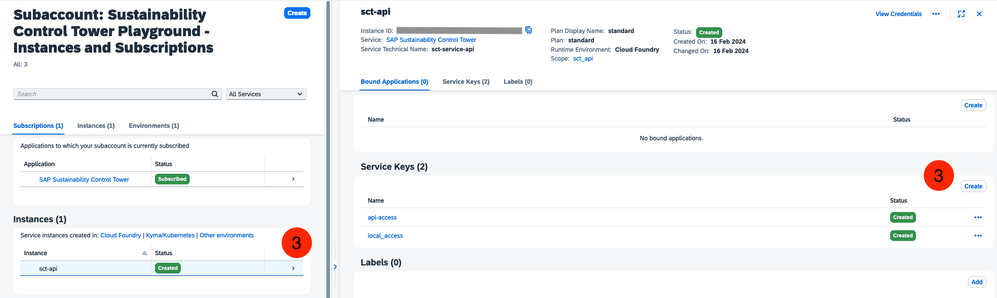

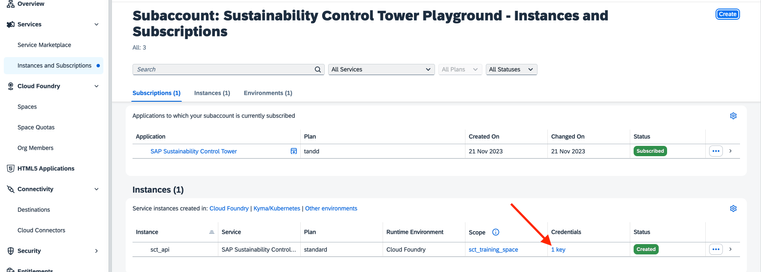

In the BTP subaccount in which you set up the subscription for the SCT service, check under “Instances and Subscriptions” whether you already have an instance of the SCT API with a service key. You need the Subaccount Administrator role for that.

If no instance has been created for your SCT yet, you can create one by clicking “Create”. Then choose Sustainability Control Tower (sct-service-api) as the service and select the standard (Instance) plan. Select a runtime of your choosing, e.g. Cloud Foundry, and a space, set an instance name and click “Create”. This creates an instance that subscribes you to the SCT API.

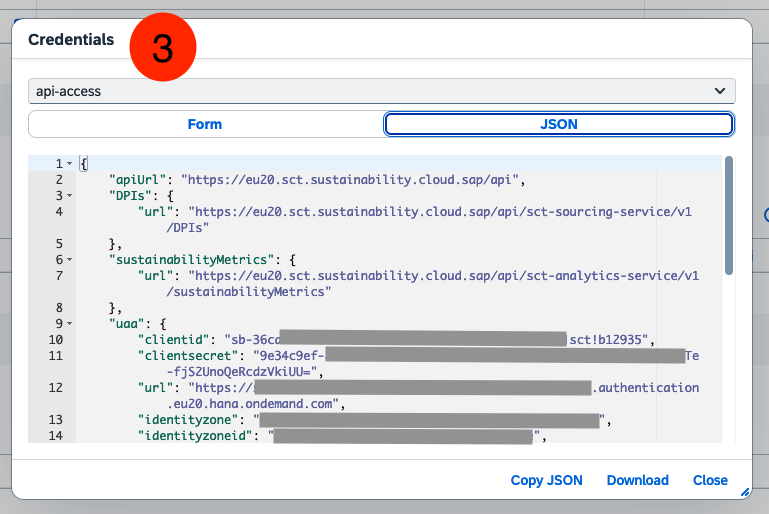

Lastly, create a service key for this instance. This contains client credentials that you will need to authorize yourself via OAuth2.0, as well as the endpoints for the Inbound and Outbound API of the SCT.

Now you are all set to connect to the API and push data to the SCT!

These steps are also explained in the SCT setup documentation (SAP Help Portal - SCT - Subscribing to the Application and Services), but access to that page is restricted to SCT system owners.

The general API reference can be found in the SAP Business Accelerator Hub.

Before using the API

Before actually pushing data to the SCT via the API, let’s have a brief discussion about the OAuth2.0 authorization and the expected data format for the SCT.

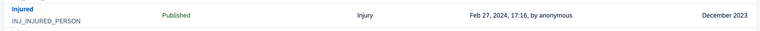

Preparing your data

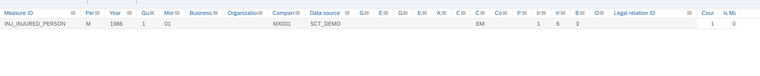

The SCT requires a specific data model for uploading individual records for any of the measures that are provided. The exact schema for each measure can be found in the “Manage ESG Data” app under Export Template or in the SCT Help Portal.

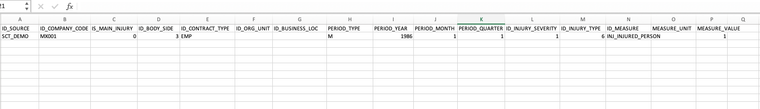

So let’s take the following example, where we have one record stored in an Excel file, using the master data structure of the SCT demo data:

We want to upload that one employee of our company with the company code MX001 was injured in January 1986. The columns ID_BODY_SIDE, ID_INJURY_SEVERITY and ID_INJURY_TYPE describe the injury (which side of the body was affected, how severe the injury was, e.g. whether it was fatal, and how it occurred). As we are talking about an injury, we upload this data to the INJ_INJURED_PERSON measure.

For the API, however, your data must be in JSON format. This means that every record (a row in your Excel file) is an element in a list of objects. Every object contains key-value pairs with the variable names (columns in your Excel file) and their respective values. See Push Data into Injuries DPI for reference on the key names (all in camelCase), as they differ from the column names of the Excel file.

This turns our example record into this format:

```json
{
  "runContext": {
    "measureId": "INJ_INJURED_PERSON",
    "isUpdateProcess": false
  },
  "injuries": [
    {
      "sourceId": "SCT_DEMO",
      "companyCodeId": "MX001",
      "isMainInjury": "0",
      "bodySideId": "3",
      "contractTypeId": "EMP",
      "orgUnitId": "",
      "businessLocationId": "",
      "periodType": "M",
      "periodYear": "1986",
      "periodMonth": "1",
      "periodQuarter": "1",
      "injurySeverityId": "1",
      "injuryTypeId": "6",
      "measureUnit": "",
      "measureValue": "1",
      "customDimensions": [
        {
          "dimensionId": "Z_CUSTOM_DIS",
          "value": "DEB"
        }
      ]
    }
  ]
}
```

In addition to our example record, which is listed under “injuries”, we also need to specify the “runContext”. The “runContext” specifies the measure you want to push data for and whether you are updating records or pushing new ones.

With “measureId”, the measure is specified. In our case, this is the aforementioned INJ_INJURED_PERSON.

With “isUpdateProcess”, you can specify whether you want to update existing data points (then “isUpdateProcess” would be true) or append new records (then “isUpdateProcess” would be false). As we want to publish new records, we set this to false.

The block for “customDimensions” is optional. It is added here only for demonstration purposes.
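If your source data lives in a spreadsheet or another system, the conversion into this payload can be scripted. The following is a minimal sketch, assuming each row has already been read into a Python dict keyed by the Excel column names (for example with the standard `csv` module after exporting the sheet to CSV). The `COLUMN_TO_KEY` mapping is hypothetical and only lists the three columns named above; complete it with the key names from the Push Data into Injuries DPI reference. The `records_key` parameter defaults to `injuries`, the list name used by the Injuries DPI in our example.

```python
import json

# Hypothetical column-to-key mapping: only the three columns named in this post
# are listed; add the remaining ones from the "Push Data into Injuries DPI" reference.
COLUMN_TO_KEY = {
    "ID_BODY_SIDE": "bodySideId",
    "ID_INJURY_SEVERITY": "injurySeverityId",
    "ID_INJURY_TYPE": "injuryTypeId",
}


def row_to_record(row: dict) -> dict:
    """Rename Excel columns to API keys; values are sent as strings, as in the example."""
    record = {}
    for column, value in row.items():
        if column not in COLUMN_TO_KEY:
            # Fail loudly instead of sending a key the DPI does not know
            raise ValueError(f"No API key mapped for column {column!r}")
        record[COLUMN_TO_KEY[column]] = "" if value is None else str(value)
    return record


def build_payload(measure_id: str, rows: list, is_update: bool = False,
                  records_key: str = "injuries") -> dict:
    """Wrap the converted rows in the runContext structure shown above."""
    return {
        "runContext": {"measureId": measure_id, "isUpdateProcess": is_update},
        records_key: [row_to_record(row) for row in rows],
    }


if __name__ == "__main__":
    rows = [{"ID_BODY_SIDE": 3, "ID_INJURY_SEVERITY": 1, "ID_INJURY_TYPE": 6}]
    print(json.dumps(build_payload("INJ_INJURED_PERSON", rows), indent=2))
```

Raising on unmapped columns is a deliberate choice in this sketch: a silently passed-through column name would most likely not match any key the DPI expects.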

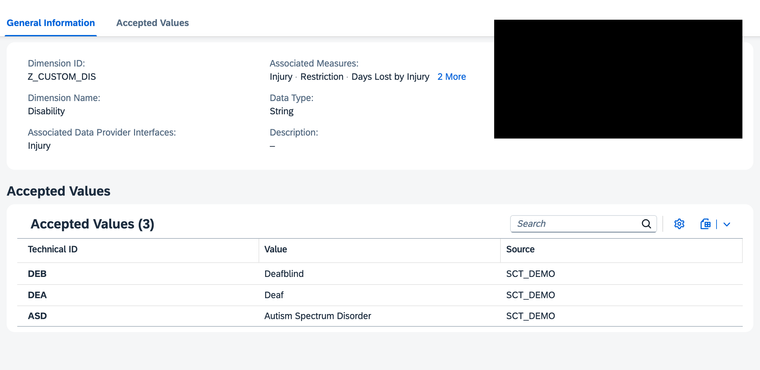

For certain DPIs, the SCT also supports pushing custom dimensions via the API. In the “Manage Custom Dimensions” app, you can set up a new custom dimension and link it to a DPI. After that, you need to upload master data for this dimension (containing the allowed values and their technical IDs). Once this is done, you can push records that contain data on this custom dimension following the structure given in the example: “dimensionId” refers to the Dimension ID, here Z_CUSTOM_DIS, and “value” refers to the technical ID of one of your accepted values, e.g. DEB in our example above. See the screenshot above for clarification.
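As a small illustration of that structure, the hypothetical helper below appends a custom dimension entry to a record dict before the record is added to the payload. It is only a sketch: it does not check whether the dimension is linked to the DPI or whether the value exists in the uploaded master data.

```python
def add_custom_dimension(record: dict, dimension_id: str, value: str) -> dict:
    """Append a {"dimensionId", "value"} entry to the record's customDimensions list."""
    record.setdefault("customDimensions", []).append(
        {"dimensionId": dimension_id, "value": value}
    )
    return record  # the record is modified in place and returned for convenience


if __name__ == "__main__":
    record = {"sourceId": "SCT_DEMO", "companyCodeId": "MX001", "measureValue": "1"}
    add_custom_dimension(record, "Z_CUSTOM_DIS", "DEB")
    print(record["customDimensions"])
```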

Retrieving your access token

As a last preparation step before pushing records to the SCT, you also have to retrieve an access token via OAuth 2.0 using the credentials of the service key set up earlier. You will need your "clientid", your "clientsecret" and your TokenURL. Your TokenURL is the URL in the "uaa" part of the service key plus “/oauth/token”. For example:

```python
token_url = "https://<your_company_space>.sct.authentication.eu20.hana.ondemand.com/oauth/token"
```
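Rather than copying these values by hand, you can read them from the downloaded service key. The sketch below assumes that "clientid", "clientsecret" and "url" all sit in the "uaa" section of the key; please check your own key, as this exact layout is an assumption here. The function names `token_settings` and `load_token_settings` are hypothetical.

```python
import json


def token_settings(service_key: dict) -> dict:
    """Extract the OAuth client credentials and the TokenURL from a parsed service key.

    Assumes clientid, clientsecret and url are all located in the "uaa" section.
    """
    uaa = service_key["uaa"]
    return {
        "client_id": uaa["clientid"],
        "client_secret": uaa["clientsecret"],
        # TokenURL = uaa URL + /oauth/token (a trailing slash is removed to avoid "//")
        "token_url": uaa["url"].rstrip("/") + "/oauth/token",
    }


def load_token_settings(path: str) -> dict:
    """Read a service key saved as a JSON file and return the token settings."""
    with open(path, "r", encoding="utf-8") as key_file:
        return token_settings(json.load(key_file))
```

With the key saved as, say, `service-key.json` (a hypothetical file name), `load_token_settings("service-key.json")` returns the three values needed for the token request.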

You can retrieve an access token by sending a POST request to the TokenURL. Here is an example of how to do it in Python:

```python
import requests

# Retrieve an access token (OAuth 2.0 client credentials flow)
client_id = "your_client_id"
client_secret = "your_client_secret"
token_url = "your_token_url"

token_data = {
    "grant_type": "client_credentials",
    "client_id": client_id,
    "client_secret": client_secret,
}

# Make a POST request against the token URL
token_response = requests.post(token_url, data=token_data)

if token_response.status_code == 200:
    print("Retrieval of access token successful")
else:
    print(f"Token request failed with status code: {token_response.status_code}")
    print(token_response.text)
    token_response.raise_for_status()  # stop here instead of parsing an error response

# Store the access token in a variable for later use
access_token = token_response.json().get("access_token")
```

Request Table

The following table gives a quick overview of all the API requests you need to make to publish data to the SCT, including the required headers and URLs.

Just follow along as we explain each step in more detail based on our example record.

The base URL for all Inbound API requests can be taken from the service key under "DPIs". The full API reference can be found in the SAP Business Accelerator Hub.

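| Purpose | Method | URL (Endpoint) | Headers | Body |
|---|---|---|---|---|
| Retrieve access token | POST | `<uaa-url>/oauth/token` | none (body sent as form data) | `grant_type=client_credentials`, `client_id`, `client_secret` |
| Push data into DPI | POST | `<DPIs-url>/<DPIYouWantToPushDataTo>` | `Authorization: Bearer <access_token>`, `DataServiceVersion: 2.0`, `Accept: application/json`, `Content-Type: application/json` | your records in JSON format, as shown in the data preparation step |
| Validate data | POST | `<DPIs-url>/validate` | `Authorization: Bearer <access_token>`, `DataServiceVersion: 2.0`, `Accept: */*`, `Content-Type: application/json` | `{"runId": "<runId>"}` |
| Get validation results | GET | `<DPIs-url>/validationResults(runId='<runId>')` | `Authorization: Bearer <access_token>`, `DataServiceVersion: 2.0`, `Accept: application/json` | none |
| Publish data | POST | `<DPIs-url>/publish` | same as for validating data | `{"runId": "<runId>"}` |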
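Since the DPI requests only differ in their "Accept" header and in whether they send a JSON body, the headers used in the code examples of this post can be generated by one small function. This is a sketch derived from those examples; the function name `sct_headers` is hypothetical.

```python
def sct_headers(access_token: str, accept: str = "application/json",
                json_body: bool = True) -> dict:
    """Build the headers used for the DPI requests (push, validate, results, publish)."""
    headers = {
        "Authorization": f"Bearer {access_token}",
        "DataServiceVersion": "2.0",
        "Accept": accept,
    }
    if json_body:
        # Push, validate and publish send a JSON body; the GET for results does not
        headers["Content-Type"] = "application/json"
    return headers


if __name__ == "__main__":
    token = "your_access_token"
    push_headers = sct_headers(token)
    validate_headers = sct_headers(token, accept="*/*")
    publish_headers = validate_headers  # publishing uses the same headers as validation
    results_headers = sct_headers(token, json_body=False)
    print(results_headers)
```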

Using the API

The process of uploading data into the SCT consists of several steps. It is not possible to push data directly into the SCT database tables. Instead, the data is first brought into the Data Provider Interface (DPI) layer, where it is validated against the master data of the SCT. After a successful validation, the data can be published to the SCT tables, and it then becomes visible as part of the metrics.

The same process applies to manual data uploads and to imports from SAP Datasphere via the “Manage ESG Data” app, for example, and it is also mandatory when using the SCT Inbound API.

Pushing Data to the DPI

So as a first step, we need to push the data to the respective DPI. In our example, the INJ_INJURED_PERSON measure is part of the Injury DPI, so we need to push the data to the “/Injuries” endpoint.

The full list of DPI endpoints is available in the API reference in the SAP Business Accelerator Hub.

When pushing to the DPI, you need to make sure the headers and URL you use are correct. You can retrieve the necessary header arguments and the URL from the example or the table.

For our injury example, it would look like this in Python (for reference, check out Code Snippet Push API):

```python
import json

import requests

# Posting data: load the prepared payload (runContext + records) from a JSON file
with open("your-data-file.json", "r") as json_file:
    data = json.load(json_file)

url = "https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs/Injuries"

headers = {
    "Authorization": f"Bearer {access_token}",  # access token retrieved above
    "DataServiceVersion": "2.0",
    "Accept": "application/json",
    "Content-Type": "application/json",
}

# Make a POST request
post_response = requests.post(url, headers=headers, json=data)

# Check whether the request was successful
if post_response.status_code == 200:
    print(post_response.text)
else:
    print(f"Request failed with status code {post_response.status_code}")
    print(post_response.text)
```

This gives us the following post_response:

```
{'@context': '$metadata#DpiService.response',
 '@metadataEtag': 'W/"249ec913ea2cb6e21b17ee04c7a"',
 'runId': 'ef696387-5e61-49ad-b0e7',
 'message': '1 records posted successfully'}
```

Whenever you successfully send a request to post data to the SCT, a "runId" is generated, which you will need to validate and publish the data. You can retrieve the "runId" from the response of the DPI like this:

```python
# Get your runId from the post_response
run_id = post_response.json().get("runId", None)

run_data = {
    "runId": run_id
}
```

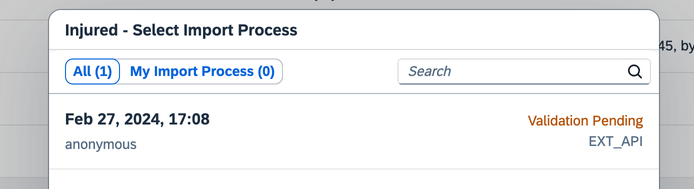

You can also see the import process being started in the SCT. In the "Manage ESG Data" app, there is an open import process for the Injury DPI telling us that a validation is pending:

Side note: Currently, it is not possible to push audit metadata (such as who pushed which data and when) via the API, so the import process is shown as started by an anonymous user.

Validating the Data

The next step after the successful push to the DPI is to validate your data. This is done by posting the "runId" to the “/validate” endpoint.

For our example record, the validation request could look like this (for reference: Code Snippet Validate API):

```python
# Validating data

# Headers
validate_headers = {
    "Authorization": f"Bearer {access_token}",  # access token retrieved above
    "DataServiceVersion": "2.0",
    "Accept": "*/*",
    "Content-Type": "application/json",
}

# URL
url = "https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs/validate"

validation_response = requests.post(url, headers=validate_headers, json=run_data)

if validation_response.status_code == 200:
    print(validation_response.text)
elif validation_response.status_code == 204:  # validation request yields no content in response
    print("Request was successful, but there is no content in the response.")
else:
    print(f"Request failed with status code {validation_response.status_code}")
    print(validation_response.text)
```

With this request, we trigger the validation in the CPE environment of the SCT. The validation runs asynchronously, so we might have to wait a bit for the results to be ready.

Getting the validation results

If the validation request was successful, you can retrieve the validation results (Get validation results API).

As described in the overview table, a GET request against “/validationResults(runId='<runId>')” is needed to fetch the result of the validation run. Note that the "runId" parameter has to be enclosed in single quotation marks.
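Because the quotes around the "runId" are easy to lose when building the URL by hand, the hypothetical helpers below build the DPI URLs from the base URL in the service key. The example base URL is the one used throughout this post; yours may differ.

```python
def dpi_url(base_url: str, path: str) -> str:
    """Join the DPIs base URL from the service key with an endpoint path."""
    return f"{base_url.rstrip('/')}/{path.lstrip('/')}"


def validation_results_url(base_url: str, run_id: str) -> str:
    """URL for GET validationResults; the runId must be wrapped in single quotes."""
    return dpi_url(base_url, f"validationResults(runId='{run_id}')")


if __name__ == "__main__":
    base = "https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs"
    print(dpi_url(base, "Injuries"))
    print(validation_results_url(base, "ef696387-5e61-49ad-b0e7"))
```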

Since the validation runs asynchronously, you need to make sure it has completed before you retrieve the results. Therefore, it makes sense to send the GET request for the validation results repeatedly until the validation is done. Here is one approach:

```python
import time

## Get validation results

# Headers
response_headers = {
    "Authorization": f"Bearer {access_token}",
    "DataServiceVersion": "2.0",
    "Accept": "application/json",
}

# URL (note the single quotes around the runId)
url = f"https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs/validationResults(runId='{run_id}')"

validation_results = {}
status = "IN_PROGRESS"

while status == "IN_PROGRESS":
    response = requests.get(url, headers=response_headers)
    validation_results = response.json()
    status = validation_results.get("status")
    if status == "IN_PROGRESS":
        print("Status is still IN_PROGRESS...")
        time.sleep(5)  # wait before polling again

print(f"Status: {status}. Validation completed.")
print(validation_results)
```

The validation results will look like this:

```
{'@context': '$metadata#DpiService.validationResponse',
 '@metadataEtag': 'W/"249ec913ea2cb6e21b17ee04c7a"',
 'runId': 'ef696387-5e61-49ad-b0e7',
 'status': 'COMPLETED',
 'errorCount': 0,
 'totalCount': 1}
```

The status indicates that the validation is complete. "errorCount" indicates how many data points (rows in your original Excel file) are invalid and cannot be uploaded. "totalCount" indicates how many data points you have pushed and checked during validation.
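The loop above keeps polling for as long as the status is IN_PROGRESS. In an automated integration, you may want an upper bound. The following sketch adds a timeout, assuming (as the loop above does) that any status other than IN_PROGRESS is final. `wait_for_validation` is a hypothetical name, and `fetch` is any function that returns the parsed validation results.

```python
import time
from typing import Callable


def wait_for_validation(fetch: Callable[[], dict], interval: float = 5.0,
                        timeout: float = 300.0) -> dict:
    """Call fetch() until the status is no longer IN_PROGRESS, then return the results.

    Raises TimeoutError if the validation is still IN_PROGRESS after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        results = fetch()
        if results.get("status") != "IN_PROGRESS":
            return results  # any status other than IN_PROGRESS is treated as final
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Validation still IN_PROGRESS after {timeout} seconds")
        time.sleep(interval)
```

With the variables from the loop above, it could be called as `wait_for_validation(lambda: requests.get(url, headers=response_headers).json())`.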

If you run into issues with the validation, there are two possible outcomes. If all your records are invalid, "errorCount" equals "totalCount" and the status is NO_VALID_RECORDS:

```
{'@context': '$metadata#DpiService.validationResponse',
 '@metadataEtag': 'W/"249ec913ea2cb6e21b17ee04c7a"',
 'runId': 'ef696387-5e61-49ad-b0e7',
 'status': 'NO_VALID_RECORDS',
 'errorCount': 2,
 'totalCount': 2}
```

If only some of your records are invalid, "errorCount" indicates how many, but the status is COMPLETED, as the valid records can still be published:

```
{'@context': '$metadata#DpiService.validationResponse',
 '@metadataEtag': 'W/"249ec913ea2cb6e21b17ee04c7a"',
 'runId': 'ef696387-5e61-49ad-b0e7',
 'status': 'COMPLETED',
 'errorCount': 1,
 'totalCount': 2}
```
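Before publishing automatically, a script can decide from these fields whether there is anything to publish. The sketch below encodes the two outcomes described above. Whether you want to publish at all when some records failed is a policy decision, so it is exposed as the hypothetical `allow_partial` flag.

```python
def publishable_count(results: dict, allow_partial: bool = True) -> int:
    """Return how many validated records could be published (0 means: do not publish)."""
    if results.get("status") != "COMPLETED":
        return 0  # e.g. NO_VALID_RECORDS, or the validation is still running
    errors = results.get("errorCount", 0)
    if errors and not allow_partial:
        return 0  # fix the data via "Manage ESG Data" first
    return results.get("totalCount", 0) - errors
```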

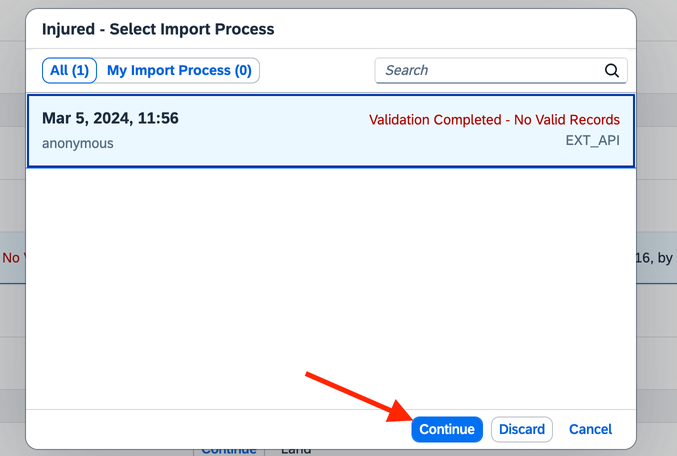

Unfortunately, the API response does not tell you what exactly went wrong. To view the detailed validation results, go to the “Manage ESG Data” app of the SCT and select the import process that you triggered:

By clicking “Continue”, you will be directed to the error log. Now you can see what went wrong, resolve the issues in your data and restart the import process.

Thankfully, our example data is all valid, so we can publish it now. In the SCT, we can see that publishing is pending.

Publishing the Data

If the validation results are all fine, you can finally publish your data to the SCT (Code Snippet Publish Data API):

```python
## Publish the data if the validation results are clear
url = "https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs/publish"

publish_headers = validate_headers  # same headers as for the validation request

# run_data and validate_headers have been defined above
publish_response = requests.post(url, headers=publish_headers, json=run_data)

if publish_response.status_code == 204:  # publish request yields no content in response
    print("Publishing successful.")
else:
    print(f"Request failed with status code {publish_response.status_code}")
    print(publish_response.text)
```

And you are done! 😁

Your data should now be visible in the SCT, and the import process in the "Manage ESG Data" app should be finished with the status “Published”.

We can also have a look at the MTDAC table of the CPE environment, which contains all of the uploaded records. And indeed, our record for one injured person in January 1986 is visible.
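To automate the upload, the individual requests can be chained into one function. The sketch below assumes a `requests.Session` whose headers already contain the bearer token (for example `session.headers["Authorization"] = f"Bearer {access_token}"`) and reuses the endpoints, headers and status values shown in this post. The function name `upload_to_sct` and its parameters are hypothetical; it publishes only if the validation finished with status COMPLETED and at least one valid record.

```python
import time


def upload_to_sct(session, dpi_base_url: str, dpi: str, payload: dict,
                  poll_interval: float = 5.0, timeout: float = 300.0) -> dict:
    """Push a payload to a DPI, validate it and publish it if any record is valid.

    `session` is assumed to behave like a requests.Session whose headers already
    carry the bearer token. Returns the validation results plus a "published" flag.
    """
    base = dpi_base_url.rstrip("/")
    json_headers = {"DataServiceVersion": "2.0", "Content-Type": "application/json"}
    action_headers = {**json_headers, "Accept": "*/*"}

    # 1. Push the records into the DPI layer and keep the runId
    push = session.post(f"{base}/{dpi}", json=payload,
                        headers={**json_headers, "Accept": "application/json"})
    push.raise_for_status()
    run_data = {"runId": push.json()["runId"]}

    # 2. Trigger the asynchronous validation run
    session.post(f"{base}/validate", json=run_data, headers=action_headers).raise_for_status()

    # 3. Poll the results until the status is no longer IN_PROGRESS
    results_url = f"{base}/validationResults(runId='{run_data['runId']}')"
    deadline = time.monotonic() + timeout
    while True:
        response = session.get(results_url,
                               headers={"DataServiceVersion": "2.0", "Accept": "application/json"})
        response.raise_for_status()
        results = response.json()
        if results.get("status") != "IN_PROGRESS":
            break
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Validation still IN_PROGRESS after {timeout} seconds")
        time.sleep(poll_interval)

    # 4. Publish only if the run completed with at least one valid record
    valid_records = results.get("totalCount", 0) - results.get("errorCount", 0)
    published = results.get("status") == "COMPLETED" and valid_records > 0
    if published:
        session.post(f"{base}/publish", json=run_data, headers=action_headers).raise_for_status()
    return {**results, "published": published}
```

For our example, a call could look like `upload_to_sct(session, "https://eu20.sct.sustainability.cloud.sap/api/sct-sourcing-service/v1/DPIs", "Injuries", data)`. Passing the session in keeps the function independent of how the token was obtained.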

Summary

In this blog post, we have shown how you can use the SCT Inbound API to push data to the SCT. This enables you to connect any source system you want to the SCT and automate the data upload process. For example, you could build a simple side-by-side extension on SAP BTP that preprocesses data before uploading it, or use SAP Build Process Automation with a simple workflow.

Stay tuned for follow-up blog posts on these topics in the coming weeks!

We hope you enjoyed this comprehensive overview of the SCT Inbound API.

Best regards,

Eva and Jonathan
