Motivation and Key Artefacts of SAP Data and Analy...

Technology Blogs by SAP

05-15-2023

8:04 AM

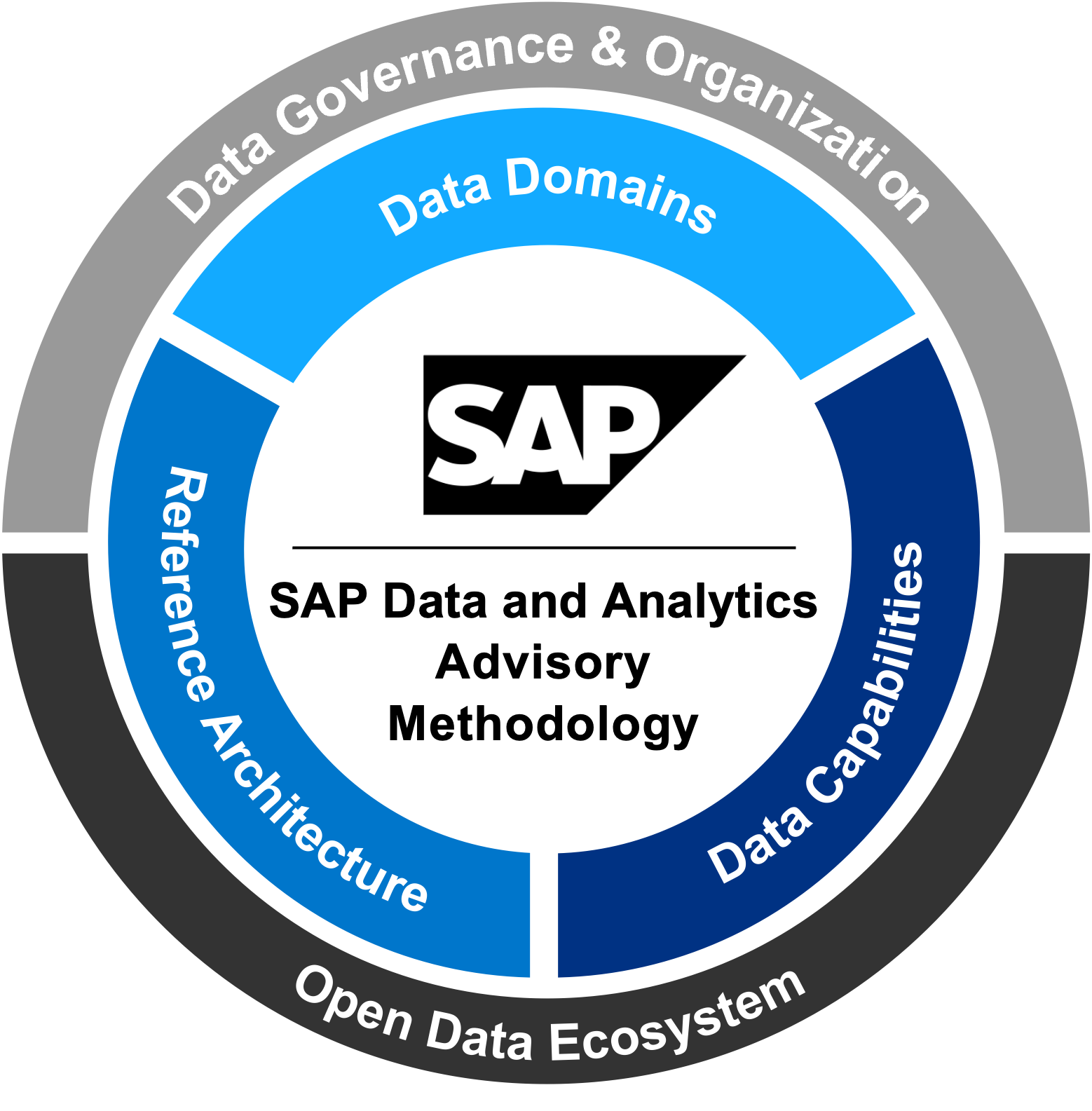

On March 15th, 2023, we published the first Release of the SAP Data and Analytics Advisory Methodology and provided a high-level overview of the approach, key artefacts, and content.


Today, we start a blog series that provides more detail on the concept and on how to apply the methodology to companies' data-driven challenges and opportunities.

In this blog, I would like to elaborate on SAP's motivation for developing such a methodology and to explain the key concepts that make this approach special and different. The subsequent blogs will describe each phase of the architecture development approach in more detail and explain the proposed tools and templates.

Motivation

Although most companies understand that the data generated by their business operations is an asset that can create value for business functions and their customers, most struggle to realize this potential.

The biggest issue is the lack of visibility into, and understanding of, the data companies own, let alone easy and secure access to that data for analysis. First of all, this refers to scattered on-premise IT landscapes with several custom-built or off-the-shelf business applications that support business operations and create large volumes of mainly structured data stored in local databases. (Public) cloud applications make data visibility and accessibility even harder, as the underlying infrastructure is shared and managed by cloud service providers.

All these business applications are designed to support business processes but are not built to provide easy access for analyzing the data they produce. Therefore, this transactional data is replicated, transformed, or harmonized into all types of data stores, such as

- Data Warehouses

- Data Lakes

- RDBMS

- Hyperscaler cloud data stores

- Excel

- Access

There, the data is further processed, analyzed, changed, or used to create new data (e.g., by calculating key figures or creating aggregates). Quite often, this replication happens several times, with the same data serving different stakeholders or functions.

This data sprawl not only further increases complexity and data volume; it also strips away the business semantics and other context information required to truly understand the data. As a result, the insights, actions, or even decisions derived from this data might be based on inaccurate facts.

This leads to the ultimate problem statement:

“The potential to gain value from data remains largely untapped as companies struggle to keep pace with simply accessing, storing, and harmonizing the data in complex and unintegrated data landscapes.”

While trusted and well-understood data is the essential ingredient for generating value, technology is the enabler, the transformer of data.

Over the last two decades, several new (digital) technologies have emerged or matured that create data-to-value potential that was unimaginable before. Technologies like the Internet of Things, cloud computing, mobile devices, advanced analytics, big data storage & processing, knowledge graphs, and artificial intelligence (especially machine learning) have been game changers.

This led to the emergence of new off-the-shelf and open-source software products and cloud-based services that provide the tools and functionality to process and analyze huge volumes of structured or unstructured data, identify useful patterns, or create essential business insights.

Given the diverse offerings of software vendors and open-source components that provide comparable data & analytics capabilities and features, the challenge is to develop a suitable solution architecture that delivers the customer-specific, data-driven business outcome.

In summary, it takes trusted data combined with the right technology architecture to create business value.

To apply this formula to diverse data-driven business use cases, an architecture methodology is required that focuses on developing tailored data-to-value solution architectures.

These solution architectures need to consider:

- integration of required data sources,

- capabilities to process, transform and store the required data in defined data sets called “data products”,

- and the digital technology solutions mentioned before that transform the data into valuable insights.

This leads me to SAP's actual motivation for developing this methodology.

- Let's start with data:

According to Thomson Data, almost 77% of global transactions rely on SAP software, making SAP the largest ERP vendor in the world.

This means that a large share of the structured transactional and master data for key business operations, such as sales, customer service, procurement, supply chain, and manufacturing, but also finance & controlling and payroll, is created by SAP business applications and stored in their databases. These transactional systems also hold the metadata, and especially the business semantics, that are key to understanding and analyzing this data. This led to the idea of creating a Data Domain Reference Model in which SAP data is organized and described from a business perspective to provide a common language for SAP data.

- Second: Technology.

As mentioned before, there are many technology concepts that can help create value from data. Many business software providers have included these technology concepts in their solutions and services. In addition, many open-source solutions are available free of charge.

SAP has provided such solutions for a long time. Nowadays, many of them are cloud-based and built on the SAP Business Technology Platform (SAP BTP), such as

- SAP Analytics Cloud for Data Analytics, Predictive & Planning

- SAP Datasphere to enable an Enterprise Data Fabric or Data Warehouse

- SAP HANA Cloud as an in-memory database, including a data lake extension

- SAP Data Intelligence Cloud for data orchestration & integration

SAP is therefore well positioned to provide “reference architectures” that accelerate solution architecture design for suitable data-to-value use cases. Because many of these reference architectures are centered around SAP BTP, a proof of concept can be conducted quickly to validate the business concept.

- Third: Business Value Patterns.

The methodology recommends describing use cases that explain how value is created from a business perspective. Use cases help to understand the business context and requirements, especially for the “data product”, and support fine-tuning the business concept that creates the desired business outcome.

Many customer-specific use cases share requirements, architecture patterns, or technical capabilities. Thus, they can be organized into categories and patterns to foster reuse.

The methodology distinguishes two types of use case categories:

- Technical use case categories focus on providing value to the IT organization, e.g., by simplifying data management or by providing better data governance.

- Business use case categories focus on value creation for business functions or customers, e.g., by optimizing business operations, enabling better decision making or providing new products and services to customers.

As mentioned before, SAP has started creating SAP BTP-centric reference architectures that provide the capabilities required by the use case patterns in these categories.

Last but not least, SAP has strong methodology competence to help customers get the most value out of SAP software products. Examples are:

- SAP Activate

- SAP Integration Solution Advisory Methodology

- SAP Application Extension Methodology

- SAP Enterprise Architecture Framework

All of these were good reasons to develop and provide a structured, repeatable, and adaptable approach, which finally became the “SAP Data & Analytics Advisory Methodology”.

Vision and Mission

Now that we have explained the motivation (i.e., the “why”), let us talk about what we want to achieve with the methodology in the long run and what is in it for our customers.

Let’s start with the latter, the Vision:

“We want to enable our customers to swiftly design and validate data-driven business innovations that can be subsequently implemented and scaled-out on an enterprise level.”

- swiftly design and validate: the methodology should provide tools and content that help accelerate the definition of a target architecture for a data-driven business outcome (“design”) and provide an environment to test-drive the architecture on short notice (“validate”)

- subsequently implemented and scaled-out: after validating the architecture, use the same environment to deploy the solution in a pilot project (“implement”) and manage a company-wide rollout (“scale-out”)

This leads us to the question of how we want to achieve it, i.e., our Mission:

“We provide a structured process to develop a tailored solution architecture that delivers a defined data-to-value business outcome. This architecture approach is enhanced by consistent data domain & capability models, and by providing SAP BTP-centric reference architectures that can easily be realized.”

- structured process: the approach to developing the target architecture follows established architecture methodologies such as the TOGAF ADM (Architecture Development Method), which define the sequence of steps to be executed, from scope definition to implementation timeline (roadmap or project plan).

- tailored solution architecture: the main deliverable is a solution architecture that is fit for purpose, is aligned with the IT and data strategy, and therefore fits into the customer's IT landscape.

- data domain & capability models: the Data Domain Reference Model shall provide a common business language for SAP data, while the Data & Analytics Capability Model intends to standardize the building blocks used during architecture design.

- reference architectures: Use Case Patterns & Categories provide a framework for assigning customer use cases, which are then mapped to generic and SAP BTP-centric reference architectures that provide predefined end-to-end architecture designs.
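To make the last point more tangible, the chain from customer use case to use case pattern and category, and from there to reference architectures, can be pictured as a simple lookup. The following is only a minimal sketch under stated assumptions: it models the catalog as an in-memory Python list, and all class names, pattern and category names, and reference architecture IDs (such as `RA-PLANNING`) are hypothetical illustrations, not content published with the methodology.

```python
from dataclasses import dataclass
from enum import Enum


class CategoryType(Enum):
    """The two kinds of use case categories the methodology distinguishes."""
    TECHNICAL = "technical"  # value for the IT organization
    BUSINESS = "business"    # value for business functions or customers


@dataclass(frozen=True)
class UseCasePattern:
    """A reusable use case pattern grouped under a category (hypothetical model)."""
    name: str
    category: str
    category_type: CategoryType
    # Hypothetical IDs of reference architectures that implement this pattern
    reference_architectures: tuple = ()


@dataclass(frozen=True)
class CustomerUseCase:
    """A customer-specific use case assigned to one pattern (hypothetical model)."""
    title: str
    pattern_name: str


# Hypothetical catalog entries; names are illustrative only.
CATALOG = [
    UseCasePattern(
        name="Data Federation",
        category="Simplify Data Management",
        category_type=CategoryType.TECHNICAL,
        reference_architectures=("RA-DATA-FEDERATION",),
    ),
    UseCasePattern(
        name="Planning & Forecasting",
        category="Better Decision Making",
        category_type=CategoryType.BUSINESS,
        reference_architectures=("RA-PLANNING", "RA-PREDICTIVE"),
    ),
]


def patterns_of_type(catalog, category_type):
    """List the names of all patterns belonging to one category type."""
    return [p.name for p in catalog if p.category_type is category_type]


def candidate_architectures(use_case, catalog):
    """Return the reference architectures mapped to the use case's pattern.

    An empty list means no pattern in the catalog matches the use case.
    """
    by_name = {p.name: p for p in catalog}
    pattern = by_name.get(use_case.pattern_name)
    return list(pattern.reference_architectures) if pattern is not None else []


if __name__ == "__main__":
    sales_planning = CustomerUseCase("Sales volume planning", "Planning & Forecasting")
    print(candidate_architectures(sales_planning, CATALOG))
    # ['RA-PLANNING', 'RA-PREDICTIVE']
```

Keeping the mapping as data rather than logic reflects the reuse idea behind the patterns: in this sketch, covering a new use case pattern only means adding a catalog entry.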

The next blog will describe the core architecture development concept and how the key artefacts & models outlined above are used to support an efficient process and accelerate the architecture design.
