
On February 17, the SAP Community site -- along with many other online platforms -- will need to comply with Digital Services Act (DSA) regulations set forth by the European Union (EU). As a result, we will be rolling out site changes and processes that will have a major impact on how moderation works on SAP Community.

Compliance is simple in some cases -- and we took the necessary steps months ago. For example, DSA requires us to display the average number of visitors from EU countries, and we provide that information monthly. But moderation is far more complicated -- and will change the way we work with members and how you work with us when it comes to processing alerts, removing content, and banning users.

In the pre-DSA days, it was easy. Members reported problematic content, or we found it ourselves. We would investigate and determine whether we were dealing with a spammer or someone who otherwise violated our Rules of Engagement -- by plagiarizing constantly, behaving in an unacceptable manner, and so on.

Often, it would be obvious -- spam isn't exactly subtle -- and so we could take swift action, removing the content (and its poster) from the site. Sometimes, though, we might have to dig a little deeper and decide to reject an alert.

Whatever the decision, though, it was usually final. We'd take the actions and move on. If you got caught spamming, for example, your content and you were gone, and that was pretty much that. Yes, we'd keep track internally, but the whole process was quick.

Those days are over.

To comply with DSA, we must record every single moderation action in the DSA Transparency Database. We must also provide information to those affected by a moderation decision, and they will have up to 6 months to contest the decision.

On top of that, moderation alerts can even come from non-members and/or visitors who aren't logged in. Everyone who stops by SAP Community must have the ability to report content, per DSA.

The regulations won't just affect us. They will affect you as well.

Members will still be able to "Report Inappropriate Content":

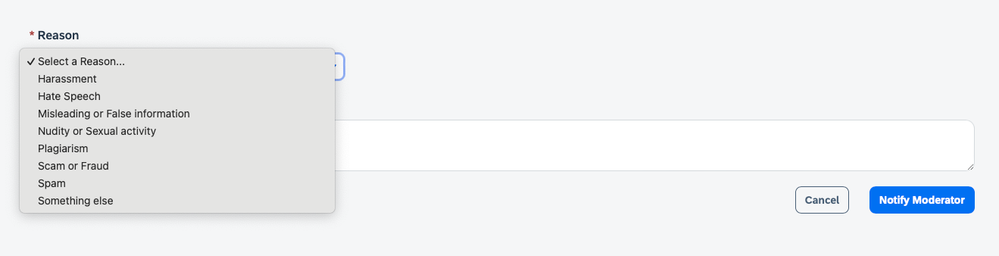

But they'll then be prompted to provide additional information about the report (similar to what we had in the previous platform):

Visitors who aren't logged in will be able to do the same -- reporting content and providing reasons why -- but they must also give additional details in order for the moderation team to process and comply:

In both cases, the person who reported the content will immediately receive a notification confirming that we got the report, either through the system (if logged in) or from our moderators@sap.com mailbox (if not logged in). They'll receive an incident number that the moderation team will use to track the alert and its outcome. At that point, the clock starts ticking: the moderation team has 72 business hours to investigate and render a decision. Our team will use the moderators@sap.com mailbox to communicate with the person who reported the content and, if we need to take action that affects them, with the member whose content was reported.

If the moderation team agrees with the report, they'll take the necessary steps (e.g., removal of content and possibly banning the user from the community) and explain the decision to all parties involved.

If the team disagrees, they'll communicate this decision to the member who reported the content. (In this scenario, we don't inform the member who was reported -- because we won't be modifying any of his or her content.)

If members are unhappy with a decision, they have up to 6 months to lodge a formal protest by replying to moderators@sap.com. If we agreed with an alert (removing content and/or banning a member), that member can contest the moderation team's decision. If the team decides that the member has a compelling case, we may restore the content and/or reinstate his or her account.

A similar process occurs if we disagree with an alert: The member who submitted the alert has up to 6 months to contest our decision.

While members may contest moderation decisions, the moderation team has the final say and may stick with the original decision.

For every moderation action, the team will need to capture information for the DSA Transparency Database, as well as for an annual report that we must publish on our site. Both the database and the report will include various metrics, but member names will be excluded for the sake of privacy.

Note that these processes and policies apply only to moderation actions that remove or modify content, such as removing a post, editing something (e.g., removing an email address), or taking out an image. Some simple modification tasks, such as changing a tag, do not fall under DSA regulations.

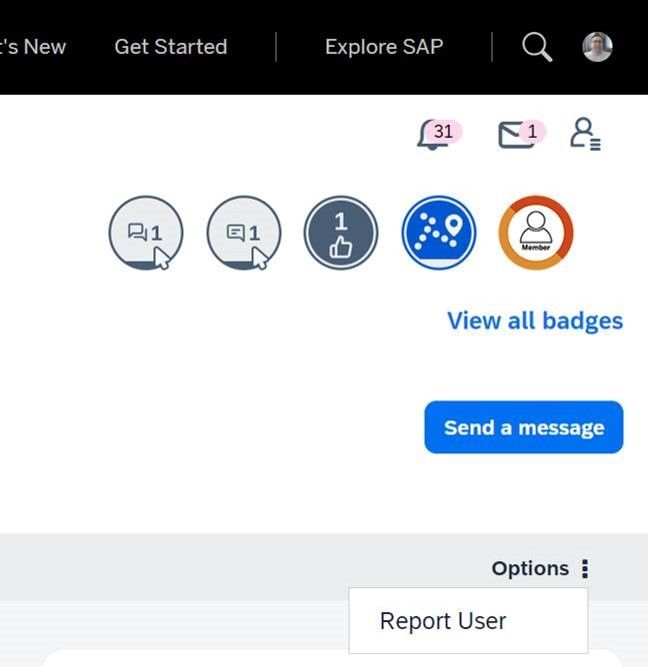

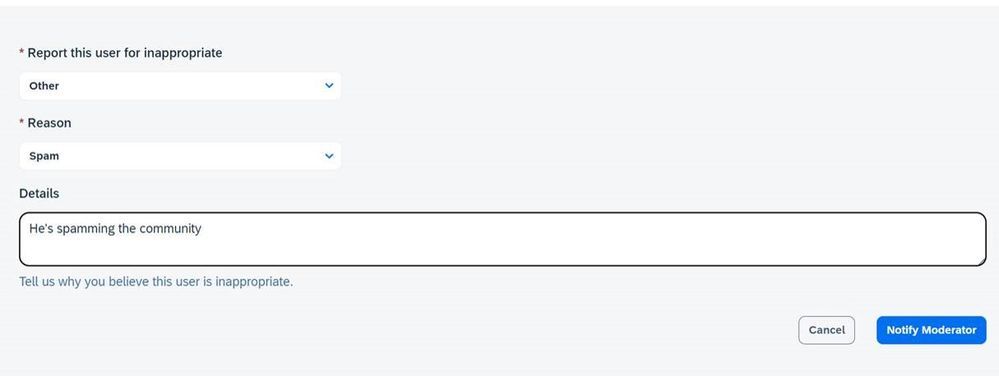

In addition to reporting content, members can report other users directly. In fact, we recommend that members take this approach when they discover someone who has spammed the community multiple times.

To bring the user to our attention, click his or her display name, then select "Report User" from the Options menu:

From there, select "Other," then "Spam":

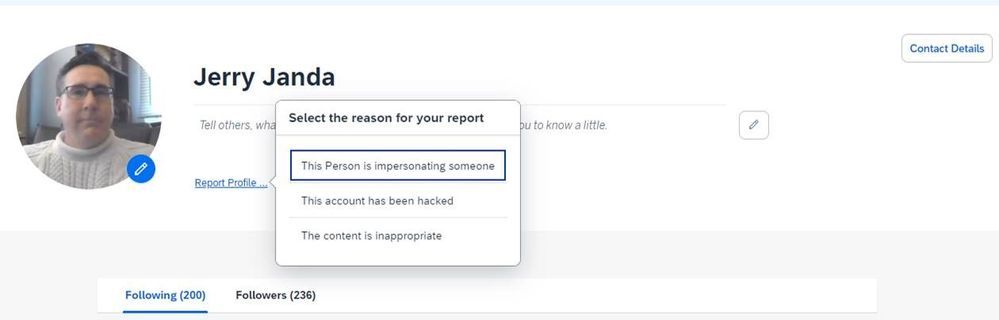

You can also report profile.sap.com profiles…

Why do we recommend reporting the user (instead of the content) for prolific spammers? If a spammer creates 50 pieces of content, and you report all 50, then we have to open and process 50 individual cases, send several emails for each case, etc. But if you report the profile, it counts as one case, and we can ban the user and delete all of his or her content as part of that one case. That makes things move much more quickly (and means fewer messages from us to you).

As you can see, moderation is going to become more complicated, both for our members and for us. Still, these are the steps we must take to comply with DSA.
