Replication Flow Blog Series Part 1 – Overview

- Replication Flow Blog Series Part 1 – Overview

- Replication Flow Blog Series Part 2 – Premium Outbound Integration

- Replication Flows Blog Series Part 3 – Integration with Kafka

- Replication Flow Blog Series Part 4 – Sizing

Data Integration is an essential topic in a Business Data Fabric like SAP Datasphere. Replication Flows are the cornerstone for feeding SAP Datasphere with data, especially from SAP ABAP sources. There is also a strong need to move enriched data from SAP Datasphere into external environments to support certain use cases. Moving data out into external targets is called premium outbound integration and is covered in the next blog.

Introduction to Replication Flows

The intention of Replication Flows is to simplify the realization of data replication use cases in SAP Datasphere. Replication Flow is the name of the artefact that a user creates and maintains in the SAP Datasphere Data Builder application.

The main functionalities of Replication Flows cover:

- Model data replication from a selected source to a selected target. Replication Flows offer a simplified way to realize mass data replication use cases and move data easily from a source to a target system.

- An initial focus on replication with simple projections and filters, e.g., adding, adjusting and removing columns as well as providing row-level filters on one or multiple columns.

- Simplified realization of cloud-to-cloud replication scenarios without the need to install and maintain an on-premise component; e.g., a component like the Data Provisioning Agent for SAP HANA SDI is not needed for Replication Flows.

- A dedicated user interface for modelling mass data replication, embedded in the existing modeler application and optimized for mass data replication scenarios to offer a simplified user experience.

- Support for initial load as well as delta load, where delta is mainly based on trigger-based change data capture (CDC) using logging tables in the connected source systems (except for ODP sources, where delta is handled without triggers).

- Support for parallelization during the initial load by partitioning the data set.

- Support for resiliency functionality and automated recovery in error scenarios.
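The trigger-based delta mechanism mentioned above can be illustrated with a small, purely conceptual Python sketch of the general logging-table CDC technique: triggers on a source table append one entry per insert, update or delete to a logging table, and the replication process replays those entries in sequence order against the target. Everything here (`LogEntry`, `apply_delta`, and the in-memory dictionaries standing in for tables) is hypothetical; it is not how SAP Datasphere is implemented internally and it is not an SAP API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LogEntry:
    seq: int             # increasing sequence number written by the (hypothetical) trigger
    op: str              # "I" (insert), "U" (update) or "D" (delete)
    key: str             # primary key of the changed source row
    row: Optional[dict]  # row image after the change (None for deletes)


def apply_delta(target: Dict[str, dict], log: List[LogEntry], last_seq: int) -> int:
    """Replay logging-table entries newer than last_seq onto the target.

    Returns the highest sequence number applied so that a later run can
    continue from there instead of starting over.
    """
    for entry in sorted(log, key=lambda e: e.seq):
        if entry.seq <= last_seq:
            continue  # already applied in an earlier run
        if entry.op in ("I", "U"):
            target[entry.key] = dict(entry.row)  # upsert the latest row image
        elif entry.op == "D":
            target.pop(entry.key, None)          # delete the row if present
        else:
            raise ValueError(f"unknown operation {entry.op!r}")
        last_seq = entry.seq
    return last_seq


# Target state right after the initial (full) load.
target = {"1": {"id": "1", "qty": 5}, "2": {"id": "2", "qty": 7}}
# Changes recorded in the logging table after the initial load.
log = [
    LogEntry(1, "U", "1", {"id": "1", "qty": 6}),
    LogEntry(2, "I", "3", {"id": "3", "qty": 1}),
    LogEntry(3, "D", "2", None),
]
checkpoint = apply_delta(target, log, last_seq=0)
print(checkpoint, sorted(target))  # 3 ['1', '3']
```

Remembering the last applied sequence number is one simple way to make such a delta process restartable after an error; the actual recovery mechanism of Replication Flows may work differently.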

See more details in the graphic below.

Overview

Overview of Replication Flow Connectivity

The following source and target connections can currently be used when creating a Replication Flow. The current list can also be checked in our product documentation under the following link:

Replication Flow source and target connectivity

The supported source connectivity includes:

- SAP Datasphere

- SAP S/4HANA Cloud

- SAP S/4HANA on-Premise

- SAP Business Suite & SAP S/4HANA Foundation via SLT

- SAP Business Warehouse

- Azure MS SQL database

The supported target connectivity includes:

- SAP Datasphere

- SAP HANA Cloud

- SAP HANA on-Premise

- SAP HANA Data Lake Files (HDL-Files)

- Google BigQuery

- Google Cloud Storage

- Amazon Simple Storage Service (Amazon S3)

- Azure Data Lake Storage Gen2
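If you want to sanity-check a planned scenario against the lists above, for example in your own project tooling, a minimal sketch can encode them as sets. The connection names are taken from this blog and reflect the state at the time of writing; `check_pair` is a hypothetical helper, and the product documentation remains the authoritative source.

```python
# Connection types as listed in this blog at the time of writing.
# The SAP Datasphere product documentation is the authoritative list.
SUPPORTED_SOURCES = frozenset({
    "SAP Datasphere",
    "SAP S/4HANA Cloud",
    "SAP S/4HANA on-Premise",
    "SAP Business Suite & SAP S/4HANA Foundation via SLT",
    "SAP Business Warehouse",
    "Azure MS SQL database",
})

SUPPORTED_TARGETS = frozenset({
    "SAP Datasphere",
    "SAP HANA Cloud",
    "SAP HANA on-Premise",
    "SAP HANA Data Lake Files",
    "Google BigQuery",
    "Google Cloud Storage",
    "Amazon S3",
    "Azure Data Lake Gen2",
})


def check_pair(source: str, target: str) -> list:
    """Return a list of problems; an empty list means both ends are listed as supported."""
    problems = []
    if source not in SUPPORTED_SOURCES:
        problems.append(f"source not listed as supported: {source}")
    if target not in SUPPORTED_TARGETS:
        problems.append(f"target not listed as supported: {target}")
    return problems


print(check_pair("SAP S/4HANA Cloud", "Google BigQuery"))  # []
print(check_pair("Google BigQuery", "SAP Datasphere"))
# ['source not listed as supported: Google BigQuery']
```

A pair that passes this check can still be subject to further limitations, which is why the SAP Note on important considerations for Replication Flows is worth checking as well.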

Some target connections offer additional configuration settings, such as different file formats for target object stores (e.g., CSV, Parquet). More information about these configuration settings can be found in our product documentation.

Note: Before you start creating your Replication Flow, take a look at the following SAP Note: https://me.sap.com - Important considerations for SAP Datasphere Replication Flows.

It contains a list of important considerations as well as limitations of Replication Flows in SAP Datasphere. Please check whether your scenario might be affected before you start building Replication Flows.

In case you have any feedback for future enhancements of Replication Flows, please use the SAP Influence portal.

Create a Replication Flow in SAP Datasphere

This chapter provides an overview of how to create a Replication Flow, including an explanation of all relevant settings a user needs to define in the different steps.

In this example we will show you how to connect an SAP S/4HANA system and replicate an ABAP CDS view into SAP Datasphere.

First of all, we open the Data Builder application in SAP Datasphere.

Homescreen

In the Data Builder, open the Flows tab, where you can create a Replication Flow.

Select S/4HANA as a source

Select New Replication Flow

Configure Source Connection

First, select a source connection by clicking the Select Source Connection button.

Select Source Connection

Then in the pop-up dialog, select the connection to SAP S/4HANA by selecting the connection S4_HANA.

In a second step, you need to select a source container by clicking on Select Source Container:

Select Source Container

The definition of a container depends on the source system you have selected. The following examples show what a container can be for common source systems supported by Replication Flows:

- In case of a database source system (e.g., SAP HANA Cloud or Microsoft Azure SQL), a container is the database schema in which the source data sets are accessible.

- In case of an SAP ABAP-based system, the container is the logical object type you want to replicate, for example:

  - CDS: for CDS View-based replication from SAP S/4HANA, browsing the application component hierarchy in which the CDS Views are located

  - CDS_EXTRACTION: for CDS View-based replication from SAP S/4HANA, where searching for CDS Views returns a flat result list without the application component hierarchy

  - ODP_BW and ODP_SAPI: for ODP-based data replication from SAP sources

  - SLT - Tables: for table-based extraction from SAP source systems, where you need to select the pre-created SLT configuration

In the pop-up dialog, select the folder CDS to replicate CDS Views from the selected SAP S/4HANA system.

Select CDS Views

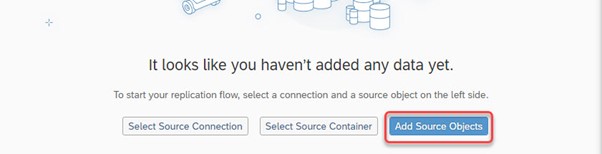

Next, we add the source data sets (= CDS Views) that will be replicated in this example. To do so, click the Add Source Objects button.

Add Source Objects

After browsing through the navigation bar, we select the following four custom ABAP CDS Views from the folder TMP:

- Z_CDS_EPM_BUPA

- Z_CDS_EPM_PD

- Z_CDS_EPM_SO

- Z_CDS_EPM_SO_I

Select custom CDS views

Afterwards, click Next and then Add Selection to add the four CDS Views to your Replication Flow.

Add selection

After the selection is successful, you will see that the CDS Views are now available in your Replication Flow.

Overview screen

To remove a replication object from your Replication Flow, select the object and click the remove button next to the source object name.

Remove object

Configure Target Connection

Define your target connection as part of the data replication scenario. In this example, we replicate the data from SAP S/4HANA into local tables in SAP Datasphere as the target system.

To select the target connection, click the Select Target Connection button in your Replication Flow.

Select Target System

In this example, we replicate CDS Views from SAP S/4HANA to SAP Datasphere as the target system, so choose SAP Datasphere in the dialog.

Select SAP Datasphere

Note: The dialog shows only the connections that are supported as target systems in Replication Flows. The SAP Datasphere connection is created automatically in your SAP Datasphere system; you do not need to create it in the "Connection" application in SAP Datasphere, where you create connections to remote systems such as the SAP S/4HANA source connection in this example.

You will notice that the Target Container is automatically filled with the name of the space you are currently working in. This is expected: when SAP Datasphere is selected as the target system, a Replication Flow always loads the data into the local space in which it was created. Writing into another space in SAP Datasphere is not yet supported.

Target Container

After you select the target connection and target container, the target data set name for each replication object is automatically filled with the source data set name. The Replication Flow can either use a data set that already exists in the target (e.g., a pre-created target table) or create the target data set if it does not exist yet.

When a replication object is selected, you can click the Additional Options button next to the target data set name. The following options are available:

- Rename Target Object: rename the target data set, e.g., when you want the Replication Flow to create the target data set but with a different name.

- Map to Existing Target Object: map to a pre-created target data set (not available for object stores as targets).

- Change Container Path: change the path when using an object store as the target system.

Additional Options
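The naming rules described above can be summarized in a small sketch. It assumes, as a simplification, that a target data set which already exists under the resolved name is reused and otherwise created; `TargetChoice` and `resolve_target` are hypothetical names used only for illustration, not part of SAP Datasphere.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass
class TargetChoice:
    source_name: str
    rename_to: Optional[str] = None        # corresponds to "Rename Target Object"
    map_to_existing: Optional[str] = None  # corresponds to "Map to Existing Target Object"


def resolve_target(choice: TargetChoice, is_object_store: bool,
                   existing: Set[str]) -> Tuple[str, bool]:
    """Return (target data set name, whether it has to be created)."""
    if choice.rename_to and choice.map_to_existing:
        raise ValueError("use either rename or map to existing, not both")
    if choice.map_to_existing:
        if is_object_store:
            raise ValueError("mapping to an existing target object is not available for object stores")
        if choice.map_to_existing not in existing:
            raise ValueError(f"target object {choice.map_to_existing} does not exist")
        return choice.map_to_existing, False
    # Default: the target name equals the source name unless it was renamed.
    name = choice.rename_to or choice.source_name
    # Simplifying assumption: reuse an existing data set with that name, else create it.
    return name, name not in existing


print(resolve_target(TargetChoice("Z_CDS_EPM_SO"), is_object_store=False, existing=set()))
# ('Z_CDS_EPM_SO', True)
```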

The modelling user interface offers different configuration options for your Replication Flow, which are described in more detail in the following paragraphs:

- Target system specific settings on Replication Flow level

- Replication object specific settings on data set level (e.g., projections incl. filtering or options in the settings tab) using Object Properties panel.

Target system specific settings on Replication Flow level

For some target systems (e.g., object stores and Google BigQuery), you can define additional configurations by clicking the settings icon next to the selected target connection.

Target specific settings

- Connection-specific properties appear automatically in the user interface once a certain target connection is selected:

  - SAP Datasphere

    - Delta Capture (ON / OFF, default: ON). Please note that this setting is only available in the configuration panel explained later in this section.

  - Object Stores (HDL Files)

    - Group Delta By (Date, Time), which lets users define whether delta records are automatically grouped into folders by date or time.

    - File Type (CSV, Parquet, JSON, JSONLines)

    - Compression (for Parquet)

    - Delimiter (for CSV)

    - Header Line (for CSV)

    - Orient (for JSON)

  - Google BigQuery

    - Write Mode (Append)

    - Clamp Decimal Floating Point Data Types (True/False)

Example: Target Settings HDL_FILES
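The dependencies between the object store settings listed above (compression only for Parquet, delimiter and header line only for CSV, orient only for JSON) can be expressed as a small validation sketch. The class, field names and values such as `"SNAPPY"` are hypothetical and do not mirror an SAP API; only the dependencies themselves come from the list above.

```python
from dataclasses import dataclass
from typing import List, Optional

FILE_TYPES = {"CSV", "PARQUET", "JSON", "JSONLINES"}
GROUP_DELTA_BY = {None, "DATE", "TIME"}


@dataclass
class ObjectStoreTargetSettings:
    file_type: str                        # CSV, Parquet, JSON or JSONLines
    group_delta_by: Optional[str] = None  # group delta records into folders by DATE or TIME
    compression: Optional[str] = None     # only meaningful for Parquet
    delimiter: Optional[str] = None       # only meaningful for CSV
    header_line: Optional[bool] = None    # only meaningful for CSV
    orient: Optional[str] = None          # only meaningful for JSON

    def validate(self) -> List[str]:
        """Return a list of configuration problems (empty if consistent)."""
        errors = []
        file_type = self.file_type.upper()
        if file_type not in FILE_TYPES:
            errors.append(f"unknown file type: {self.file_type}")
        if self.group_delta_by not in GROUP_DELTA_BY:
            errors.append(f"unknown grouping: {self.group_delta_by}")
        if self.compression is not None and file_type != "PARQUET":
            errors.append("compression applies to Parquet only")
        if (self.delimiter is not None or self.header_line is not None) and file_type != "CSV":
            errors.append("delimiter and header line apply to CSV only")
        if self.orient is not None and file_type != "JSON":
            errors.append("orient applies to JSON only")
        return errors


print(ObjectStoreTargetSettings("Parquet", compression="SNAPPY").validate())  # []
print(ObjectStoreTargetSettings("CSV", compression="SNAPPY").validate())
# ['compression applies to Parquet only']
```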

Configure Replication Flow Settings

The following section explains which configuration options are available for Replication Flows in SAP Datasphere, including general settings valid for an entire Replication Flow as well as specific configurations on replication object level inside a Replication Flow.

For each selected source data set (a replication object in your Replication Flow), there are two ways to configure it:

- using the Projections and Settings tabs located in the middle of your Replication Flow

- using the configuration panel on the right-hand side of the modelling screen, which appears when a replication object is selected.

Settings

Configuration options using the Projections and Settings tabs

Settings

Load Type: Select the load type for each replication object: Initial Only or Initial and Delta. Initial Only loads the data via a full load without any change data capture (CDC) or delta capabilities. Initial and Delta performs the initial load of a data set followed by replicating all changes (inserts, updates, deletes) for this data set. In this case, the technical artefacts required on the source to initiate the delta process are created automatically.

Truncate: A check box that allows users to clean up the target data set, e.g., when a user wants to re-initialize the data replication with a new initial load.

Load Type
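The difference between the two load types and the Truncate option can be summarized as an ordered list of steps per run. This is a conceptual sketch with hypothetical names (`LoadType`, `plan_run`); in particular, creating the delta artefacts before the full load is an assumption based on how trigger-based CDC usually avoids missing changes made during the load, not a documented detail of Replication Flows.

```python
from enum import Enum
from typing import List


class LoadType(Enum):
    INITIAL_ONLY = "Initial Only"
    INITIAL_AND_DELTA = "Initial and Delta"


def plan_run(load_type: LoadType, truncate: bool) -> List[str]:
    """Return the conceptual steps of one run of a replication object."""
    steps = []
    if truncate:
        steps.append("truncate target data set")
    if load_type is LoadType.INITIAL_AND_DELTA:
        # Assumption: change recording (e.g., triggers and logging tables) is set
        # up before the full load so that changes made during the load are kept.
        steps.append("create delta artefacts on source")
    steps.append("initial (full) load")
    if load_type is LoadType.INITIAL_AND_DELTA:
        steps.append("replicate inserts, updates and deletes")
    return steps


print(plan_run(LoadType.INITIAL_ONLY, truncate=False))
# ['initial (full) load']
print(plan_run(LoadType.INITIAL_AND_DELTA, truncate=True))
# ['truncate target data set', 'create delta artefacts on source', 'initial (full) load', 'replicate inserts, updates and deletes']
```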

Projections

If no projections have been defined, this view is empty. To add a projection, follow the steps in the paragraph that explains the configuration options in the side panel.

Projections

By default, all supported columns of the source data set are replicated to the target data set using auto-mapping, i.e., with exactly the same column names in the source and target data set. You can use the mapping dialog to customize the standard mapping, e.g., if the column names differ. Additionally, you can remove columns that are not needed and create additional columns, which you can either map to existing columns or fill with constant values or pre-defined functions (e.g., CURRENT_TIME, CURRENT_DATE). More information about mapping capabilities can be found here: Replication Flow Mapping.

When browsing and selecting a pre-defined target data set, e.g., a table in SAP Datasphere, you cannot create additional columns, as the target structure is defined by the existing table. In such a case, you can either let the Replication Flow create a new target table or adjust the structure of the pre-created table.
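To illustrate what a projection does to each record, here is a minimal Python sketch that mimics auto-mapping by identical column names, renaming, removing columns, adding constant and function-based columns, and a row-level filter. The function `project` and its parameters are hypothetical, `datetime.date.today` merely stands in for CURRENT_DATE, and evaluating the filter on source column values is an assumption made for this example.

```python
import datetime as dt
from typing import Any, Callable, Dict, Iterable, Iterator, Optional

Row = Dict[str, Any]


def project(rows: Iterable[Row],
            renames: Optional[Dict[str, str]] = None,
            removed: Iterable[str] = (),
            constants: Optional[Dict[str, Any]] = None,
            functions: Optional[Dict[str, Callable[[], Any]]] = None,
            row_filter: Optional[Callable[[Row], bool]] = None) -> Iterator[Row]:
    """Apply a simple projection to source rows (conceptual sketch)."""
    renames = renames or {}
    constants = constants or {}
    functions = functions or {}
    dropped = set(removed)
    for row in rows:
        # Row-level filter, evaluated on the source column values.
        if row_filter is not None and not row_filter(row):
            continue
        # Auto-mapping: keep the source column name unless a rename is defined.
        out = {renames.get(col, col): value
               for col, value in row.items() if col not in dropped}
        out.update(constants)                                     # constant-valued columns
        out.update({col: fn() for col, fn in functions.items()})  # function-based columns
        yield out


source_rows = [
    {"SALESORDER": "1", "NETAMOUNT": 100, "NOTE": "urgent"},
    {"SALESORDER": "2", "NETAMOUNT": 5, "NOTE": "sample"},
]
result = list(project(
    source_rows,
    renames={"NETAMOUNT": "NET_AMOUNT"},
    removed=["NOTE"],
    constants={"SOURCE_SYSTEM": "S4H"},
    functions={"LOAD_DATE": dt.date.today},  # stand-in for CURRENT_DATE
    row_filter=lambda r: r["NETAMOUNT"] >= 10,
))
print(result)
```

Only the first row passes the filter; it arrives with `NET_AMOUNT` instead of `NETAMOUNT`, without `NOTE`, and with the two additional columns.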

Note: At the moment, a user can only define one projection per replication object, not multiple ones. In some cases, columns from the source data set are not visible in the dialog and are automatically removed. One possible reason is that the column uses a data type that is not yet supported by Replication Flows. You can check the following SAP Note for details: Important Considerations for Replication Flows in SAP Datasphere.

Configuration options using the replication object configuration panel

When selecting a replication object, the following configuration panel appears on the right in which you can perform various configurations for each individual replication object in your Replication Flow.

Settings

The available settings include:

- Adding a Projection

- Changing Settings, including the load type, the truncate option and specific configuration parameters that are also available via the other UI controls described in the previous paragraphs.

Note: This allows granular configuration of each individual replication object in case the settings on Replication Flow level are not sufficient.

After you have completed all required configurations, save the Replication Flow using the Save button in the top menu bar.

Save

The following pop-up will appear where you can specify the name of your Replication Flow.

Name of Replication Flow

Note: For Replication Flows, the business name and the technical name are identical. This cannot be changed.

As a next step, deploy the Replication Flow using the Deploy button in the top menu bar.

During deployment, several checks are performed in the background to verify that the Replication Flow fulfils all prerequisites and is ready to be executed.

The deployment process also makes sure that the necessary run-time artefacts are generated before you can start a Replication Flow.

Once the deployment has completed successfully, click the Run button to start your Replication Flow.

Run Replication Flow

Monitoring of Replication Flows

Monitoring Replication Flows is embedded inside the SAP Datasphere Data Integration Monitor application.

You can open the Data Integration Monitor either via the left-hand menu panel or, after you have run the Replication Flow, from inside the Data Builder using the Open in Data Integration Monitor icon in the top menu bar of your Replication Flow:

Directly open Data Integration Monitor application.

Monitoring Application

Monitoring of Replication Flows consists of two parts: the general Flow Monitor, which provides a high-level overview of the status of Replication Flows (and also Transformation Flows), and a detailed monitoring screen for each Replication Flow with information about each replication object.

When the Data Integration Monitor is opened via the left-hand menu panel, the user is redirected to its main page. From there, navigate to the Flow Monitor and use the filter option to view all Replication Flows in the local space.

Flow Monitoring

To switch to the detailed monitoring view of a specific Replication Flow, click the details button in the row of that Replication Flow.

Detailed Monitoring

In the detailed monitoring screen, you can access information such as the source and target connections, load statistics and the status of each replication object in the selected Replication Flow.

Monitoring replication objects

You can also check the Metrics tab for additional statistics such as the initial load duration and the number of records transferred during the initial and delta load phases.

Monitoring Metrics

Additional information can be also found here: Flow Monitoring in SAP Datasphere

This was the first detailed hands-on blog about Replication Flows, intended to give a general understanding of the functionality. The intention was to share as much as possible to help you succeed with your Replication Flow project. Over time, we will add more of these blogs to share the latest knowledge and hot topics.

Thanks to daniel.ingenhaag, hannes.keil, martin.boeckling and the rest of the SAP Datasphere product & development team who helped create this blog series.

The next blog explains premium outbound integration, a new enhancement for Replication Flows delivered with the latest release in mid-November. Stay tuned.
