Replicating table data from an SAP ECC system with...


A few months back, I wrote a post detailing how to replicate data from an ECC or S/4HANA system into Google BigQuery using SAP Data Intelligence. In that post, I used a data pipeline for the replication, chosen specifically to meet the requirements of Google BigQuery as a target (the same would have applied to a Snowflake target). For other targets, I could have used a feature called Replication Flows.

As you are aware, on March 8, 2023, SAP announced SAP Datasphere. This evolution of SAP Data Warehouse Cloud incorporates several key capabilities from SAP Data Intelligence, including Replication Flows. Currently, this feature supports replicating data into SAP Datasphere itself, SAP HANA Cloud, SAP HANA Data Lake Files, or SAP HANA (on-premise). Notably, the roadmap for Q4 2023 extends the target options to Google BigQuery, Google Cloud Storage, Amazon S3, and Microsoft Azure Data Lake Storage Gen2.

Customer inquiries quickly came in about how to replicate data using the capabilities of SAP Datasphere. For an S/4HANA system, this is straightforward thanks to CDS (Core Data Services) views. The core purpose of SAP Datasphere is to provide a business data layer, so that customers do not have to replicate table to table and go through the laborious, error-prone process of building models, but instead get business data that is directly usable out of the box. Still, one question came up repeatedly: how to replicate data from an SAP ECC source, more precisely, table to table. Although I would strongly advise against replicating table to table into a data lake and doing the modeling there, it is an architecture we still see. It may not remain common for many years, as more and more customers move to S/4HANA, but it is still a valid question.

For these customers, here is a blog explaining how to perform this table to table replication from an ECC system with SAP Datasphere using Replication Flows.

*IMPORTANT NOTICE*

EDIT (28/11/2023): This blog has had more impact than any of my other blogs, and I received many emails asking for further explanation of how I did this. Some customers were not able to see SLT as an option for replication flows on ECC systems. This is because they did not have a supported version of the DMIS addon. Here is the architecture I used to implement Replication Flows for ECC systems.

If you look at SAP Note 2890171, you will see that to use Replication Flows with the SLT Connector operator, you need an SLT system with DMIS 2018 SP06 / DMIS 2020 SP03 or higher. Unfortunately, you cannot install these DMIS versions on ECC EHP8, which runs on NetWeaver 7.50 and supports at most DMIS 2011. So for this POC, I used a separate box running NetWeaver 7.52, installed DMIS 2018 on it, and connected this SLT box to my ECC system through an RFC connection defined in SM59.

Remember that the ECC box also needs a DMIS version that is compatible with the DMIS version on the separate SLT box. For example, in this POC I used DMIS 2018 SP06, whose source compatibility is described in SAP Note 3010969. In this example, that meant I needed DMIS 2011 SP18 or higher on my ECC source system.
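Because these version rules are easy to mix up, here is a minimal Python sketch that encodes only the values quoted in this post (SAP Notes 2890171 and 3010969, NetWeaver 7.52 versus 7.50). The `Dmis` class and the function names are hypothetical, and the tables are not an official compatibility matrix: for any pairing the post does not mention, the sketch deliberately answers "unknown" (`None`), and you should check the SAP Notes themselves.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Dmis:
    """A DMIS add-on level (hypothetical model)."""
    release: int  # e.g. 2011, 2018, 2020
    sp: int       # support package level


# Minimum SP per DMIS release on the SLT box (SAP Note 2890171, as quoted in this post).
SLT_MINIMUM_SP = {2018: 6, 2020: 3}

# Minimum source-side DMIS per SLT DMIS release. Only the single pairing
# quoted in this post (SAP Note 3010969) is encoded here.
SOURCE_MINIMUM = {2018: Dmis(2011, 18)}

# NetWeaver release needed for DMIS 2018 SP06, written as an integer (752 = 7.52).
NW_MINIMUM_FOR_DMIS_2018 = 752


def slt_supports_replication_flows(slt: Dmis) -> bool:
    """Check the SLT box against the DMIS 2018 SP06 / DMIS 2020 SP03 floor."""
    if slt.release in SLT_MINIMUM_SP:
        return slt.sp >= SLT_MINIMUM_SP[slt.release]
    # Assumption: any release newer than 2020 counts as "or higher".
    return slt.release > max(SLT_MINIMUM_SP)


def source_compatible(source: Dmis, slt: Dmis) -> Optional[bool]:
    """True/False when this post quotes a rule for the pairing, None otherwise."""
    minimum = SOURCE_MINIMUM.get(slt.release)
    if minimum is None or source.release != minimum.release:
        return None  # no rule quoted here: check the SAP Note for your versions
    return source.sp >= minimum.sp


def can_host_dmis_2018(netweaver_release: int) -> bool:
    """ECC EHP8 runs on NetWeaver 7.50 (750), so this is False for it."""
    return netweaver_release >= NW_MINIMUM_FOR_DMIS_2018


if __name__ == "__main__":
    ecc_source = Dmis(2011, 18)  # DMIS on the ECC EHP8 box (NetWeaver 7.50)
    slt_box = Dmis(2018, 6)      # DMIS on the separate NetWeaver 7.52 box
    print(slt_supports_replication_flows(slt_box))     # True
    print(slt_supports_replication_flows(ecc_source))  # False
    print(source_compatible(ecc_source, slt_box))      # True
    print(can_host_dmis_2018(750))                     # False
```

The last check is the whole reason for the separate box: the ECC system itself can never satisfy the SLT-side requirement, so the SLT-side and source-side checks have to be run against two different systems.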

As SAP Datasphere customers are entitled to an SLT runtime license, just as with SAP Data Intelligence, this is a valid setup. The only drawback is that you need a separate SLT box, with valid DMIS versions on both the ECC system and the SLT system, for SAP Datasphere Replication Flows. I hope this makes everything a little clearer.

Before starting, I would like to thank Olivier SCHMITT for providing me with the SAP ECC system, along with the DMIS Addon and also for doing the installation of the SAP Cloud Connector, SAP Analytics Cloud Agent and SAP Java Connector on this box.

I would also like to thank Massinissa BOUZIT for his support throughout the whole process.

Here is the video:

EDIT (21/08/2023): Contrary to what I say in the video, and as a reader noted in the comments, the SAP Analytics Cloud Agent and SAP Java Connector are not needed for this specific use case of SAP Datasphere Replication Flows. You can also skip these steps in the SAP Cloud Connector configuration, and you don't need to specify and enable the Agent in the On-Premise Data Source configuration under SAP Datasphere Administration.

First, log in to your SAP Datasphere system, go to "System" > "Administration", and choose "Data Source Configuration".

Here you will find the information you need: Subaccount, Region Host, and Subaccount User (you will also need the subaccount user's password).

Next, install the SAP Cloud Connector in your on-premise landscape. In the video I also install the SAP Analytics Cloud Agent and the SAP Java Connector, but as noted in the edit above, they are not required for Replication Flows. I won't go through the installation process, as it is covered in the documentation.

As explained at the beginning of this blog, you will also need an SLT system, or the DMIS addon installed on a separate system, with DMIS 2018 SP06 / DMIS 2020 SP03 or higher. And once again, since DMIS 2018 SP06 requires at least NetWeaver 7.52, you cannot install this DMIS addon on your ECC system: ECC EHP8 only supports NetWeaver 7.50.

For this POC, I have the DMIS addon on a different box (an S/4HANA system), with my ECC system defined as an RFC connection.

I am sorry for the confusion as many customers tried to follow this blog with a DMIS 2011 on their ECC box and couldn't see SLT as an available option for their replication flows.

So back to the process, log into your installed SAP Cloud Connector.

Add your SAP Datasphere subaccount using all the information we collected from the SAP Datasphere system, and give it a specific Location ID. Make a note of it, as you will need it later.

Now configure Cloud to On-Premise access for the subaccount you just created: click Cloud to On-Premise and select the subaccount.

Add two virtual mappings to internal systems: one for your SLT system or DMIS addon box, and one for the SAP Analytics Cloud Agent. In my case, the DMIS addon is on a different box than my SAP ECC system; I called it ecc6ehp8, but it is actually an S/4HANA system. You then have to enter the following resources for the SAP system, as explained in the help.

For the SAP Analytics Cloud Agent, you will also have to enter a resource.

The setup on the SAP Cloud Connector is now complete, so we can go back to SAP Datasphere.

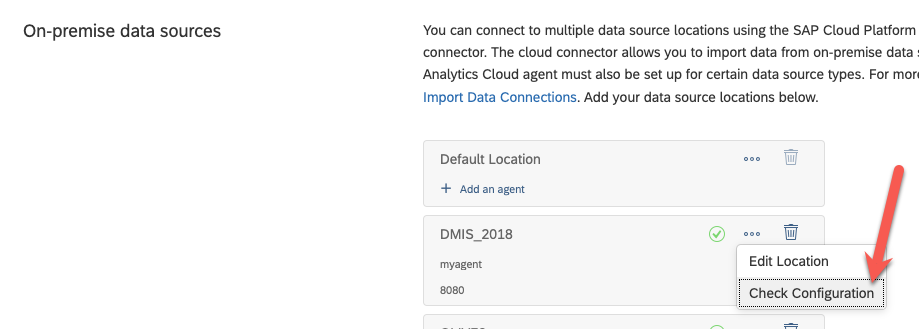

Here we are going to configure the location we created in the SAP Cloud Connector. Go back to "System" > "Administration" > "Data Source Configuration" and, at the bottom, add a new location:

Use the same Location ID as in the SAP Cloud Connector. For the host, enter the host of your SAP Analytics Cloud Agent, along with its user and password. By default, these are Agent/Agent.

Check that everything is working.

Now we can create the connection to our ECC system (actually to the SLT system)

Use the information from the SAP Cloud Connector: the Location ID, the virtual host name you used for your mapping, and so on. And of course, don't forget to configure the SAP Cloud Connector!
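Several values have to match exactly across tools: the Location ID defined in the SAP Cloud Connector is reused in SAP Datasphere, and the connection must point to the virtual host name of one of your mappings (ecc6ehp8 in my case). As a minimal sketch, assuming hypothetical class and field names that do not correspond to any SAP API, the cross-check looks like this in Python:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class VirtualMapping:
    """One Cloud to On-Premise mapping in the SAP Cloud Connector (hypothetical model)."""
    virtual_host: str   # name exposed to SAP Datasphere, e.g. "ecc6ehp8"
    internal_host: str  # real host of the SLT / DMIS box


@dataclass(frozen=True)
class CloudConnectorSubaccount:
    location_id: str
    mappings: Tuple[VirtualMapping, ...]


@dataclass(frozen=True)
class DatasphereConnection:
    """Values entered in the SAP Datasphere connection (hypothetical model)."""
    location_id: str
    virtual_host: str


def find_mismatches(scc: CloudConnectorSubaccount, conn: DatasphereConnection) -> List[str]:
    """Return a list of problems; an empty list means the values line up."""
    problems: List[str] = []
    if conn.location_id != scc.location_id:
        problems.append(
            f"Location ID {conn.location_id!r} differs from Cloud Connector {scc.location_id!r}"
        )
    if conn.virtual_host not in {m.virtual_host for m in scc.mappings}:
        problems.append(f"no virtual mapping named {conn.virtual_host!r}")
    return problems


if __name__ == "__main__":
    scc = CloudConnectorSubaccount(
        "DSP_LOC",  # hypothetical Location ID
        (VirtualMapping("ecc6ehp8", "slt-box.internal"),),  # hypothetical internal host
    )
    print(find_mismatches(scc, DatasphereConnection("DSP_LOC", "ecc6ehp8")))  # []
    print(find_mismatches(scc, DatasphereConnection("OTHER_LOC", "ecc6ehp8")))
```

A mismatch in either value is worth ruling out first when the connection validation fails.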

Verify your connection and check that Replication Flows are configured correctly.

Now we can configure the SLT system. Launch transaction SM59:

Add a new ABAP connection to your SAP ECC system:

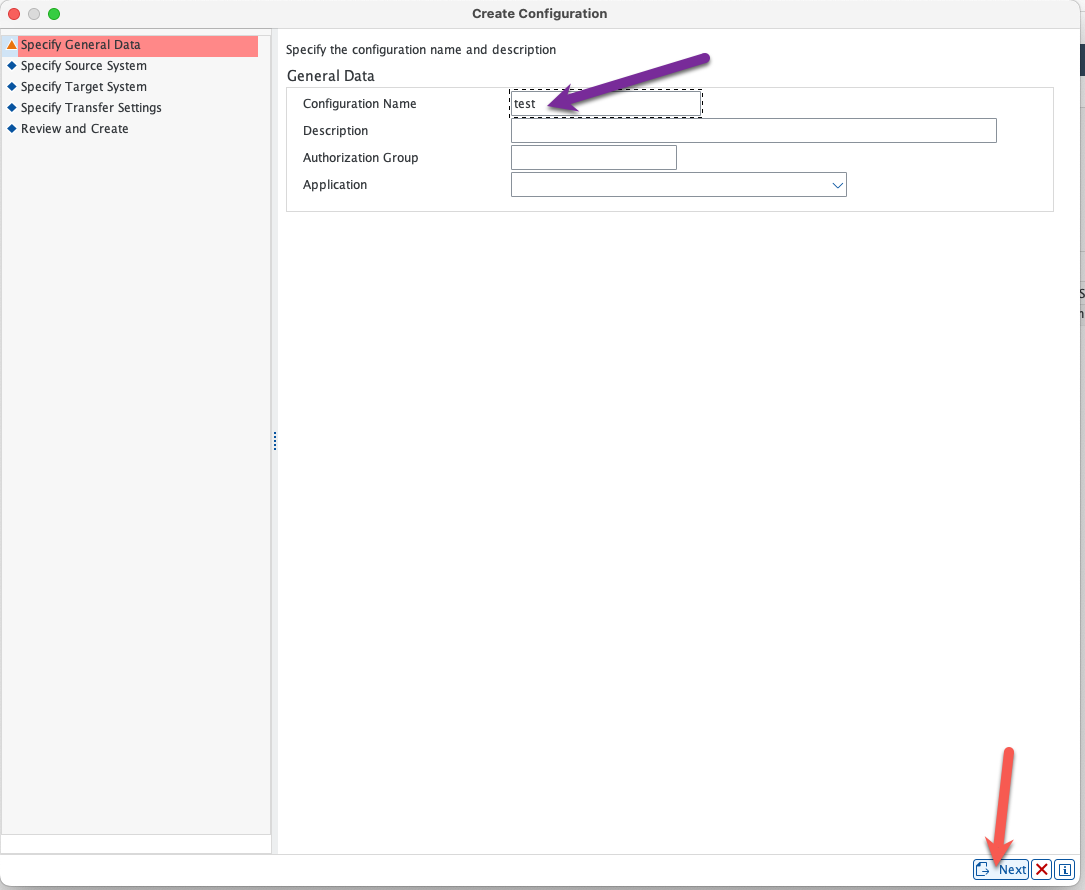

Now go to transaction LTRC, the SAP Landscape Transformation Replication Server Cockpit and create a new configuration.

Give it a name and click next.

Select the RFC connection to your SAP ECC system that you just created in transaction SM59:

For the target system, choose "Other" and "SAP Data Intelligence (Replication Management Service)". As explained in the introduction, this is a feature from SAP Data Intelligence that was carried over into SAP Datasphere.

Specify whether you want real-time replication or not. Please note that there is currently a limitation of one hour for replication, even with Real Time turned on.

Finish and make a note of the mass transfer ID of your configuration. We will not add tables here, as this is done on the SAP Datasphere side. Note also that "NONE" is displayed as the target even though we specified SAP Data Intelligence Replication Management Service; this is normal.

All the configuration steps are now finished, and we can start replicating our data. Please note that for big tables, like MARA in this example, you will also have to partition the table to avoid an error message and a failed replication flow. I will not go into the details of partitioning tables here. For small tables, this is not an issue.
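Partitioning itself is set up on the SLT side and is not covered in this post. Purely to illustrate the underlying idea (splitting one large table load into several smaller portions), here is a generic Python sketch that cuts a sorted list of keys into contiguous ranges. The function name and the zero-padded, MATNR-like sample keys are hypothetical; this is not how SLT itself computes partitions.

```python
from typing import List, Sequence, Tuple


def split_into_ranges(sorted_keys: Sequence[str], parts: int) -> List[Tuple[str, str]]:
    """Split sorted keys into at most `parts` contiguous, non-overlapping (low, high) ranges."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    n = len(sorted_keys)
    if n == 0:
        return []
    parts = min(parts, n)  # never create an empty range
    ranges = []
    for i in range(parts):
        start = i * n // parts
        end = (i + 1) * n // parts - 1
        ranges.append((sorted_keys[start], sorted_keys[end]))
    return ranges


if __name__ == "__main__":
    # Hypothetical 18-character, zero-padded material numbers.
    keys = [f"{i:018d}" for i in range(1, 11)]
    for low, high in split_into_ranges(keys, 3):
        print(low, "->", high)
```

The ranges are contiguous and cover every key exactly once, which is the property any partitioning scheme needs so that no rows are loaded twice or skipped.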

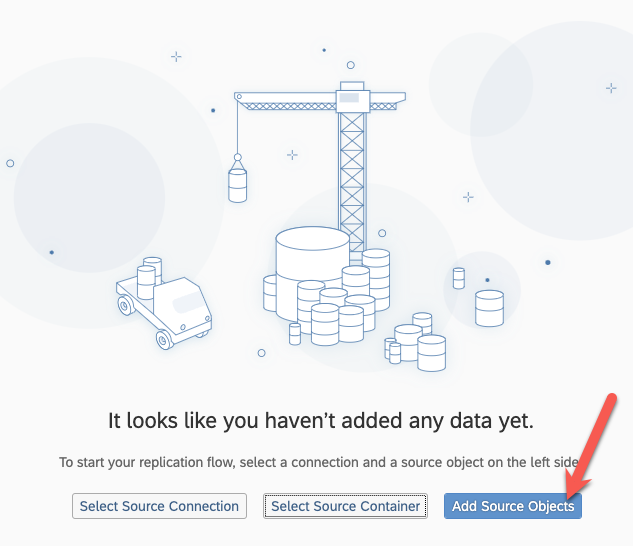

Back in SAP Datasphere, go to the Data Builder and create a new Replication Flow:

Select your source:

Select the connection you created previously:

Select the source container:

Select SLT as the source container, and then the mass transfer ID of the configuration you previously created on the SLT system:

Add the source objects:

Search for the tables you wish to replicate, select them, and add them:

Select them again on the next screen and add the selection:

Specify the behavior of your replication flow (Initial Load, Delta):
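To make the two load types more concrete, here is a conceptual Python model (not SAP's implementation) of what an initial load followed by delta replication does to a target table: the initial load copies a full snapshot keyed by primary key, and each delta then replays change records in order. The operation codes "I", "U" and "D" and the record layout are assumptions for illustration; the MARA field MTART (material type) is only used as sample data.

```python
from typing import Dict, Iterable, Tuple

Row = Dict[str, str]
# (operation, primary key, row image): hypothetical change-record layout
Change = Tuple[str, str, Row]


def initial_load(snapshot: Iterable[Tuple[str, Row]]) -> Dict[str, Row]:
    """Copy a full snapshot into a new target keyed by primary key."""
    return {key: dict(row) for key, row in snapshot}


def apply_delta(target: Dict[str, Row], changes: Iterable[Change]) -> None:
    """Replay change records in order: inserts/updates upsert, deletes remove."""
    for op, key, row in changes:
        if op in ("I", "U"):
            target[key] = dict(row)
        elif op == "D":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation {op!r}")


if __name__ == "__main__":
    target = initial_load([("1", {"MTART": "FERT"}), ("2", {"MTART": "ROH"})])
    apply_delta(target, [
        ("U", "1", {"MTART": "HALB"}),
        ("D", "2", {}),
        ("I", "3", {"MTART": "ROH"}),
    ])
    print(target)  # {'1': {'MTART': 'HALB'}, '3': {'MTART': 'ROH'}}
```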

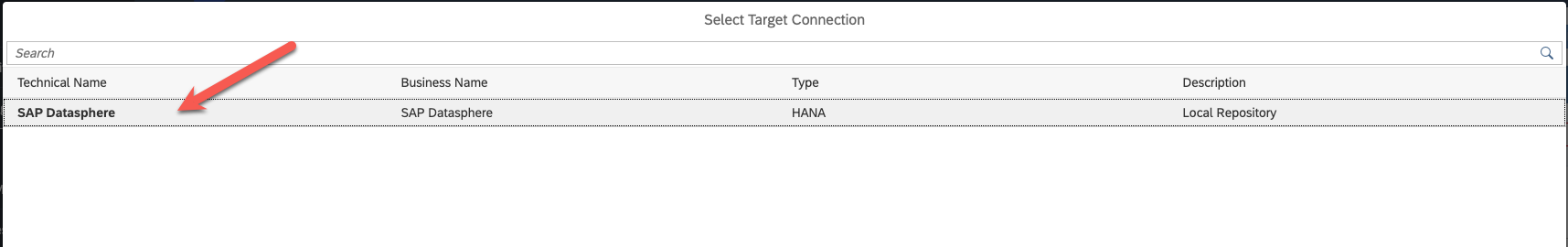

And select your target, in my case, SAP Datasphere itself.

Now, save, deploy and run! You can go to the Data Integration Monitor to see if everything is going smoothly.

Go to the Flow Monitor and select your replication flow:

As I said, the many errors you see below occurred because I didn't partition my MARA table, which was too big. Once I did, it all worked.

Go back to Data Builder and verify that the data was loaded correctly.

Click to preview your data, and you should be all set!

I hope this was useful for you. Don't hesitate to leave a comment if you have a question. Once again, I would like to thank Olivier SCHMITT for providing me with the SAP ECC system and the DMIS Addon, and for installing the SAP Cloud Connector, SAP Analytics Cloud Agent, and SAP Java Connector on this box. I would also like to thank Massinissa BOUZIT for his support throughout the whole process, especially with the SLT configuration and with partitioning the MARA table for me.

And if you wish to learn more about SAP Datasphere, find out how to unleash the power of your business data with SAP's free learning content on SAP Datasphere. It's designed to help you enrich your data projects, simplify the data landscape, and make the most of your investment. Check out even more role-based learning resources and opportunities to get certified, all in one place on the SAP Learning site.
