Let's add 2 Custom Embedding models to SAP AI CORE

NOTE: The views and opinions expressed in this blog are my own

I recently wrote a blog, "Share corporate info with an LLM using Embeddings", which gives a very high-level overview of embeddings for LLMs using SAP AI CORE.

There are several different styles of Embeddings such as:

- Word2Vec: Uses a shallow neural network to predict the context of a word from its neighboring words. It is efficient and easy to use, but struggles with rare words and with capturing complex word relationships. Patented by Google in 2013 but generally considered superseded by Transformer models.

- GloVe (Global Vectors for Word Representation): Employs co-occurrence statistics to capture global word relationships, effectively grasping semantic connections but potentially struggling with syntactic relationships.

- Transformer-based Models (e.g. GPT-3.5): Revolutionized NLP with attention mechanisms and multi-layer architectures, producing contextual embeddings that capture both the meaning and the context of words and sentences, albeit requiring significant computational resources for both training and inference.

All the attention (excuse the pun) these days is on the Embeddings from Transformer models.

Check out the Massive Text Embedding Benchmark (MTEB) Leaderboard.

Now, as we go a little deeper in our attempts to compare Embeddings on Enterprise data, let's add 2 additional models:

- Word2Vec - the old timer

- WhereIsAI/UAE-Large-V1 - the new kid on the block [as of 20 Feb 2024 it is ranked 6th overall on MTEB, and it is currently the top-ranked small model under 7 GB]

Both are small enough to run on CPU, which is great for some preliminary tests.

Yes, you can run them both on your laptop, but that's not Enterprise grade, so let's run them on SAP AI CORE.

The process for deploying is pretty simple so I won't elaborate all the steps here, but I would recommend the tutorial Deploy a custom ML Python service on SAP AI CORE for more detail on the steps involved.

The pre-requisites to proceed are: SAP AI Core (Extended plan preferably), Docker Hub account.

Create a requirements.txt file:

    Flask
    angle_emb
    torch
    gensim

Create a server.py file:

    # This code uses the AnglE library (https://github.com/WhereIsAI/angle_emb)
    # based on the following paper:
    # Li, Xianming and Li, Jing. AnglE-optimized Text Embeddings. arXiv preprint arXiv:2309.12871, 2023.
    # https://huggingface.co/WhereIsAI/UAE-Large-V1
    # This code also refers to https://radimrehurek.com/gensim/models/word2vec.html

    from flask import Flask, request, jsonify

    # AnglE
    import torch
    from angle_emb import AnglE, Prompts

    # Word2Vec
    import numpy as np
    import gensim.downloader as genapi

    app = Flask(__name__)

    # Load the AnglE model. Move it to the GPU only if one is available,
    # so the same image also starts on a CPU-only resource plan.
    angle = AnglE.from_pretrained('WhereIsAI/UAE-Large-V1', pooling_strategy='cls')
    if torch.cuda.is_available():
        angle = angle.cuda()
    angle.set_prompt(prompt=Prompts.C)

    # Load pre-trained Word2Vec model (downloaded on first start)
    word2vec_model = genapi.load("word2vec-google-news-300")


    @app.route('/embeddings/uae', methods=['POST'])
    @app.route('/v1/embeddings/uae', methods=['POST'])
    def uae():
        try:
            # Get JSON data from the request
            data = request.get_json()
            # Wrap the text in the dict format expected by the AnglE prompt
            prompt = {'text': data['text']}
            # Encode the prompt to a vector
            vec = angle.encode(prompt, to_numpy=True)
            # Convert the vector to a string
            vec_str = str(vec[0].tolist())
            # Return the vector string as JSON response
            return jsonify({'vector': vec_str})
        except Exception as e:
            return jsonify({'error': str(e)})


    @app.route('/embeddings/w2v', methods=['POST'])
    @app.route('/v1/embeddings/w2v', methods=['POST'])
    def w2v():
        try:
            # Get JSON data from the request
            data = request.get_json()
            # Extract the prompt from the JSON data and split it on whitespace
            prompt = data['text']
            words = prompt.split()
            vectors = []
            for word in words:
                try:
                    vectors.append(word2vec_model[word])
                except KeyError:
                    # If the word is not found in the vocabulary, skip it
                    pass
            # Calculate the mean of all word vectors to get the sentence vector
            if vectors:
                sentence_vector = np.mean(vectors, axis=0)
            else:
                # If no word vectors were found, return a vector of zeros
                sentence_vector = np.zeros(word2vec_model.vector_size)
            # Return the vector string as JSON response
            vec_str = np.array2string(sentence_vector, separator=',',
                                      formatter={'float_kind': lambda x: "%.8f" % x})
            return jsonify({'vector': vec_str})
        except Exception as e:
            return jsonify({'error': str(e)})


    if __name__ == '__main__':
        # app.run(debug=True)
        app.run(host='0.0.0.0', port=5000, debug=False)
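The `/embeddings/w2v` route turns a sentence into a single vector by averaging the vectors of the words it finds in the vocabulary. Here is a minimal, dependency-free sketch of just that idea. The 3-dimensional `TOY_VECTORS` vocabulary and the `sentence_vector` helper are made up for illustration and are not part of gensim; the real model has 300 dimensions.

```python
from statistics import fmean

# Hypothetical toy vocabulary standing in for the real Word2Vec model
TOY_VECTORS = {
    "black": [1.0, 0.0, 2.0],
    "panther": [3.0, 4.0, 0.0],
}


def sentence_vector(text, vectors, size):
    """Average the vectors of all known words; unknown words are skipped."""
    found = [vectors[word] for word in text.split() if word in vectors]
    if not found:
        # No known words at all: fall back to a zero vector, as server.py does
        return [0.0] * size
    # zip(*found) groups the values dimension by dimension
    return [fmean(dimension) for dimension in zip(*found)]


if __name__ == "__main__":
    print(sentence_vector("black panther", TOY_VECTORS, 3))
    print(sentence_vector("black unicorn", TOY_VECTORS, 3))
```

Note that `split()` only splits on whitespace, so a token such as `panther,` is looked up with the comma attached and will most likely be skipped as unknown.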

Create a Dockerfile:

    # This Dockerfile builds a container with a Flask app that uses the AnglE library
    # based on the following paper:
    # Li, Xianming and Li, Jing. AnglE-optimized Text Embeddings. arXiv preprint arXiv:2309.12871, 2023.
    # https://huggingface.co/WhereIsAI/UAE-Large-V1

    # Base image
    FROM python:3.9

    # Set the working directory in the container to /app
    WORKDIR /app

    # Copy the server and its requirements into the container at /app
    COPY server.py /app/
    COPY requirements.txt /app/

    # Install any needed packages specified in requirements.txt
    RUN pip install --no-cache-dir -r requirements.txt

    # Enable permission to execute anything inside the folder app
    RUN chgrp -R 65534 /app && \
        chmod -R 777 /app

    # Expected with AI CORE deployments
    RUN mkdir -p /nonexistent
    RUN chmod -R 777 /nonexistent

    EXPOSE 5000

    # Run server.py when the container launches
    CMD ["python", "-m", "server", "--host=0.0.0.0"]

Now you need to build, test and push the docker image to Docker Hub:

    docker build -t <your account>/sap-ai-test-embeddings:latest .
    docker run -p 5000:5000 <your account>/sap-ai-test-embeddings:latest
    docker login -u <your account>
    docker push <your account>/sap-ai-test-embeddings:latest

NOTE: You can test locally by running server.py directly or running the docker image:

    ### Test Embedding UAE
    POST http://localhost:5000/embeddings/uae
    Content-Type: application/json

    {
        "text" : "black panther"
    }

    ### Test Embedding W2V
    POST http://localhost:5000/embeddings/w2v
    Content-Type: application/json

    {
        "text" : "black panther"
    }
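If you would rather test from Python than from a REST client, the same calls can be sketched with the standard library only. This is a rough sketch, not a finished client: `build_request`, `parse_vector` and `embed` are names I made up here, and it assumes both endpoints return the vector as a string that happens to be a valid JSON array.

```python
import json
import urllib.request


def build_request(base_url, model, text):
    """Build (but do not send) the POST request for the 'uae' or 'w2v' endpoint."""
    return urllib.request.Request(
        url=f"{base_url}/embeddings/{model}",
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_vector(response_body):
    """Turn the server's JSON response into a list of floats."""
    payload = json.loads(response_body)
    if "error" in payload:
        raise RuntimeError(payload["error"])
    # The 'vector' field is itself a string such as "[0.1, -0.2, ...]"
    return [float(value) for value in json.loads(payload["vector"])]


def embed(base_url, model, text):
    """Send the request and return the embedding (needs a running server)."""
    with urllib.request.urlopen(build_request(base_url, model, text), timeout=120) as resp:
        return parse_vector(resp.read().decode("utf-8"))


if __name__ == "__main__":
    vector = embed("http://localhost:5000", "w2v", "black panther")
    print(len(vector))
```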

Now, in a private git repo linked to SAP AI CORE, create the file custom-embeddings/service-custom-embeddings.yaml:

    apiVersion: ai.sap.com/v1alpha1
    kind: ServingTemplate
    metadata:
      name: custom-embeddings
      annotations:
        scenarios.ai.sap.com/description: "Run a custom embeddings server on AI Core"
        scenarios.ai.sap.com/name: "custom-embedding-scenario"
        executables.ai.sap.com/description: "Run a custom embeddings server on AI Core"
        executables.ai.sap.com/name: "custom-embedding-executable"
      labels:
        scenarios.ai.sap.com/id: "custom-embedding-server"
        ai.sap.com/version: "0.1"
    spec:
      template:
        apiVersion: "serving.kserve.io/v1beta1"
        metadata:
          annotations: |
            autoscaling.knative.dev/metric: concurrency
            autoscaling.knative.dev/target: 1
            autoscaling.knative.dev/targetBurstCapacity: 0
          labels: |
            ai.sap.com/resourcePlan: basic
        spec: |
          predictor:
            minReplicas: 1
            maxReplicas: 1
            containers:
            - name: kserve-container
              image: <your account>/sap-ai-test-embeddings
              ports:
                - containerPort: 5000
                  protocol: TCP

In SAP AI CORE Administration add a new application:

After a short while you should see the new ML Operations Scenario appear:

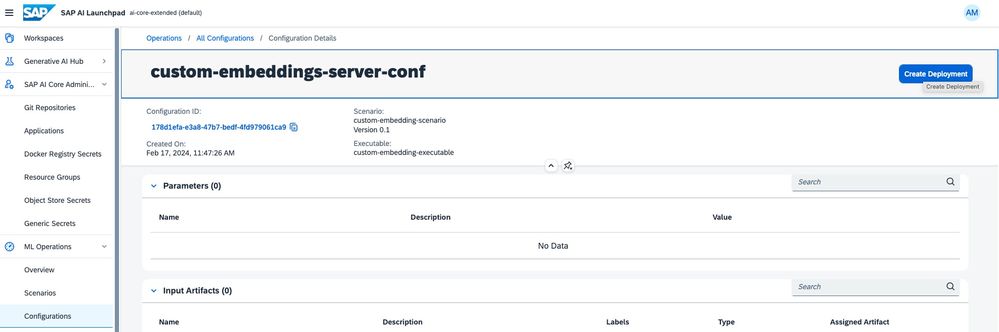

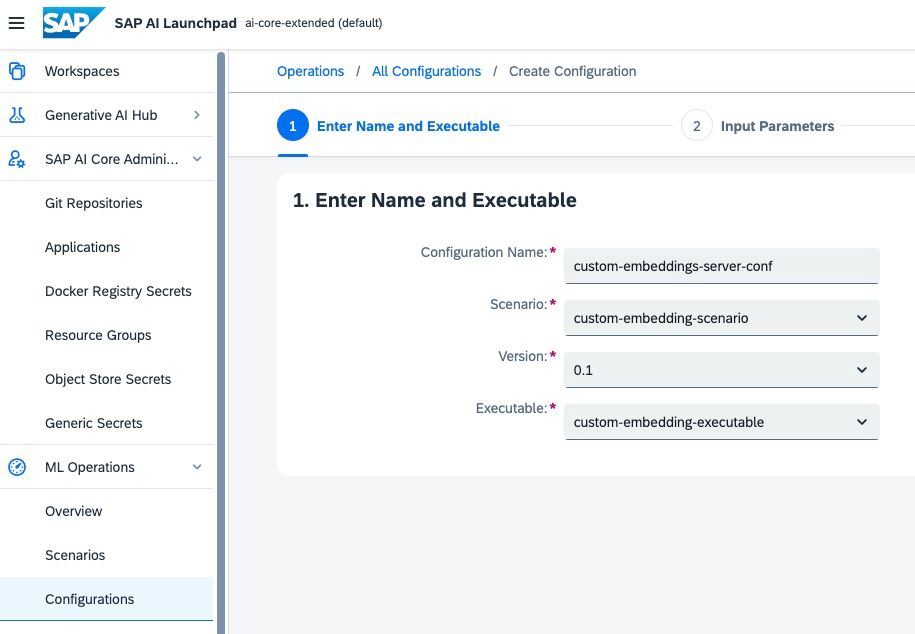

Next create the configuration.

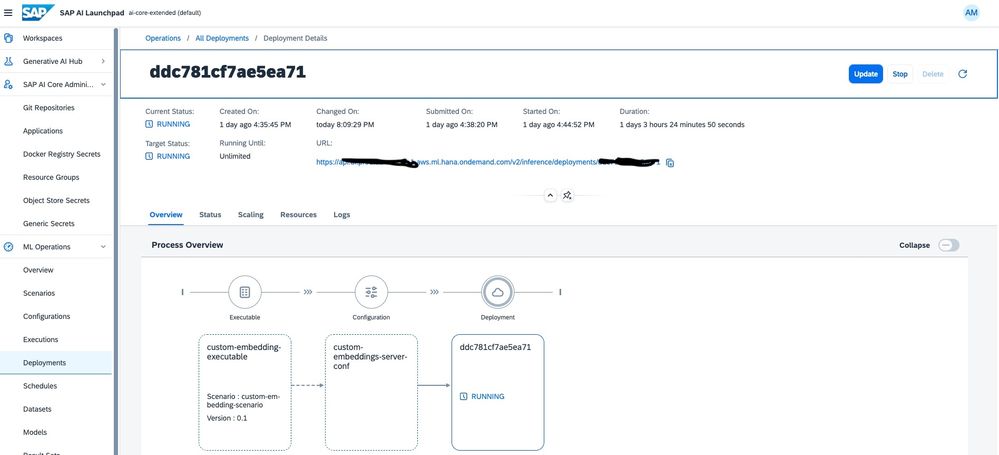

Next Deploy.

Finally check that the Deployment is running.

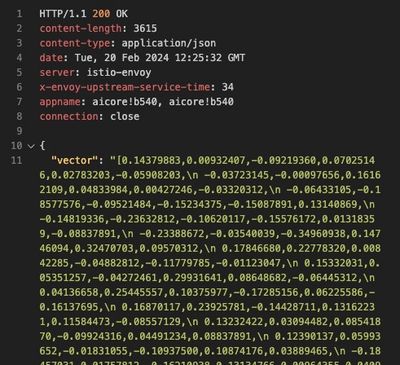

Now let's check that the 2 new Embeddings work when running on SAP AI CORE:

    @auth='Bearer ......'

    ### Test Embedding UAE
    POST <your deployment url>/v1/embeddings/uae
    Content-Type: application/json
    Authorization: {{auth}}
    ai-resource-group: default

    {
        "text" : "black panther"
    }

    ### Test Embedding W2V
    POST <your deployment url>/v1/embeddings/w2v
    Content-Type: application/json
    Authorization: {{auth}}
    ai-resource-group: default

    {
        "text" : "black panther"
    }
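Compared with the local test, the only differences are the deployment URL prefix and the two extra headers. A small Python sketch of the same request follows. The helper name `build_ai_core_request` and the example URL and token are placeholders I made up; obtaining the bearer token is not shown.

```python
import json
import urllib.request


def build_ai_core_request(deployment_url, token, text, model="uae", resource_group="default"):
    """Build (but do not send) an authenticated request to a deployed endpoint."""
    return urllib.request.Request(
        url=f"{deployment_url.rstrip('/')}/v1/embeddings/{model}",
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
            # Resource group the deployment was created in
            "AI-Resource-Group": resource_group,
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values: replace with your own deployment URL and token
    request = build_ai_core_request(
        "https://example.invalid/v2/inference/deployments/<deployment id>",
        "<token>",
        "black panther",
        model="w2v",
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request, timeout=120)
```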

If all went well you should be getting Embedding results like:

[Screenshots: UAE-Large-V1 result (1024 dimensions) and Word2Vec result (300 dimensions)]

The good news is that if you followed this blog and the previous one you will have 4 Embedding Models running that you can test with your business scenarios:

| Model | Details |
|---|---|
| Azure OpenAI text-embedding-ada-002 | 1536 Dimensions, hosted externally, 175B parameters. Currently Ranked 38 for Embeddings |
| Ollama running Microsoft/Phi-2 | 2560 Dimensions, hosted on SAP AI CORE, 2.7B parameters, infer.s [GPU]. Not Ranked |
| WhereIsAI/UAE-Large-V1 | 1024 Dimensions, hosted on SAP AI CORE, 335M parameters, basic [CPU]. Currently Ranked 6 for Embeddings |
| Word2Vec (google-news-300) | 300 Dimensions, hosted on SAP AI CORE, basic [CPU]. Not Ranked |

The bad news is this blog is getting a bit long, so I will need to test and compare them in my next blog in the series.
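As a small taste of what such a comparison involves, embeddings are usually scored against each other with cosine similarity. Below is a minimal sketch in plain Python. `cosine_similarity` is my own helper name, and treating an all-zero vector (which the Word2Vec endpoint returns when no word is known) as similarity 0.0 is an assumption made for convenience.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        # Vectors from different models (e.g. 1024 vs 300 dimensions) are not comparable
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Assumption: an all-zero vector is treated as "no similarity"
        return 0.0
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    print(cosine_similarity([3.0, 4.0], [6.0, 8.0]))  # same direction
    print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # orthogonal
```

Scores are only meaningful between vectors produced by the same model, so each of the 4 models needs to be evaluated on its own pairs of texts.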

In the meantime I welcome your feedback below.

SAP notes that posts about potential uses of generative AI and large language models are merely the individual poster’s ideas and opinions, and do not represent SAP’s official position or future development roadmap. SAP has no legal obligation or other commitment to pursue any course of business, or develop or release any functionality, mentioned in any post or related content on this website.
