- Integrating GPT Chat API with SAP CAP for Advanced...

06-20-2023

12:13 PM

This blog post is part of a series of technical enablement sessions on SAP BTP for Industries. Check the full calendar here to watch the recordings of past sessions and register for upcoming ones. The replay of this session is available here.

Authors: yatsea.li, amagnani, edward.neveux, jacobtan

Disclaimer: SAP notes that posts about potential uses of generative AI and large language models are merely the individual poster's ideas and opinions, and do not represent SAP's official position or future development roadmap. SAP has no legal obligation or other commitment to pursue any course of business, or develop or release any functionality, mentioned in any post or related content on this website.

Introduction

Large Language Models (LLMs) like ChatGPT and GPT-4 have gained significant popularity thanks to their emergent capabilities. In this fourth session of the series, we’ll explore the potential of GPT in the SAP ecosystem and discuss how SAP partners can combine this AI technology with SAP technologies to accelerate their solution development and create more intelligent solutions on SAP Business Technology Platform (SAP BTP).

This post belongs to the blog post series Exploring the potential of GPT in SAP ecosystem.

In our previous blog post, we saw GPT’s advanced text processing capabilities on customer messages through prompt engineering, such as text summarization, sentiment analysis, and entity extraction.

In this blog post, we will integrate those prompts into the Intelligent Ticketing Solution so that customer messages are processed with GPT through its Chat API. Additionally, we will implement the LLM Proxy Service shown in the architecture diagram.

A glimpse into the Chat API of GPT

Let us start with a look into GPT’s Chat API.

In the playground, we can examine the underlying API request to Azure OpenAI Service when submitting a prompt by clicking "View Code" in the Chat session. Let us consider the example of analyzing the sentiment of an input text.

In the following diagram, you can find the API endpoint and the API key used to access the GPT Chat API. The request body of the Chat API consists of a system-role message for the instruction and a user-role message for the user input, along with other parameters such as temperature and max_tokens.

For more details about the Chat API, please refer to the Azure OpenAI Service API Reference.
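To make the request structure described above more concrete, here is a minimal sketch that assembles the URL, headers, and body of a sentiment analysis call. The resource name, deployment name, API version, instruction text, and parameter values are placeholder assumptions for illustration, not values taken from the sample solution.

```javascript
// Sketch: assemble a Chat API request for Azure OpenAI Service.
// Every concrete value passed in below (resource, deployment, api-version,
// instruction) is an illustrative assumption.
function buildChatRequest({ resource, deployment, apiVersion, apiKey }, instruction, text) {
  return {
    url: `https://${resource}.openai.azure.com/openai/deployments/${deployment}` +
      `/chat/completions?api-version=${apiVersion}`,
    headers: { 'Content-Type': 'application/json', 'api-key': apiKey },
    body: {
      messages: [
        { role: 'system', content: instruction }, // the instruction
        { role: 'user', content: text }           // the user input message
      ],
      temperature: 0,  // assumed: low randomness for a classification task
      max_tokens: 100  // assumed: upper bound on the length of the reply
    }
  };
}

const request = buildChatRequest(
  {
    resource: 'my-resource',
    deployment: 'gpt-35-turbo',
    apiVersion: '2023-05-15',
    apiKey: '<your-api-key>'
  },
  'Classify the sentiment of the text as positive, neutral, or negative.',
  'The delivery was late and nobody answered my calls.'
);
console.log(JSON.stringify(request.body, null, 2));
```

Keeping the request assembly in a pure function like this makes it easy to inspect or unit test the payload before any network call is made.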

Using the prompts through GPT’s Chat API in NodeJS with SAP CAP

Next, let us look at sample code that performs the API call in SAP CAP Node.js, using the same sentiment analysis prompt we saw previously.

Please refer to gpt-api-samples for Node.js samples that use the Chat API of GPT-3.5 and later models and the Embedding API of the text-embedding-ada-002 (Version 2) model in Azure OpenAI Service through four options:

- langchain: A popular open-source framework for developing applications powered by large language models. With LangChain, you can work with multiple Large Language Models, such as OpenAI GPT models or Meta LLaMA models.

- @azure/openai module: The Azure OpenAI client library for JavaScript is an adaptation of OpenAI's REST APIs that provides an idiomatic interface and rich integration with the rest of the Azure SDK ecosystem.

- azure-openai module: A fork of the official OpenAI Node.js library that has been adapted to support the Azure OpenAI API.

- Direct HTTP call with the axios module: Making a direct HTTP call to the RESTful API of GPT using any HTTP client in Node.js, such as axios or request.
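As a rough illustration of the last option, the sketch below sends the chat messages through an injected, axios-compatible client and extracts the reply. The function name `callChatApi`, the stub client, and the parameter values are assumptions made for this example; the actual samples live in gpt-api-samples and may look different.

```javascript
// Sketch: call the Chat API through an injected, axios-compatible client.
// `httpClient` only needs a post(url, data, config) method that resolves to
// an object with a `data` property, which is the shape of an axios response.
async function callChatApi(httpClient, url, headers, messages) {
  const response = await httpClient.post(
    url,
    { messages, temperature: 0, max_tokens: 100 }, // assumed parameter values
    { headers }
  );
  // Chat completions carry the reply in choices[0].message.content.
  const choice = response.data.choices && response.data.choices[0];
  if (!choice) throw new Error('The LLM response contained no choices');
  return choice.message.content;
}

// Stub client for a dry run without network access.
const stubClient = {
  post: async () => ({
    data: {
      choices: [{ message: { role: 'assistant', content: '{"sentiment":"negative"}' } }]
    }
  })
};

callChatApi(stubClient, 'https://example.invalid/chat/completions', { 'api-key': 'dummy' }, [
  { role: 'system', content: 'Classify the sentiment of the text.' },
  { role: 'user', content: 'The delivery was late.' }
]).then((reply) => console.log(reply));
```

In a real project, you would pass the axios module itself as `httpClient`, together with the real endpoint and API key. Injecting the client keeps the function testable without calling the LLM.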

Implementing a reusable, LLM-agnostic and configurable LLM Proxy Service

Integrating a single prompt into an SAP CAP application using GPT’s Chat API is quite straightforward.

However, what if you have multiple prompts that need to be integrated?

What if the prompts have dynamic input parameters?

What if you need flexibility, instead of being locked into a specific Large Language Model?

It is therefore good practice to implement a Large Language Model Proxy Service in your SAP CAP solution, following these design principles:

- It makes sense to have a reusable service that handles all the integrations with Large Language Model APIs, instead of scattering the integration glue code throughout the application.

- Being Large-Language-Model-agnostic will give you the flexibility to choose a Large Language Model based on your specific requirements.

- Making prompts configurable through a configuration mechanism, rather than hard-coding them as static and fixed prompts in the code, will provide greater flexibility and ease of maintenance.

In our sample intelligent ticketing solution, we have implemented such a Large Language Model Proxy Service based on these principles. Next, we will explore its design and implementation details.

Turning the Prompts into a custom generic RESTful API in SAP CAP

In the previous blog post, Prompt Engineering for Advance Text Processing on Customer Messages, we saw that all the prompts share the same structure: Instruction, User Input (Input Indicator, Text Message, and Output Indicator), and Response in JSON.

Take text summarization and sentiment analysis, for example: the only differences are the instruction and the response.

It is therefore possible to abstract the prompts as a custom generic RESTful API in SAP CAP, as illustrated in the diagram below. The details of the prompts and of the LLM API access are hidden in a JSON configuration file, config.json, and are looked up through the concept of use_case.
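Because the prompts share one structure, a single builder can serve every use case. The following is a minimal sketch of that idea; the instruction texts, the indicator strings, and the names `buildMessages` and `parseJsonReply` are assumptions for illustration and are not taken from the sample solution.

```javascript
// Sketch: one generic prompt builder shared by all use cases.
// The instruction and indicator strings below are illustrative assumptions.
const prompts = {
  'text-summarization': {
    instruction: 'Summarize the customer message in at most 50 words. ' +
      'Respond in JSON as {"summary": "..."}.',
    inputIndicator: 'Text:',
    outputIndicator: 'Output in JSON:'
  },
  'sentiment-analysis': {
    instruction: 'Classify the sentiment of the customer message as positive, ' +
      'neutral, or negative. Respond in JSON as {"sentiment": "..."}.',
    inputIndicator: 'Text:',
    outputIndicator: 'Output in JSON:'
  }
};

// Combine the shared structure: the instruction becomes the system message;
// input indicator, text message, and output indicator form the user input.
function buildMessages(prompt, text) {
  return [
    { role: 'system', content: prompt.instruction },
    { role: 'user', content: `${prompt.inputIndicator} ${text}\n${prompt.outputIndicator}` }
  ];
}

// The prompts ask for JSON, so the reply is parsed before it is returned.
function parseJsonReply(reply) {
  try {
    return JSON.parse(reply);
  } catch (err) {
    throw new Error(`LLM reply is not valid JSON: ${reply}`);
  }
}

const messages = buildMessages(prompts['sentiment-analysis'], 'Great service, thank you!');
console.log(messages[1].content);
console.log(parseJsonReply('{"sentiment": "positive"}').sentiment);
```

Only the entries in `prompts` differ between use cases, which is what allows them to move out of the code and into configuration.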

The API only requires two simple input parameters:

- use_case: For example, text-summarization or sentiment-analysis. It identifies the associated prompt and API access information in the configuration.

- text: The text of the inbound customer message to be responded to.

Configuration of LlmProxyService

The diagram below illustrates how a use_case such as sentiment-analysis is used to look up its associated prompt details and API access information in config.json. In this way, we make the LlmProxyService configurable.
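Since the diagram is not reproduced here, the sketch below shows one possible shape for such a configuration and its lookup. Only the names api_base_url and api_auth appear in this post; the overall layout, the other field names, and the `resolveUseCase` helper are hypothetical and may differ from the actual config.json.

```javascript
// Hypothetical shape of config.json, inlined as an object for illustration.
// Only api_base_url and api_auth are names taken from the post.
const config = {
  llms: {
    'azure-openai': {
      api_base_url: 'https://my-resource.openai.azure.com/openai/deployments/gpt-35-turbo',
      api_auth: 'api-key'
    }
  },
  use_cases: {
    'sentiment-analysis': {
      llm: 'azure-openai',
      instruction: 'Classify the sentiment of the customer message.',
      temperature: 0,
      max_tokens: 100
    }
  }
};

const hasOwn = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Resolve a use case to its prompt details and API access information.
function resolveUseCase(cfg, useCase) {
  if (!hasOwn(cfg.use_cases, useCase)) {
    throw new Error(`Unknown use_case: ${useCase}`);
  }
  const prompt = cfg.use_cases[useCase];
  if (!hasOwn(cfg.llms, prompt.llm)) {
    throw new Error(`No LLM configured for use_case: ${useCase}`);
  }
  return { prompt, api: cfg.llms[prompt.llm] };
}

const resolved = resolveUseCase(config, 'sentiment-analysis');
console.log(resolved.api.api_auth, resolved.prompt.max_tokens);
```

With a layout like this, adding a use case or switching it to another LLM becomes a configuration change rather than a code change.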

Service Definition of LlmProxyService

In llm-proxy-service.cds, a generic action named invokeLLM() is defined, along with its output schema, CustomerMsgResultType. The action can be invoked from outside SAP CAP through a generic RESTful API, or from within SAP CAP through a direct action call on LlmProxyService, and it covers all integration with LLM APIs.

Authentication to LLM API

In our sample LlmProxyService implementation, we chose a direct HTTP call via axios to the LLM API. This makes it possible to stay LLM-agnostic by configuring the LLM API access through settings such as “api_base_url” and “api_auth”. The same could also be achieved with LangChain.

Let’s have a look at the authentication to the LLM API. The implementation, which can be found here, supports both OpenAI and Azure OpenAI Service through the configuration values api_auth: “bearer-api-key” and api_auth: “api-key”, respectively.
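The two authentication styles differ only in the HTTP header that carries the key: OpenAI expects an Authorization header with a Bearer token, while Azure OpenAI Service expects an api-key header. A minimal sketch of that switch could look as follows; the function name `buildAuthHeaders` is an assumption, and the linked implementation may be organized differently.

```javascript
// Sketch: derive the authentication header from the api_auth setting.
// "bearer-api-key" -> OpenAI style:       Authorization: Bearer <key>
// "api-key"        -> Azure OpenAI style: api-key: <key>
function buildAuthHeaders(apiAuth, apiKey) {
  switch (apiAuth) {
    case 'bearer-api-key':
      return { Authorization: `Bearer ${apiKey}` };
    case 'api-key':
      return { 'api-key': apiKey };
    default:
      throw new Error(`Unsupported api_auth: ${apiAuth}`);
  }
}

console.log(buildAuthHeaders('bearer-api-key', 'sk-dummy'));
console.log(buildAuthHeaders('api-key', 'azure-dummy'));
```

Failing fast on an unknown api_auth value surfaces configuration mistakes before any request is sent.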

Implementation of action invokeLLM() in LlmProxyService

Let’s have a look at the implementation details of action invokeLLM() in LlmProxyService.

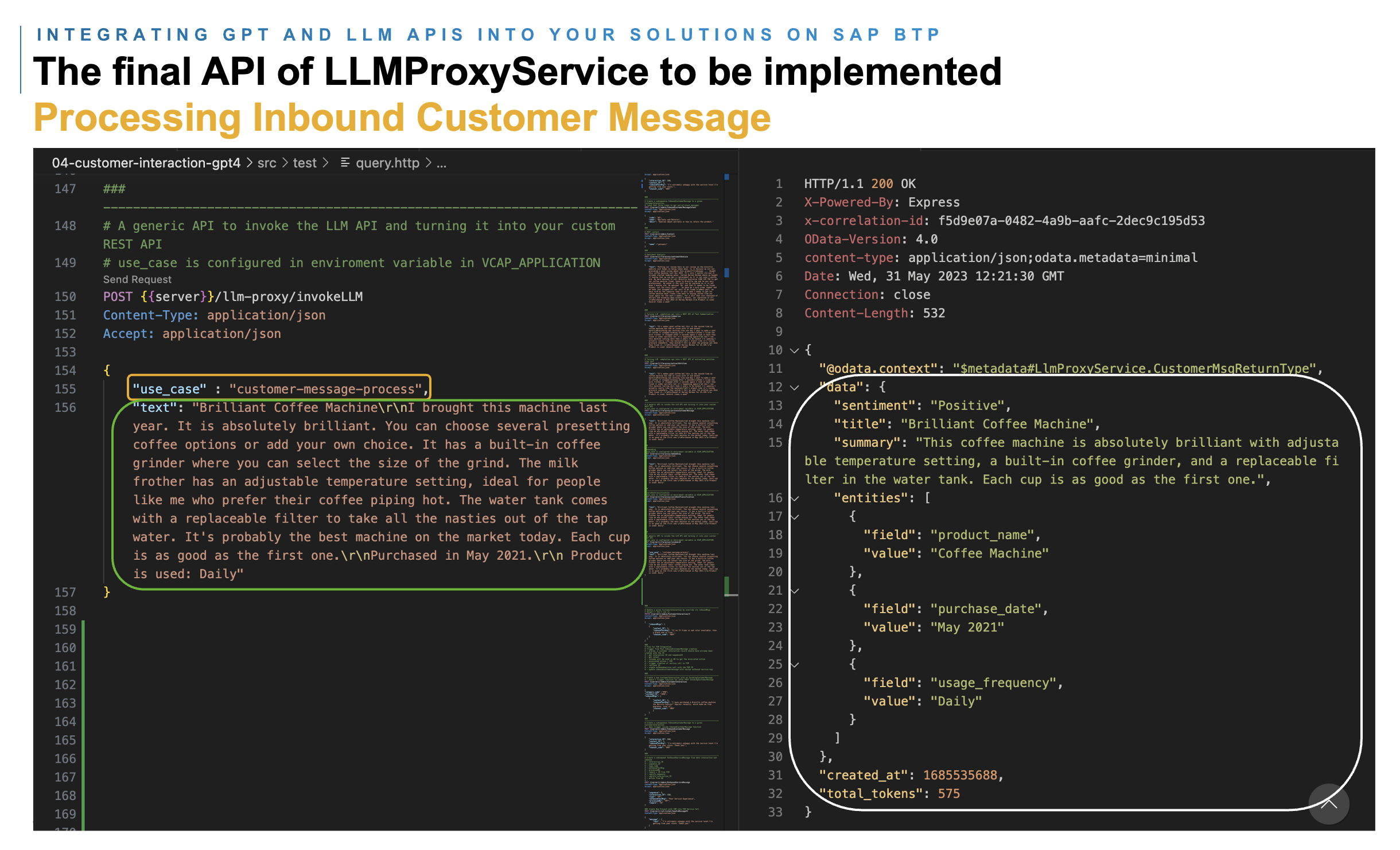

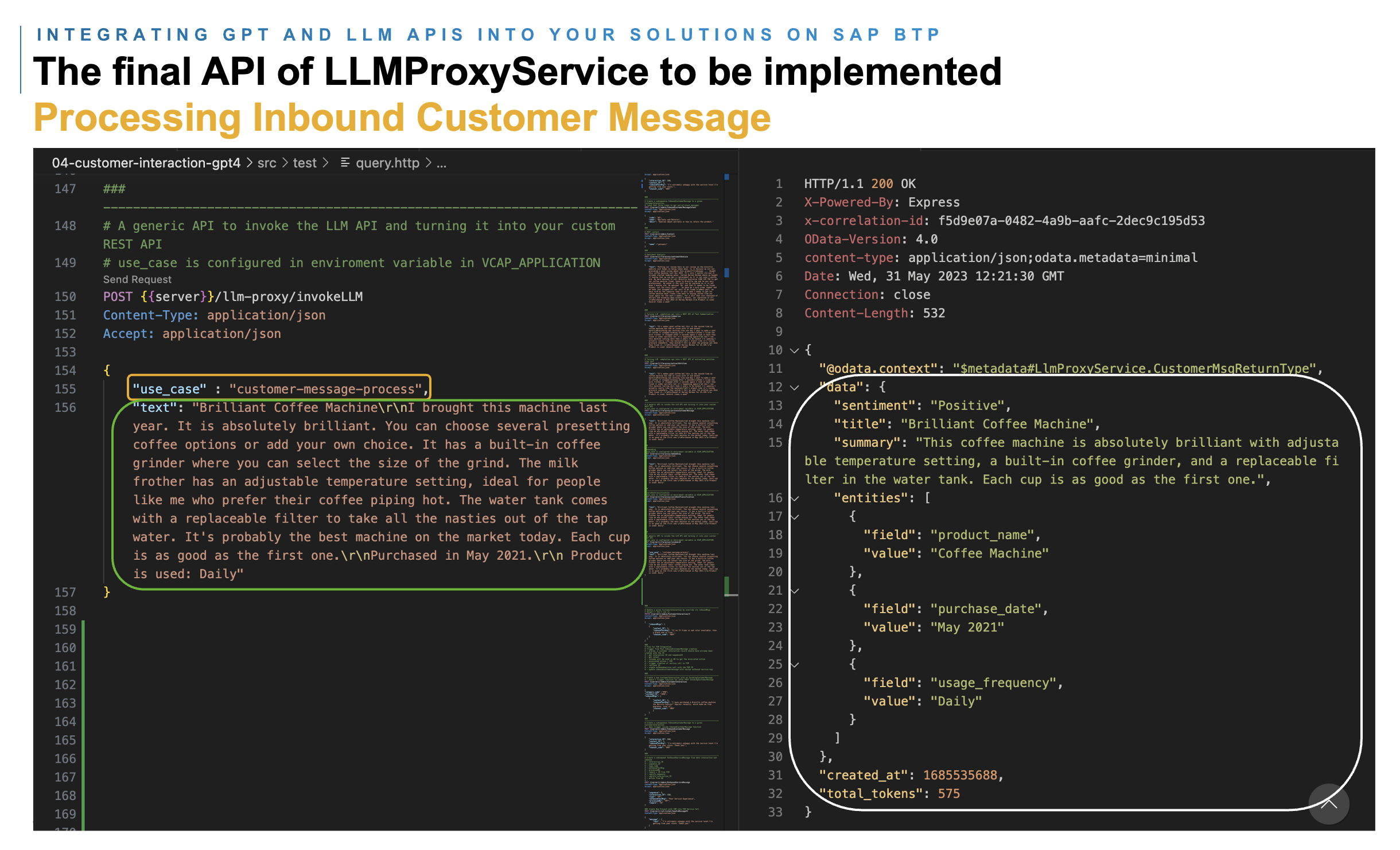
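For readers who cannot view the screenshots, here is a simplified sketch of how such an action handler might be wired up in SAP CAP Node.js. It is not the code from the sample solution: `resolvePrompt` and `callLLM` are hypothetical helpers that are passed in, and the status codes are assumptions. The sketch relies only on the CAP handler registration `srv.on(...)`, the action parameters in `req.data`, and `req.reject(...)`.

```javascript
// Sketch of a handler for the invokeLLM action.
// `resolvePrompt` and `callLLM` are hypothetical helpers, injected so the
// sketch stays independent of any particular configuration or LLM client.
function registerInvokeLLM(srv, resolvePrompt, callLLM) {
  srv.on('invokeLLM', async (req) => {
    const { use_case, text } = req.data; // the two action parameters
    if (!use_case || !text) {
      return req.reject(400, 'use_case and text are required');
    }
    const prompt = resolvePrompt(use_case); // look up the configured prompt
    if (!prompt) {
      return req.reject(400, `Unknown use_case: ${use_case}`);
    }
    const reply = await callLLM(prompt, text); // raw text returned by the LLM
    try {
      return JSON.parse(reply); // the prompts ask for a JSON response
    } catch (err) {
      return req.reject(502, 'The LLM did not return valid JSON');
    }
  });
}

// Dry run with stand-ins for the CAP service and request objects.
const handlers = {};
const fakeSrv = { on: (event, handler) => { handlers[event] = handler; } };
registerInvokeLLM(
  fakeSrv,
  (useCase) => (useCase === 'sentiment-analysis'
    ? { instruction: 'Classify the sentiment.' }
    : undefined),
  async () => '{"sentiment": "negative"}'
);
const fakeReq = {
  data: { use_case: 'sentiment-analysis', text: 'The delivery was late.' },
  reject: (code, message) => { throw new Error(`${code}: ${message}`); }
};
handlers.invokeLLM(fakeReq).then((result) => console.log(result));
```

In a CAP project, a registration function like this would typically be called from the service implementation file that sits next to llm-proxy-service.cds, with the real configuration lookup and LLM call passed in.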

Test on Final REST API of invokeLLM

Finally, let us run some tests on the REST API of invokeLLM in LlmProxyService with the following use cases:

- use_case: sentiment-analysis

- use_case: customer-message-process

Further resources

- SAP and generative AI

- The source code of the sample Intelligent Ticketing Solution

- gpt-api-samples in NodeJS

- LangChain

Wrap up

We have seen how to integrate the prompts into an SAP CAP Node.js application with GPT’s Chat API, and how to implement a generic, reusable, and configurable LLM Proxy Service that handles all integration with LLM APIs and turns the prompts into a custom RESTful API in SAP CAP. This brings flexibility in selecting LLMs and ease of maintenance.
