- Harnessing the Power of SAP HANA Cloud Vector Engi...

Introduction

In the rapidly evolving world of business, the ability to extract meaningful insights from data is paramount. One technology that has been making waves is the Large Language Model (LLM), known for its ability to understand and generate human language in a contextually relevant way. However, the full potential of LLMs is unlocked only when they are supplied with rich, business-specific knowledge. This is where the SAP HANA Cloud Vector Engine comes into play.

The SAP HANA Cloud Vector Engine stores vector embeddings next to your business data in SAP HANA Cloud and runs similarity searches over them, which makes it a natural companion for LLMs. By integrating an LLM with the Vector Engine, businesses can build a context-aware architecture whose answers are grounded in their own documents rather than in the model's general training data alone.

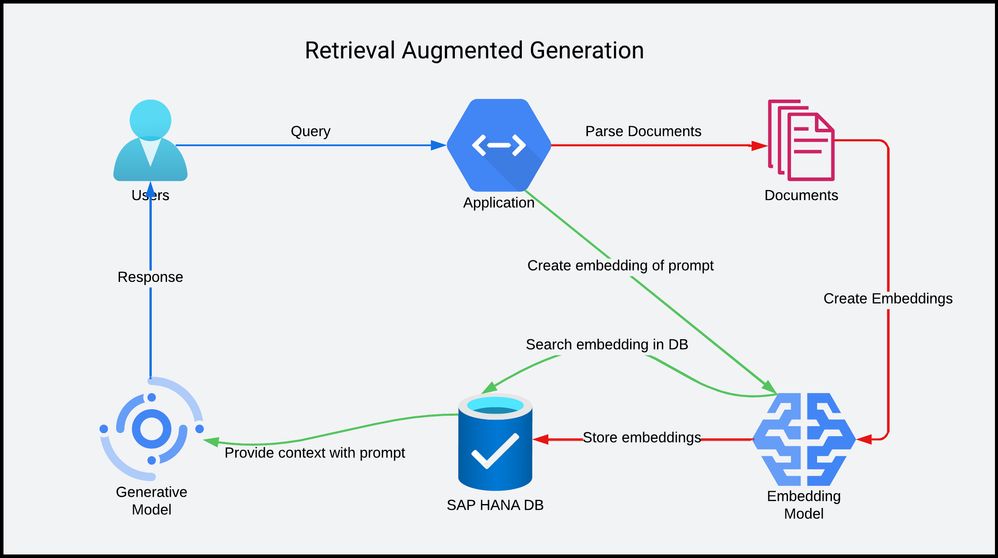

In this blog post, we will delve into the process of leveraging SAP HANA Cloud Vector Engine to build a context-aware LLM architecture using a Retrieval-Augmented Generation (RAG) approach. We will explore how this integration can bridge the gap between LLMs and your business data, leading to more intelligent and insightful interactions. Whether you are a data scientist, a business analyst, or a tech enthusiast, this blog post will provide you with valuable insights into the future of business intelligence. So, let's get started!

Content:

- Important Terms

- Technical Implementation (Demo App):

  - Initial Setup for Python App

  - Crafting Python App REST Endpoints

  - Establishing the LLM and DB context

  - Parse the uploaded documents (.pptx, .pdf, .docx)

  - Generate and store embeddings in SAP HANA DB

  - Interacting with the Language Model using Context from Vector DB

- Key benefits of using Retrieval-Augmented Generation (RAG) architecture

- Conclusion

- References

Important Terms:

Embeddings: In the context of machine learning, embeddings are a type of word representation that captures various aspects of a word's meaning. They are capable of capturing context of a word in a document, semantic and syntactic similarity, relation with other words, etc. Embeddings transform high-dimensional data into low-dimensional vectors, making it easier to perform machine learning tasks.

Embedding Model: An embedding model is a way to reduce the dimensionality of input data, such as text. It represents complex data in a lower-dimensional space, which is easier to work with. These models are often used in natural language processing (NLP) to map words or phrases from a vocabulary to vectors of real numbers.

Generative Model: A generative model in machine learning learns the probability distribution of its training data and can produce new samples from it. For text, this means generating new tokens given the input so far. Generative models contrast with discriminative models, which only learn to predict a label for a given input.

SAP HANA Vector Engine: The SAP HANA Vector Engine is the part of SAP HANA Cloud that stores and processes vector data. It stores embeddings in the built-in REAL_VECTOR data type and offers built-in similarity measures, so semantic search can run in the same database that holds the business data.

Retrieval-Augmented Generation (RAG): RAG is a method that combines the benefits of retrieval-based models and generative models for machine learning tasks. In the context of language models, it uses a retriever to find relevant context from a document collection, and a generator to create a response from the retrieved context.

Large Language Model (LLM): A large language model is a type of artificial intelligence model that understands and generates human language. LLMs are used in various applications, including translation, question answering, and text summarization.
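To make the idea of embeddings concrete, the sketch below compares three hand-made vectors with cosine similarity, a measure commonly used to judge how close two embeddings are. The three-dimensional vectors and their labels are invented for illustration; a real embedding model produces vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings" (hypothetical values, not produced by a real model).
invoice = [0.9, 0.1, 0.0]
billing = [0.8, 0.2, 0.1]
holiday = [0.0, 0.2, 0.9]

print(cosine_similarity(invoice, billing))  # close to 1: similar meaning
print(cosine_similarity(invoice, holiday))  # close to 0: unrelated
```

Texts with related meaning end up with vectors that point in a similar direction, which is what the retrieval step later in this post relies on.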

Technical Implementation (Demo App):

1) Initial Setup for Python App

Add the relevant libraries to the requirements.txt file:

pdfminer.six==20201018
langchain~=0.1.5
requests~=2.31.0
python-pptx==0.6.21
python-docx==0.8.11
langchain_openai~=0.1.1
python-dotenv==1.0.1
hdbcli==2.20.15
flask-cors~=3.0.10
Flask==3.0.3

Add the required environment variables to a .env file in the root directory:

HANA_DB_ADDRESS=host
HANA_DB_USER=user
HANA_DB_PORT=port
HANA_DB_PASSWORD=password
HANA_TABLE=HANA_TABLE_NAME
OPENAI_API_KEY=your_openai_api_key
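A missing entry in the .env file tends to surface later as a confusing connection or authentication error, so it can help to fail fast at startup. The helper below is a sketch and not part of the original app: the name missing_env_vars is hypothetical, while the variable names are the ones listed above.

```python
import os

REQUIRED_VARS = [
    "HANA_DB_ADDRESS",
    "HANA_DB_PORT",
    "HANA_DB_USER",
    "HANA_DB_PASSWORD",
    "HANA_TABLE",
    "OPENAI_API_KEY",
]


def missing_env_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing settings:", ", ".join(missing))
    else:
        print("All required settings are present.")
```

In the app, a check like this would run right after load_dotenv() has read the .env file.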

2) Crafting Python App REST Endpoints:

In this proof of concept (PoC), we will be developing two key REST endpoints. These endpoints will serve as the backbone of our application, enabling us to interact with the Language Model (LLM) and manage document uploads effectively.

The first endpoint is designed to handle document parsing and uploading. It is capable of processing a variety of document formats, including PDF, PPTX, and DOCX. This endpoint will extract the text from these documents and prepare it for further processing.

The second endpoint is where the magic happens. This endpoint allows us to interact with the LLM, providing it with the relevant business context in the prompt. This ensures that the LLM's responses are not only accurate but also contextually relevant to the business scenario at hand.

from flask import Flask, request

import chatbot
import parseAndUploadDocuments
# Assumption: the class from step 3 is saved as llm_context.py;
# adjust the module name to match your project.
from llm_context import LLMContextInitializer

app = Flask(__name__)

# Created once at startup and shared by both endpoints
llmContext = LLMContextInitializer()


@app.route("/chat", methods=["POST"])
def chat():
    # The request body is the user's question as plain text
    request_data = request.data.decode('utf-8')
    # chat_with_llm already returns the answer as a string
    return chatbot.chat_with_llm(llmContext, request_data)


@app.route("/parse_and_upload_file", methods=["POST"])
def parse_and_upload_file():
    # Check if files were included in the request
    if 'files[]' not in request.files:
        return "No files uploaded", 400

    # Ignore empty file fields, then reject unsupported types before parsing
    files = [f for f in request.files.getlist('files[]') if f.filename != '']
    for file in files:
        if not parseAndUploadDocuments.allowed_file(file.filename):
            return "Unsupported file type, please upload only pdf, word or ppt files", 400

    parseAndUploadDocuments.parse_and_upload_documents(files, llmContext)
    return "Files parsed and uploaded successfully"
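The upload endpoint calls parseAndUploadDocuments.allowed_file, which is not shown in this post. One plausible shape for it, assuming it only inspects the file extension (the ALLOWED_EXTENSIONS name is our own), could be:

```python
import os

# Assumption: the three formats named in this post; the original helper is not shown.
ALLOWED_EXTENSIONS = {".pdf", ".pptx", ".docx"}


def allowed_file(filename):
    """Return True if the filename ends with a supported extension."""
    _, ext = os.path.splitext(filename)
    return ext.lower() in ALLOWED_EXTENSIONS
```

An extension check is only a first filter. A file whose content does not match its extension will still fail later in the parser.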

3) Establishing the LLM and DB context

This section explains the process of importing the necessary libraries, configuring access to the OpenAI models, setting up the database connection, and defining the LLMContextInitializer class. This setup is crucial for the application because it establishes the foundation for interacting with the Language Model and the SAP HANA database.

The LLMContextInitializer class serves as a container for the components of the application: the Language Model (llm), the embedding model (embeddings), the vector store (db), the retriever (retriever), the conversation memory (memory), and the question-answering chain (qa_chain).

These components are initialized in the __init__ method of the class. The embedding model and the Language Model are created through the langchain_openai package (OpenAIEmbeddings and ChatOpenAI). The vector store and the retriever are built on the previously established database connection. Finally, the memory and the question-answering chain are set up, with the chain configured to use the Language Model, the retriever, and the custom prompt.

# Import necessary libraries
import getpass
import os

from dotenv import load_dotenv
from hdbcli import dbapi
from langchain.chains import ConversationalRetrievalChain
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate
from langchain_community.vectorstores.hanavector import HanaDB
from langchain_openai import OpenAIEmbeddings, ChatOpenAI

# Load the .env file first so that OPENAI_API_KEY and the HANA settings are available
load_dotenv()

# Fall back to an interactive prompt only if the key is not configured
if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API key: ")

prompt_template = """
You are provided multiple context items that are related to the prompt you have to answer.
Use the following pieces of context to answer the question at the end.

```
{context}
```

Question: {question}
"""

PROMPT = PromptTemplate(
    template=prompt_template, input_variables=["context", "question"]
)

# Connect to the database using environment variables
connection = dbapi.connect(
    address=os.environ.get("HANA_DB_ADDRESS"),
    port=os.environ.get("HANA_DB_PORT"),
    user=os.environ.get("HANA_DB_USER"),
    password=os.environ.get("HANA_DB_PASSWORD"),
    autocommit=True,
    sslValidateCertificate=False,
)


class LLMContextInitializer:
    """Holds the LLM, embedding model, vector store, retriever, memory and QA chain."""

    def __init__(self):
        self.prompt = PROMPT
        self.embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")
        self.llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
        # Vector store backed by the SAP HANA table named in HANA_TABLE
        self.db = HanaDB(
            connection=connection,
            embedding=self.embeddings,
            table_name=os.environ.get("HANA_TABLE")
        )
        # Retrieve the 5 most similar chunks for every question
        self.retriever = self.db.as_retriever(search_kwargs={"k": 5})
        self.memory = ConversationBufferMemory(
            memory_key="chat_history", output_key="answer", return_messages=True
        )
        self.qa_chain = ConversationalRetrievalChain.from_llm(
            self.llm,
            self.retriever,
            return_source_documents=True,
            memory=self.memory,
            verbose=False,
            combine_docs_chain_kwargs={"prompt": PROMPT}
        )
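To see what the model actually receives, the sketch below fills the same template by hand with plain str.format. It is a simplification: in the app LangChain does this inside the chain, joining the chunks with a blank line is an assumption made here for readability, and the backtick delimiters around {context} are left out. The sample chunks and the question are invented.

```python
PROMPT_TEMPLATE = """
You are provided multiple context items that are related to the prompt you have to answer.
Use the following pieces of context to answer the question at the end.

{context}

Question: {question}
"""


def build_prompt(chunks, question):
    """Insert retrieved text chunks and the user question into the template."""
    context = "\n\n".join(chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question)


# Invented example data standing in for chunks returned by the retriever.
chunks = [
    "Travel expenses must be submitted within 30 days.",
    "Receipts are required for amounts above 25 EUR.",
]
print(build_prompt(chunks, "When do I have to submit travel expenses?"))
```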

4) Parse the uploaded documents (.pptx, .pdf, .docx):

In step 2 we created the REST endpoint that parses and uploads documents. In this step we parse the supported document formats and extract their text using several Python libraries. The extracted text is turned into embeddings in the next step.

The functions docx_to_text(file), pdf_to_text(file) and pptx_to_text(file) each convert their respective document format into a text string.

from io import BytesIO

from docx import Document
from pdfminer.high_level import extract_text_to_fp
from pptx import Presentation


def extract_text_from_shape(shape):
    """Return the text of a shape, descending into grouped shapes."""
    if hasattr(shape, "text"):
        return shape.text
    elif hasattr(shape, "text_frame"):
        return '\n'.join(paragraph.text for paragraph in shape.text_frame.paragraphs)
    elif hasattr(shape, "shapes"):
        # Group shapes expose their children through .shapes
        return '\n'.join(extract_text_from_shape(child) for child in shape.shapes)
    else:
        return ''


# Method to extract text from a PowerPoint presentation
def pptx_to_text(file):
    prs = Presentation(file)
    texts = []
    for slide in prs.slides:
        for shape in slide.shapes:
            texts.append(extract_text_from_shape(shape))
    # Separate shapes with newlines so that their text does not run together
    return '\n'.join(texts)


# Method to extract text from a PDF file
def pdf_to_text(file):
    output = BytesIO()
    extract_text_to_fp(file, output)
    text = output.getvalue().decode('utf-8')
    return text


# Method to extract text from a Word document
def docx_to_text(file):
    doc = Document(file)
    text = ""
    for paragraph in doc.paragraphs:
        text += paragraph.text + "\n"
    return text

5) Generate and store embeddings in SAP HANA DB:

a) def parse_and_upload_documents(files, llm_context):

This function starts by purging the existing database table. It then determines the format of each uploaded file and extracts its text with the corresponding function. The extracted text is split into manageable chunks, and a Document object holding the text and its metadata is created for each chunk.

Before the documents are added to the SAP HANA DB, the function checks for duplicate filenames. If a duplicate is detected, the file is skipped; otherwise, its documents are added to the database. Because the table is purged at the start of each call, this check in practice catches the same filename appearing twice within one upload.

b) def check_file_name_duplicate(db, file_name):

This function checks whether a given file is already present in the database table. If it is, the file is skipped during the upload. The function looks at the metadata column of the SAP HANA DB table and compares the value of its source field with the filename. This works because parse_and_upload_documents creates each Document manually, with the filename stored in the source field of the metadata.

Note: In the code below we first extract the text from the documents and then create Document objects from it using langchain.docstore.document, providing the page_content and the metadata ourselves. If you prefer to use standard libraries to create Document objects from different file formats, refer to Document Loaders.

from langchain.docstore.document import Document
from langchain_text_splitters import RecursiveCharacterTextSplitter

# pptx_to_text, pdf_to_text and docx_to_text are the parsing functions
# from step 4 (assumed to live in the same module)


def parse_and_upload_documents(files, llm_context):
    # Purge the existing table so that every upload starts from a clean state
    llm_context.db.delete(filter={})

    for file in files:
        filename = file.filename
        print("Processing file:", filename)

        # Pick the parser by extension (case-insensitive)
        name = filename.lower()
        if name.endswith('.pptx'):
            text = pptx_to_text(file)
        elif name.endswith('.pdf'):
            text = pdf_to_text(file)
        elif name.endswith('.docx'):
            text = docx_to_text(file)
        else:
            print(f"Skipping unsupported file {filename}")
            continue

        # Split the text into chunks
        text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=250, add_start_index=True)
        text_chunks = text_splitter.split_text(text)

        documents = []
        for chunk in text_chunks:
            # Create a Document object for each chunk
            document = Document(
                page_content=chunk,
                metadata={"source": filename},
            )
            documents.append(document)

        print(f"Number of document chunks: {len(documents)}")
        print("Documents:", documents)

        if not check_file_name_duplicate(llm_context.db, filename):
            # Add the documents to the database
            llm_context.db.add_documents(documents)
        else:
            print(f"File {filename} already exists in the database")

    return "Files parsed and uploaded successfully"


def check_file_name_duplicate(db, file_name):
    """Checks if the file name exists in the vector db"""
    # Table and column names come from the HanaDB object; the file name is
    # passed as a bound parameter so it cannot alter the SQL statement.
    sql = (
        f"SELECT DISTINCT JSON_VALUE({db.metadata_column}, '$.source') AS FILE_NAME "
        f"FROM {db.table_name} "
        f"WHERE JSON_VALUE({db.metadata_column}, '$.source') = ?"
    )
    cur = db.connection.cursor()
    try:
        cur.execute(sql, (file_name,))
        result = cur.fetchall()
    finally:
        cur.close()
    return len(result) > 0
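The chunk_size and chunk_overlap settings are easier to reason about with a stripped-down splitter. The function below is a fixed-width sliding window written purely for illustration (the name sliding_window_chunks is our own). RecursiveCharacterTextSplitter is more careful and prefers to break at separators such as paragraph or line breaks, so its chunks will differ.

```python
def sliding_window_chunks(text, chunk_size, chunk_overlap):
    """Split text into fixed-size chunks where neighbours share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
for chunk in sliding_window_chunks(sample, chunk_size=10, chunk_overlap=4):
    print(chunk)
```

In this simplified model, the values used in the post (1000 and 250) mean that each chunk repeats the last 250 characters of the previous one, so a passage of up to 250 characters that straddles a chunk boundary still appears whole in the following chunk.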

6) Interacting with the Language Model using Context from Vector DB:

Up to step 5, we have followed the path indicated by the red line in the RAG architecture diagram above. With the initial setup now complete, the user is ready to interact with the Language Model using a prompt.

This prompt is transformed into an embedding, and the 5 most similar embeddings are retrieved from the SAP HANA Cloud Vector Engine. The text corresponding to these embeddings is supplied to the Language Model as context, along with the original user prompt. As a result, the Language Model's responses are grounded in the uploaded documents and relevant to the business scenario at hand. The chat REST endpoint from step 2 calls the function below:

def chat_with_llm(llmcontext, question):
    qa_chain = llmcontext.qa_chain
    result = qa_chain.invoke({"question": question})

    print("Answer from LLM:")
    print("================")
    print(result["answer"])
    print("Response fetched")

    source_docs = result["source_documents"]
    print("================")
    print(f"Number of used source document chunks: {len(source_docs)}")
    for doc in source_docs:
        print("-" * 80)
        print(doc.page_content)
        print(doc.metadata)

    return result["answer"]
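The retrieval step described above can be imitated in a few lines of plain Python. This is only a conceptual sketch: in the app the similarity search runs inside SAP HANA Cloud through the HanaDB retriever, and the two-dimensional vectors and chunk texts below are invented.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def top_k(query_vector, rows, k=5):
    """Return the k stored chunks whose vectors are most similar to the query."""
    return heapq.nlargest(
        k, rows, key=lambda row: cosine_similarity(query_vector, row[1])
    )


# Each row pairs a text chunk with its (made-up) embedding vector.
rows = [
    ("Invoices are due within 30 days.", [0.9, 0.1]),
    ("The cafeteria opens at 8 am.", [0.1, 0.9]),
    ("Late payments incur a 2% fee.", [0.8, 0.3]),
]

query = [1.0, 0.2]  # stands in for the embedded user question
for text, _ in top_k(query, rows, k=2):
    print(text)
```

Only the texts of the selected rows are placed into the prompt, which is why the model never needs to see the whole document collection.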

Key benefits of using Retrieval-Augmented Generation (RAG) architecture

Contextual Relevance: RAG architecture allows the model to pull in relevant information from a large corpus of documents, leading to more contextually accurate responses.

Scalability: RAG separates the retrieval and generation processes, allowing for more efficient scaling. You can update the document collection independently of the model, making it easier to add new information.

Flexibility: RAG is model-agnostic, meaning it can be used with any transformer-based generative model. This provides a lot of flexibility in terms of the models you can use.

Improved Performance: By grounding the generator in retrieved passages, RAG tends to give better answers on knowledge-intensive tasks, such as question answering over internal documents, than the Language Model alone.

Real-time Adaptability: Because the context is fetched at query time, responses reflect the documents currently in the store without retraining the model, which suits applications whose content changes frequently.

Efficient Resource Use: By retrieving only a small subset of documents for each query, RAG models make more efficient use of computational resources compared to models that need to consider the entire document collection.

Conclusion:

In this blog post, we embarked on an exciting journey through the world of Language Models, SAP HANA Cloud Vector Engine, and Retrieval-Augmented Generation (RAG) architecture. We explored how to create a context-aware LLM architecture that can understand and respond to business queries with unprecedented accuracy and relevance.

We delved into the technical implementation, setting up REST endpoints, parsing and uploading documents, and interacting with the LLM. We also discussed the benefits of using RAG architecture, highlighting its ability to provide contextually relevant responses, its scalability, flexibility, and efficient resource use.

The integration of LLMs with SAP HANA Cloud Vector Engine opens up a world of possibilities for businesses. It allows them to leverage the power of advanced technologies to extract meaningful insights from their data, leading to more intelligent and insightful interactions.

As we continue to explore and innovate in this space, we look forward to seeing how these technologies will shape the future of business intelligence. Whether you are a data scientist, a business analyst, or a tech enthusiast, we hope this blog post has provided you with valuable insights and sparked your curiosity to learn more. The future is here, and it's more exciting than ever!

References:

- Langchain Vectorstores - SAP HANA Vector: How to set up SAP HANA Cloud Vector Engine and use it to query documents for retrieval augmented generation (RAG) using large language models (LLMs)

- hdbcli on PyPI: Installation and usage of a Python client for SAP HANA to connect to and manipulate data in a SAP HANA database

- Langchain Data Connection - Document Loaders: Document loaders in Langchain and how to use them to load data from various sources as Documents (pieces of text with additional information)

- Langchain Data Connection - Text Embedding: Text embedding models, how they are used to represent text as vectors for mathematical comparison, and how to use the LangChain library to access text embedding models

- Langchain Data Connection - Document Transformers: Text splitters, their purposes and different types (splitting by HTML tags, Markdown characters, code characters, sentences, tokens, or semantic topics), and how to use Chunkviz to see how the splitter worked on your text

- SAP HANA Cloud - SAP HANA Database: Vector Engine Guide: Vectors, vector embeddings, similarity measures, the built-in REAL_VECTOR data type, and two vector similarity measures offered by SAP HANA Cloud database

