Exploring the basics of Explainable AI - Part 2


The WHY-WHAT-HOW of Explainable AI

In the previous part, we covered the basics of Artificial Intelligence. In this part, we will focus on the concepts of Explainable AI (XAI): its importance, use cases and the various types of models.

WHY?

As AI makes more and more decisions for us in finance, healthcare, law enforcement, the military and government, it is increasingly important that these decisions are made correctly and for the right reasons. Explainable AI (XAI) is a set of tools and methods that help us understand the predictions and decisions that AI programs make, especially where the stakes are high.

Take the example of an AI program that predicts whether a patient is at high risk after being discharged. Here it is crucial for doctors to understand the reasoning behind the predictions: with explanations, they can better understand the basis of a prediction and make an informed decision. Without this transparency, it can be difficult to trust an AI system’s predictions.

Under the General Data Protection Regulation (GDPR), people subject to decisions based solely on automated processing have the right to meaningful information about the logic involved in those decisions.

As AI is increasingly used for high-risk tasks and to augment human decision-making, establishing trust and transparency in these systems is becoming more and more important.

WHAT?

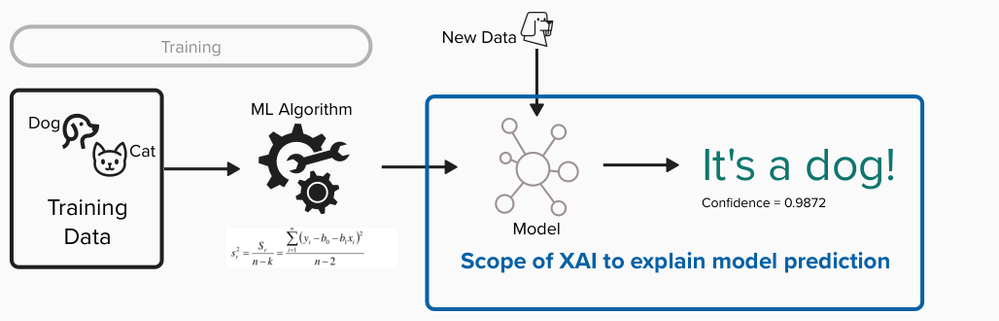

In most simple terms, Explainable AI are the methods and techniques that can make AI model’s decisions or predictions understandable to humans.

Figure 3 Scope of Explainable AI (XAI) in general

In the context of AI, explainability and interpretability both help build trust and improve transparency, and the two terms are sometimes used interchangeably. In my understanding, however, they are different concepts.

Explainability focuses on providing insight into how the model works, so that we can give a meaningful explanation of why the model made a certain decision.

Interpretability, on the other hand, aims at understanding the behavior of the AI system by establishing the relationship between its input features and its output predictions.

Because the two terms are so often conflated, it was worth drawing this distinction here.

HOW?

AI models can be broadly classified into two groups. Directly explainable models, such as linear regression models, decision trees and rule-based models, are simple enough to be explained as they are. The Generalized Linear Rule Model (GLRM) is an example of a directly explainable model: it generates simple rules that humans can easily understand. On the other side, models such as deep neural networks require post-hoc explainability. These models are complex and act as a black box whose inner workings humans cannot easily follow, so another set of algorithms is needed to explain their decisions.
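To make "directly explainable" concrete, here is a minimal Python sketch of a linear scoring model. The feature names, weights and bias are hypothetical values chosen for illustration, not taken from any real credit model. Because the score is a weighted sum, each feature's contribution to a prediction is simply its weight times its value, so the model's own parameters are the explanation.

```python
# Hypothetical feature names, weights and bias, for illustration only.
WEIGHTS = {"debt_ratio": 2.0, "late_payments": 0.5, "years_employed": -0.1}
BIAS = -1.0


def risk_score(applicant: dict[str, float]) -> float:
    """Linear model: the score is the bias plus a weighted sum of the features."""
    return BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())


def explain(applicant: dict[str, float]) -> dict[str, float]:
    """Each feature's contribution is weight * value, read directly off the model."""
    return {name: w * applicant[name] for name, w in WEIGHTS.items()}


if __name__ == "__main__":
    applicant = {"debt_ratio": 0.6, "late_payments": 2.0, "years_employed": 5.0}
    contributions = explain(applicant)
    # List features from largest to smallest absolute effect.
    for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
        print(f"{name:>15}: {value:+.2f}")
    # The bias plus the contributions add up exactly to the model's score.
    print(f"{'score':>15}: {risk_score(applicant):+.2f}")
```

For the example applicant, the contributions are +1.20 (debt_ratio), +1.00 (late_payments) and -0.50 (years_employed), which together with the bias of -1.0 add up to the score of +0.70.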

Algorithms for post-hoc explainability generally follow one of three approaches.

Explaining the model globally – The algorithm generates an explanation that gives an overview of the model and how it works. These algorithms typically train a simpler model, such as a decision tree, to approximate the outputs of the complex model while minimizing the loss of information.
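A common way to build such a global explanation is a global surrogate. The following is a minimal sketch, assuming a hypothetical two-feature `black_box` function that stands in for a complex model: a depth-one decision tree (a "stump") is fitted to the black box's predictions rather than to ground-truth labels. Its fidelity, the share of inputs on which the surrogate agrees with the black box, is one simple way to measure how much information the simplification loses.

```python
import random


def black_box(x: list[float]) -> int:
    """Hypothetical stand-in for a complex model: 1 = high risk, 0 = low risk."""
    # A nonlinear decision rule that the surrogate has no direct access to.
    return int(x[0] * x[0] + 0.3 * x[1] > 0.5)


def fit_stump(X: list[list[float]], y: list[int]) -> tuple:
    """Fit a depth-1 decision tree (a "stump") to the labels y.

    Every feature and every observed value is tried as a split threshold;
    the split whose leaves agree with y most often is kept."""
    best = None  # (agreement, feature, threshold, left_label, right_label)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= t]
            right = [label for row, label in zip(X, y) if row[f] > t]
            # Each leaf predicts the majority label of the samples that reach it.
            left_label = int(2 * sum(left) >= len(left)) if left else 0
            right_label = int(2 * sum(right) >= len(right)) if right else 0
            agreement = sum(v == left_label for v in left) + sum(v == right_label for v in right)
            if best is None or agreement > best[0]:
                best = (agreement, f, t, left_label, right_label)
    return best[1:]


def stump_predict(stump: tuple, x: list[float]) -> int:
    feature, threshold, left_label, right_label = stump
    return left_label if x[feature] <= threshold else right_label


def global_surrogate(n_samples: int = 200, seed: int = 0) -> tuple:
    rng = random.Random(seed)
    X = [[rng.random(), rng.random()] for _ in range(n_samples)]
    # Key point: the surrogate is trained on the black box's outputs, not on ground truth.
    y = [black_box(x) for x in X]
    stump = fit_stump(X, y)
    # Fidelity: share of inputs on which the surrogate reproduces the black box.
    fidelity = sum(stump_predict(stump, x) == label for x, label in zip(X, y)) / n_samples
    return stump, fidelity


if __name__ == "__main__":
    (feature, threshold, left, right), fidelity = global_surrogate()
    print(f"IF x[{feature}] <= {threshold:.2f} THEN {left} ELSE {right}")
    print(f"fidelity to the black box: {fidelity:.0%}")
```

The printed rule is the global explanation. A deeper tree would usually reach higher fidelity at the cost of a longer, harder-to-read rule, which is the trade-off every global surrogate has to balance.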

Explaining a decision locally – The algorithm explains one particular decision that the model made. For example, if an AI model assesses a loan applicant's credit risk as low, the algorithm explains why the model thinks the applicant is unlikely to default on the loan. This could be achieved, for instance, by computing the local contribution of each feature or by presenting similar examples.
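Local feature contributions can be estimated in many ways; libraries such as LIME and SHAP implement well-known methods. The sketch below uses a much simpler occlusion-style approach instead: for one applicant, each feature is replaced in turn by a baseline value and the change in the model's output is recorded. The model `default_probability`, its weights and the baseline values are all hypothetical.

```python
import math


def default_probability(x: dict[str, float]) -> float:
    """Hypothetical black-box model returning a probability of loan default."""
    z = 3.0 * x["debt_ratio"] + 0.8 * x["late_payments"] - 0.2 * x["years_employed"] - 1.5
    return 1.0 / (1.0 + math.exp(-z))


def local_contributions(model, x: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
    """Occlusion-style attribution for a single instance x.

    For each feature, swap in its baseline value (e.g. a dataset average) and
    record original output minus perturbed output. Negative values mean the
    feature's actual value lowers the predicted risk relative to the baseline."""
    original = model(x)
    contributions = {}
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        contributions[name] = original - model(perturbed)
    return contributions


if __name__ == "__main__":
    # Hypothetical low-risk applicant and hypothetical baseline values.
    applicant = {"debt_ratio": 0.1, "late_payments": 0.0, "years_employed": 10.0}
    baseline = {"debt_ratio": 0.4, "late_payments": 1.0, "years_employed": 4.0}
    print(f"P(default) = {default_probability(applicant):.3f}")
    contributions = local_contributions(default_probability, applicant, baseline)
    for name, value in sorted(contributions.items(), key=lambda kv: kv[1]):
        print(f"{name:>15}: {value:+.3f}")
```

With these values all three contributions are negative and years_employed has the largest effect, followed by debt_ratio and late_payments, so the explanation reads roughly: low risk mainly because of long employment, then low debt and no late payments.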

Inspecting counterfactuals – The algorithm explains a decision by generating a counterfactual instance: an instance that is similar to the original data point but differs in a few features, which gives the user insight into the factors that influence the outcome. For example, a high-risk credit assessment for an applicant can be explained with a hypothetical applicant who is assessed as low risk and shares most of the original applicant's features, differing mainly in having a lower debt percentage.
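The idea behind a counterfactual search can be sketched in its simplest form as follows, assuming a hypothetical default-probability model (the weights, the 0.5 threshold and the applicant are illustrative): starting from a high-risk applicant, only the debt ratio is lowered in fixed steps, with all other features held constant, until the prediction flips to low risk. Dedicated counterfactual methods search over several features at once and optimize for how close the counterfactual stays to the original.

```python
import math


def default_probability(x: dict[str, float]) -> float:
    """Hypothetical model returning a probability of loan default."""
    z = 3.0 * x["debt_ratio"] + 0.8 * x["late_payments"] - 0.2 * x["years_employed"] - 1.5
    return 1.0 / (1.0 + math.exp(-z))


def is_high_risk(x: dict[str, float], threshold: float = 0.5) -> bool:
    return default_probability(x) >= threshold


def find_counterfactual(x: dict[str, float], feature: str, step: float, max_steps: int = 100):
    """Change one feature in fixed steps, holding all others constant, until
    the predicted class flips. Returns the counterfactual, or None if the
    class never flips within max_steps."""
    original_class = is_high_risk(x)
    candidate = dict(x)  # copy, so the original instance is left untouched
    for _ in range(max_steps):
        # Rounding keeps repeated float additions from drifting (0.35, not 0.35000000000000003).
        candidate[feature] = round(candidate[feature] + step, 10)
        if is_high_risk(candidate) != original_class:
            return candidate
    return None


if __name__ == "__main__":
    applicant = {"debt_ratio": 0.8, "late_payments": 1.0, "years_employed": 2.0}
    counterfactual = find_counterfactual(applicant, "debt_ratio", step=-0.05)
    print("original:      ", applicant, f"P(default) = {default_probability(applicant):.2f}")
    if counterfactual is not None:
        print("counterfactual:", counterfactual, f"P(default) = {default_probability(counterfactual):.2f}")
```

Under this hypothetical model the applicant stays high risk until the debt ratio falls from 0.8 to 0.35, which tells the user that a lower debt percentage alone would have changed the outcome.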

In this part, we learned why XAI is important, what XAI is and how XAI algorithms work. In the next and final part, we will cover how to design the user experience for XAI.

Resources and further reads:

Generative AI at SAP – an OpenSAP course

SAP Fiori Design Guidelines for Web

UXAI – A visual introduction to Explainable AI for Designers

Introduction to Explainable AI: Techniques and Design (By Vera Liao)

Building XAI applications with question-driven user-centered design (Blog on Medium by Vera Liao)

Trustworthy AI: How to make artificial intelligence understandable

People+AI Research (PAIR) Guidebook: AI Design Guideline from Google

AlphaGo documentary on YouTube

