- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Db2 Database Recovery and Cloud Object Store

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Terminology

Let’s make you familiar with the most important terminology:

- In IBM Cloud Object Storage, a bucket is a kind of container. Buckets are not nested, that is, a COS bucket doesn’t contain other buckets.

- Data is stored as COS objects. Object names can include slashes so that the naming often resembles a UNIX file system structure. However, unlike a file system, COS objects have no hierarchy.

Configuration

As a first step, you must set up access to the Cloud. With current levels of IBM Db2 for Linux, UNIX, and Windows, you can use the Amazon S3 API to work with the cloud object store (COS). A Db2 instance works with a COS instance using the following resources:

- A keystore to keep access data for the COS account

- Account credentials for the COS instance

You can use a local keystore, or a centralized keystore. For Cloud access, the Amazon S3 API appears to become a standard. IBM Db2 for Linux, UNIX, and Windows allows the use of this API, but for authentication using the S3 API, you must create a key pair consisting of an access key and a secret key. This key pair is known as HMAC credential. The procedure to create an HMAC credential is well documented.

Cloud Access

For a local keystore, configure the Db2 instance with location and keystore type:

db2 update dbm cfg using keystore_location <path of keystore file>

db2 update dbm cfg using keystore_type pkcs12

Restart the Db2 instance to activate the change.

The next step is to catalog the cloud storage location using the following command:

db2 catalog storage access alias <alias name> \

vendor s3 \

server ... \

user ... \

password ... \

[container <COS bucket name> [object <object name>]]

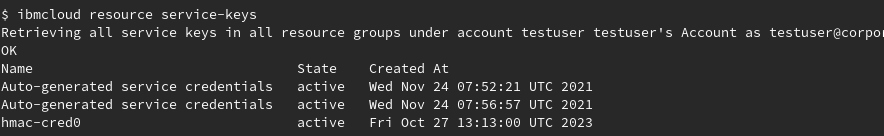

To run this command, you can use an alias name of your choice. The required connection data consists of the remote location (the server entry) and the credentials (user/password). You can get this data by listing the HMAC credentials for your COS instance. List available service keys using the following command:

ibmcloud resource service-keys

Use the key name of the HMAC credential that you created and run the following:

ibmcloud resource service-key <key name>

Under cos_hmac_keys, the items access_key_id and secret_access_key indicate the entries for user and password. The entry listed as endpoints is a URL containing a list of possible server locations. Use one of the addresses listed to specify the server parameter.

With the catalog storage access command, you define an alias that is used to refer to the COS objects. The optional parameters container and object allow you to specify a COS bucket and a prefix for object names as part of the alias. In the next section, I’ll show you why these parameters can be beneficial.

Location Identifiers

Now that you have created the alias, you will also need to know how to use it in the Db2 context to access a COS location.

As in the following example, start creating the alias myalias to point to your COS instance:

db2 catalog storage access alias myalias \

vendor s3 \

server https://control.cloud-object-storage.cloud.ibm.com/v2/endpoints \

user ejlMaKx3iKJgYTiQHCWRUSXNXx70hiMt \

password 23Hd6Hxj7NgZhjCjzwPgWH4onHoYmHtgTKhweDf2I5TDYAqp

Before you can store data in a COS instance, you must create a container called a bucket. For example, create a bucket named mybucket using the command below:

ibmcloud cos bucket-create –bucket mybucket

To work with IBM Db2 for Linux, UNIX, and Windows and with objects in bucket mybucket, you use a location identifier like the following:

DB2REMOTE://myalias/mybucket/myobject

IBM Db2 uses the object part of the location identifier (myobject in this example) as prefix for the COS object names. In fact, this terminology requires some clarification. When I talk about location identifiers of IBM Db2, the object information refers to a prefix. In the COS terminology of the IBM Cloud, the object name identifies the object itself. In the examples below I will explain this in more detail.

The syntax shown potentially leads to lengthy location identifiers that are cumbersome in daily operations. Fortunately, there are options to make the handling of location identifiers a bit more comfortable.

If you include the bucket mybucket in the definition of myalias (using the keyword container), then the DB2REMOTE identifier used before is still correct:

db2 catalog storage access alias myalias \

vendor s3 \

server https://control.cloud-object-storage.cloud.ibm.com/v2/endpoints \

user ejlMaKx3iKJgYTiQHCWRUSXNXx70hiMt \

password 23Hd6Hxj7NgZhjCjzwPgWH4onHoYmHtgTKhweDf2I5TDYAqp \

container mybucket

However, you can now omit the bucket name:

DB2REMOTE://myalias//myobject

The double slash after the alias indicates that the information about the bucket to be used is taken from the alias. If the object name is also specified in the alias definition, the DB2REMOTE identifier can be written as follows:

DB2REMOTE://myalias//

An identifier ending with a double slash indicates that the object name should also be taken from the alias. However, you still can specify a bucket, and an object name. For example, you can use the alias to access another bucket of your COS instance.

Multipart Upload

For performance reasons, we strongly recommend that you upload large amounts of data into COS using a multipart upload. For a multipart upload, the data is split into parts that are uploaded independently and in parallel. For more information, refer to the Cloud documentation Storing large objects at the IBM Cloud website.

IBM Db2 uses multipart upload by default. (The Db2 registry setting DB2_ENABLE_COS_SDK is ON.) Turning off multipart upload is not recommended. Throughout this blog post, I will assume that environments with multipart upload are used.

I should mention that even the multipart upload has general size limitations. However, these are quite high, so even backups of large database environments can be configured within these limitations:

- Maximum number of parts per upload: 10000

- Chunk size: 5mb - 5gb

- Maximum size for an object in COS: 10tb

In IBM Db2, the size of the data chunks is configured in the DBM CFG using parameter MULTIPARTSIZEMB. The unit is in megabytes, the default is 100. To stay within the boundaries of the IBM Cloud limitations, values between 5 and 5120 are allowed.

In addition, to configure the multipart upload properly, make sure that you stay below the maximum values mentioned above. For backups to COS, the size limitations hold per backup path. Therefore, you need to:

- Use multiple backup paths for backup images larger than 10tb.

- Set the MULTIPARTSIZEMB parameter to stay below 10000 parts per backup path.

Staging Area

For some data transfers, IBM Db2 uses a local staging area. The staging area temporarily stores the data before it’s uploaded to the Cloud, or it collects the data downloaded from the Cloud before it’s further processed. While a database backup uploads the data directly, a staging area is required for:

- Database restore

- Logfile archiving and retrieval

By default, the staging area is a subdirectory of ~/sqllib/tmp. You can configure a different path using the Db2 registry parameter DB2_OBJECT_STORAGE_LOCAL_STAGING_PATH. After you have modified this parameter, you must restart the Db2 instance for the change to take effect.

For more information, see https://www.ibm.com/docs/en/db2/11.5?topic=reference-remote-storage-requirements.

Log Archiving and Retrieval

Now that you are equipped with the means to access your COS instance from Db2, you can configure your database to archive the database log files into the cloud. For example, to archive the log file to bucket mybucket, use the following command:

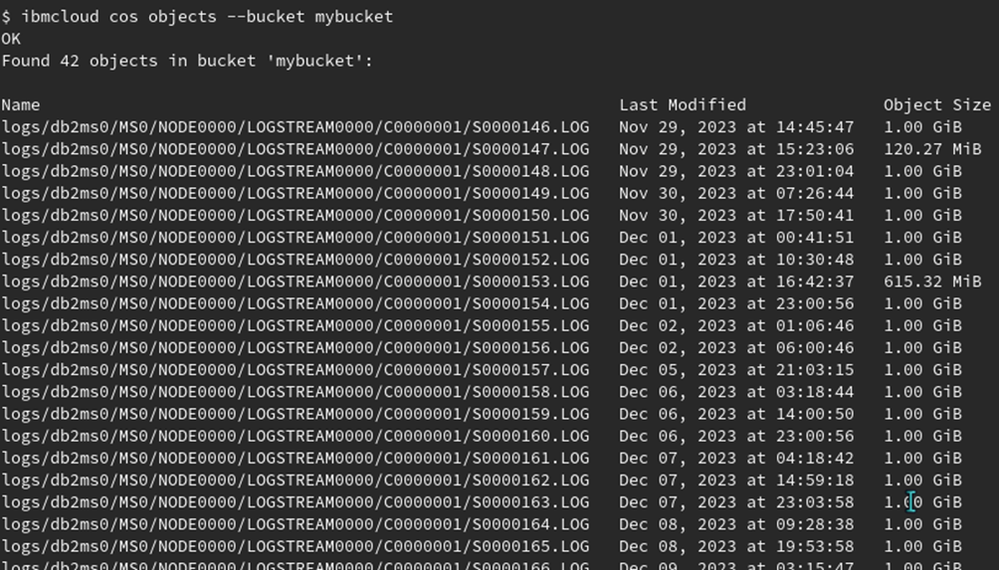

db2 update db cfg using logarchmeth1 DB2REMOTE://myalias/mybucket/logs

Note that you need to be connected to the database for this command to work. At the remote storage, Db2 creates object names resembling the directory structure of the local file system:

In the specification of the location identifier, we see that the object information is:

logs

With the location identifier specified, this is the prefix used for all objects stored in COS. The full COS object names identify the individual objects:

logs/db2ms0/MS0/NODE0000/LOGSTREAM0000/C0000001/S0000146.LOG

logs/db2ms0/MS0/NODE0000/LOGSTREAM0000/C0000001/S0000147.LOG

...

For logfile retrieval, IBM Db2 uses the same configuration parameter as for the logfile archiving. The log files are retrieved to the staging area. From there, they are further processed depending on the utility requesting the logfile. Examples are a backup with INCLUDE LOGS option or a ROLLFORWARD operation.

Backup

To perform a backup to a COS instance, you use the same syntax as for a backup to disk. For the backup storage location, specify DB2REMOTE identifiers as discussed in the section on location identifiers.

In the example shown here, the backup goes to a different bucket than the log files:

db2 backup db ms0 online to

db2remote://myalias/mybackup/backups/MS0,

db2remote://myalias/mybackup/backups/MS0,

db2remote://myalias/mybackup/backups/MS0,

db2remote://myalias/mybackup/backups/MS0

You are free to define an additional storage alias, say myalias2, with bucket mybackup as default. Then, the backup command can be written as follows.

db2 backup db ms0 online to

db2remote://myalias2//backups/MS0,

db2remote://myalias2//backups/MS0,

db2remote://myalias2//backups/MS0,

db2remote://myalias2//backups/MS0

If you also specify the object in the alias configuration, you can also omit the part after the last double slash:

db2 backup db ms0 online to

db2remote://myalias2//,

db2remote://myalias2//,

db2remote://myalias2//,

db2remote://myalias2//

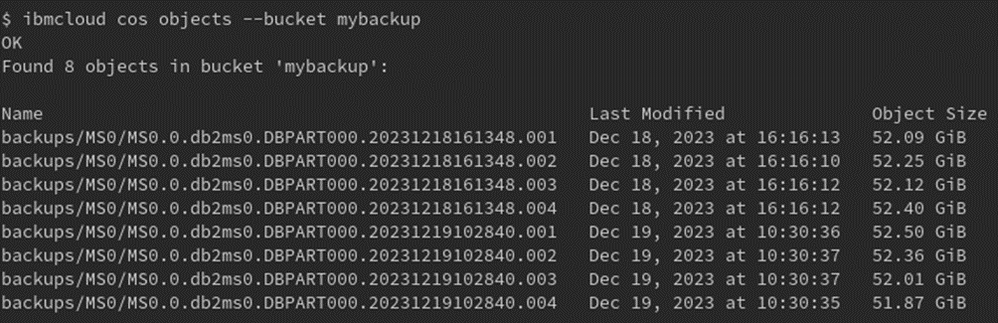

In the listing below, you can find two backups in bucket mybackup. Each of the backup images consists of four COS objects. Recall that the sizes of the objects are limited to 10tb.

Notes:

- If DB CFG parameters NUM_DB_BACKUPS, REC_HIS_RETENTN, and AUTO_DEL_REC_OBJ are configured properly, Db2 will automatically delete old backup images, including the corresponding log files.

- A backup using INCLUDE LOGS, which is the default behavior, must potentially restore log files from the archive location. When COS is used, this works automatically.

- The object part of the location identifier is backups/MS0. To store the backup as COS objects, IBM Db2 takes this object part and appends a slash and a string that we would expect from a backup to disk.

Restore

Like a backup, the restore from COS works with the same DB2REMOTE identifiers using the syntax of a restore from local storage. In fact, the restore makes use of the staging area that was configured. Note, however, that the restore downloads the first part of the backup image, that is, the file of the backup image with sequence number 001, and then starts the restore processing. While the restore is running, the Db2 engine downloads the remaining sequences of the backup image in the background.

The consequence of this behavior is:

- You must have the disk space available that is required to hold the backup image to be restored.

- You must balance the advantages and restrictions on parallel backup and restore processing. On the one hand, it’s beneficial to have many small sequences of the backup image because then a restore will start earlier to copy data into the data files. On the other hand, you can’t choose too many sequences because there are limitations on backup and restore processing, such as the amount of prefetcher threads in the Db2 engine, possible I/O bottlenecks due to high processing parallelism, and network throughput into the Cloud. It’s therefore advisable to start the backup/restore parallelism with a similar number of sequences as in non-cloud environments. If still desired, the effects of higher parallelism can be tested afterwards.

Considerations for SAP Environments

In an SAP environment, one open question naturally arises:

Can I start a backup into the Cloud using SAP tools, in particular the DBA Cockpit?

The answer is simple: You can specify a backup to an IBM Cloud COS location just as you can specify a disk location. You simply use DB2REMOTE identifiers instead of local OS paths.

To start a database backup from an SAP system, call up the DBA Cockpit (transaction code: DBACOCKPIT). In the navigation pane of the DBA Cockpit, choose Jobs → DBA Planning Calendar.

In the DBA planning calendar, choose Database Backup to Device from the action pad, and enter the required parameters for your backup. You can then add a schedule using the button Add or start the backup immediately using Execute Immediately.

Note that the field to specify the path locations for the backup image has a limited length. Make sure that SAP Note 3406473 is applied to have a maximum length of 250 bytes.

If you intend to use multiple paths for the backup image, the field can become too small. It will therefore be helpful to use short location identifiers as I’ve outlined above. For example, you can define short aliases and include the bucket and the object name into the alias definition.

Summary

I’ve shown you how you can set up the backup and recovery of an IBM Db2 for Linux, UNIX, and Windows database to the Cloud. With the procedures I’ve outlined, you can store backup images and log file archives to the cloud object store (COS) of an IBM Cloud. As the approach chosen uses the Amazon S3 API, you can use my examples for the setup of an AWS Cloud as well.

- SAP Managed Tags:

- IBM Db2 for Linux, UNIX, and Windows

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

93 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

67 -

Expert

1 -

Expert Insights

177 -

Expert Insights

301 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

346 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

429 -

Workload Fluctuations

1

- In SAP Hana Cloud, do packages exist as database objects, like in Oracle? in Technology Q&A

- Extract blob data (PDF) from CAPM using python library of Document information extraction service. in Technology Blogs by Members

- IoT - Ultimate Data Cyber Security - with Enterprise Blockchain and SAP BTP 🚀 in Technology Blogs by Members

- SAP HANA Cloud's Vector Engine vs. HANA on-premise in Technology Blogs by Members

- Analyze Expensive ABAP Workload in the Cloud with Work Process Sampling in Technology Blogs by SAP

| User | Count |

|---|---|

| 32 | |

| 17 | |

| 15 | |

| 14 | |

| 11 | |

| 9 | |

| 8 | |

| 8 | |

| 8 | |

| 7 |