Bring SAP Ariba data into SAP Datasphere

Introduction

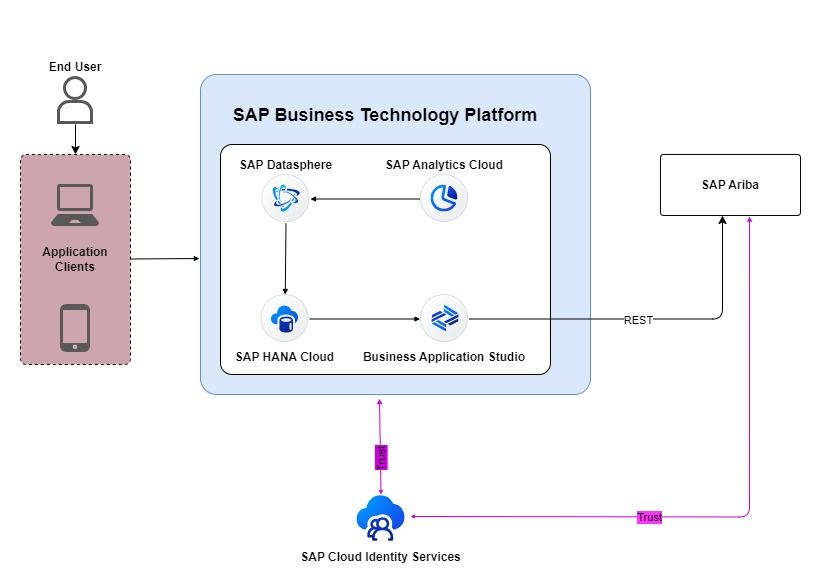

In the ever-evolving landscape of enterprise solutions, integrating data seamlessly has become a key driver for efficiency and informed decision-making. Within a customer’s SAP landscape, there could be a mix of different SAP solutions that serve the purpose of transactional data storage and intelligent data processing. Usually, there is a need for a robust integration between SAP solutions to be able to access the data in the system of record (e.g., SAP Ariba) and process it in the analytical layer (e.g., SAP Analytics Cloud).

This blog discusses the integration of the SAP Ariba REST API with SAP HANA Cloud, SAP Datasphere, and SAP Analytics Cloud. The solution uses SAP Business Technology Platform (BTP) and a Node.js extractor application built with the SAP Cloud Application Programming Model (CAP) to integrate the SAP solutions.

TL;DR Hands On Tutorial

Overview

SAP HANA Cloud is supported as the CAP standard database and is recommended for productive use. The solution uses CAP Core Data Services (CDS) for service definition and domain modeling with HANA Cloud as the data persistency layer.

As SAP HANA Cloud is available as a service within SAP BTP, and it is also the database on which SAP Datasphere is built, there can be two scenarios depending on which SAP HANA Cloud is used for data persistence. The solution can work on both, and they are discussed in detail in this blog.

The steps to implement the solution are:

1. SAP Ariba REST API exploration.

2. CAP Node.js extractor application implementation, deployment, and testing when:

   - SAP BTP-based SAP HANA Cloud is used as a sidecar data persistence layer.

   - SAP HANA Cloud underneath SAP Datasphere is used as a data persistence layer.

3. Data modeling in SAP Datasphere and consumption in SAP Analytics Cloud.

Step 1: SAP Ariba REST API exploration.

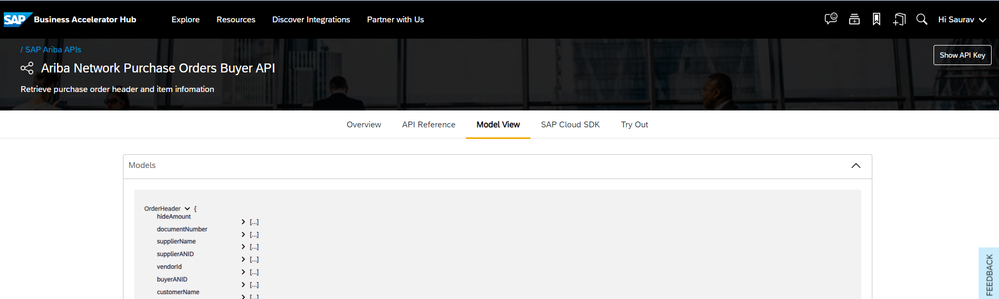

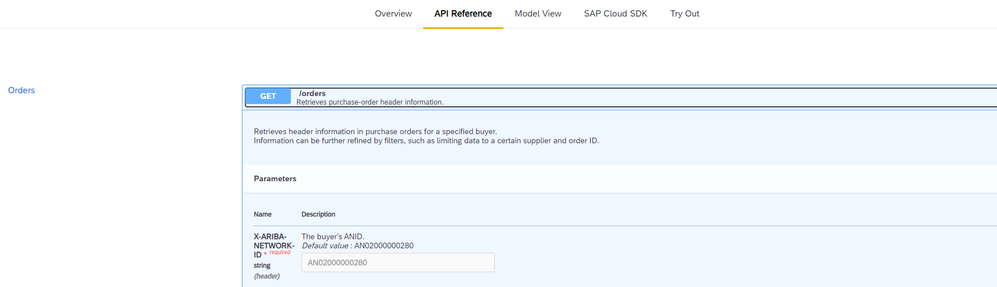

To kickstart the integration journey, we tap into the SAP Ariba Network Purchase Orders Buyer API, as listed on the SAP Business Accelerator Hub. Here we will identify essential header and item data, such as Vendor ID, Document Number, Supplier Name, Quantity, and Purchase Order Amount.

- Log in to the SAP Business Accelerator Hub and navigate to the SAP Ariba REST API documentation. The default buyer’s Ariba Network ID and the API key are needed in the next steps.
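As a rough illustration of how the Ariba Network ID and the API key might be passed to the API, the sketch below assembles request options for a GET call. The environment variable names (`ARIBA_API_KEY`, `ARIBA_NETWORK_ID`) are hypothetical, and the header names `APIKey` and `X-ARIBA-NETWORK-ID` are assumptions that should be checked against the API reference on the SAP Business Accelerator Hub.

```javascript
// Minimal sketch: collect the two credentials into request options.
// ASSUMPTION: header names "APIKey" and "X-ARIBA-NETWORK-ID" must be verified
// against the API documentation; the env variable names are hypothetical.
function buildAribaRequestOptions(env = process.env) {
  const apiKey = env.ARIBA_API_KEY;
  const networkId = env.ARIBA_NETWORK_ID;
  if (!apiKey || !networkId) {
    throw new Error("ARIBA_API_KEY and ARIBA_NETWORK_ID must both be set");
  }
  return {
    method: "GET",
    headers: {
      APIKey: apiKey,
      "X-ARIBA-NETWORK-ID": networkId,
      Accept: "application/json",
    },
  };
}
```

Keeping the credentials in the environment rather than in source code avoids committing them to the Git repository.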

Step 2: CAP Node.js extractor application implementation, deployment, and testing

In this step, we harness the capabilities of SAP Business Technology Platform (BTP) and a CAP Node.js application. We create a data model based on the API fields, establish a service model for OData endpoint provisioning, and develop the application logic for reading API data, parsing it, and writing it to SAP HANA Cloud. Please refer to the blog Develop a CAP Node.js App Using SAP Business Application Studio, which discusses the steps necessary for this project.

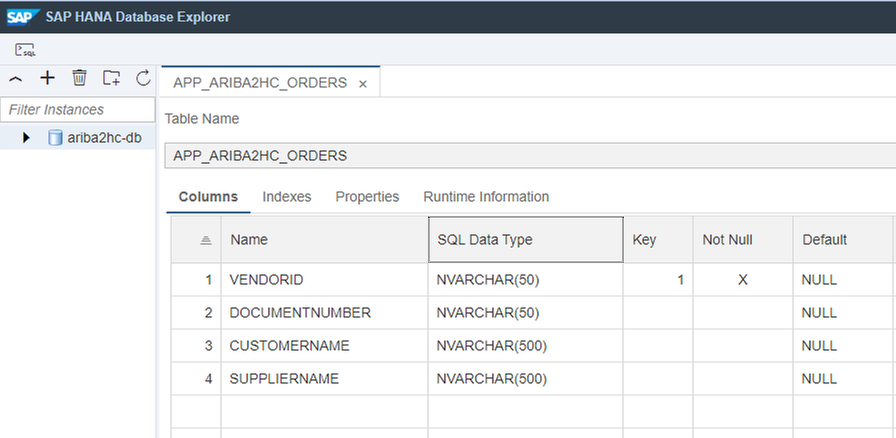

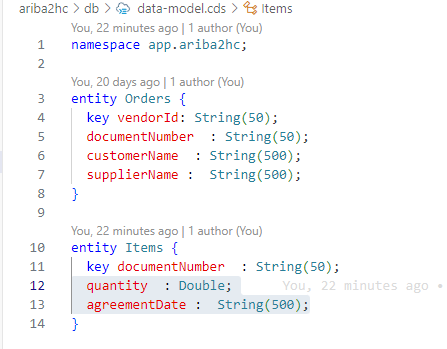

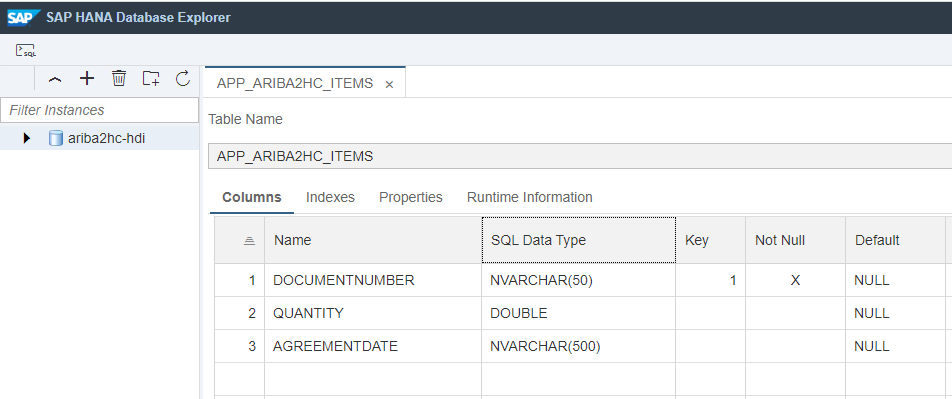

- Using the SAP Business Application Studio for CAP application, create the data model based on the fields identified from the API. This will serve as the data model to be persisted in the HANA database.

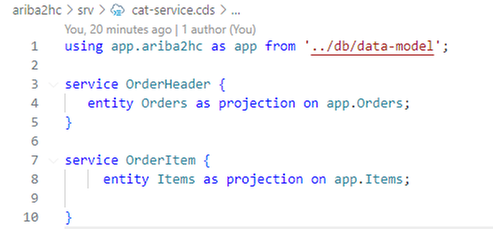

- Next create the service model based on the data model. The service model will serve as the OData endpoint for data provisioning from the CAP application.

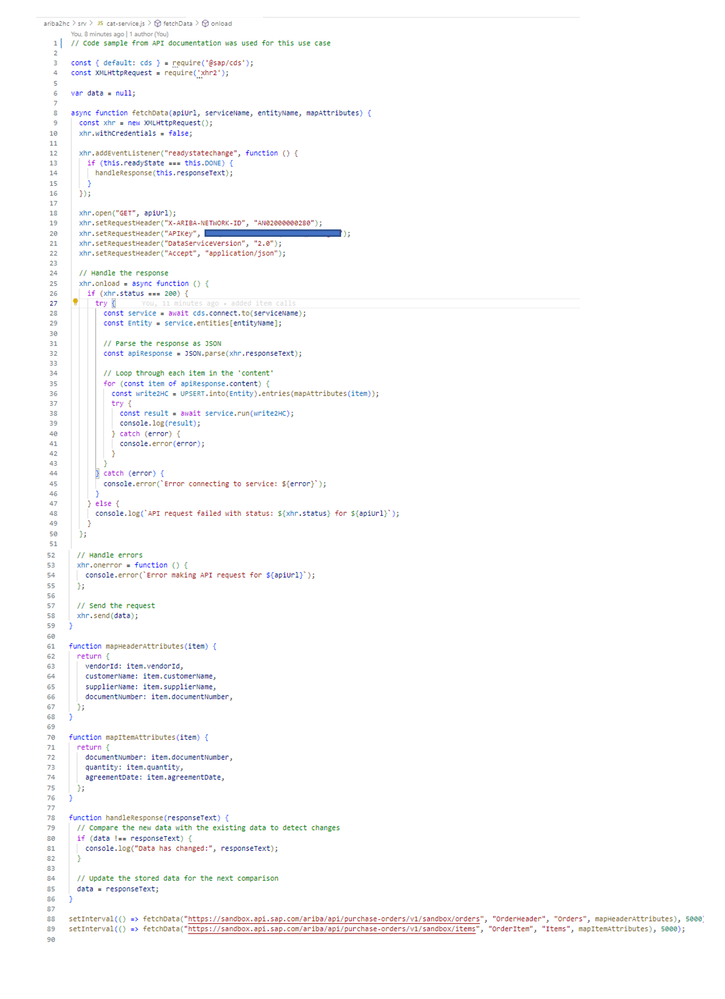

- The CAP application needs the application logic to read the API data, parse it, and write it to HANA Cloud. All these steps are implemented in the cat-service.js script. CAP provides out-of-the-box HANA Cloud persistency, which can also be configured manually by running the command “cds add hana” in the terminal.

In this implementation, data persistence is achieved through the usage of SAP Cloud Application Programming Model (CAP) and the cds module. Here, cds.connect.to(serviceName) connects to the CAP service (serviceName), and UPSERT.into(Entity) inserts data into the specified entity (Entity). This operation persists the data into the underlying database associated with the CAP service.
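The parsing step that precedes the UPSERT could look roughly like the sketch below, which flattens an API response into rows matching the fields identified in Step 1. The response shape (a `content` array) and all property names (`documentNumber`, `vendorId`, `supplierName`, `quantity`, `poAmount.amount`) are assumptions for illustration and need to be aligned with the actual API payload and the CDS data model.

```javascript
// Minimal sketch: map an API response to flat rows for persistence.
// ASSUMPTION: the payload has a `content` array and the property names below;
// both are hypothetical and must be matched to the real API and data model.
function toOrderRows(apiResponse) {
  const orders = Array.isArray(apiResponse?.content) ? apiResponse.content : [];
  return orders.map((order) => ({
    documentNumber: String(order.documentNumber),
    vendorId: order.vendorId ?? null,
    supplierName: order.supplierName ?? null,
    quantity: Number(order.quantity ?? 0),
    poAmount: Number(order.poAmount?.amount ?? 0),
  }));
}

// Inside the CAP service, the resulting rows could then be handed to the
// UPSERT described above, for example: await UPSERT.into(Entity).entries(rows)
```

Normalizing types here (strings for keys, numbers for amounts) keeps the UPSERT keyed on a stable document number, so repeated loads update existing rows instead of duplicating them.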

The data synchronization process is automated with the setInterval function: fetchData is called at a regular interval (every 5 seconds), so the data is periodically fetched from the specified API endpoints and compared for changes.

The provided implementation fetches the entire content from the API endpoints every time the fetchData function is called. The comparison between the fetched data and the previously stored data (data) is done to identify changes.
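The polling and comparison described above can be sketched as follows. This is not the code from cat-service.js; `fetchData` and `persist` are hypothetical async callbacks standing in for the API call and the database write, and the comparison uses a simple JSON serialization, which is sensitive to property order.

```javascript
// Returns true when the freshly fetched payload differs from the last one seen.
function hasChanged(previous, current) {
  return JSON.stringify(previous) !== JSON.stringify(current);
}

// Builds a single polling step that remembers the last payload it persisted.
// fetchData and persist are hypothetical async callbacks supplied by the caller.
function createPollStep(fetchData, persist) {
  let lastSeen = null;
  return async function pollOnce() {
    const current = await fetchData();
    if (!hasChanged(lastSeen, current)) return false; // nothing new, skip the write
    await persist(current);
    lastSeen = current;
    return true;
  };
}

// Schedules the step every intervalMs (5 seconds by default, as in the text).
// Returns the timer handle so the caller can stop it with clearInterval.
function startPolling(pollOnce, intervalMs = 5000) {
  return setInterval(() => {
    pollOnce().catch((err) => console.error("poll failed:", err.message));
  }, intervalMs);
}
```

With a 5-second interval, a slow API call can overlap with the next tick, so a production version would likely guard against concurrent runs or use a longer interval.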

Different APIs handle deltas in different ways, but in general a delta fetch can be achieved by filtering the request by date.

For example:

Adding startDate as a filter condition will fetch data from the source system for a period of 31 days from the startDate. This startDate can be configured to only bring daily, weekly, or monthly changes.
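A minimal sketch of such a date filter is shown below. The base URL is a placeholder, and the date format (`YYYY-MM-DD`) is an assumption; the format the API actually expects for `startDate` should be taken from its documentation.

```javascript
// Minimal sketch: append a startDate filter that reaches `daysBack` days into the past.
// ASSUMPTION: the API accepts startDate as YYYY-MM-DD; verify against the API reference.
function buildDeltaUrl(baseUrl, daysBack, now = new Date()) {
  const msPerDay = 24 * 60 * 60 * 1000;
  const start = new Date(now.getTime() - daysBack * msPerDay);
  const url = new URL(baseUrl);
  url.searchParams.set("startDate", start.toISOString().slice(0, 10));
  return url.toString();
}

// Example with a placeholder host: a weekly delta
// buildDeltaUrl("https://example.invalid/orders", 7)
```

Setting `daysBack` to 1, 7, or 30 corresponds to the daily, weekly, or monthly delta mentioned above.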

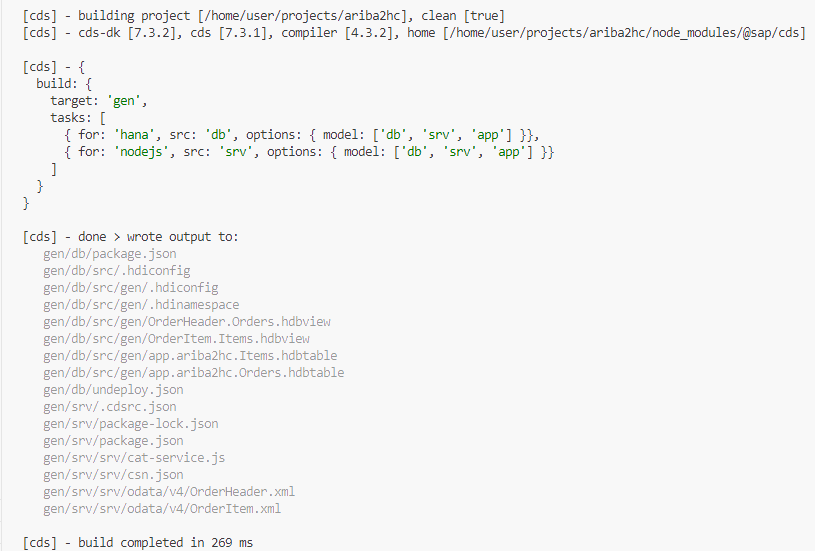

- The application is now almost ready for testing. Before testing, it needs all the required libraries and a connection to HANA Cloud. Run the command “npm install” to install the libraries into the project folder; the application needs the @sap/cds package to run and deploy. Then execute the command “cds build”, which builds the application locally and generates the database artifacts, so that the application is ready for deployment. Finally, log in to the Cloud Foundry account and check that the HANA Cloud service is up and running.

- Run the command “cds deploy” to deploy the generated artifacts. This creates the HDI container and the HANA tables required to test the application on the local server.

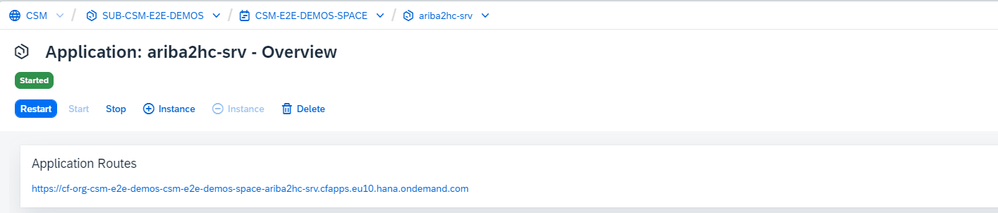

- To deploy the application to the SAP BTP account, create a multitarget application archive (“mtar”) of the solution by running the command “mbt build”. After a successful build, deploy the application by running the command “cf deploy <path to .mtar>”.

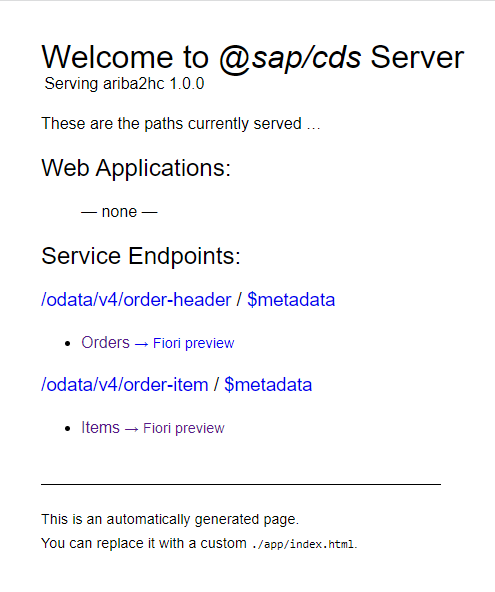

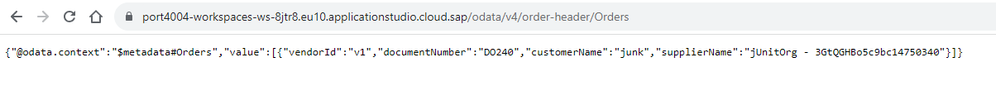

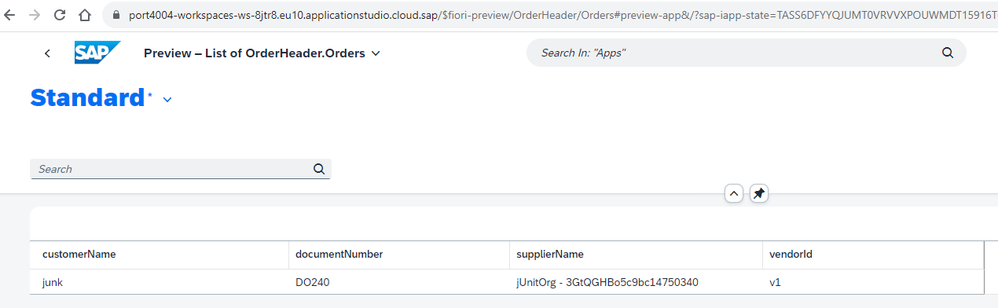

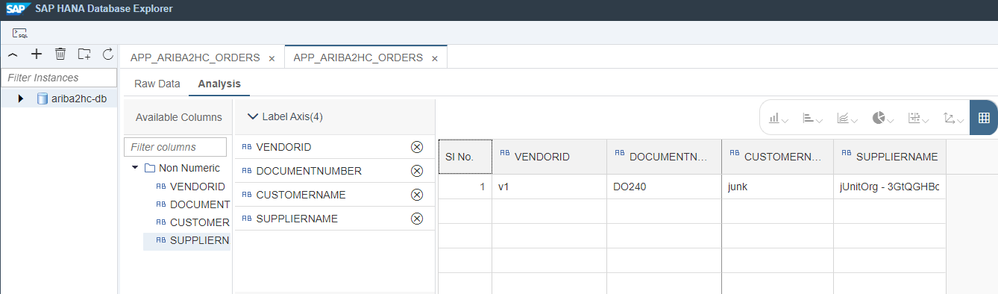

- After successful deployment, we can run the application locally by executing the command “cds watch --profile hybrid”. The API response is written to the HANA table. Clicking Orders or Fiori Preview shows the same data provisioned as OData and in the Fiori preview, respectively. The application can also be launched from its application URL in the SAP BTP account. Data persistency can be checked in SAP HANA Database Explorer by adding the HDI instance to it.

Scenario A: Leveraging SAP BTP-based HANA Cloud as a sidecar Data persistency layer.

Note: This is the preferred approach if the customer data needs to be persisted in a HANA Cloud instance that runs in a different data center from SAP Datasphere or SAP Analytics Cloud.

After the successful deployment of the CAP application with the generated artifacts, the HANA Deployment Infrastructure (HDI) container is available for integration with SAP Datasphere.

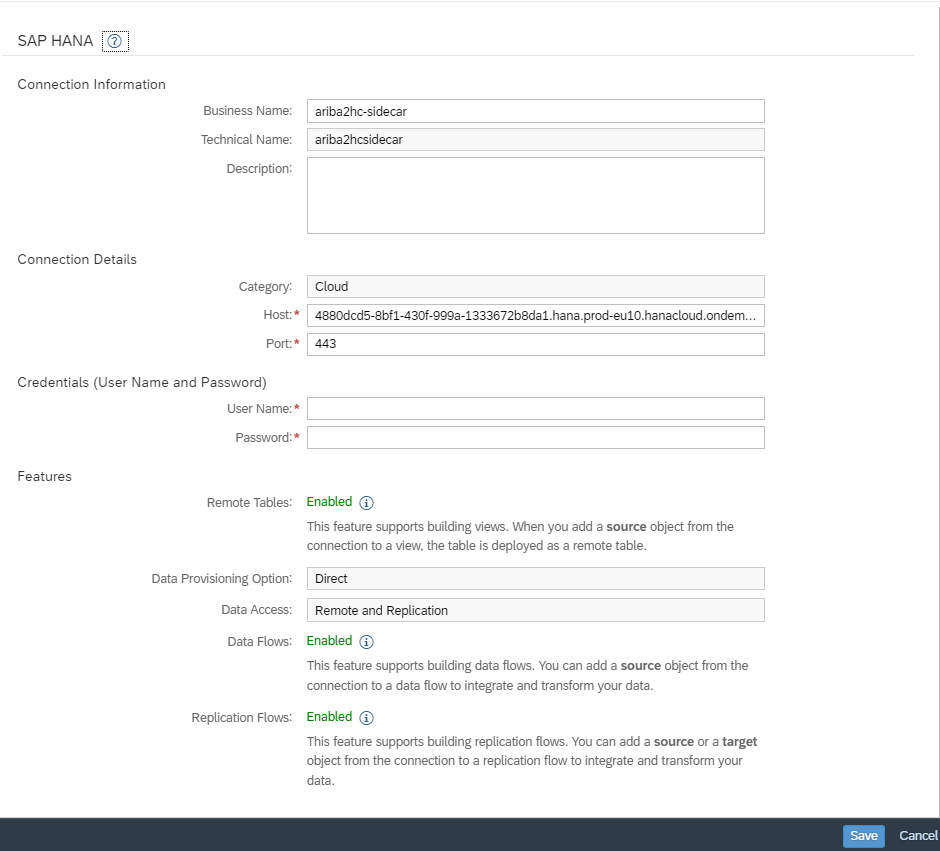

- Log in to SAP Datasphere and create a new connection of type SAP HANA. Enter the runtime user and password from the HDI container key in the BTP cockpit. Save the connection details as shown below and validate the connection.
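An HDI container service key typically carries both a runtime user (`user`, `password`) and a design-time user (`hdi_user`, `hdi_password`). As a small sketch, the helper below picks out only the runtime values needed for the connection dialog; the function name is hypothetical, and the key should be copied from the BTP cockpit rather than stored in code.

```javascript
// Minimal sketch: extract the runtime connection values from an HDI service key.
// The design-time credentials (hdi_user / hdi_password) are deliberately ignored.
function connectionFromHdiKey(key) {
  const required = ["host", "port", "user", "password"];
  const missing = required.filter((field) => !key[field]);
  if (missing.length > 0) {
    throw new Error(`HDI key is missing: ${missing.join(", ")}`);
  }
  return {
    host: key.host,
    port: Number(key.port),
    user: key.user, // runtime user, as required by the connection
    password: key.password,
  };
}
```

Failing early on a missing field makes it easier to spot a key that was copied incompletely from the cockpit.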

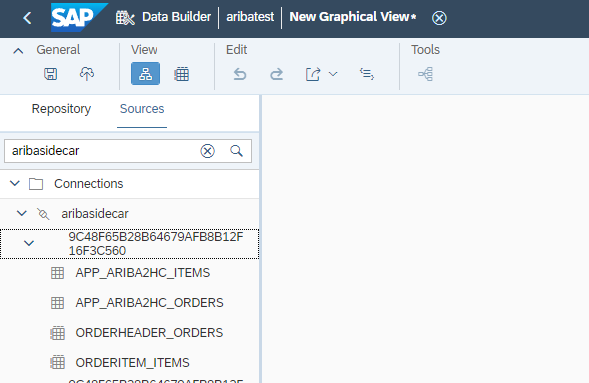

- Navigate to the Data Builder and create a new graphical view. The newly created tables are available under “Sources -> Connections”.

Scenario B: Leveraging SAP HANA Cloud underneath SAP Datasphere as a data persistency layer.

Note: This is the preferred approach if the customer data can be persisted in the HANA Cloud underlying SAP Datasphere.

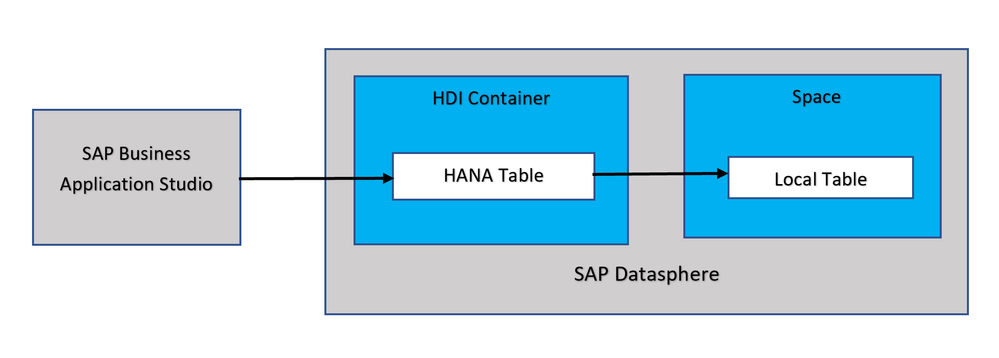

In this scenario, the CAP application performs the ETL (Extract, Transform, Load) task to load the SAP Ariba data into the Open SQL Schema/HDI Container table, which is then used in the SAP Datasphere for data modeling.

Pre-requisites:

The SAP Datasphere tenant and SAP Business Technology Platform organization and space must be in the same data center (for example, eu10, us10). The Datasphere space must be provisioned for access from SAP BTP and Business Application Studio.

Please refer to the following blogs and the help document for more details about the steps necessary for this.

SAP Datawarehouse Cloud - Hybrid - access to SAP HANA for SQL data warehousing

Access the SAP HANA Cloud database underneath SAP Datawarehouse Cloud

Prepare Your HDI Project for Exchanging Data with Your Space

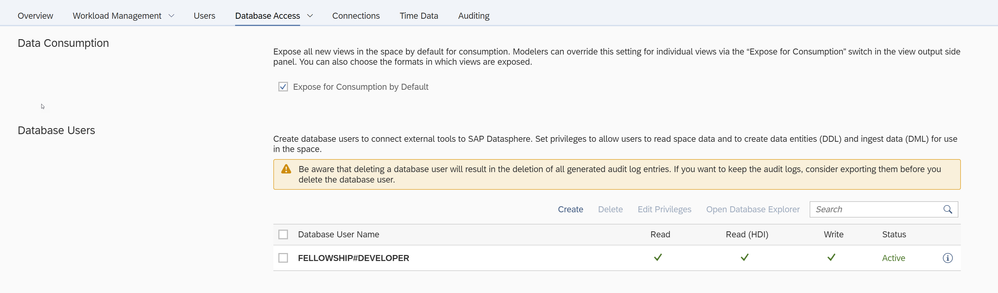

- Create a DB user in the Datasphere space which has HDI (HANA Deployment Infrastructure) consumption rights.

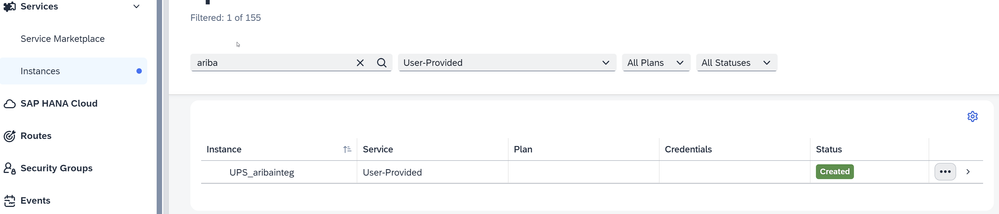

- Create a user-provided service (UPS) in the BTP space. The UPS uses the DB user credentials.

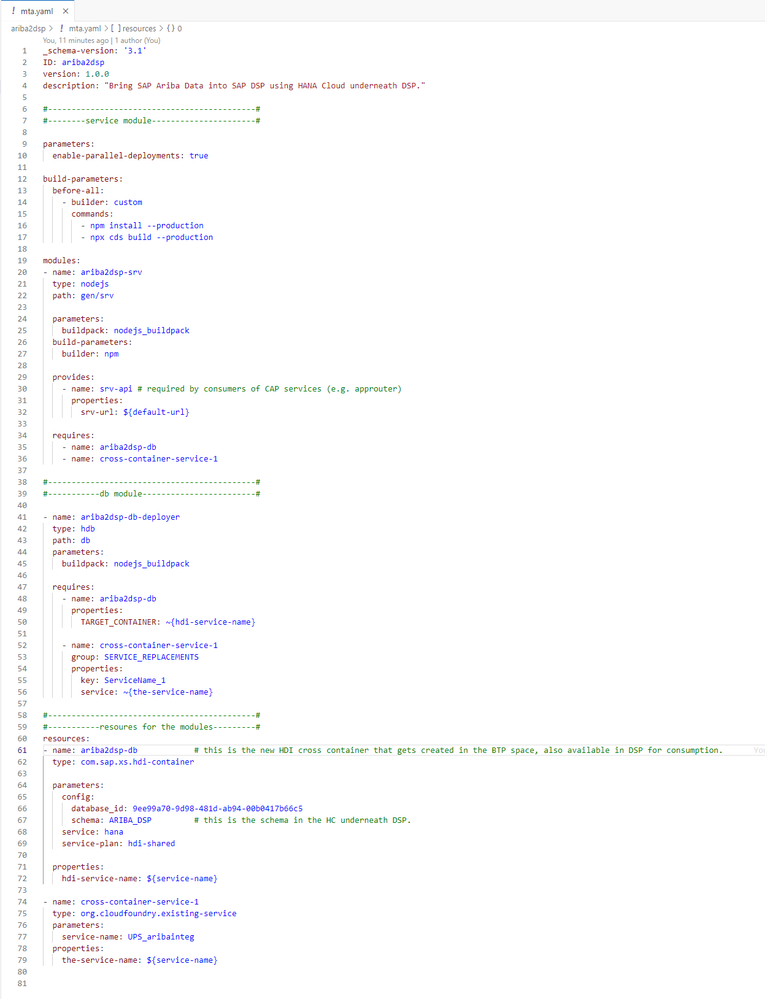

- Modify the mta.yaml to use the UPS and the HANA Cloud of Datasphere. Here the db module looks for a cross-container service, ariba2dsp-db, which is provisioned by the UPS connected to the Datasphere space.

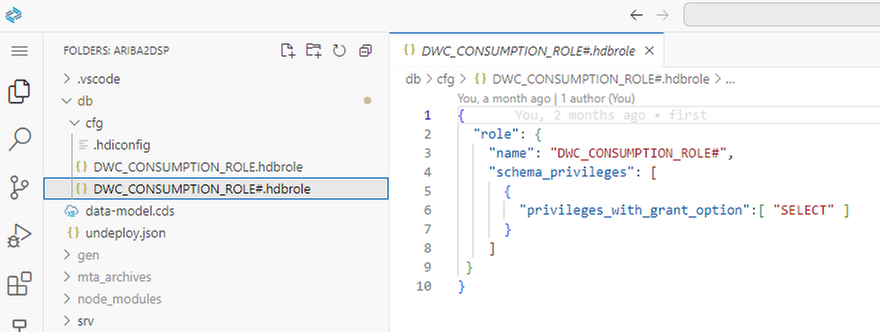

- To access the Open SQL Schema/HDI container “ARIBA_DSP” objects in the SAP Datasphere space, create the “DWC_CONSUMPTION_ROLE” as shown below

- The next step is to build and deploy the application, either with “cds build” and “cds deploy” or with “mbt build” and “cf deploy <path to .mtar>”. Make sure to connect to the BTP Cloud Foundry instance and space that is mapped to the SAP Datasphere HANA Cloud instance.

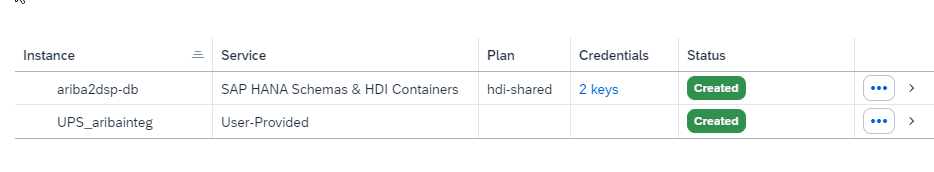

The instances thus created in the BTP space would look as below:

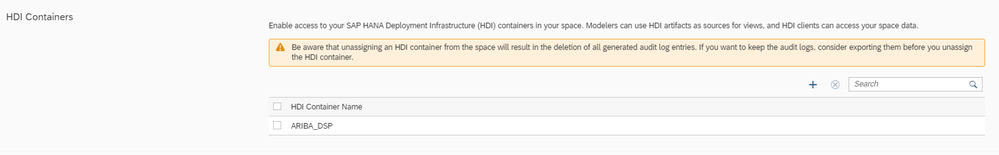

- The Open SQL Schema/HDI container thus created needs to be added to the SAP Datasphere space.

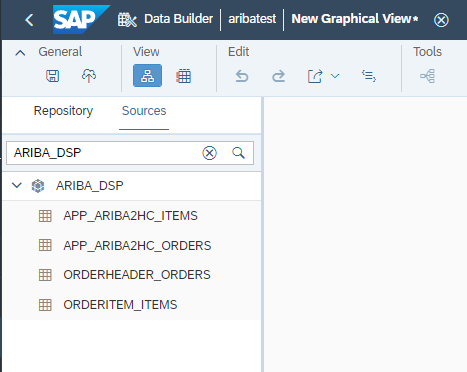

- Navigate to the Data Builder and create a new graphical view. The newly created tables are available under “Sources -> Schema”.

This scenario provides a simplified landscape and is free from the release cycle of HANA Cloud. Because the underlying HANA Cloud can be used together with BTP services, BTP features become available on top of this already simplified integration, for example filtering or massaging the persisted data, or running ML models on it. In addition, the native integration with SAP Analytics Cloud works seamlessly with the data models exposed from SAP Datasphere.

Step 3: Data modeling in SAP Datasphere and consumption in SAP Analytics Cloud.

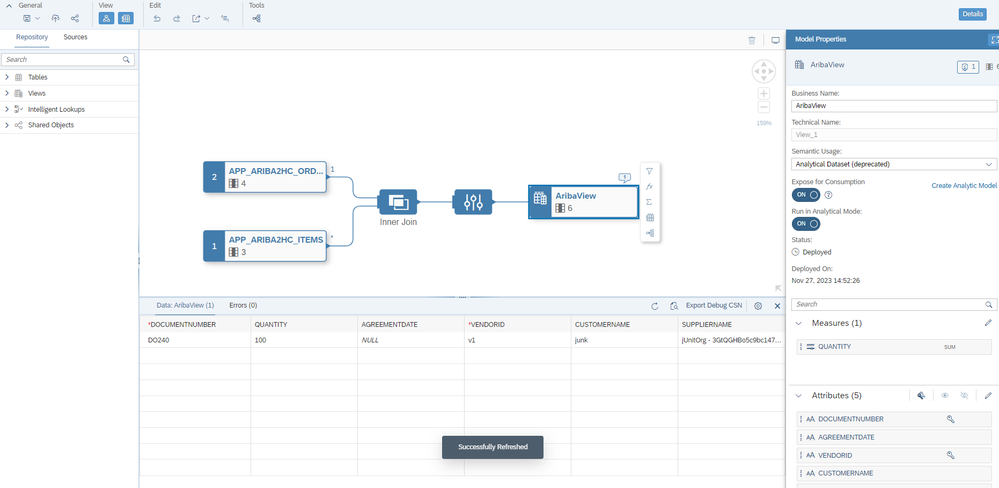

In this step, the HDI container tables created in either scenario are used to model graphical views and analytic models, which are exposed for consumption in SAP Analytics Cloud.

- In SAP Datasphere, navigate to the Data Builder and create a new graphical view. Select the HANA tables from the sources to create the graphical view. Data can be visualized in SAP Datasphere. Save and deploy to run in analytical mode.

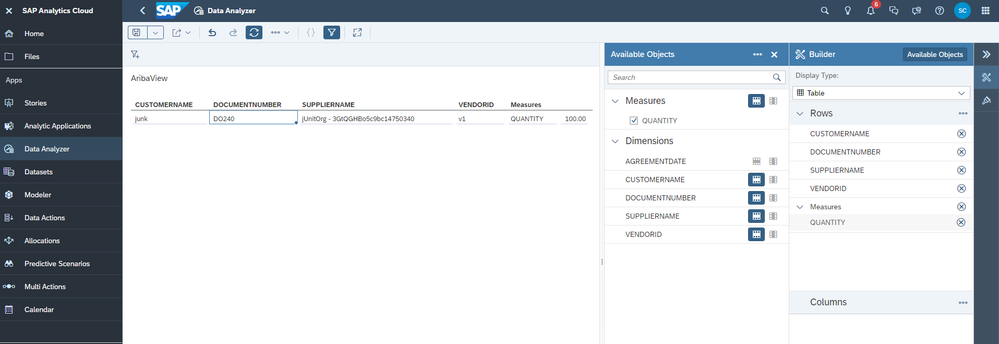

- In SAP Analytics Cloud (SAC), the data can be analyzed and used to build SAC artifacts such as a story or a canvas.

Conclusion: Empowering Data Integration with SAP Solutions

Summing up our exploration, this blog highlights the versatility of the SAP Cloud Application Programming Model on SAP BTP, which offers a robust platform for HANA Cloud project development. Whether using a sidecar HANA Cloud or tapping into the HANA Cloud underlying Datasphere, CAP supports a seamless and efficient data integration process. This is one of many ways of bringing data into Datasphere. Its benefits include custom application logic for data handling (parsing, filtering, and so on) and control over source system load through periodic execution of API calls. Using SAP Business Application Studio (BAS) has further benefits, such as native support for HANA Cloud integration and application deployment, and source control via Git integration.

Hope you liked the use case showing the data integration capabilities of the SAP solution and tools. Below are the sample code repositories from this implementation. Thank you.

GitHub Repository for Scenario A Code

GitHub Repository for Scenario B Code

Credits:

I would like to thank Stefan Hoffmann and Axel Meier from the HANA Database & Analytics Cross Product Management team for their valuable guidance in completing this implementation.

References:

Develop a CAP Node.js App Using SAP Business Application Studio

Combine CAP with SAP HANA Cloud to Create Full-Stack Applications

SAP Datawarehouse Cloud - Hybrid - access to SAP HANA for SQL data warehousing

Access the SAP HANA Cloud database underneath SAP Datawarehouse Cloud

Prepare Your HDI Project for Exchanging Data with Your Space
