Boosting Benchmarking for Reliable Business AI

SAP Community, Technology Blogs by SAP

This blog post is part of a series that dives into various aspects of SAP’s approach to generative AI and its technical underpinnings.

In the previous blog post of this series, we introduced the SAP generative AI hub in SAP AI Core. In this blog post, we discuss another significant aspect: benchmarking AI models.

Read the first blog post of the series.

Introduction

At SAP, we are building business AI: AI that solves our customers’ business problems and is an integrated part of SAP’s applications and platforms. By integrating this technology into our applications, we enable our customers to achieve relevant outcomes with direct business value (Janasz et al. 2021). While generative AI (GenAI) can create great value for companies, applying it to business problems comes with high requirements for correctness and reliability.

The number of generative AI use cases developed at SAP is growing quickly, and so is the number of available AI models, both commercial and open source. We therefore need a systematic approach for selecting the most suitable model, answering the question “What is the best model?” for each particular use case.

In our previous blog posts, we discussed how SAP's generative AI hub in SAP AI Core lets developers access the best AI models from our cloud-technology partners, such as Microsoft Azure, from leading AI startups, and from the open-source community, all in an SAP environment. In this third blog post of the series, we describe why benchmarking is important for business AI, how it fits into SAP’s AI strategy, and what the current best practices are for benchmarking and testing AI models to maximize business value.

Why benchmarking matters

To state the obvious, customers expect their software to work correctly and reliably. Rigorous testing is therefore part of any professional software development project, and it becomes even more important when the software is used to make important business decisions. Customers have similarly high expectations regarding the correctness of any AI feature. Effective testing helps identify biases, inaccuracies, and unexpected behaviors, and helps ensure that the models align with ethical standards and user expectations.

AI testing often uses collections of test samples, typically spanning different tasks, that are organized into standardized benchmarks. Benchmarks allow AI models to be compared and have served as a measure of progress in AI. Initiatives such as Stanford’s Holistic Evaluation of Language Models (HELM) or the Hugging Face Open LLM Leaderboard compare AI models across multiple tasks and datasets.

Compared to traditional software testing, testing AI models, particularly large language models (LLMs), is still a less mature discipline. Conventional software testing has established methodologies, such as unit testing, and the behavior of the software is largely predictable and deterministic because it was programmed by a human developer. AI models, by contrast, resemble 'black boxes': they are trained rather than explicitly programmed, and their inner workings are hard to understand, even for AI experts. This opacity makes it difficult to understand the causes behind outputs, which complicates debugging and improvement. Furthermore, the vast and varied nature of language models complicates the creation of comprehensive test sets: it is nearly impossible to predict all the ways users might interact with the model, and the space of potential outputs is nearly unbounded.

How are models currently being used?

General-purpose large language models are flexible and have a wide range of applications. However, for use cases that require specialized knowledge, these models might need fine-tuning or retrieval-augmented generation (RAG) to improve their accuracy and reliability. The high cost of fine-tuning may make smaller models the only feasible choice, while RAG systems may require models with larger context windows.
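To see why RAG pushes toward larger context windows, recall that the retrieved passages are placed into the prompt next to the user's question, so everything has to fit into the model's context window. The sketch below greedily packs ranked passages into that budget. It is a minimal illustration under stated assumptions: token counts are approximated by whitespace-separated words (real tokenizers count differently), and `pack_context`, the window sizes, and the example passages are hypothetical.

```python
from typing import List


def approx_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer (assumption): count whitespace-separated words.
    return len(text.split())


def pack_context(question: str, passages: List[str], context_window: int,
                 reserved_for_answer: int) -> List[str]:
    """Keep ranked passages that fit the remaining budget, skipping any that would overflow.

    `passages` is assumed to be sorted by retrieval relevance, best first.
    """
    budget = context_window - reserved_for_answer - approx_tokens(question)
    selected: List[str] = []
    for passage in passages:
        cost = approx_tokens(passage)
        if cost <= budget:
            selected.append(passage)
            budget -= cost
    return selected


if __name__ == "__main__":
    passages = ["word " * 300] * 3  # three hypothetical 300-word passages
    question = "What is the payment term of invoice 4711?"
    # A small window fits fewer retrieved passages than a large one.
    print(len(pack_context(question, passages, 1_000, 200)))  # 2
    print(len(pack_context(question, passages, 8_000, 200)))  # 3
```

Greedy packing is only one simple policy; the point is that with a small window, relevant retrieved material has to be dropped.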

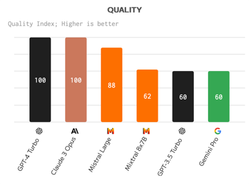

For any large language model used in a user application, there is a balance to strike between the responsiveness of the model and the quality of its output. For instance, models like OpenAI's GPT-4 are known for their excellent output quality but are limited by high latency and cost. Other models, such as Gemini Pro, Mixtral 8x7B, GPT-3.5 Turbo, and Llama 2 Chat 70B, provide a good mix of quality, speed, and cost-effectiveness. While these models may not perform as well as GPT-4, they still outperform many other models on the market.

Figure 1: Quality, Performance & Price Analysis of LLMs (from Artificial Analysis)

Therefore, choosing the right large language model requires a careful evaluation of the trade-offs between quality, speed, and cost. This underscores the importance of comprehensive benchmarking when assessing different models.
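One simple way to make this trade-off explicit is to treat latency and cost as hard limits and then pick the highest-quality model that satisfies them. The sketch assumes you have already measured a quality score, a median latency, and a price for each candidate; the `Candidate` fields, the limits, and the model names are hypothetical placeholders, not figures from the analysis in Figure 1.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    quality: float            # e.g. a benchmark score in [0, 1], higher is better
    latency_s: float          # median response time in seconds
    usd_per_1k_tokens: float  # price per 1,000 tokens


def pick_model(candidates: List[Candidate], max_latency_s: float,
               max_usd_per_1k_tokens: float) -> Optional[Candidate]:
    """Return the highest-quality candidate that meets both hard limits, or None."""
    feasible = [
        c for c in candidates
        if c.latency_s <= max_latency_s and c.usd_per_1k_tokens <= max_usd_per_1k_tokens
    ]
    return max(feasible, key=lambda c: c.quality, default=None)


if __name__ == "__main__":
    # Hypothetical measurements for three anonymous models.
    models = [
        Candidate("model-a", quality=0.90, latency_s=6.0, usd_per_1k_tokens=0.060),
        Candidate("model-b", quality=0.80, latency_s=1.5, usd_per_1k_tokens=0.002),
        Candidate("model-c", quality=0.70, latency_s=0.8, usd_per_1k_tokens=0.001),
    ]
    print(pick_model(models, max_latency_s=2.0, max_usd_per_1k_tokens=0.01).name)  # model-b
    print(pick_model(models, max_latency_s=10.0, max_usd_per_1k_tokens=0.1).name)  # model-a
```

Hard limits keep the selection easy to explain; a weighted score is an alternative when no constraint is strictly binding.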

From “eyeballing” to supervised evaluation

Existing AI benchmarks focus on academic datasets, typically created from public sources such as Wikipedia. They do not yet sufficiently cover enterprise use cases, which require data from specific business domains[1]. Additional testing is therefore required. Let us look at the testing process for generative AI in more detail.

Figure 2: Three approaches to AI model evaluation

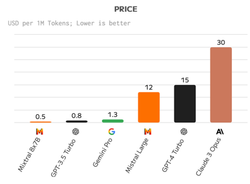

Testing and evaluating AI models is an iterative process. When teams ideate on new generative AI use cases, they typically start by experimenting with various AI models in a “playground” environment, such as the generative AI hub in SAP AI Launchpad (read more about it in blog post 2 of this series, or this blog post). This environment allows rapid prototyping via prompt engineering, and SAP AI Launchpad also allows sending prompts to different models for easy comparison. Compared to traditional AI models, generative AI models allow fast prototyping and a first feasibility check without the significant upfront investment of creating training and test datasets.

Figure 3: Prompt Editor in SAP AI Launchpad

However, simply “eyeballing” a few prompt-answer pairs is not sufficient beyond the prototyping stage. Developers need more robust and reproducible tests. These tests should be automated so that they can run as part of testing and release pipelines. Automated testing is also important for regression testing when the application, the input data, or the underlying model changes. Finally, these methods should cover diverse use case scenarios and languages, informing technology choices and application improvements.
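A minimal form of such an automated regression test scores every test case, aggregates the results, and fails the pipeline when the score drops below a previously accepted baseline by more than a tolerance. The sketch assumes a generic `generate` callable standing in for whichever model endpoint is under test, plus a simple exact-match scorer; `regression_check`, the tolerance, and the toy test cases are hypothetical.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (prompt, expected answer)


def exact_match(prediction: str, expected: str) -> float:
    # Ignore surrounding whitespace and case before comparing.
    return float(prediction.strip().lower() == expected.strip().lower())


def evaluate(generate: Callable[[str], str], cases: List[Case]) -> float:
    """Mean exact-match score of `generate` over all test cases."""
    scores = [exact_match(generate(prompt), expected) for prompt, expected in cases]
    return sum(scores) / len(scores)


def regression_check(generate: Callable[[str], str], cases: List[Case],
                     baseline: float, tolerance: float = 0.02) -> Tuple[bool, float]:
    """Pass if the new score is no worse than the baseline minus the tolerance."""
    score = evaluate(generate, cases)
    return score >= baseline - tolerance, score


if __name__ == "__main__":
    cases = [("Capital of France?", "Paris"), ("2 + 2 =", "4"), ("Color of the sky?", "blue")]
    # Stub model standing in for a real endpoint.
    answers = {"Capital of France?": "paris", "2 + 2 =": "4", "Color of the sky?": "grey"}
    passed, score = regression_check(lambda p: answers[p], cases, baseline=0.9)
    print(passed, round(score, 3))  # False 0.667
    # In a CI pipeline, a non-zero exit code would fail the build:
    # raise SystemExit(0 if passed else 1)
```

Running the same check after every change to the prompt, the input data, or the model version turns the evaluation into a regression test.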

The second stage in benchmarking models is using a large language model as a judge. This approach requires a test set of inputs but no ground-truth references, i.e., no expected outputs or labels. Instead, it relies on the ability of powerful large language models to score outputs: one AI model scores another. Using language models as judges sidesteps the expensive creation of human references, making it an efficient way to scale evaluation beyond the eyeballing step. While research shows a high correlation between LLM-judge scores and human scores (Liu et al. 2023), a downside is the potential for bias or errors in the automatic judgments. Thus, the evaluation results should not be trusted blindly but should be validated with additional testing, either with a supervised test set, which we describe next, or with human evaluation.
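The LLM-as-a-judge pattern can be sketched in two steps: wrap the input and the candidate answer in a grading prompt, then parse a numeric score from the judge's reply. No reference answer is needed. The prompt wording, the 1 to 5 scale, the regex-based parsing, and the `judge` callable (a stand-in for a call to a strong model through whatever client you use) are all assumptions for illustration, not a prescribed SAP interface.

```python
import re
from typing import Callable, List, Optional

# Hypothetical grading prompt; wording and scale are illustrative assumptions.
JUDGE_TEMPLATE = (
    "You are grading an AI assistant's answer.\n"
    "Question:\n{question}\n\n"
    "Answer:\n{answer}\n\n"
    "Rate the answer's correctness and helpfulness from 1 (poor) to 5 (excellent).\n"
    "Reply with a line 'Score: <number>' followed by a short justification."
)


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score after 'Score:'; None if it is missing or out of range."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge_answers(judge: Callable[[str], str], questions: List[str],
                  answers: List[str]) -> float:
    """Average judge score over all answers whose judgment could be parsed."""
    scores: List[int] = []
    for question, answer in zip(questions, answers):
        reply = judge(JUDGE_TEMPLATE.format(question=question, answer=answer))
        score = parse_score(reply)
        if score is not None:  # unparseable replies are skipped, not counted as 0
            scores.append(score)
    return sum(scores) / len(scores) if scores else float("nan")


if __name__ == "__main__":
    # Stub judge for illustration only; a real setup would call a strong LLM here.
    def fake_judge(prompt: str) -> str:
        return "Score: 4\nCorrect." if "Paris" in prompt else "Score: 2\nWrong city."

    print(judge_answers(fake_judge, ["Capital of France?", "Capital of Italy?"],
                        ["Paris", "Milan"]))  # 3.0
```

Because the judge itself can be biased, a sample of its scores should still be checked against a supervised test set or human ratings.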

The third stage is supervised evaluation, which uses a test set of model inputs and expected outputs, usually provided by human domain experts to ensure they are accurate and reliable. These test sets should cover a wide range of expected inputs, including edge cases and hard examples. The model outputs can then be compared to the expected outputs automatically, using a metric that measures the agreement between model outputs and human references and thus quantifies the model’s performance. Examples of metrics are statistical measures, like accuracy, precision, and recall, or AI-specific metrics, like the BLEU (Bilingual Evaluation Understudy) score, which is commonly used in machine translation evaluation to measure the similarity of generated translations to human reference translations.
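To make these metrics concrete, the sketch below computes accuracy, precision, and recall for a binary labeling task, plus a simplified sentence-level BLEU score (clipped n-gram precisions up to 4-grams, geometric mean, brevity penalty, whitespace tokenization, no smoothing). It is an illustration written for this post, not a reference implementation; for real evaluations an established implementation such as sacreBLEU is preferable, because tokenization and smoothing choices change the numbers.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def accuracy_precision_recall(y_true: Sequence[int],
                              y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Binary metrics where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Simplified single-reference BLEU without smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # any zero precision makes unsmoothed BLEU zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    print(accuracy_precision_recall([1, 0, 1, 1], [1, 1, 0, 1]))
    # (0.5, 0.6666666666666666, 0.6666666666666666)
    print(sentence_bleu("the cat sat on the mat", "the cat sat on the mat"))  # 1.0
    print(sentence_bleu("the cat", "the cat sat on the mat"))  # 0.0
```

The last example shows why unsmoothed sentence-level BLEU is harsh on short outputs: a two-word candidate has no 3-grams, so its score collapses to zero.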

Supervised evaluation is the most reliable way to benchmark AI models, but it is also the most expensive. It therefore makes sense to start with simple and cheap prompt engineering, then extend the tests using language models as a judge, and finally move to supervised evaluation. An overview of these evaluation methods is depicted in Figure 4.

Figure 4: Ways of evaluating LLMs

Evaluation in practice: document processing with LLMs

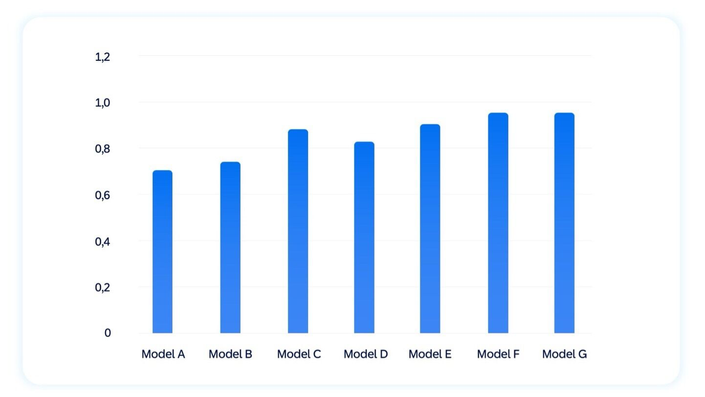

Let us illustrate this with an example AI use case at SAP. Document Information Extraction is an SAP AI service that extracts information from business documents, such as invoices or purchase orders. The service was recently enhanced with large language model capabilities, which greatly increases its flexibility to process more document types and support more languages. After an initial prototyping phase that demonstrated the feasibility of using large language models, the team conducted a supervised evaluation of different large language models on a subset of header fields. We report the extraction accuracy of each model, prompted in a zero-shot fashion, relative to a fully supervised baseline. Figure 5 shows the anonymized results. Some of the models achieve high extraction accuracy, rivaling supervised models. But the results also show that extraction accuracy varies between models, illustrating the importance of benchmarking. The main business value of large language models in this scenario is their flexibility: they allow users to process any type of document in almost any language (although the exact language coverage differs between models). It is important to note that large language models are several orders of magnitude larger and more expensive than the supervised model. All these factors need to be considered when bringing AI into production.

Figure 5: Benchmarking results for information extraction from documents using zero-shot prompting on a subset of header fields. Results are relative to a supervised baseline. Model names are anonymized.
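A relative score like the one in this comparison could be computed along the following lines: check each extracted header field by exact match against the ground truth, average over all fields and documents, and divide by the supervised baseline's accuracy on the same documents. This is a sketch of one plausible computation, not the exact protocol used by the Document Information Extraction team; the field names, the normalization, and the sample documents are hypothetical.

```python
from typing import Dict, List

Doc = Dict[str, str]  # header field name -> extracted or true value


def normalize(value: str) -> str:
    # Light normalization so that "  ACME Corp " matches "acme corp".
    return " ".join(value.lower().split())


def field_accuracy(predictions: List[Doc], ground_truth: List[Doc],
                   fields: List[str]) -> float:
    """Share of (document, field) pairs whose extracted value matches exactly."""
    hits = total = 0
    for pred, truth in zip(predictions, ground_truth):
        for field in fields:
            total += 1
            # A field the model did not return counts as a miss.
            hits += normalize(pred.get(field, "")) == normalize(truth[field])
    return hits / total


def relative_to_baseline(model_acc: float, baseline_acc: float) -> float:
    """Model accuracy expressed as a fraction of the supervised baseline's accuracy."""
    return model_acc / baseline_acc


if __name__ == "__main__":
    fields = ["invoice_number", "invoice_date", "total_amount"]
    truth = [{"invoice_number": "4711", "invoice_date": "2024-01-31", "total_amount": "100.00"}]
    llm = [{"invoice_number": "4711", "invoice_date": "31.01.2024", "total_amount": "100.00"}]
    supervised = [dict(truth[0])]
    llm_acc = field_accuracy(llm, truth, fields)          # 2 of 3 fields match
    base_acc = field_accuracy(supervised, truth, fields)  # 3 of 3 fields match
    print(round(relative_to_baseline(llm_acc, base_acc), 3))  # 0.667
```

Exact match is strict: the date in the example is correct but formatted differently, so a real evaluation would likely normalize dates and amounts per field type before comparing.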

Benchmarking for Enterprise AI

To enhance the state of AI benchmarking for enterprise use cases, SAP is teaming up with its academic partners. In 2023, SAP joined the Stanford HAI Corporate Affiliate Program. Under this program, SAP is now starting a collaboration with the Stanford Center for Research on Foundation Models (CRFM) on the Holistic Evaluation of Language Models (HELM) project to study specific enterprise AI use cases.

Conclusion

In this blog post, we outlined the importance and the challenges of benchmarking for business AI. We recommended three stages of evaluation as current best practices, going from quick eyeballing of examples to using language models as a judge and, finally, supervised evaluation. This makes it possible to quickly establish a first feasibility check and then build more robust and reliable tests iteratively. The field of AI benchmarking is still evolving, and we can expect to see more benchmarks and test methodologies in the future covering, for example, AI safety, trustworthy AI, and industry verticals.

References

Tomasz Janasz, Peter Mortensen, Christian Reisswig, Tobias Weller, Maximilian Herrmann, Ivona Crnoja, and Johannes Höhne. "Advancements in ML-enabled intelligent document processing and how to overcome adoption challenges in enterprises." Die Unternehmung 75, no. 3 (2021): 340-358.

Yang Liu, Dan Iter, Yichong Xu, Shuohang Wang, Ruochen Xu, and Chenguang Zhu. "GPTEval: NLG evaluation using GPT-4 with better human alignment." arXiv preprint arXiv:2303.16634 (2023).

[1] But see the recently released Enterprise Scenarios Leaderboard by Patronus, a first benchmark for enterprise use cases.
