- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- BENCHMARKING FUJITSU’S DIGITAL ANNEALER TO SOLVE C...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Quantum Annealing

If you have heard of quantum computing, it may have been in the context of gate-based quantum computing. This concept is akin to the logic gates that form the bedrock of classical computers. However, like many quantum technologies, gate-based quantum computing grapples with substantial constraints, wrestling with significant error rates and scaling limitations.

Enter quantum annealing—a specialized contender for optimization that shares the ambition of gate-based QC and offers some current advantages. The beauty of quantum annealing lies in its higher relative error tolerance. However, much like its gate-based counterpart, maintaining a stable state demands temperatures of the order of millikelvin and requires sophisticated control over the quantum system. This renders it an expensive undertaking and presents challenges in tackling larger, business-relevant problems.

Digital Annealing

In the face of these obstacles, opportunities arise to think creatively and open parallel avenues for progress. From the concepts of quantum computing, quantum annealers emerged, and now, quantum-inspired technology has taken the stage with the advent of digital annealers.

The term "quantum-inspired" distinguishes digital annealers from their truly quantum counterparts. Remarkably, digital annealers are not quantum at all. Instead, they leverage computational methods inspired by the principles of quantum mechanics. While not a "quantum" solution per-se, their existence owes much to the profound work and reflections of quantum scientists.

The collaborative efforts of SAP and Fujitsu aim to harness their combined market strengths to tackle challenges and seize opportunities presented by more quantum-inspired technology. By integrating quantum-inspired technology with SAP's software, both companies stand to benefit from each other's success and expertise, propelling innovation in this dynamic field.

Demystifying the Digital Annealer

Fujitsu's Digital Annealer Unit (DAU) is a hardware accelerator designed for executing parallel operations using a tailored simulated-annealing algorithm. But how does this innovative technology operate? In essence, annealers are problem-solvers that navigate challenges described by an energy function—problems that involve binary input variables and assigning values to all possible configurations of these variables. The ultimate objective of annealing is to pinpoint the variable configuration leading to the lowest function value, a pursuit known as function minimization. Yet, this task is no walk in the park; it is a formidable challenge due to the prevalence of many local minima in functions. As any mountaineer knows, simply descending along a function until reaching the bottom of a valley can easily result in getting stuck.

Digital annealers, however, introduce a clever solution: they enable us to break free from local minima and reassess the situation. To illustrate this concept, envision shaking a marble within an egg carton—a 2D analogy. In this analogy, the egg carton's dimples, akin to local minima, vary in size and depth. Shaking too little might lead to getting stuck in a local minimum, while shaking too vigorously risks overshooting the global minimum. For a more rigorous explanation of optimization we will post a more detailed explanation at a later date.

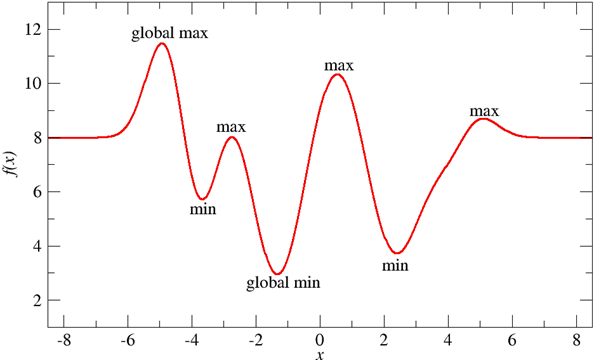

A 1-D Cost Function

Figure 1: Here, we can see a cost function (in this case a 1-D function) with the global minimum at x=-1.5 and several local minima. If we were to use classical methods to find the global minimum, the likelihood of getting stuck in a local minimum is great.

The precise workings and intricacies of the underlying annealing scheme constitute Fujitsu's intellectual property, hidden from the user. Nevertheless, users retain a degree of control over the algorithm's behavior through various parameters exposed in Fujitsu's API. Even the simplest operating scheme involves a single parameter—the time allocated for the annealing task. A larger time window enhances the likelihood of discovering superior solutions. All other variables are automatically configured to ensure the algorithm completes within the specified time. This blend of complexity and user-friendliness empowers individuals to harness the potential of the Digital Annealer without delving into the intricacies of its inner workings.

Quadratic Unconstrained Binary Optimization

The Digital Annealer Unit (DAU) seamlessly processes a Quadratic Unconstrained Binary Optimization (QUBO) problem along with additional metadata. In this mathematical formulation of optimization, known as QUBO, every variable is constrained to binary values—either 0 or 1. Again, if you are seeking a more in-depth understanding of QUBOs, we will post a more comprehensive explanation.

The output from the DAU is a binary string, a representation of the optimal QUBO solution unearthed by the algorithm within the allocated time. In this context, the term "best solution" alludes to the configuration that results in the most favourable cost-function outcome. Typically, the cost function is defined to be minimized, although it can also represent a maximum depending on the specific scenario.

Given that annealing is inherently a probabilistic algorithm, running the same algorithm twice with identical input data may yield different solutions. Consequently, the algorithm is usually executed multiple times, and the final output is the best overall solution derived from any of the runs. This iterative approach accounts for the algorithm's stochastic nature, ensuring that the user receives the most robust and optimal solution for their QUBO problem.

Benchmarking a Novel Optimization Technique (MIPLIB & QPLIB)

In the early stages of our proof of concept, both SAP and Fujitsu collaborated diligently to design, implement, and benchmark a prototype—evaluating the capabilities of the Digital Annealer Unit (DAU). This phase had some generic objectives: Namely to unravel the intricacies of the technology, immerse colleagues in new programming paradigms, prototype standardized optimization tasks tailored for the DAU, and gauge initial outcomes against existing technologies. At the same time, SAP was charting a course to seamlessly integrate the DAU into its products and services.

At the heart of the PoC was the careful selection of problems, a pivotal decision-making process. Given the specialized nature of the DAU, it was imperative to assess where it outshone traditional optimization algorithms. The PoC's maiden trial involved running instances from two crucial libraries— the linear Mixed Integer Programming Library (MIPLIB) and the Quadratic Programming Library (QPLIB)— on the digital annealer. This strategic move provided a broad overview of the DAU's strengths and shed light on potential use cases for the annealer. Very quickly we found that the DAU’s strengths lay in problems of a more complicated, quadratic nature (QPLIB), so these are presented here.

Determining what constitutes "better" in optimization is no simple task. Traditional metrics often revolve around speed, yet the global minimum is not always a guaranteed outcome. Suppose an algorithm lands on the second lowest minimum but does so in a fraction of the time—does that make it "better"? The contextual nuance often muddles benchmarking evaluations. In our project, we opted for a pragmatic approach, focusing on runtime and the relative quality of solutions compared to those achieved by a state-of-the-art classical optimizer. This allowed us to assess the DAU's performance with a balanced perspective, acknowledging that the optimal solution might be a complex interplay of speed and solution quality in the realm of optimization challenges.

Pick and Choose: Problem selection

What were we trying to prove?

The main purpose of this proof of concept was to conduct an initial evaluation of a new optimization technology. The focus was not to thoroughly test every aspect of the technology, but rather to address two important questions:

- Does the technology wield any advantages over existing classical optimizers?

- Can it seamlessly integrate with SAP's products and services?

To evaluate how well the DAU performs, we needed to establish criteria for what constitutes better or worse outcomes. This, as we have stated already, is not straightforward, especially in optimization problems. When assessing algorithms, remember we typically consider two factors: the time it takes to run the algorithm (runtime) and the quality of the result it produces. Comparing two algorithms on the same problem set might reveal that one is faster but yields a lower-quality solution than the other. Determining which algorithm performs better requires a careful analysis.

Fixed time versus fixed quality

To address this challenge, we conducted two types of runs and measurements. In one scenario, we fixed the runtime of the algorithms and measured the quality (objective value) of the solutions they produced. In the other scenario, we fixed the quality by allowing one algorithm to run until it achieved a solution with the same quality as the other, and then measured the runtime required to reach that solution.

We established a relative performance metric by dividing the runtime of an alternative method by the runtime of the DAU. A value above 1 indicates that the DAU is faster than the alternative method, while a value below 1 indicates how much slower the DAU is. For example, a value of x = 0.4 means that the alternative method takes 40 % of the time to find a comparable or better solution. When discussing the average speedup, we divide the mean runtime of the alternative method by the mean runtime of the DAU.

It is important to note that this type of comparison does not consider solutions with a "slightly worse" objective value. In some cases, if an algorithm runs for t seconds and an alternative solution is slightly worse after t seconds but cannot find an equal solution within 100t seconds, we stop the run and simply declare the first algorithm to be 100 times faster (and therefore, 100 times better), without considering the potentially negligible difference in solution quality.

We also defined a relative solution quality metric to compare objective values for fixed runtimes. Comparing objective values is more complex than comparing runtimes. A standard method is to compute the gap between the solutions x and y for the objective function f. A gap of "gap"(x,y) indicates that solution x is gap(x,y) percent better than solution x with respect to y.

Using these metrics, we compared the performance of the DAU with existing optimizers that are considered to be the best general-purpose solvers for the problem classes we are examining.

Rising to the challenge: QPLIB Under the Microscope

As mentioned above at the end of the section “benchmarking a novel optimization technique.” DAU’s strengths were found in QPLIB instances, so we focused on them. The QPLIB is a collection of benchmarking test instances for quadratic-programming problems, which are often used to evaluate the performance of optimization algorithms and solvers. The QPLIB currently contains 319 discrete and 134 continuous instances of distinct characteristics.

The library provides a set of benchmark problems that allow researchers and developers to compare the efficiency and effectiveness of different quadratic programming solvers and algorithms. Having a standardized set of problems to test against makes it easier to objectively evaluate and compare the performance of optimization techniques.

From the available discrete instances, we chose ten. These were selected because they were quadratic and non-convex (i.e. had multiple local minima) in nature, had linear constraints, and could be easily modelled as a QUBO problem. Out of the ten instances chosen, we saw an improvement on the DAU in nine of them. In some cases, this improvement in quality exceeded our arbitrary limit (capped at 100). In only one quadratic case, QPLIB_3380, the Digital Annealer could not achieve the optimum solution.

The Analysis

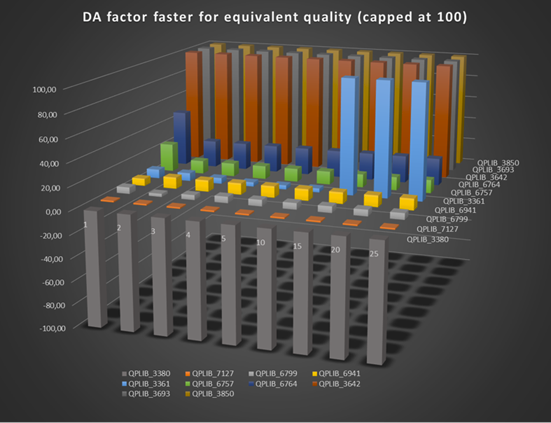

Figure 2: Time to solution (fixed solution quality). The rows (RHS) represent the list of test cases chosen. The numbers 1 – 25 (foreground) represent the digital annealer selected runtime in seconds. The height of the block shows the value of the speed-up “factor.” It is positive if the digital annealer solution was of higher quality than the currently available optimization technique. For case QPLIB_3380, the digital annealer could not achieve the optimum solution. However, in all other cases, the digital annealer showed an improvement on current optimization techniques.

Fixing solution quality, comparing runtimes: Declare victory and withdraw

In our pursuit of fixing solution quality and comparing runtimes, we have reached a notable checkpoint that invites reflection on the path we've taken so far. The outcomes have exceeded our expectations, shedding light on the technology's potential. While the Digital Annealer held its ground among other optimization methods for linear challenges, it truly excelled in handling quadratic problems. This proof-of-concept, a significant step toward SAP's appetite for disruptive optimization, is only the beginning; there is much more to come.

For ten unconstrained problems, the DAU was on average over seventy times faster. All but one QUBO problem were solved to optimality in the first second. The remaining QUBO problem was solved optimally after four seconds.

For constrained problems, we saw comparable results for fifteen of the eighteen instances. For one of the remaining problems, the traditional optimization algorithm found an optimal solution right away and proved itself optimal. For this one instance, the DAU took a minute to find the optimal solution. For two other problems, the DAU could not find a feasible solution, as did the traditional algorithm.

For nine of these 15 instances, the DAU found an optimal solution in just two seconds, while traditional optimizers could only find an optimal solution in two seconds for three of the instances. For the remaining instances, traditional algorithms took eight times as long to find the optimal solution, and in four cases almost 20 times as long.

For six of these 15 instances, for high annealing times (greater than or equal to 15 seconds), traditional optimization cannot match the DAU’s solution quality, even given 100 times the annealing time (where we capped the time, see figure 2). There are a few exceptions due to the probabilistic nature of the DAU. In these cases, the DAU found worse solutions for smaller annealing times.

The mix of specialization and hand-picked use cases

Undoubtedly, we saw significant speed-ups when fixing the solution quality and comparing the runtimes. For half of the instances, we saw at least a 100 times speed-up compared to traditional optimization algorithms. In addition, the DAU was able to solve all the QUBOs to optimality within four seconds, with nine out of ten in just one second. The results above show great potential for the DAU. Specifically for problems where traditional optimizers struggle.

There is no shortage of optimization problems in enterprise (one might speculate that all business endeavours are an optimization of some trade-off between one thing and another). However, the digital annealer seems to have potential in problems of a quadratic nature. This leads us to two constraints, the first being that the use case must have a quadratic dimension and that the quadratic nature must be irreducible, i.e. it cannot be efficiently restated as a linear objective (or at least it must be so difficult to reformulate that it is more efficient just to use the DAU).

Since the data from QPLIB is normalized and without context, we have no information about the nature of represented use cases. This is the next step in SAP’s PoC with Fujitsu.

A successful benchmarking for both SAP and Fujitsu

The successful completion of the first stage of this proof of concept project is a testament to the strong collaboration between SAP and Fujitsu. Both organizations are excited about the potential impact their joint efforts will have for their customers and are committed to further advancing their collaborative work.

Integration testing

We have demonstrated the technical feasibility of integrating the Fujitsu Digital Annealer (DAU) into the DSC (Digital Supply Chain) optimization service. Both APIs integrate seamlessly into our test framework within the Integrated Development Environment. Our system is now up and running, allowing Travelling Salesperson (TSP) problems, in their quadratic formulation, to be sent to the Fujitsu DAU and optimization results to be efficiently retrieved. Notably, Fujitsu has streamlined the process by facilitating the use of call-backs, allowing us to record intermediate results for a more comprehensive evaluation.

Final thoughts and the next steps

Our immediate focus is now on extracting more business-relevant use cases from the instances and resolving any outstanding questions beyond technical integration. We will conduct further research to validate various cloud qualities, including scalability, availability, and reliability.

With the conviction that solving QPLIB instances represents an improvement over current optimization techniques, the next phase is very promising. Looking ahead, our partnership is committed to building on this PoC's achievements. The path includes refining and scaling our efforts as SAP and Fujitsu combine their strengths and anticipate continued success in the journey ahead.

Acknowledgments: Florian Krellner(SAP), and the Fujitsu Digital Annealer Team

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

93 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

67 -

Expert

1 -

Expert Insights

177 -

Expert Insights

301 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

346 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

429 -

Workload Fluctuations

1

- AN INTRODUCTION TO QUADRATIC UNCONSTRAINED BINARY OPTIMIZATION (QUBO) in Technology Blogs by SAP

- Introducing New Capabilities for Enterprise Automation in Technology Blogs by SAP

- Do you know how SAP Signavio solutions can support your journey to SAP S/4HANA? in Technology Blogs by SAP

- Software Update Managerにおけるダウンタイム削減について(3) in Technology Blogs by SAP

| User | Count |

|---|---|

| 32 | |

| 17 | |

| 15 | |

| 13 | |

| 11 | |

| 9 | |

| 8 | |

| 8 | |

| 8 | |

| 7 |