Adversarial Machine Learning: is your AI-based component robust?

The age of AI is already under way, and it will touch our everyday lives more and more. This very blog post may have been written with the help of an AI-based tool. The source code underlying this blog platform may have been implemented together with an AI companion.

All this triggers a lot of excitement, but also some concerns, especially among people with a security background (you know, the usual mood breakers).

How do we ensure that these AI components will not jeopardize the security and safety of the systems into which they are integrated? There are already many examples of real AI components that have been easily fooled with simple attacks (face recognition, autonomous driving, malware classification, etc.). How can we make these AI components more secure, robust, and resilient against these attacks?

Adversarial machine learning (AML, in short) is the process of extracting information about the behavior and characteristics of an ML system and/or learning how to manipulate the inputs into an ML system in order to obtain a preferred outcome. As explained very well in the document released by NIST in Jan 2024 "Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations" [1], studying AML enables understanding how the AI system can be compromised and therefore how it can also be better protected against adversarial manipulations. AML, as discussed in [1], has a very broad scope, touching different security and privacy aspects of AI components. In particular, four main types of attacks are considered: (1) evasion, (2) data and model poisoning, (3) data and model privacy, and (4) abuse.

In collaboration with the University of Cagliari, Pluribus One, and Eurecom, we started a PhD project in October 2022 focusing on "Security Testing for AI components", mainly targeting evasion attacks. The rest of this blog introduces this line of study and summarizes what we have done so far.

Security Testing for AI components

In our work, we study evasion attacks in multiple industrial domains to understand how and to what extent these attacks can be mitigated. Concretely, we aim to contribute to the community effort toward an open-source testing platform for AI components. We are currently channeling our work into SecML-Torch, an open-source Python library designed to evaluate the robustness of AI components. Our ultimate goal is to build a feedback loop based on adversarial retraining to increase the robustness of the AI component under test.

In an evasion attack, the adversary’s goal is to generate adversarial examples that alter the AI model's behavior, i.e., that fool its classification result.

Depending on the situation, the adversary may have perfect (white-box), partial (gray-box), or very limited (black-box) knowledge of the AI system under test. In the first case, attackers can stage powerful gradient-based attacks, because full observability of the victim AI model lets them easily guide the optimization of adversarial examples. A classical example of partial knowledge is when the attacker knows the learning algorithm and the feature representation, but not the model weights and training data. In black-box scenarios, the attacker knows nothing about the internals of the AI system under test and can only access it via queries.
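To make the difference between these knowledge levels more tangible, here is a minimal sketch in plain Python. It is not taken from our papers or from SecML-Torch: the toy logistic-regression detector, its weights, and the helper names (`score`, `white_box_step`, `black_box_search`) are all assumptions made for illustration. The white-box attacker reads the weights and takes one signed-gradient step (in the style of FGSM), while the black-box attacker can only call `score` and falls back to random search within the same perturbation budget.

```python
import math
import random

# Hypothetical toy detector: logistic regression with fixed, made-up weights.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5

def score(x):
    """Probability that x is malicious. A black-box attacker can only call this."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def white_box_step(x, eps):
    """One signed-gradient step that lowers the score.

    For a linear model the gradient of the logit w.r.t. x is WEIGHTS,
    so the attacker needs to know the weights (white-box knowledge).
    """
    return [xi - eps * math.copysign(1.0, w) for xi, w in zip(x, WEIGHTS)]

def black_box_search(x, eps, queries, rng):
    """Random search inside an L-infinity ball of radius eps, using queries only."""
    best, best_score = list(x), score(x)
    for _ in range(queries):
        cand = [xi + rng.uniform(-eps, eps) for xi in x]
        s = score(cand)
        if s < best_score:
            best, best_score = cand, s
    return best

x0 = [1.0, 0.5, 1.0]  # sample initially flagged as malicious
x_white = white_box_step(x0, eps=0.5)
x_black = black_box_search(x0, eps=0.5, queries=200, rng=random.Random(0))

print(f"original : {score(x0):.3f}")
print(f"white-box: {score(x_white):.3f}")
print(f"black-box: {score(x_black):.3f} (after 200 queries)")
```

With these toy numbers, the single white-box step moves the score from about 0.82 to about 0.44, i.e., below a 0.5 decision threshold. The black-box search spends many queries and, for this linear model, can at best match the white-box result within the same budget, which is why query efficiency matters so much in black-box settings.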

In our study, we have so far focused on gray-box and black-box scenarios. As industrial domains, we have been targeting Web Application Firewalls (WAFs) and anti-phishing classifiers. Both domains are consuming more and more AI, with their classifiers taking advantage of complex AI models trained on large amounts of historical data (e.g., CloudFlare ML WAF, Vade Secure anti-phishing). Creating adversarial examples for these industrial domains is more challenging than doing it for simpler domains such as image recognition. Indeed, the input space the attacker can play with is more elaborate than adding noise to the pixels of an image: the manipulated input must remain a valid and still-malicious payload (e.g., a working SQL injection or a correctly rendered phishing page).
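As a rough illustration of what "playing with the input space" can look like for a WAF, the sketch below applies semantics-preserving rewrites to a SQL injection payload and greedily keeps whichever rewrite lowers the detector's score. Everything here is a simplifying assumption: the two regex signatures stand in for a real rule set or ML model, the three manipulations are only examples (written for MySQL-style syntax), and the names `detector_score`, `MANIPULATIONS`, and `evade` are hypothetical.

```python
import re

# Hypothetical signatures standing in for a real WAF rule set or ML model.
SIGNATURES = [r"union\s+select", r"or\W+1=1"]

def detector_score(payload):
    """Number of signatures matched (higher means more suspicious)."""
    return sum(1 for pat in SIGNATURES if re.search(pat, payload, re.IGNORECASE))

# Illustrative rewrites that keep the query's meaning (MySQL-style syntax assumed).
def swap_case(p):
    return p.swapcase()              # SQL keywords are case-insensitive

def comment_whitespace(p):
    return p.replace(" ", "/**/")    # an inline comment acts as whitespace

def rewrite_tautology(p):
    return p.replace("1=1", "2>1")   # another always-true condition

MANIPULATIONS = [swap_case, comment_whitespace, rewrite_tautology]

def evade(payload, max_rounds=5):
    """Greedy black-box search: keep the rewrite that lowers the score the most."""
    current, current_score = payload, detector_score(payload)
    for _ in range(max_rounds):
        if current_score == 0:
            break
        scored = [(detector_score(c), c) for c in (m(current) for m in MANIPULATIONS)]
        best_score, best = min(scored, key=lambda t: t[0])
        if best_score >= current_score:
            break  # no manipulation helps any more
        current, current_score = best, best_score
    return current, current_score

payload = "1' OR 1=1 UNION SELECT user, pass FROM accounts#"
adversarial, final_score = evade(payload)
print(detector_score(payload), "->", final_score)
print(adversarial)
```

Note how no single rewrite is enough in this toy setup: the search has to chain comment-based whitespace and a rewritten tautology before both signatures stop matching, while the case swap never helps against a case-insensitive detector. Real attacks search a much larger space of such rewrites, under a limited query budget.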

Our work on adversarial machine learning for WAFs is already well explained in this blog [2], written by Davide Ariu, CEO at Pluribus One. For readers interested in the full technical details, I suggest our paper pre-print "Adversarial ModSecurity: Countering Adversarial SQL Injections with Robust Machine Learning" [3]. In summary, we showed that:

- purely rule-based WAFs (e.g., ModSecurity and its Core Rule Set) are of little help in blocking SQL injections in complex WAF scenarios

- ML and adversarial retraining can significantly raise the security bar in those scenarios

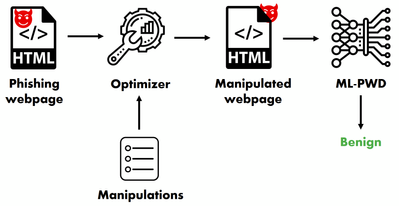
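The adversarial retraining feedback loop mentioned above can be sketched in a few lines. This is a deliberately tiny stand-in, not the method from [3]: the "model" is a one-dimensional nearest-centroid classifier, the attack simply shifts a malicious sample toward the benign side by a budget `eps`, and the function names and data points are made up for illustration.

```python
def fit(benign, malicious):
    """Nearest-centroid 'model' in 1D: the threshold halfway between class means."""
    c_ben = sum(benign) / len(benign)
    c_mal = sum(malicious) / len(malicious)
    return (c_ben + c_mal) / 2.0

def is_malicious(threshold, x):
    return x > threshold

def attack(x, eps):
    """Worst-case evasion in one dimension: move toward the benign side by eps."""
    return x - eps

def robust_accuracy(threshold, malicious, eps):
    """Fraction of malicious samples still detected after the attack."""
    hits = sum(is_malicious(threshold, attack(x, eps)) for x in malicious)
    return hits / len(malicious)

def adversarial_retraining(benign, malicious, eps, max_rounds=10):
    """Feedback loop: attack the model, add successful evasions, retrain."""
    train_mal = list(malicious)
    threshold = fit(benign, train_mal)
    for _ in range(max_rounds):
        evasions = [attack(x, eps) for x in malicious
                    if not is_malicious(threshold, attack(x, eps))]
        if not evasions:
            break  # the attack no longer succeeds on the training samples
        train_mal.extend(evasions)  # evasions keep their 'malicious' label
        threshold = fit(benign, train_mal)
    return threshold

benign = [0.0, 0.2, 0.4]
malicious = [1.6, 1.8, 2.0]
eps = 0.7

baseline = fit(benign, malicious)
hardened = adversarial_retraining(benign, malicious, eps)
print("robust accuracy before:", robust_accuracy(baseline, malicious, eps))
print("robust accuracy after :", robust_accuracy(hardened, malicious, eps))
```

In this toy case, one of the three attacked samples evades the baseline model, and a single retraining round is enough to detect all of them without misclassifying the benign points. On real data the picture is less clean: retraining moves the decision boundary toward the benign class, so the false-positive rate has to be monitored alongside robustness.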

For our study on anti-phishing, we will write a dedicated blog as a continuation of this one. Full details are available in our paper "Raze to the Ground: Query-Efficient Adversarial HTML Attacks on Machine-Learning Phishing Webpage Detectors" [4]. The manipulations created for this work will soon be available in SecML-Torch.

Let me finish by thanking the main driver of this whole line of research: Biagio Montaruli, our fellow PhD candidate.

References

- [1] NIST. Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations. Jan 2024.

- [2] Davide Ariu. The rise and fall of ModSecurity and the OWASP Core Rule Set (thanks, respectively, to robust and adversarial machine learning). Medium. Oct 2023.

- [3] Biagio Montaruli et al. Adversarial ModSecurity: Countering Adversarial SQL Injections with Robust Machine Learning. arXiv:2308.04964. Aug 2023.

- [4] Biagio Montaruli et al. Raze to the Ground: Query-Efficient Adversarial HTML Attacks on Machine-Learning Phishing Webpage Detectors. Artificial Intelligence and Security. Nov 2023.
