Generative AI with SAP. RAG Evaluations

But for LLMs, it's different. The MMLU benchmark evaluates LLMs on knowledge and reasoning ability, but no uniform method exists for running it, whether we evaluate prompt sensitivity, the LLM, our RAG, or our prompts. Even simple changes such as altering parentheses can drastically affect accuracy, making consistent evaluation challenging.

The RAG ecosystem's evolution is closely tied to advancements in its technical stack, with tools like LangChain and LlamaIndex gaining prominence alongside emerging platforms like Flowise AI, Haystack, Meltano, and Cohere Coral, each contributing unique features to the field. Traditional software and cloud service providers are also expanding their offerings to include RAG-centric services, further diversifying the technology landscape. This divergence is marked by trends towards customization, simplification, and specialization, indicating a symbiotic growth between RAG models and their technical stack. While the RAG toolkit is converging into a foundational technical stack, the vision of a fully integrated, comprehensive platform remains a future goal, pending ongoing innovation and development.

Gao et al. Retrieval-Augmented Generation for Large Language Models

Now that SAP is releasing SAP HANA Cloud Vector Engine (still in preview, but you can use the pgvector PostgreSQL extension on BTP) and Generative AI Hub, both summarized in this blog post, you can see SAP moving towards RAG (Retrieval-Augmented Generation). RAG, a buzzword in the industry, focuses on knowledge search, QA, conversational agents, workflow automation, and document processing for our Gen AI applications.

As soon as you start playing around with a RAG implementation, it is easy to find yourself in the middle of the challenges of productionizing applications: bad retrieval leading to low response quality, hallucinations, irrelevance, or outdated information, to name just a few. Before bringing a RAG system to production, you must set up an architecture that lets you evaluate it, emphasizing relevant and precise results to avoid performance degradation due to distractors.

Defining benchmarks, evaluating end-to-end solutions, and assessing specific components of the retrieval are what I am going to discuss in this blog, NOT model / AI application evaluation (which is a topic for another day).

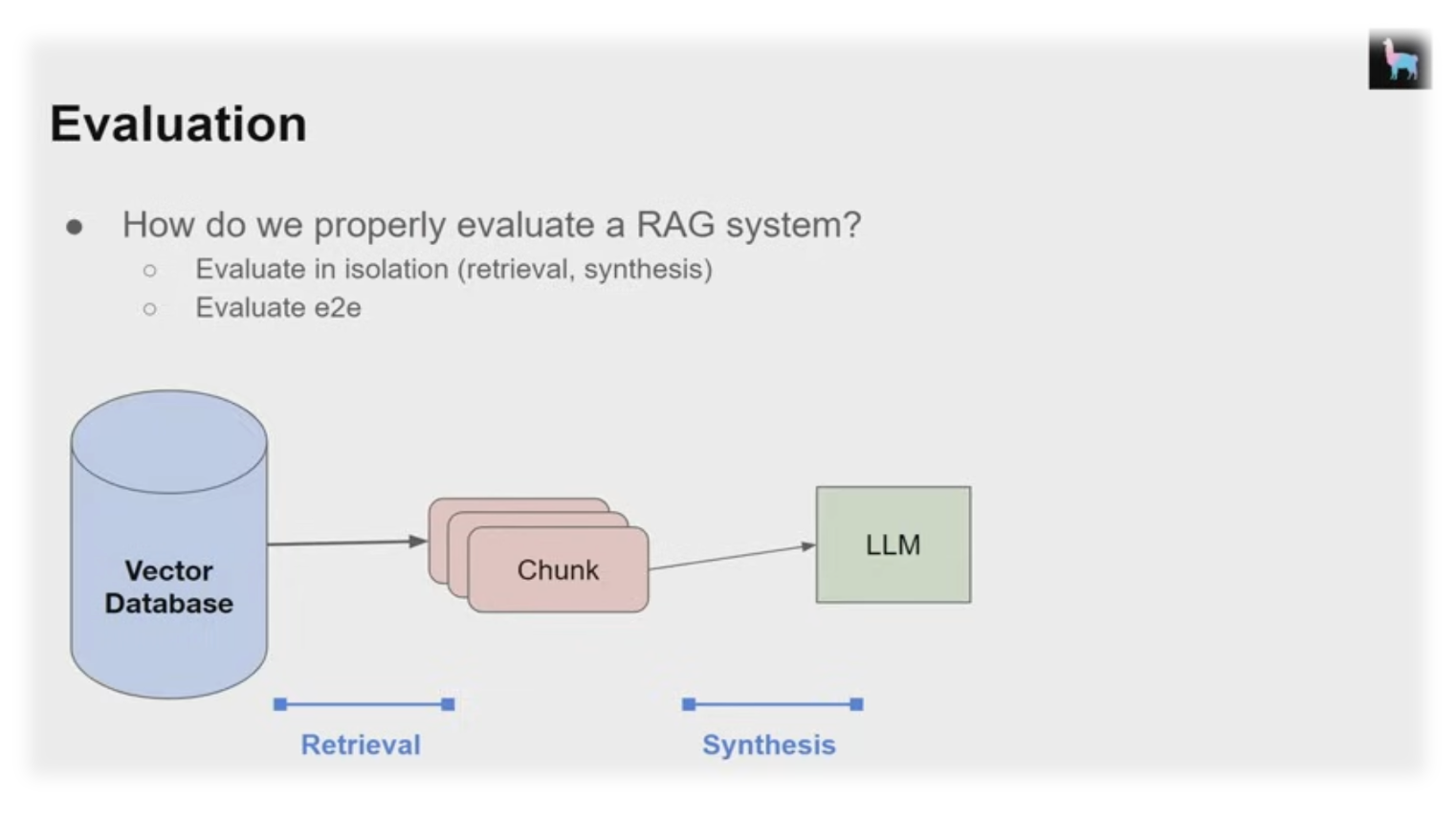

RAG Evaluation (concept)

RAG Evaluation is a vital step; you must establish benchmarks for the system 1) to track progress and 2) to make improvements.

There are several approaches to evaluation. You need to evaluate both the end-to-end solution, considering input queries and output responses, and specific components of your system. For instance, if you've identified retrieval as an area for improvement, you'll need retrieval metrics to assess your retrieval system.

Evaluation in Isolation ensures that the retrieved results answer the query accurately and do not include irrelevant information. First, you need an evaluation dataset that pairs input queries with relevant document IDs. You can obtain such datasets through human labeling, user feedback, or even synthetic generation. Once you have this dataset, you can measure various ranking metrics.

In summary, it is a suite of metrics that measure the effectiveness of systems (like search engines, recommendation systems, or information retrieval systems) in ranking items according to queries or tasks. The evaluation metrics for the retriever module mainly focus on context relevance, measuring the relatedness of retrieved documents to the query question.
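As an illustration of the ranking metrics mentioned above, here is a minimal sketch in plain Python. It assumes an evaluation set that pairs each query with a set of relevant document IDs, as described earlier; the toy data, the `FAKE_INDEX` lookup that stands in for a real retriever, and all function names are hypothetical and not part of any specific framework.

```python
from statistics import fmean


def hit_at_k(retrieved, relevant, k):
    """Return 1.0 if any of the top-k retrieved IDs is relevant, else 0.0."""
    return 1.0 if any(doc_id in relevant for doc_id in retrieved[:k]) else 0.0


def reciprocal_rank(retrieved, relevant):
    """Return 1/rank of the first relevant document, or 0.0 if none is found."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def evaluate_retriever(eval_set, retrieve, k=3):
    """Average the per-query metrics over the whole evaluation set."""
    hits, rrs, recalls = [], [], []
    for example in eval_set:
        retrieved = retrieve(example["query"])
        hits.append(hit_at_k(retrieved, example["relevant"], k))
        rrs.append(reciprocal_rank(retrieved, example["relevant"]))
        recalls.append(recall_at_k(retrieved, example["relevant"], k))
    return {"hit_rate": fmean(hits), "mrr": fmean(rrs), "recall": fmean(recalls)}


# Hypothetical evaluation set: queries paired with relevant document IDs.
EVAL_SET = [
    {"query": "q1", "relevant": {"d1", "d4"}},
    {"query": "q2", "relevant": {"d7"}},
]

# Hypothetical stand-in for a real retriever: query -> ranked document IDs.
FAKE_INDEX = {
    "q1": ["d3", "d1", "d9"],
    "q2": ["d2", "d5", "d8"],
}

metrics = evaluate_retriever(EVAL_SET, lambda query: FAKE_INDEX[query], k=3)
print(metrics)
```

In a real setup, the lambda would be replaced by a call to your vector store, and the evaluation set would come from human labeling, user feedback, or synthetic generation.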

End-to-End Evaluation focuses on what happens after retrieval: generating a response and evaluating that final response. You need another dataset to assess the quality of the responses generated from the input queries. This dataset can be created through human annotations, user feedback, or by having ground truth reference answers for each query. After obtaining this dataset, you can run the entire retrieval and synthesis pipeline, followed by evaluations using language models.

From the perspective of content generation goals, evaluation can be divided into unlabeled and labeled content. Unlabeled content evaluation metrics include answer fidelity, answer relevance, harmlessness, etc., while labeled content evaluation metrics include Accuracy and EM. Additionally, from the perspective of evaluation methods, end-to-end evaluation can be divided into manual evaluation and automated evaluation using LLMs.
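For the labeled case, EM (exact match) can be sketched in a few lines of Python. The normalization used here (lowercasing, stripping punctuation and English articles) is an assumption borrowed from common QA evaluation practice, not something prescribed by the text above; the function names and sample data are hypothetical.

```python
import re
import string


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    """True when the normalized prediction equals the normalized reference."""
    return normalize(prediction) == normalize(reference)


def em_score(predictions, references):
    """Share of predictions that exactly match their reference answer."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    matches = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return matches / len(references)


# Hypothetical generated answers and ground truth references.
predictions = ["The Eiffel Tower.", "42 km"]
references = ["eiffel tower", "40 km"]
print(em_score(predictions, references))
```

EM is strict by design: a correct answer phrased differently from the reference counts as a miss, which is one reason it is usually paired with LLM-based or manual evaluation.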

While these evaluation processes might seem complex, retrieval evaluation has been a well-established practice in the field for years and is well-documented.

RAG Eval Frameworks (open source)

RaLLe

RaLLe is a framework for developing and evaluating RAG that appeared in Q4 2023.

A variety of actions can be specified for an R-LLM, with each action capable of being tested independently to assess related prompts. The experimental setup and evaluation results are monitored using MLflow. Moreover, a straightforward chat interface can be constructed to apply and test the optimal practices identified during the development and evaluation phases in a real-world context.

RaLLe's main benefit over other tools such as ChatGPT Retrieval Plugin, Guidance, and LangChain is that it provides greater transparency in verifying individual inference steps and optimizing prompts through an accessible platform.

You can check out RaLLe in this video.

ARES

The ARES framework (Stanford University) requires inputs such as an in-domain passage set, a human preference validation set of 150+ annotated data points, and five few-shot examples of in-domain queries and answers for prompting LLMs in synthetic data generation. The first step is to create synthetic queries and answers from the corpus passages. Leveraging these generated training triples and a contrastive learning framework, the next step is to fine-tune an LLM to classify query-passage-answer triples across three criteria: 1) context relevance, 2) answer faithfulness, and 3) answer relevance. Finally, the fine-tuned LLM is employed as a judge to evaluate RAG systems and establish confidence bounds for ranking using Prediction-Powered Inference (PPI) and the human preference validation set.

Unlike RaLLe, ARES offers statistical guarantees for its predictions using PPI, providing confidence intervals for its scoring.
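To give an intuition for how PPI produces a confidence interval, here is a minimal sketch of the standard PPI estimator for a mean: the judge's average score on a large unlabeled set is corrected by the average judge-versus-human gap measured on the small human-annotated set. This is a generic illustration under a normal approximation, not the ARES implementation; the function name, the binary scores, and the sample data are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean, variance


def ppi_mean_interval(judge_unlabeled, judge_labeled, human_labeled, alpha=0.05):
    """Prediction-powered estimate of a mean score with a (1 - alpha) interval.

    judge_unlabeled: judge scores on examples without human labels
    judge_labeled:   judge scores on the human-annotated examples
    human_labeled:   human scores on those same annotated examples
    """
    if len(judge_labeled) != len(human_labeled):
        raise ValueError("judge_labeled and human_labeled must align")

    # Rectifier: how far the judge is off, measured where humans labeled.
    rectifier = [h - j for h, j in zip(human_labeled, judge_labeled)]

    estimate = fmean(judge_unlabeled) + fmean(rectifier)
    std_err = sqrt(
        variance(judge_unlabeled) / len(judge_unlabeled)
        + variance(rectifier) / len(rectifier)
    )
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return estimate - z * std_err, estimate, estimate + z * std_err


# Hypothetical binary "context is relevant" scores (1 = relevant, 0 = not).
judge_unlabeled = [1, 1, 0, 1, 0, 1, 1, 0]
judge_labeled = [1, 0, 1, 1]
human_labeled = [1, 0, 0, 1]

low, estimate, high = ppi_mean_interval(judge_unlabeled, judge_labeled, human_labeled)
print(f"estimate={estimate:.3f}, interval=({low:.3f}, {high:.3f})")
```

The more the judge agrees with the human annotations, the smaller the rectifier's variance and the tighter the interval, which is why a modest validation set can be enough.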

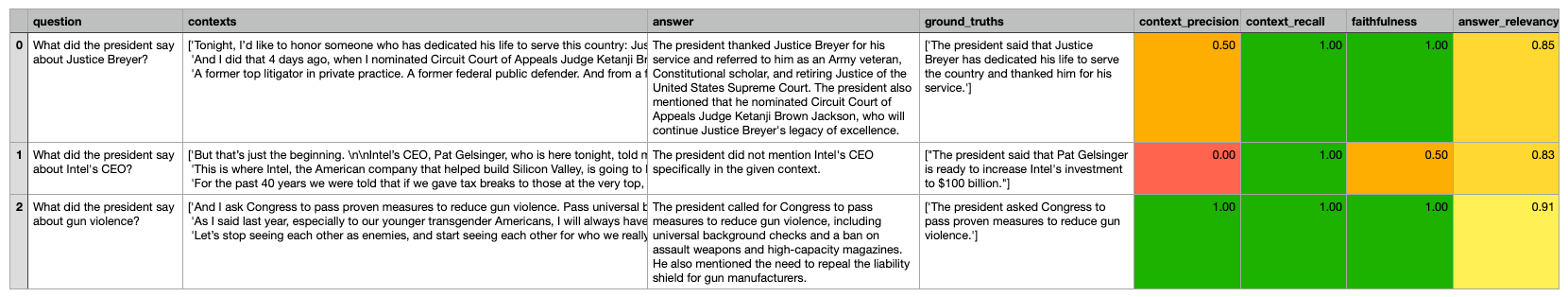

RAGAS (People's Choice Award)

RAGAS provides a suite of metrics to evaluate different aspects of RAG systems without relying on ground truth human annotations. These metrics are divided into two categories: retrieval and generation.

- Retrieval Metrics evaluate the performance of the retrieval system.

  - Context Relevancy measures the signal-to-noise ratio in the retrieved contexts.

  - Context Recall measures the ability of the retriever to retrieve all the necessary information needed to answer the question.

- Generation Metrics evaluate the performance of the generation system.

  - Faithfulness measures hallucinations, that is, the generation of information not present in the context.

  - Answer Relevancy measures how directly the answers address the question.

The harmonic mean of these four aspects gives you the RAGAS score, which is a single measure of the performance of your QA system across all the important aspects.
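The harmonic mean described above is straightforward to compute once the four metric values are available. The sketch below uses only the Python standard library; the metric values are made up for illustration and the function name is hypothetical, so it shows the arithmetic rather than how RAGAS itself is invoked.

```python
from statistics import harmonic_mean


def combined_score(scores):
    """Harmonic mean of the four per-aspect scores (each expected in [0, 1])."""
    return harmonic_mean(list(scores.values()))


# Hypothetical metric values for one evaluation run.
scores = {
    "context_relevancy": 1.0,
    "context_recall": 1.0,
    "faithfulness": 1.0,
    "answer_relevancy": 0.25,
}

overall = combined_score(scores)
print(round(overall, 4))
```

Because the harmonic mean is pulled towards the smallest value, one weak aspect lowers the overall score far more than it would with an arithmetic mean (here 4/7 versus 0.8125), which is the point of using it as a single summary number.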

To use RAGAS, you need a few questions and a reference answer if you're using context recall. Most of the measurements do not require any labeled data, making it easier for users to run it without worrying about building a human-annotated test dataset first.

Below, you can see the resulting RAGAs scores for the examples:

The following output is produced by Ragas:

1. Retrieval: context_relevancy and context_recall, which measure the performance of your retrieval system.

2. Generation: faithfulness, which measures hallucinations, and answer_relevancy, which measures how relevant the answers are to the question.

Additionally, RAGAS leverages LLMs under the hood for reference-free evaluation to save costs, and you can combine RAGAS with LangSmith.

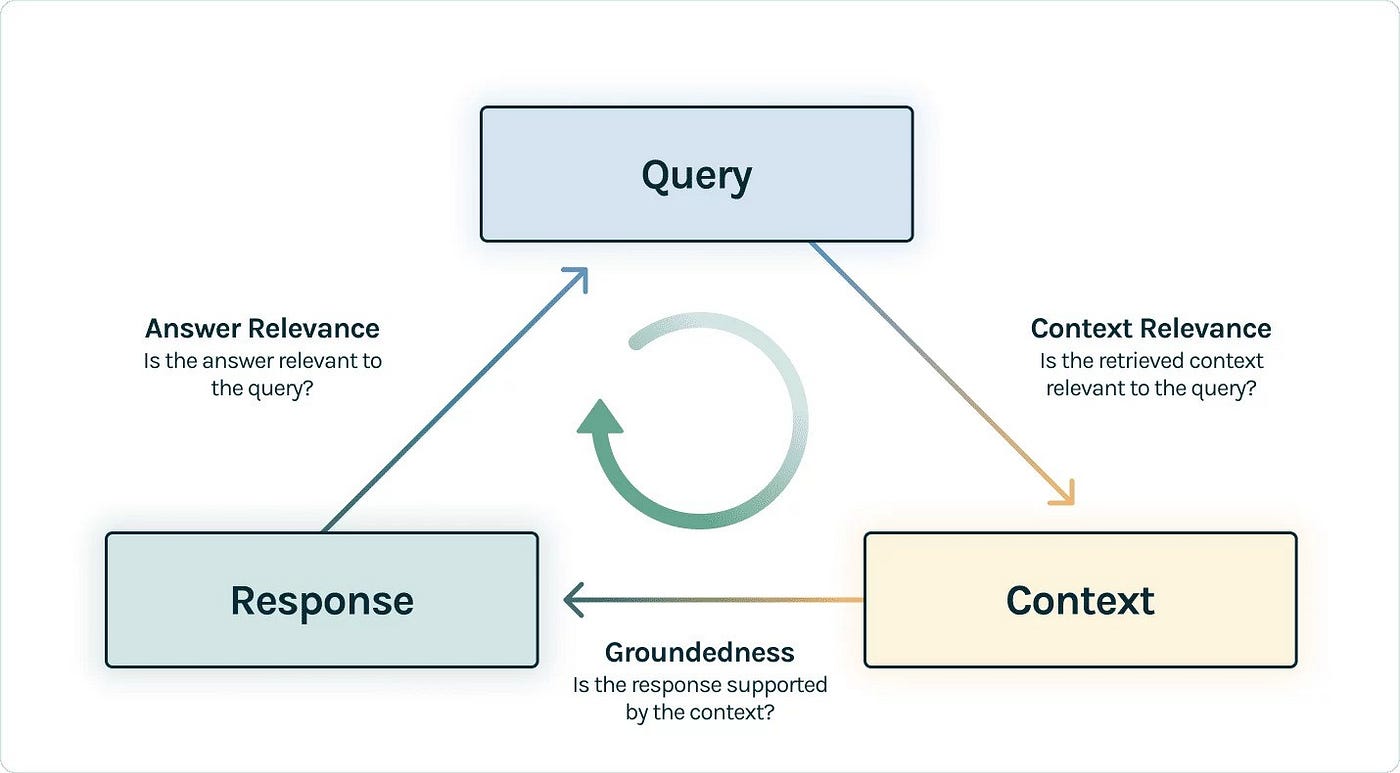

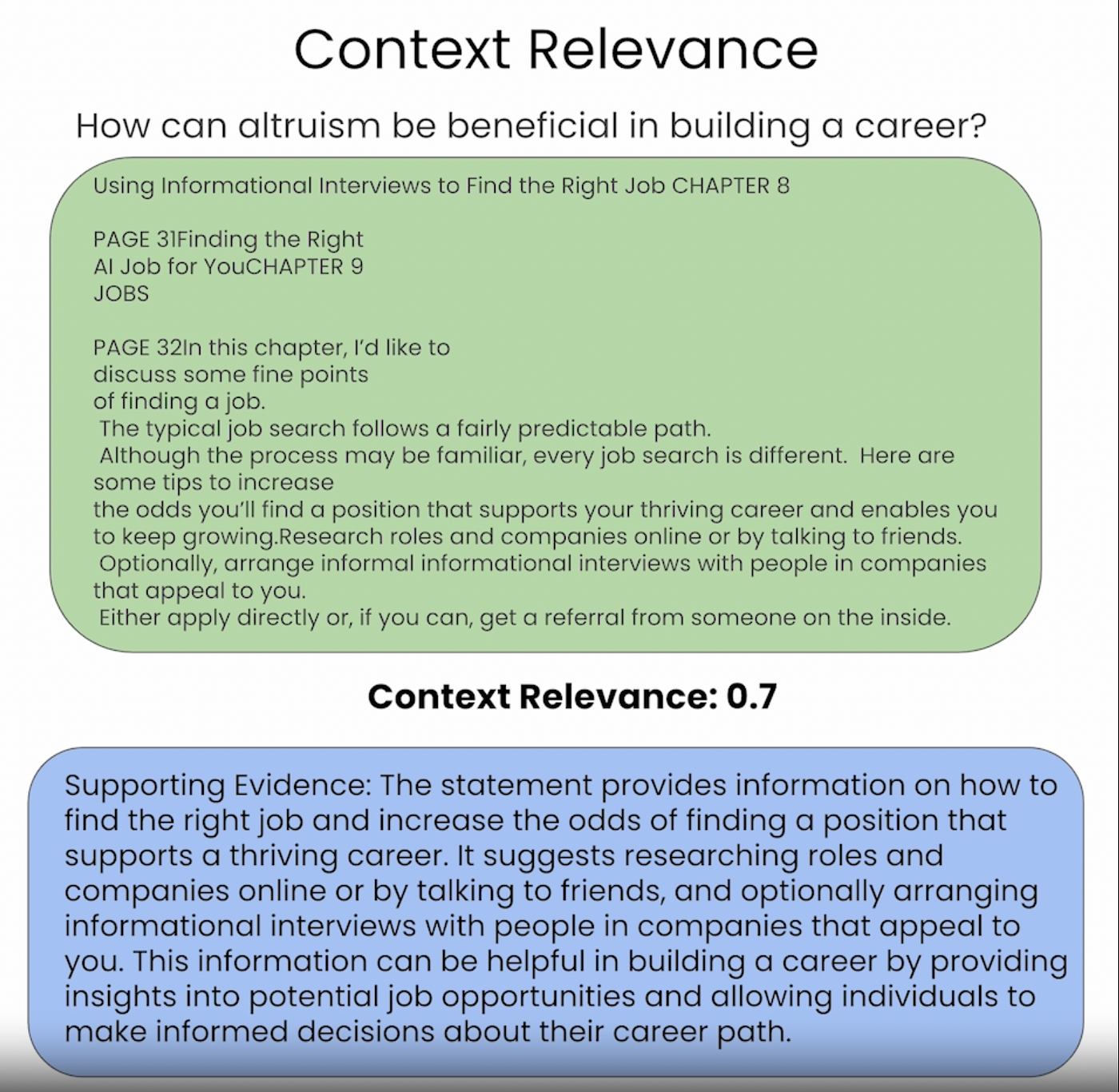

TruLens Eval RAG Triad of Metrics

The RAG Triad comprises three evaluative tests: context relevance, groundedness, and answer relevance. Context relevance involves verifying the pertinence of retrieved content to the input query, which is crucial to preventing irrelevant context from leading to inaccurate answers; this is assessed using a language model to generate a context relevance score. Groundedness checks the factual support for each statement within a response, addressing the issue of LLMs potentially generating embellished or factually incorrect statements. Finally, answer relevance ensures the response not only addresses the user's question but does so in a directly applicable manner. By meeting these standards, a RAG application can be considered accurate and free from hallucinations within the scope of its knowledge base, providing a nuanced statement about its correctness.

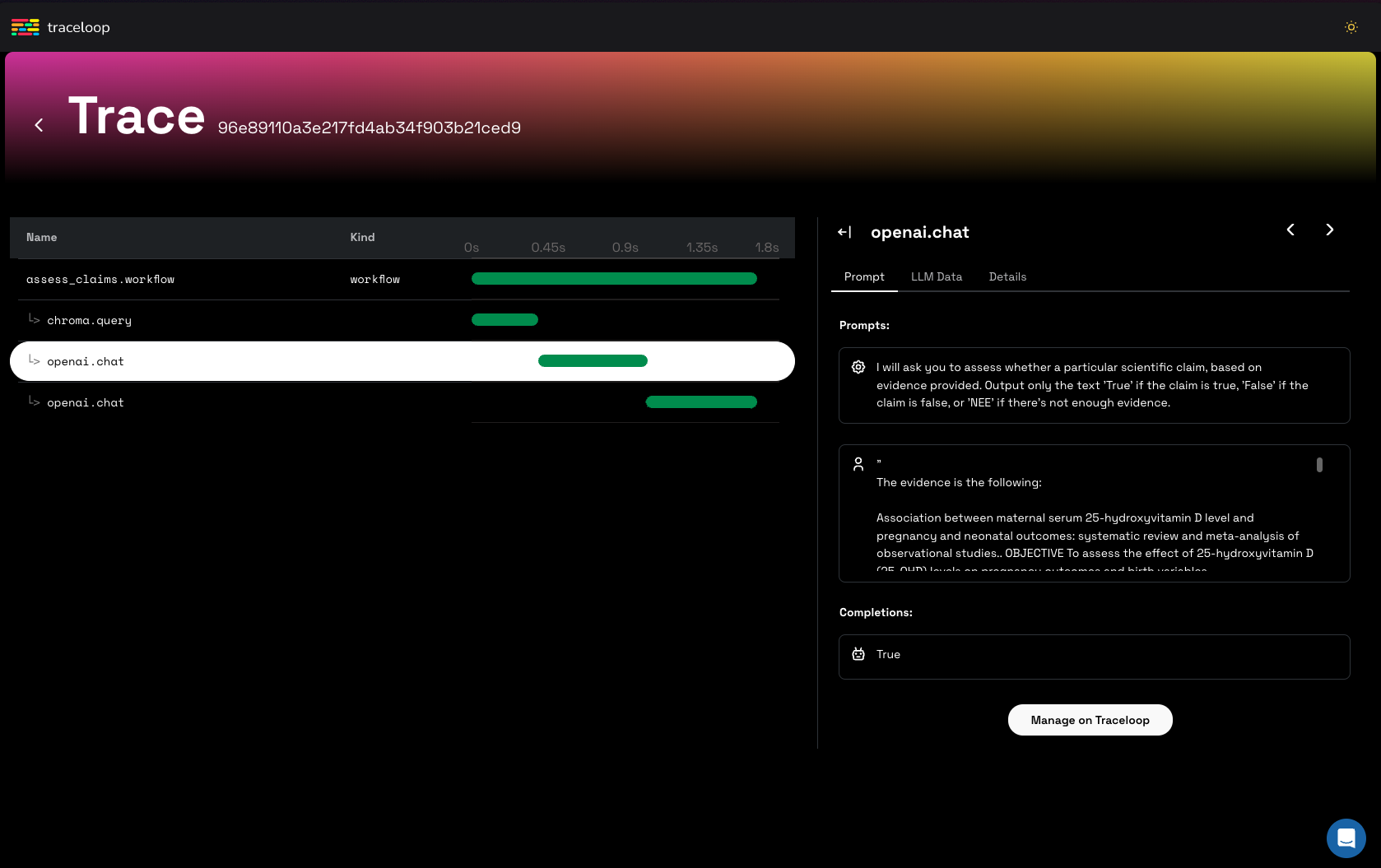

🔭 TraceLoop OpenLLMetry

OpenLLMetry provides observability for RAG systems. It allows tracing calls to VectorDBs, Public and Private LLMs, and other AI services. It gives visibility to query and index calls and LLM prompts and completions.

Traceloop helps you to debug and test changes to your models and prompts for every request. It can run on a cloud-hosted version.

Futures on RAG platforms

In the research timeline, the bulk of Retrieval-Augmented Generation (RAG) studies surfaced post-2020, with a notable surge following ChatGPT's release in November 2022, marking a pivotal moment. Initially, RAG research focused on reinforcement strategies during pre-training and supervised fine-tuning stages. However, in 2023, with the advent of large, costly-to-train models, the emphasis has shifted towards reinforcement during the inference stage, aiming to embed external knowledge cost-effectively through RAG modules.

SAP's releases of the Vector Engine and Generative AI Hub aim to make BTP a platform of choice for our RAG pipelines, where we will need to provide solutions to the challenges that currently occupy the vector database ecosystem:

- Context Length: The effectiveness of RAG is constrained by the context window size of Large Language Models (LLMs). Late 2023 research aims to balance the trade-off between too short and too long windows, with efforts focusing on virtually unlimited context sizes as a significant question.

- Robustness: Noisy or contradictory information during retrieval can negatively impact RAG's output quality. Enhancing RAG's resistance to such adversarial inputs is gaining momentum as a key performance metric.

- Hybrid Approaches (RAG+FT): Integrating RAG with fine-tuning, whether sequential, alternating, or joint training, is an emerging strategy. Exploring the optimal integration and harnessing both parameterized and non-parameterized advantages are ripe for research.

CONCLUSION

The journey of RAG on SAP has just started, and the future of Retrieval-Augmented Generation (RAG) looks promising yet challenging. As RAG continues to evolve, driven by significant strides post-2020 and catalyzed by the advent of models like ChatGPT, it faces hurdles such as context length limitations, robustness against misinformation, and the quest for optimal hybrid approaches. The emergence of diverse tools and frameworks provides a more transparent, effective way to optimize RAG systems. With SAP's advancements toward RAG-friendly platforms, our focus in the AI engineering space is on overcoming these challenges and ensuring accurate, reliable, and efficient augmented generation systems.
