- Integrating SAP Datasphere with SAP Integrated Bus...

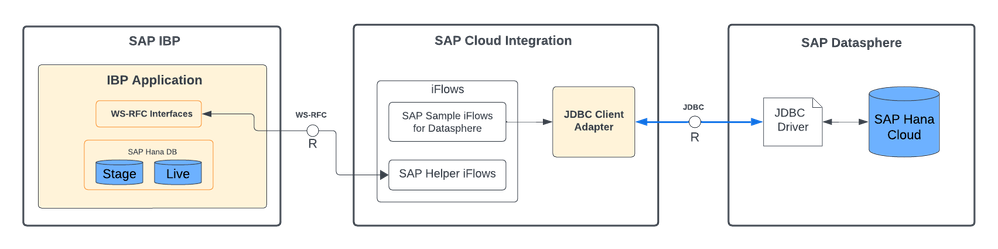

Creating a transparent and feasible supply chain plan requires not only master data and transactional data but also contextual data that may be spread across multiple data sources in the IT landscape. SAP Datasphere, the next generation of SAP Data Warehouse Cloud, is a sophisticated tool for consolidating data from various sources and building a business data fabric that can be semantically modelled and fed to the state-of-the-art supply chain planning solution, SAP IBP. In this blog I explain the steps I took to read data from a space in SAP Datasphere, transform this data, and then write it to SAP IBP using SAP Cloud Integration.

Here is a recap of what we did so far:

- In the previous experiment, we read data from SAP IBP using WebSocket RFC as the protocol in SAP Cloud Integration, transformed this data using XSLT, and then used JDBC to write it to SAP Datasphere. For this:

- We created a communication interface using SAP_COM_0931 in SAP IBP. Based on it, we also created a destination on SAP BTP.

- In SAP Datasphere, we created a space, a user, and a table called IBPDEMAND. Imagine this table has some data which we want to write back to SAP IBP.

- We use SAP Cloud Integration as our middleware. We created a JDBC data source alias which we used in our iFlows.

Reading data from SAP Datasphere

In our previous blog, we created our database user. We have the credentials, which we can use to read data with an Open SQL statement. For a simple SELECT statement, we can prepare an XML payload and send it to the JDBC adapter, as documented in the SAP help for the adapter. The following XML payload does this.

    <root>
      <Select_Statement>
        <dbTableName action="SELECT">
          <table><xsl:value-of select="$SAPDSPTarget"/></table>
          <access>
            <PRODUCT/>
            <CUSTOMER/>
            <LOCATION/>
            <CONSDEMAND/>
            <KEYFIGUREDATE/>
            <UNITS/>
            <COMMENTS/>
          </access>
          <!--
          <key1><PRODUCT hasQuot="Yes">IBP-120</PRODUCT></key1>
          <key2><CUSTOMER hasQuot="Yes">0017100002</CUSTOMER></key2>
          -->
        </dbTableName>
      </Select_Statement>
    </root>

The above XSLT generates a select statement on a table whose name is the parameter $SAPDSPTarget. This table name can either be parameterized via configuration or passed to the iFlow as part of a payload. I exposed the main iFlow as an HTTP endpoint and passed a JSON payload in which the Datasphere target table name was a key-value pair. The XSLT can be used in an iFlow as shown below.

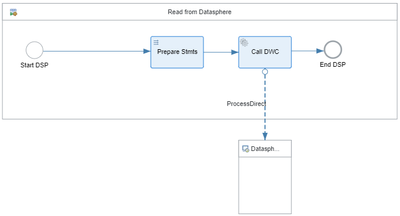

Fig. 2. Reading data from SAP Datasphere. It uses a subflow that is called via ProcessDirect.
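To experiment with the SELECT payload outside an iFlow, it can also be assembled programmatically. The following is a minimal Python sketch and not part of the iFlow itself. The function name `build_select_payload` and its parameters are illustrative assumptions; the element and attribute names follow the XML payload shown earlier, and the optional filter mirrors the commented-out `key1` element.

```python
import xml.etree.ElementTree as ET


def build_select_payload(table_name, columns, keys=None):
    """Build a JDBC adapter SELECT payload (hypothetical helper, illustration only)."""
    root = ET.Element("root")
    statement = ET.SubElement(root, "Select_Statement")
    db_table = ET.SubElement(statement, "dbTableName", action="SELECT")
    ET.SubElement(db_table, "table").text = table_name

    # <access> lists the columns to read, one empty element per column.
    access = ET.SubElement(db_table, "access")
    for column in columns:
        ET.SubElement(access, column)

    # Optional filters, one <keyN> element per column/value pair,
    # laid out like the commented-out part of the original payload.
    for index, (column, value) in enumerate((keys or {}).items(), start=1):
        key = ET.SubElement(db_table, f"key{index}")
        ET.SubElement(key, column, hasQuot="Yes").text = value

    return ET.tostring(root, encoding="unicode")


payload = build_select_payload(
    "IBPDEMAND",
    ["PRODUCT", "CUSTOMER", "LOCATION", "CONSDEMAND", "KEYFIGUREDATE", "UNITS", "COMMENTS"],
    keys={"PRODUCT": "IBP-120"},
)
print(payload)
```

Passing the table name as an argument plays the same role as the $SAPDSPTarget parameter in the XSLT.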

In the iFlow section shown in Fig. 2, the ProcessDirect adapter calls a subflow which can be reused multiple times. The subflow uses the JDBC adapter to send the generated Open SQL command to SAP Datasphere. If there are records, the JDBC adapter returns them as an XML payload in the following format:

<root><Select_Statement_response><row>…, where the row element repeats once for each record returned by the select. A router checks whether the select statement fetched any records. The response is stored in a temporary variable for further transformations.
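The router check on the response can be illustrated with a short Python sketch. This is an assumption-laden example rather than the iFlow logic itself: the helper name `read_rows` is hypothetical, and the sample response is made up for illustration (only the element names, the product IBP-120, and the customer 0017100002 appear in this blog; the location, quantity, and date values are invented).

```python
import xml.etree.ElementTree as ET

# Illustrative response in the <root><Select_Statement_response><row> format.
SAMPLE_RESPONSE = """
<root>
  <Select_Statement_response>
    <row>
      <PRODUCT>IBP-120</PRODUCT>
      <CUSTOMER>0017100002</CUSTOMER>
      <LOCATION>1010</LOCATION>
      <CONSDEMAND>25</CONSDEMAND>
      <KEYFIGUREDATE>2024-03-01</KEYFIGUREDATE>
    </row>
  </Select_Statement_response>
</root>
"""


def read_rows(response_xml):
    """Return each <row> as a dict of column name to text value."""
    root = ET.fromstring(response_xml)
    rows = root.findall("./Select_Statement_response/row")
    return [{column.tag: (column.text or "") for column in row} for row in rows]


rows = read_rows(SAMPLE_RESPONSE)

# Same decision the router makes: continue only if records were fetched.
if rows:
    print(f"{len(rows)} record(s) fetched, continue to the IBP write steps")
else:
    print("no records fetched, stop here")
```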

Writing to SAP IBP

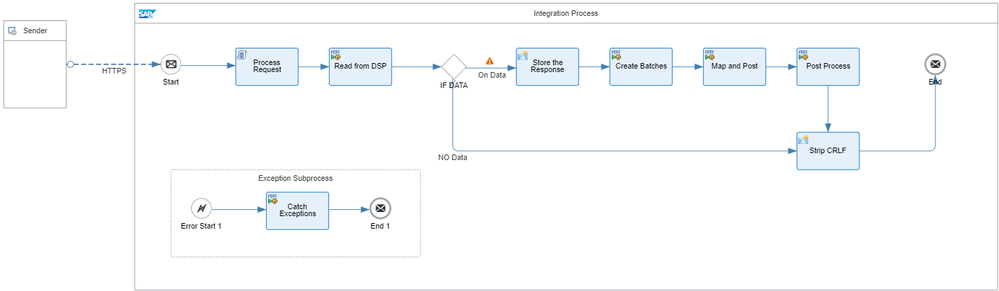

Data is written to SAP IBP in an asynchronous manner. The following steps explain the process:

- First, a batch ID is created so that data can be written to IBP in batches.

- Data is initially stored in a staging area which is internal to SAP IBP.

- Once all data is stored in the staging area, the post-processing is started based on the batch ID.

- The status of the post-processing step is monitored for errors. These errors are relevant to the business context, for example a wrong posting date, a mismatched product, customer, and location relationship, an invalid planning area, etc.

- If all data was processed successfully, the data will be available in the target planning area.
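The order of these steps can be sketched in Python as follows. This is only an outline under stated assumptions: the four callables (`create_batch`, `post_data`, `process_batch`, `get_status`) are hypothetical stand-ins for the calls that the helper iFlows make to SAP IBP, the chunk size is arbitrary, and the in-memory stubs exist purely so the sketch runs on its own. The status value PROCESSED is taken from the sample response shown later in this blog.

```python
def chunked(records, size):
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def write_to_ibp(records, create_batch, post_data, process_batch, get_status, chunk_size=1000):
    """Run the asynchronous write steps in order and report success."""
    batch_id = create_batch()                   # 1. create a batch ID
    for chunk in chunked(records, chunk_size):
        post_data(batch_id, chunk)              # 2. store data in the staging area
    process_batch(batch_id)                     # 3. start post-processing for the batch
    status = get_status(batch_id)               # 4. check the post-processing status
    return status == "PROCESSED"                # 5. data is available only on success


# In-memory stubs (hypothetical) so the flow can be exercised without SAP IBP.
staging = {}
calls = []


def create_batch():
    staging["B1"] = []
    return "B1"


def post_data(batch_id, chunk):
    staging[batch_id].extend(chunk)


def process_batch(batch_id):
    calls.append(("process", batch_id))


def get_status(batch_id):
    return "PROCESSED"


ok = write_to_ibp([{"PRDID": "IBP-120"}] * 3, create_batch, post_data,
                  process_batch, get_status, chunk_size=2)
print(ok, len(staging["B1"]))
```

Passing the four operations in as callables keeps the ordering logic separate from the transport, which in the real scenario is handled by the helper iFlows.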

The screenshot above shows all the steps end to end in an iFlow.

Once we receive the data from SAP Datasphere via the JDBC adapter, I use the following XSLT transformation to prepare a payload that is sent to the SAP IBP system to create a batch ID:

    <IBPWriteBatches>
      <IBPWriteBatch Key="${header.IBPBatchKey}" Name="SAP Datasphere Planning Data"
                     Destination="${header.IBPDestination}"
                     Command="INSERT_UPDATE"
                     PlanningArea="${header.IBPPlanningArea}"
                     PlanningAreaVersion=""/>
    </IBPWriteBatches>

If the batch was created, I prepared the following payload to write the data from Datasphere to the staging tables.

    <multimap:Messages>
      <multimap:Message1>
        <IBPWriteKeyFigures>
          <xsl:attribute name="FieldList"><xsl:value-of select="$IBPFields"/></xsl:attribute>
          <xsl:attribute name="BatchKey"><xsl:value-of select="$IBPBatchKey"/></xsl:attribute>
          <xsl:attribute name="FileName">SAPDatasphereDataLoad</xsl:attribute>
          <xsl:variable name="InputPayload" select="parse-xml($DSPPayload)"/>
          <xsl:for-each select="$InputPayload/root/Select_Statement_response/row">
            <item>
              <PRDID><xsl:value-of select="./PRODUCT"/></PRDID>
              <LOCID><xsl:value-of select="./LOCATION"/></LOCID>
              <CUSTID><xsl:value-of select="./CUSTOMER"/></CUSTID>
              <CONSENSUSDEMANDQTY><xsl:value-of select="./CONSDEMAND"/></CONSENSUSDEMANDQTY>
              <KEYFIGUREDATE><xsl:value-of select="./KEYFIGUREDATE"/></KEYFIGUREDATE>
            </item>
          </xsl:for-each>
        </IBPWriteKeyFigures>
      </multimap:Message1>
    </multimap:Messages>

Taking a closer look, you can see that a FieldList is created first. This list is a comma-separated string with the name of the key figure and all its root attributes that are needed in IBP. The same fields are then filled with column values in the for-each loop, which maps the column names of the Datasphere table to the SAP IBP attributes and key figure. Once this payload is created, we can use the helper iFlows to send the data to the SAP IBP backend. I used the ProcessDirect endpoint SAP_IBP_Write_-_Post_Data, which is implemented in the helper iFlows and internally uses WebSocket RFC calls to send the payload to the SAP IBP backend.
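The column mapping performed by the for-each loop can also be written out in Python to make it explicit. This is a sketch under assumptions, not the transformation used in the iFlow: the helper `build_key_figure_payload` is hypothetical, the multimap wrapper is left out, and the FieldList is assumed to be the comma-separated IBP field names in the same order as the item children (the actual value of $IBPFields is not shown in this blog). The location, quantity, and date in the sample row are invented.

```python
import xml.etree.ElementTree as ET

# Datasphere column -> IBP attribute or key figure, as in the XSLT for-each loop.
COLUMN_MAP = {
    "PRODUCT": "PRDID",
    "LOCATION": "LOCID",
    "CUSTOMER": "CUSTID",
    "CONSDEMAND": "CONSENSUSDEMANDQTY",
    "KEYFIGUREDATE": "KEYFIGUREDATE",
}


def build_key_figure_payload(rows, batch_key, file_name="SAPDatasphereDataLoad"):
    """Map Datasphere rows (dicts) to an IBPWriteKeyFigures element (illustration only)."""
    element = ET.Element(
        "IBPWriteKeyFigures",
        FieldList=",".join(COLUMN_MAP.values()),  # assumed format of $IBPFields
        BatchKey=batch_key,
        FileName=file_name,
    )
    for row in rows:
        item = ET.SubElement(element, "item")
        for source, target in COLUMN_MAP.items():
            ET.SubElement(item, target).text = row.get(source, "")
    return ET.tostring(element, encoding="unicode")


sample_rows = [{
    "PRODUCT": "IBP-120",
    "LOCATION": "1010",
    "CUSTOMER": "0017100002",
    "CONSDEMAND": "25",
    "KEYFIGUREDATE": "2024-03-01",
}]
key_figure_xml = build_key_figure_payload(sample_rows, batch_key="ConsensusDemand")
print(key_figure_xml)
```

Keeping the mapping in a single dictionary means the FieldList and the item columns cannot drift apart, which is the same idea as reusing one field list in the XSLT.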

After all the records are written to SAP IBP, we can start the post-processing step using the ProcessDirect endpoint SAP_IBP_Write_-_Process_Posted_Data. This uses the batch ID internally to call the respective WebSocket RFC interface. Once the post-processing step is completed, a status message is sent back to the caller. If the post-processing was successful, the XML response looks like this:

    <multimap:Message2>
      <IBPWriteBatch Command="INSERT_UPDATE"
                     CreatedAt="2024-03-04T14:16:43Z" CreatedBy="CC0000000083"
                     Destination="IBP_G5R_100_CLONING" Id="2595"
                     Key="ConsensusDemand" Name="SAP Datasphere Planning Data"
                     ProcessingEndedAt="2024-03-04T14:17:46Z" ProcessingInProgress="false"
                     ProcessingStartedAt="2024-03-04T14:17:43Z" ScheduleStatus="OK"
                     ScheduledAt="2024-03-04T14:16:43.521Z" WorstProcessingStatus="PROCESSED">
        <Files>
          <File Count="2"
                Name="SAPDatasphereDataLoad" PlanningArea="XPACNT2305"
                Status="PROCESSED" TypeOfData="Key Figure Data"/>
        </Files>
        <Messages/>
        <ScheduleResponse>
          <Status>OK</Status>
          <Messages/>
        </ScheduleResponse>
      </IBPWriteBatch>
    </multimap:Message2>

Here you can see that key figure data was written to the IBP destination IBP_G5R_100_CLONING in a planning area called XPACNT2305, and that the status of the post-processing was OK.
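A caller that receives this status message could evaluate it along the following lines. This Python sketch is an illustration only: the helper `summarize_batch` is hypothetical, the sample is an abbreviated copy of the IBPWriteBatch element above without the multimap wrapper, and treating ScheduleStatus="OK" together with WorstProcessingStatus="PROCESSED" as the success criterion is my reading of this one sample, since other status values are not shown here.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the status response shown above (multimap wrapper omitted).
SAMPLE_STATUS = """
<IBPWriteBatch Command="INSERT_UPDATE" Destination="IBP_G5R_100_CLONING" Id="2595"
               Key="ConsensusDemand" ProcessingInProgress="false"
               ScheduleStatus="OK" WorstProcessingStatus="PROCESSED">
  <Files>
    <File Count="2" Name="SAPDatasphereDataLoad" PlanningArea="XPACNT2305"
          Status="PROCESSED" TypeOfData="Key Figure Data"/>
  </Files>
  <Messages/>
</IBPWriteBatch>
"""


def summarize_batch(status_xml):
    """Extract the fields a caller would check after post-processing."""
    batch = ET.fromstring(status_xml)
    files = batch.findall("./Files/File")
    finished = batch.get("ProcessingInProgress") == "false"
    # Assumed success criterion, derived from the sample response only.
    succeeded = (
        finished
        and batch.get("ScheduleStatus") == "OK"
        and batch.get("WorstProcessingStatus") == "PROCESSED"
        and all(f.get("Status") == "PROCESSED" for f in files)
    )
    return {
        "batch_id": batch.get("Id"),
        "finished": finished,
        "succeeded": succeeded,
        "records": sum(int(f.get("Count", "0")) for f in files),
        "messages": [m.text for m in batch.findall("./Messages/*")],
    }


summary = summarize_batch(SAMPLE_STATUS)
print(summary)
```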

Conclusion

In this blog, we showed the main aspects of how to read data from SAP Datasphere and write it to SAP IBP using SAP Cloud Integration as the middleware. SAP IBP is also bundled with a middleware tool, SAP Cloud Integration for data services (CI-DS). In my next blog, I will share how we can achieve the same steps using CI-DS.

- SAP Managed Tags:

- SAP Datasphere,

- SAP Integration Suite,

- SAP Integrated Business Planning for Supply Chain
