Introduction:

I hope it's not a surprise to anyone reading this article that SAP S/4HANA uses the HANA DB, and that HANA DB uses Columnar Data Storage for most of its critical tables. There are boatloads of articles about why Columnar Data Storage is good for the world and about its blazing speed. My initial thought when I learnt how Columnar Data Storage works was that it is "less intuitive" than Row Data Storage. Row Data Storage (the old way we had in ECC) was always nice and simple: "filter MKPF for the posting date, collect all the Material docs, pass them on to MSEG and voila, you have all the item-level details for a given posting date". You can play around with these table joins intuitively to create almost any report. So why would someone mess with this familiarity?

There is a really good reason: Columnar Data Storage is really, really fast. It's not 1x or 2x faster; in my observation it is 100x or even 1000x faster for some of the queries we regularly use for the business. For instance, one procurement report query that we used to run on weekends took 6 hours or so in ECC; when we moved to S/4HANA (and tweaked the query a little), it took only a few seconds. It seemed too good to be true. Upon validation, we were surprised to find that it was "too good and true".

That sparked my curiosity about how Columnar Data Storage works. Being a tech dummy, I had to research for some time to get the details, and I have tried to explain them here in a "Functional Consultant friendly" manner. Please note that I may have oversimplified the data and examples to make them more understandable.

The Classic Row Data Storage, and how it looks in the DB:

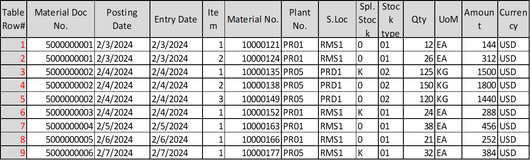

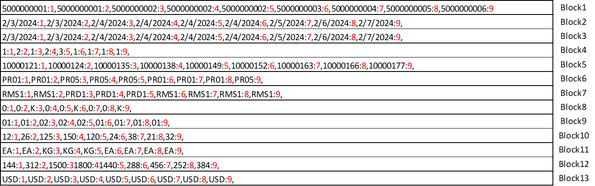

Let's consider the following Material Document data (I have reduced the number of columns). The first column here is the Row No.

Fig 1:

When this data is stored in our classic Row Data Storage, it looks something like this:

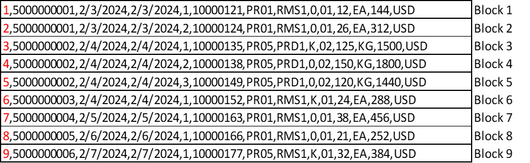

Fig 2:

In a row store it's plain and simple: the data is stored one row after another. For simplicity I have put one row of Material document details in one "Block" of the database, but in reality a DB Block is much bigger (a few KBs or even MBs) and stores several rows of Material documents contiguously.

Let's not bother too much about what a Block is. It's a database concept; you can think of it as the chunk of data that gets pulled for every IO (Input/Output) operation. So when my program requires data on, say, Material doc no. 5000000001, it pulls all the data in Block 1. If my program requires data on Material doc no. 5000000003, it still starts from Block 1; since it can't find the document there, it discards Block 1 and moves on to Block 2, and it continues this search until it reaches Block n, where the data is.
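To make the Block idea concrete, here is a minimal Python sketch of a row store, assuming one row per Block as in the simplified figures. The sample rows, the field names (`doc`, `plant`, `special_stock`, `amount`) and the functions `build_row_store` and `find_document` are hypothetical illustrations, not SAP or HANA APIs, and the lookup models only the sequential Block-by-Block search described above (no index).

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]

# Hypothetical, simplified Material document items (not the data in the figures).
ROWS: List[Row] = [
    {"doc": "5000000001", "plant": "P100", "special_stock": "", "amount": 100.0},
    {"doc": "5000000002", "plant": "P100", "special_stock": "K", "amount": 50.0},
    {"doc": "5000000003", "plant": "P200", "special_stock": "K", "amount": 70.0},
    {"doc": "5000000004", "plant": "P200", "special_stock": "", "amount": 30.0},
]


def build_row_store(rows: List[Row], rows_per_block: int = 1) -> List[List[Row]]:
    """Store complete rows one after another, rows_per_block rows per Block."""
    return [rows[i:i + rows_per_block] for i in range(0, len(rows), rows_per_block)]


def find_document(blocks: List[List[Row]], doc: str) -> Tuple[Optional[Row], int]:
    """Read Blocks from the start until the document is found (no index).

    Returns the matching row (or None) and the number of Blocks read.
    """
    blocks_read = 0
    for block in blocks:
        blocks_read += 1  # each Block pulled from the DB costs one IO
        for row in block:
            if row["doc"] == doc:
                return row, blocks_read
    return None, blocks_read


if __name__ == "__main__":
    store = build_row_store(ROWS)
    print(find_document(store, "5000000001")[1])  # 1
    print(find_document(store, "5000000003")[1])  # 3
```

With `rows_per_block=1`, finding 5000000003 costs three Block reads, mirroring the walk from Block 1 onwards described above.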

Let's take a functional requirement: sum up all the "Amount" values of consignment items. In other words, wherever the Special Stock is K, accumulate the Amount value.

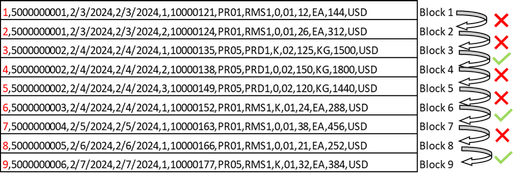

Let's see how the row store handles this and how many “Blocks” it has to read before coming up with a value. As shown in the figure below, the row store reads every Block from the DB before it calculates the final "Amount" value.

Fig 3:

To explain it a bit more: the system goes to the first row, looks at the Special Stock field and checks for “K”; if it isn't there, it moves to the next row and repeats the cycle. Whenever it finds a “K”, it adds that row's “Amount” to the sum.
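The row-by-row scan just described can be sketched as follows, again assuming one row per Block. The Block list and field names are hypothetical; the point is that every Block, and therefore every field of every row, is read even though only `special_stock` and `amount` matter for the answer.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]

# Hypothetical row store: each inner list is one Block holding one complete row.
BLOCKS: List[List[Row]] = [
    [{"doc": "5000000001", "plant": "P100", "special_stock": "", "amount": 100.0}],
    [{"doc": "5000000002", "plant": "P100", "special_stock": "K", "amount": 50.0}],
    [{"doc": "5000000003", "plant": "P200", "special_stock": "K", "amount": 70.0}],
    [{"doc": "5000000004", "plant": "P200", "special_stock": "", "amount": 30.0}],
]


def sum_consignment_amount(blocks: List[List[Row]], stock_type: str = "K") -> Tuple[float, int]:
    """Full table scan: check every row's special stock and add up the matching amounts.

    Returns (total amount, number of Blocks read).
    """
    total = 0.0
    blocks_read = 0
    for block in blocks:
        blocks_read += 1  # the whole row comes along, including fields we don't need
        for row in block:
            if row["special_stock"] == stock_type:
                total += float(row["amount"])
    return total, blocks_read


if __name__ == "__main__":
    print(sum_consignment_amount(BLOCKS))  # (120.0, 4)
```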

Although this method is simple (at least to explain), it scans the entire table, which could run into billions of rows. For one of the Retail clients we were working with, the projected growth in Material Documents was 9.46 billion per year, so you can imagine how such a query would perform in real time.

This is how Column Data Storage stores the data:

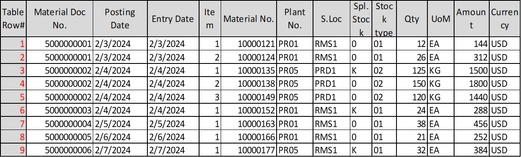

Fig 1 again:

With the same raw data we started with, the column store would look like this:

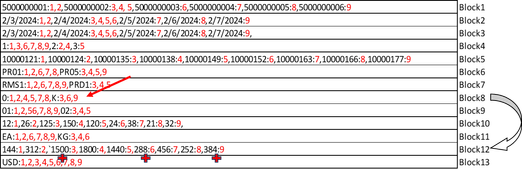

Fig 4:

The figure above is mainly to help you visualize that the Blocks in the DB are filled column-wise. A more realistic figure is the one below, which is just a transpose of Fig 4.

Fig 5:

Let's take this slowly since, as I mentioned, the column store is not very intuitive. A few noteworthy points:

- Columnar Data Storage fills the DB blocks column-wise. When a new Material Document, say 5000000007, comes next, the Doc no. goes to Block 1, the Posting Date goes to Block 2... the Plant goes to Block 6, and so on.

- The row nos. are still tagged to each field value (I have shown the row nos. in red in the table above and in the other tables). This is a very important concept: tagging each field value with its row no. is what connects the values together, even though they sit in different blocks.

- Reiterating that the above is a simplified example for easier understanding: in the real world we could have millions of Material doc nos., and the contiguous blocks containing them could number in the hundreds.
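The points above can be sketched in a few lines of Python, under the simplifying assumption that each column lives in its own Block and every value carries its row no. as a tag. `to_column_store`, `append_row` and `reassemble_row` are hypothetical helpers for illustration, and the sample rows are made up; HANA's real column format is considerably more involved.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]
# One list per column ("Block"); each entry is (row no., field value).
ColumnStore = Dict[str, List[Tuple[int, object]]]


def to_column_store(rows: List[Row]) -> ColumnStore:
    """Transpose rows into columns, tagging every value with its row no. (starting at 1)."""
    store: ColumnStore = {}
    for row_no, row in enumerate(rows, start=1):
        append_row(store, row_no, row)
    return store


def append_row(store: ColumnStore, row_no: int, row: Row) -> int:
    """Spread one new row across the column Blocks; return how many Blocks were written."""
    blocks_written = 0
    for field, value in row.items():
        store.setdefault(field, []).append((row_no, value))  # the row no. travels with the value
        blocks_written += 1
    return blocks_written


def reassemble_row(store: ColumnStore, row_no: int) -> Row:
    """Stitch a row back together by matching the row-no. tag in every column."""
    return {field: value for field, entries in store.items() for tag, value in entries if tag == row_no}


if __name__ == "__main__":
    # Hypothetical sample rows (not the figures' data).
    store = to_column_store([
        {"doc": "5000000001", "posting_date": "2024-01-15", "plant": "P100"},
        {"doc": "5000000002", "posting_date": "2024-01-15", "plant": "P100"},
    ])
    print(store["plant"])  # [(1, 'P100'), (2, 'P100')]
    print(append_row(store, 3, {"doc": "5000000007", "posting_date": "2024-01-16", "plant": "P200"}))  # 3
    print(reassemble_row(store, 3))
```

Appending one document touches every column Block, whereas a row store writes it to a single Block; the row-no. tag is all `reassemble_row` needs to put the values back together.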

Compression in Columnar Data Storage:

This is where it gets interesting. As you can see in Fig 5, each of the Blocks contains many duplicate field values. For instance, Material document 5000000001 is repeated twice and 5000000002 three times in Block 1, and Plants are repeated even more in Block 6. This is where compression kicks in and de-duplicates these field values.

Fig 6:

It's very important to note that the row tagging is still in place. For instance, 5000000001 is still tagged to Row nos. 1 and 2.
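The de-duplication in Fig 6 can be sketched like this: each distinct value is kept once, together with the row nos. it is tagged to. The sample column is hypothetical, and this value-to-row-list layout follows the article's simplified picture; HANA itself uses dictionary encoding (a dictionary of distinct values plus a per-row vector of value IDs), so treat this purely as an illustration.

```python
from typing import Dict, List, Tuple


def compress_column(column: List[Tuple[int, str]]) -> Dict[str, List[int]]:
    """Store each distinct value once, together with every row no. that carries it."""
    compressed: Dict[str, List[int]] = {}
    for row_no, value in column:
        compressed.setdefault(value, []).append(row_no)
    return compressed


# Hypothetical Material doc column as (row no., value) pairs, with duplicates like those in Fig 5.
DOC_COLUMN = [
    (1, "5000000001"), (2, "5000000001"),
    (3, "5000000002"), (4, "5000000002"), (5, "5000000002"),
    (6, "5000000003"),
]

if __name__ == "__main__":
    print(compress_column(DOC_COLUMN))
    # {'5000000001': [1, 2], '5000000002': [3, 4, 5], '5000000003': [6]}
```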

It's worth reminding ourselves that in SAP most transaction data field values are actually either Master data (like the Material no. in the above example) or Configuration data (like Plant, S.Loc, UoM, Stock type etc. in the above example). So the scope for compression is very high, since Master data and Config data values are very few in number compared to transaction data. This is a major reason the "Data Footprint" is smaller in S/4HANA than in ECC, so when you migrate from ECC to S/4HANA you typically require much less disk space.
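To put a rough number on why low-cardinality fields like Plant compress so well, the sketch below applies simple dictionary encoding: each distinct value is stored once in a sorted dictionary, and each row keeps only a small integer ID needing ceil(log2(number of distinct values)) bits. The 100,000-row Plant column is made up, and the bit count ignores the dictionary itself and any further compression HANA applies, so read it as an order-of-magnitude illustration only.

```python
import math
from typing import List, Tuple


def dictionary_encode(values: List[str]) -> Tuple[List[str], List[int]]:
    """Return (sorted distinct values, one dictionary position per row)."""
    dictionary = sorted(set(values))
    position = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [position[v] for v in values]


def bits_per_row(dictionary: List[str]) -> int:
    """Bits needed to address any dictionary entry (at least 1)."""
    return max(1, math.ceil(math.log2(len(dictionary))))


if __name__ == "__main__":
    # Hypothetical Plant column: 100,000 line items spread over only 4 plants.
    plants = ["P100", "P200", "P300", "P400"] * 25_000
    dictionary, ids = dictionary_encode(plants)
    raw_bits = sum(len(p) * 8 for p in plants)          # 4 ASCII characters per value
    encoded_bits = len(ids) * bits_per_row(dictionary)  # 2 bits per row
    print(len(dictionary), raw_bits, encoded_bits)      # 4 3200000 200000
```

In this toy case the per-row data shrinks about 16-fold; a high-cardinality column such as the Material doc no. would gain far less.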

Final piece - How the queries are blazing fast:

It took us some time to understand how Columnar Data Storage works, but I hope it was worth it. Next comes querying. Faster querying is the other big advantage, arguably the USP, of Columnar Data Storage. Let's see an example: back to our functional requirement to "sum up all the Amount values of consignment items", and how Columnar Data Storage fares here.

Fig 7:

Here the system goes directly to Block 8, where the special stock values are stored, checks for “K” and picks up the Row nos. tagged to K. It then goes to Block 12 and sums up all the “Amount” values tagged with those Row nos. Compare Fig 7 with Fig 3 (row based), where there were multiple Block reads from the DB, which are very expensive from a performance standpoint. Finally it made sense to me how a 6-hour query in SAP ECC could be executed in less than 10 seconds in S/4HANA.
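The columnar version of the same query can be sketched as below. A small hypothetical `ColumnStore` wrapper records which columns are touched, to show that only the Special Stock and Amount columns are read while Doc no., Posting Date and Plant are never loaded. The data and the class are illustrative assumptions, not how HANA exposes its storage.

```python
from typing import Any, Dict, List, Set


class ColumnStore:
    """Toy column store that records which columns (Blocks) a query reads."""

    def __init__(self, columns: Dict[str, Any]) -> None:
        self._columns = columns
        self.columns_read: Set[str] = set()

    def column(self, name: str) -> Any:
        self.columns_read.add(name)  # remember every column actually read
        return self._columns[name]


def sum_for_stock_type(store: ColumnStore, stock_type: str = "K") -> float:
    row_nos: List[int] = store.column("special_stock").get(stock_type, [])  # step 1: rows tagged to K
    amounts: Dict[int, float] = store.column("amount")                       # step 2: the Amount column
    return sum(amounts[r] for r in row_nos)


# Hypothetical data, 5 rows: special_stock is stored compressed (value -> row nos.);
# the other columns map row no. -> value.
STORE = ColumnStore({
    "doc": {1: "5000000001", 2: "5000000001", 3: "5000000002", 4: "5000000002", 5: "5000000003"},
    "posting_date": {r: "2024-01-15" for r in range(1, 6)},
    "plant": {r: "P100" for r in range(1, 6)},
    "special_stock": {"": [1, 4], "K": [2, 3, 5]},
    "amount": {1: 100.0, 2: 50.0, 3: 70.0, 4: 30.0, 5: 25.0},
})

if __name__ == "__main__":
    print(sum_for_stock_type(STORE))   # 145.0
    print(sorted(STORE.columns_read))  # ['amount', 'special_stock']
```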

The not-so-good side of Columnar Data Storage:

1. Writes (new record inserts) are not as efficient. In a Row Data Storage, a new record insert (like a new Material document creation) is simple: it just gets written to the next available Block in the DB. In Columnar Data Storage, the field values have to be distributed across multiple Blocks. SAP HANA manages this through techniques like Delta Merge (refer: https://help.sap.com/docs/SAP_HANA_PLATFORM/6b94445c94ae495c83a19646e7c3fd56/bd9ac728bb57101482b2ebf...)

2. Thoughtful querying is required. Say we write a statement like SELECT * (i.e. all field values) WHERE Stock type = K. This tries to pull all the field values, and remember these values are spread across multiple blocks, so there would be too many DB reads, which slows the system down again. We have seen real-life cases where bad queries made S/4HANA much slower than ECC.
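Both trade-offs can be sketched in one toy Python model, under clearly simplified assumptions. `ColumnWithDelta` illustrates the general idea behind delta merge: inserts are appended cheaply to a write-optimized delta, and a periodic merge re-encodes everything into the read-optimized main. It is not HANA's actual implementation. `select` shows why SELECT * hurts in a column store: every requested field is a separate column to read, whereas projecting only the needed fields keeps the read to a couple of columns. All names and data are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


class ColumnWithDelta:
    """One column: dictionary-encoded main plus an append-only delta for new inserts."""

    def __init__(self) -> None:
        self.main_dictionary: List[str] = []
        self.main_ids: List[int] = []
        self.delta: List[str] = []

    def insert(self, value: str) -> None:
        self.delta.append(value)  # cheap write: the main and its dictionary stay untouched

    def values(self) -> List[str]:
        """Readers see main + delta together."""
        return [self.main_dictionary[i] for i in self.main_ids] + self.delta

    def merge(self) -> None:
        """Re-encode all values into a new main with a fresh sorted dictionary; empty the delta."""
        all_values = self.values()
        self.main_dictionary = sorted(set(all_values))
        position = {v: i for i, v in enumerate(self.main_dictionary)}
        self.main_ids = [position[v] for v in all_values]
        self.delta = []


def select(table: Dict[str, List[object]], where_col: str, where_val: object,
           fields: Optional[List[str]] = None) -> Tuple[List[Dict[str, object]], int]:
    """Filter on one column and project fields; fields=None behaves like SELECT *.

    Returns (result rows, number of columns read).
    """
    wanted = list(table) if fields is None else fields
    hits = [i for i, v in enumerate(table[where_col]) if v == where_val]
    rows = [{f: table[f][i] for f in wanted} for i in hits]
    return rows, len(set(wanted) | {where_col})


if __name__ == "__main__":
    plant = ColumnWithDelta()
    for p in ["P200", "P100", "P200"]:
        plant.insert(p)
    plant.merge()
    print(plant.main_dictionary, plant.main_ids)  # ['P100', 'P200'] [1, 0, 1]

    table = {  # hypothetical five-column table
        "doc": ["5000000001", "5000000002", "5000000003", "5000000004"],
        "posting_date": ["2024-01-15", "2024-01-15", "2024-01-16", "2024-01-16"],
        "plant": ["P100", "P100", "P200", "P200"],
        "special_stock": ["", "K", "K", ""],
        "amount": [100.0, 50.0, 70.0, 30.0],
    }
    print(select(table, "special_stock", "K")[1])              # 5 columns read
    print(select(table, "special_stock", "K", ["amount"])[1])  # 2 columns read
```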

Conclusion:

I hope this blog gave you a glimpse of what's under the hood of Columnar Data Storage. As most organizations are moving from ECC to S/4HANA, I feel it is all the more crucial now to understand these intricacies to implement and run the business.

Please like, share and comment with anything else you would like to add.

