The day my iFlows graduated from DevOps-High – add...
Part 1 of this series sheds light on automatic ticket creation for failed iFlows, tracking the fix via Git and end-to-end deployment.

Dear community,

Last time we spoke about applying some nice DevOps practices to your iFlow developments on SAP CPI. We covered agile development with Azure Boards integration, linking code changes to user stories or issues, releasing the groovy scripts to SAP CPI and finally deploying the updated iFlow in your tenant automatically. You can find the corresponding blog post here.

However, the continuous integration process in the first post was incomplete. Today I would like to complete the example with automated unit testing using JUnit and the Spock framework, including code coverage. Once that is done, I can release my precious little iFlows into the wild to fend for themselves.

An evolution of testing your SAP CPI Groovy scripts

Most examples on this awesome CPI community space refer to testing your Groovy scripts manually on your local development machine. Below is an evolution of the matter as I perceived it.

- vadim.klimov 's post on how to get the SAP-specific Java libraries from your CPI tenant. With that you could finally do actual debugging from your IDE. https://blogs.sap.com/2017/10/02/dark-side-of-groovy-scripting-behind-the-scenes-of-cloud-integratio...

- Vadim's post, combined with this one by engswee.yeoh, changed my iFlow development forever back in the day: https://blogs.sap.com/2017/10/06/how-do-you-test-your-groovy-scripts/

- Eng Swee moved it one step further with a nice testing framework and introduced test-driven-design principles with Spock: https://blogs.sap.com/2018/01/24/cpis-groovy-meets-spock-to-boldly-test-where-none-has-tested-before...

- Vadim Klimov developed a Groovy wrapper script that allows you to send Groovy scripts via HTTP (with Postman, for instance) and get immediate feedback on them. That drives down testing time, as you don’t need to wait for your iFlow to be deployed. For independent cases this is a really cool approach: https://blogs.sap.com/2019/06/17/rapid-groovy-scripting-in-cpi-dynamic-loading-and-execution-of-scri...

- A commercial option with the local Figaf tool and a post by daniel.graversen: https://blogs.sap.com/2019/08/25/run-unit-test-for-sap-cpi-easier/

- Avoiding local development at all cost:

- Great Chrome extension by @Dominic Beckbauer for enhanced tracing: https://blogs.sap.com/2020/03/05/cpi-chrome-plugin-to-enhance-sap-cloud-platform-integration-usabili...

- Nice post by fatih.pense on the simulation mode on the CPI web-ui: https://blogs.sap.com/2020/04/13/testing-parts-of-flows-in-cpi-with-the-simulation-feature/. He also created a website for live testing of groovy scripts: https://blogs.sap.com/2020/04/13/share-and-store-cpi-groovy-scripts-with-links/

- Chrome Extension to do more sophisticated groovy syntax checks on the SAP CPI web-ui: https://blogs.sap.com/2020/05/22/supereasy-groovy-syntax-checker-for-cpi/

All these options increase the quality of your SAP CPI development practices. However, they don’t allow you to go all in with DevOps for continuous integration and continuous deployment (CI/CD). I know you are anxious to finally see some code and pictures, so let’s dive into it.

The moving parts to make it happen

As I said in the beginning, unit testing is the missing piece in my example for a more complete continuous integration DevOps practice.

Fig.1 Integration project overview

The integration journey starts on the bottom right of fig.1 with your IDE. In my case that is Eclipse, because I wanted to be able to debug my CPI groovy scripts locally. Not all the IDEs support groovy fully for that. There are other examples on the community for IntelliJ for instance.

- Once you do a Git pull request or push, you trigger the whole CI/CD chain. There are keywords for Azure Boards and Jira Cloud that automatically update the status of the user story or issue to complete/done. In my example with Azure Boards this is “Fixes AB#414”.

Fig.2 Item status transition based on GitHub commit

- The GitHub integration updates the user story based on the request comment that links the ID, and starts the build process on Azure Pipelines.

Fig.3 Screenshot from GitHub request

- To be able to execute unit tests from Azure Pipelines runners, I configured my project with the build-management tool Gradle. Please note that I used specific versions that create the desired results now; it might need some refactoring going forward. At this point it was most important to me to have repeatable results locally and on the Azure DevOps runner. Gradle developers would probably polish a couple of my setups. The unit tests are contained in “src/test/groovy/”. The actual source of the iFlow and the Groovy scripts can be found under “src/main/groovy/”. That way I can keep using my Python script “Templates/download-package.py” to get updates from the CPI tenant for changes made on the web UI, while keeping the ability to run unit tests at the same time.

Fig.4 Gradle project structure

- To make sure that integration developers apply a decent level of unit testing, a code coverage scan is performed. Azure Pipelines has built-in support for JaCoCo and Cobertura. I configured Gradle to use JaCoCo. The code coverage threshold is 75%, meaning the build fails if the unit tests don’t cover enough “ground” on my Groovy scripts. You can configure various flavours of coverage, such as by line, by code block or by branch (e.g. created by if-conditions). In software engineering there are many metrics to measure the “completeness” of your testing. That quickly becomes a mathematical or even a stochastic problem if you have many branches and conditions. With our boxed use cases in iFlow development it shouldn’t matter much whether you go for straightforward line coverage or branch coverage, for instance. I went for “C0” coverage. Here is a nice post with a simple overview of the different magnitudes of testing completeness, describing the metrics from the software testing discipline. On my project there are examples for message headers, properties and whole XML.

Fig.5 Screenshot from Azure build pipe and code coverage settings

Fig.6 Screenshot from JaCoCo output for script1.groovy

Fig.7 Screenshot from unit-test run on eclipse

- Once the build completes it passes on the project structure to the release pipeline automatically. We don’t have any compiled software parts because we need only the groovy scripts to update the code on SAP CPI. The release pipe performs the actual update for the continuous deployment part.

Fig.8 Screenshot from release pipe on Azure DevOps

- Steps 6-7: I integrated with SAP CPI’s API using a Python script to update the Groovy scripts of the targeted iFlows. After the update, the corresponding iFlow is deployed automatically. That way you get true CD.
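To make the coverage gate from the build step more concrete, here is a minimal sketch of how a 75% threshold could be expressed in a Gradle build script with the JaCoCo plugin. It is not the project's actual build file. The `LINE` counter is an assumption that approximates the “C0” coverage mentioned above, and wiring the verification into `check` is just one possible choice.

```groovy
// build.gradle fragment (sketch, not the project's actual build script)
plugins {
    id 'groovy'
    id 'jacoco'
}

// Fail the build when the unit tests cover less than 75% of the lines.
jacocoTestCoverageVerification {
    violationRules {
        rule {
            limit {
                counter = 'LINE'          // assumption: line counter as a stand-in for C0
                value = 'COVEREDRATIO'
                minimum = 0.75
            }
        }
    }
}

// Run the coverage verification as part of the regular "check" lifecycle task.
check.dependsOn jacocoTestCoverageVerification
```

With a setup like this, a build that runs `gradle check` on the pipeline runner would stop before the release stage whenever coverage drops below the limit.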

Ok, we have established how to do proper continuous integration with unit testing and how it fits into the overall picture of DevOps practices. Looks great, doesn’t it? I agree but there is a coding practice, where the approach really starts to shine compared to local testing.

Sharing scripts across iFlows

Two of the well-established design principles of software engineering are “separation of concerns” and “reusability”. In larger integration projects on CPI there is a good chance that you will need the same method to create or modify a message in several different places. An example could be the need to create an OData timestamp.
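As a minimal sketch of such a reusable helper, the snippet below wraps epoch milliseconds in the `/Date(...)/` notation used for OData V2 timestamps. The function name `toODataTimestamp` is hypothetical and chosen only for this illustration.

```groovy
// Hypothetical helper for illustration: formats epoch milliseconds as an
// OData V2 timestamp literal.
static String toODataTimestamp(long epochMillis) {
    return "/Date(${epochMillis})/"
}

// Fixed input so the result is predictable
assert toODataTimestamp(0L) == '/Date(0)/'

// Typical call with the current time
println toODataTimestamp(System.currentTimeMillis())
```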

I’d like to show you two options for re-using such a function in any iFlow on your tenant. That way, future enhancements or bug fixes need to happen in only one place.

First, you need a way to host the shared script.

Option1: The mighty but unsupported utils iFlow

A great way to do that is a separate iFlow. That way you have only one instance of the script, and deployment is straightforward. To load the Groovy script from the Utils-iFlow at runtime, we need to rely on the findings from @Vadim Klimov. He investigated the CPI and found that SAP uses OSGi as its service runtime.

Vadim also helped me with the snippet for the request:

import com.sap.gateway.ip.core.customdev.util.Message

import org.osgi.framework.Bundle

import org.osgi.framework.BundleContext

import org.osgi.framework.FrameworkUtil

String flowName = 'iFlowUtils'

String scriptName = 'myUtils.groovy'

// Get bundle context and from it, access reusable iFlow bundle

BundleContext context = FrameworkUtil.getBundle(Message).bundleContext

Bundle utilsBundle = context.bundles.find { it.symbolicName == flowName }

// Within the bundle, access reusable script and read its content

String customStringUtilsScriptContent = utilsBundle.getEntry("script/$scriptName").text

// Parse script content and execute its function

// (prefix, delimiter and value are expected to be defined by the calling script)

Script customStringUtilsScript = new GroovyShell().parse(customStringUtilsScriptContent)

def result = customStringUtilsScript.addPrefix(prefix, delimiter, value)

The shared method looks like this:

package iFlowUtils.src.main.resources.script

static String addPrefix(String prefix, String delimiter, String value){

return "$prefix$delimiter$value"

}

static String getCurrentOdataTime(String timemills){

// https://blogs.sap.com/2017/01/05/date-and-time-in-sap-gateway-foundation/

return "/Date($timemills)/"

}

Unfortunately, this approach is prone to breaking changes by SAP. It relies on the current way SAP deploys the CPI runtime. If SAP drops OSGi, for instance, it will stop working.

Option2: Attached jar-files with single build step

I am leveraging SAP’s iFlow feature to add custom libraries to your groovy scripts as JAR-files.

This means every iFlow that wants to call the “shared” method needs a copy of the JAR file.

Fig.9 Screenshot from SAP CPI web-ui iFlow resources

But how does this work from a single source file? Well, gradle can create JAR files and the API of SAP CPI offers not only the option to upload groovy but also JARs. With the JAR attached you can simply import the class to your groovy script.

Fig.10 Screenshot of gradle build script

This build step adds the JAR to the lib folder of the target iFlow “TriggerError”. That doesn’t matter much for the deployment, but it resembles the internal iFlow structure. My goal was that you could always zip the folder of an iFlow and upload it to SAP CPI immediately if you want.
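A build step of this kind could look roughly like the following Gradle fragment. This is a sketch under assumptions, not the build script from the screenshot: the task name `copyUtilsJar` and the target path inside the “TriggerError” folder are hypothetical.

```groovy
// build.gradle fragment (sketch, not the project's actual build script)
plugins {
    id 'groovy'
}

// Copy the JAR produced by the standard "jar" task into the lib folder
// of the target iFlow. Task name and target path are hypothetical.
task copyUtilsJar(type: Copy) {
    from jar    // uses the output archive of the jar task and runs it first
    into 'TriggerError/src/main/resources/lib'
}

// Make the copy part of a regular build.
build.dependsOn copyUtilsJar
```

Adding further `Copy` tasks, or more `into` targets driven by a list of iFlow folders, would be one way to place the same JAR next to several iFlows from a single source.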

Fig.11 GitHub folder structure for iFlow sources

Fig.12 Screenshot from python-script to update files on CPI

Just alter the Python script to distribute the JAR file with the shared Groovy script to as many iFlows as you want. Cool, right? This way you have a single source but can still re-use the functions in multiple iFlows. One downside is that you cannot look at the Groovy script inside the JAR file at runtime. The upside is a lower risk of breaking changes by SAP, because this is a supported approach for adding libraries to your iFlows.
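To illustrate the distribution idea, the sketch below builds, but does not send, one update request per target iFlow. The pipeline described in this post uses a Python script for that task; Groovy is used here only to stay in the language of the other snippets. The endpoint shape, the `ResourceContent` field and the `jar` resource type are assumptions that should be checked against SAP's API documentation, and the tenant URL, iFlow IDs and file name are made up.

```groovy
import groovy.json.JsonOutput

// Builds (but does not send) the request that would replace one resource of an iFlow.
// Endpoint shape and payload field are assumptions to verify against SAP's API docs.
Map buildResourceUpdate(String tenantUrl, String iflowId, String resourceName,
                        String resourceType, byte[] content) {
    String url = "${tenantUrl}/api/v1/IntegrationDesigntimeArtifacts(Id='${iflowId}',Version='active')" +
                 "/\$links/Resources(Name='${resourceName}',ResourceType='${resourceType}')"
    return [
        method: 'PUT',
        url   : url,
        body  : JsonOutput.toJson([ResourceContent: content.encodeBase64().toString()])
    ]
}

// Hypothetical tenant, iFlow IDs and JAR name
def targetIflows = ['TriggerError', 'AnotherIflow']
byte[] jarBytes = 'dummy jar content'.bytes   // in practice, the bytes of the built JAR file

// One request description per iFlow that should receive the shared JAR
def requests = targetIflows.collect { iflowId ->
    buildResourceUpdate('https://my-tenant.example.com', iflowId, 'iFlowUtils.jar', 'jar', jarBytes)
}

requests.each { println "${it.method} ${it.url}" }
```

Keeping request construction separate from sending makes the distribution logic easy to unit-test without a tenant.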

Thoughts on pipelining-strategy and iFlow development

In my example the CI/CD process updates all the mentioned iFlows using the Python script shown in Fig.12, even if you changed only one particular script. To become more flexible, you could do one of the following things:

Create a whole Azure DevOps project per iFlow

I believe this is a little overkill, because the governance tasks for organizing the developer team and the Scrum process in Azure Boards will not differ between iFlows.

Create a pipeline per iFlow with individual git repos

From a transparency perspective this is very clear and code-wise cleanly separated. It creates maintenance overhead for the Git repos, though.

Create a pipeline per iFlow with single git repos and path filter for build triggers

This approach gets the best of both options mentioned before. The setup is easy to understand and you save the maintenance effort on multiple Git repos. The filter setup to run the trigger only for your target iFlow looks like this:
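As a hedged sketch of such a path filter in YAML form, a pipeline definition for the “TriggerError” iFlow could restrict its CI trigger as follows. The branch name and the folder layout are assumptions, and a classic (UI-based) build pipeline would hold the same settings in its trigger configuration instead.

```yaml
# azure-pipelines.yml for one iFlow (sketch; branch and folder names are assumptions)
trigger:
  branches:
    include:
      - master
  paths:
    include:
      - TriggerError/*
```

With this filter, a push that only touches other iFlow folders would not start this pipeline.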

Getting test messages for your unit-tests

A straightforward way to get CPI messages to test your groovy developments is the CPI trace feature. From there you can download the payload.

Fig.13 Screenshot of message trace view on CPI web-ui
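Once a payload has been downloaded, it can be fed into a script locally. The sketch below shows the idea without the SAP libraries and without Spock: `FakeMessage` is a hypothetical stand-in for SAP's `Message` class that mimics only the few methods used here, and the `processData` logic and the sample payload are made up for illustration.

```groovy
// Stand-in for SAP's Message class so the sketch runs without the CPI libraries.
// Only the few methods used below are mimicked.
class FakeMessage {
    private Object payload
    private final Map<String, Object> headerMap = [:]

    def getBody(Class type) { type.cast(payload) }
    void setBody(Object newBody) { payload = newBody }
    Map<String, Object> getHeaders() { headerMap }
    void setHeader(String name, Object value) { headerMap[name] = value }
}

// Script under test (illustrative): copies the order number from the payload into a header.
def processData(message) {
    String body = message.getBody(String)
    def matcher = body =~ '<Number>(\\d+)</Number>'
    message.setHeader('OrderNumber', matcher.find() ? matcher.group(1) : '')
    return message
}

// Payload as it might have been downloaded from the trace view (made-up sample)
String tracedPayload = '<Order><Number>4711</Number></Order>'

def message = new FakeMessage()
message.setBody(tracedPayload)
def result = processData(message)

assert result.getHeaders()['OrderNumber'] == '4711'
```

In the real project the stand-in would be replaced by the `Message` implementation from the CPI libraries, and the assertion would live in a JUnit or Spock test under “src/test/groovy/”.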

In the spirit of automation that runs through this post on CI/CD, I wanted to use the API of CPI to retrieve the last five messages for test runs. However, it seems that this part of the API is not implemented yet by SAP. I will investigate some more and let you know once the Python script to download the message traces becomes available 🙂

Fig.14 Screenshot of Postman for missing implementation of TraceMessages interface

Hints for replicating the environment

You will need to get the artifacts to enable debugging and unit-test execution for the Message class. You can find guidance on the structure in Cloud Foundry here:

- https://blogs.sap.com/2017/10/02/dark-side-of-groovy-scripting-behind-the-scenes-of-cloud-integratio...

- https://blogs.sap.com/2019/12/02/execution-of-osgi-shell-commands-on-cpi-runtime-node/

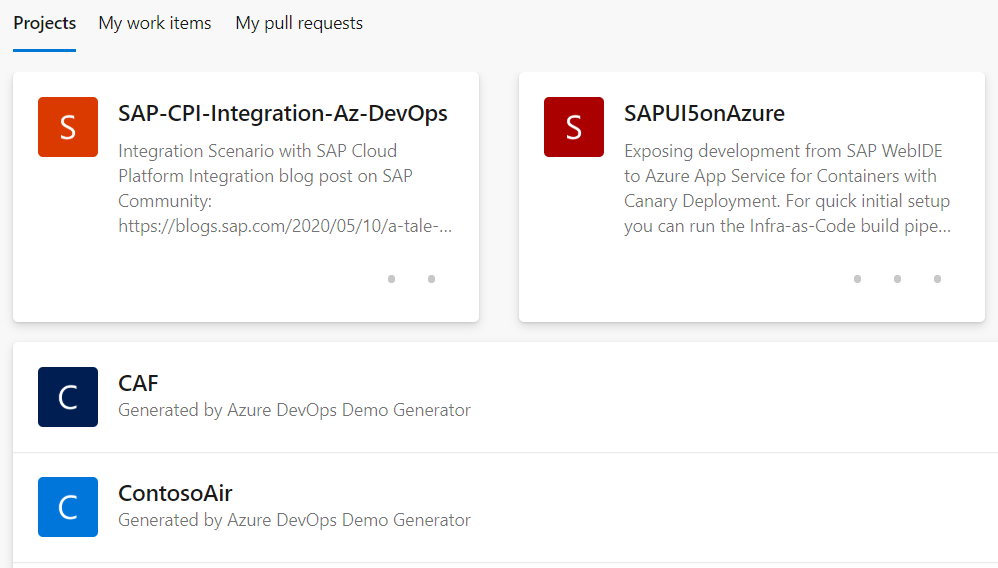

GitHub Repos: https://github.com/MartinPankraz/SAPCPI-Az-DevOps

Azure DevOps project: https://dev.azure.com/mapankra/SAP-CPI-Integration-Az-DevOps

Final Words

Aaaaand done! A full-cycle DevOps process for your SAP CPI iFlows with Azure DevOps. We have agile planning support with Azure Boards (or Jira Cloud), continuous integration with unit testing (JUnit + Spock) and a code coverage quality gate. In addition, I showed how you could re-use Groovy script methods across multiple iFlows. In such a setup CI/CD becomes very valuable, because changes to the shared methods impact all dependent Groovy scripts on the other iFlows. On top of that, you need to update only one script for feature enhancements or bug fixes.

Ready to enhance your iFlow development practices? Are you mostly using the web-ui? I’d like to hear from you how you ensure deployment quality.

#KUDOS to vadim.klimov and engswee.yeoh for their invaluable feedback and guidance on the tricky parts with OSGi and this post in general.

As always, feel free to leave comments or ask lots of follow-up questions.

Best Regards

Martin
