Using SAP Data Intelligence to send reporting data...

Developer Advocate

09-09-2020

6:49 PM

In this blog post, I will cover how SAP Data Intelligence can be used to extract data from the SAP Ariba APIs and populate a dataset in SAP Analytics Cloud. The goal is to extract the suppliers that have been set to inactive in the past 30 days in SAP Ariba and save the results in a dataset in SAP Analytics Cloud.

To achieve sending data from SAP Ariba to SAP Analytics Cloud we will need a few things first:

Now that we have access to the different systems, I will proceed to explain how to get SAP Data Intelligence talking with SAP Ariba and SAP Analytics Cloud.

I will build a pipeline in SAP Data Intelligence whose goal is to extract inactive suppliers in SAP Ariba and update a dataset in SAP Analytics Cloud. To accomplish this we will do the following:

The pipeline end result is shown in the figure below.

As some API specific parameters (header, query parameters) are not possible to set via the OpenAPI client operator UI, I will need to set them up via code using the Javascript operator. The code snippet below shows the different parameters that I need to set up for the OpenAPI client operator. Example of header and query parameters set in the Javascript operator:

In the OpenAPI client operator I configured 2 things:

Ariba API configuration:

The Python3 operator will be responsible of processing the OpenAPI client response. It will remove the active suppliers that might be included in the response and outputs only inactive suppliers. For simplicity reasons, the Python3 operator will output a string containing the inactive suppliers in CSV format.

Below the Python3 operator code snippet:

The Decode table operator converts the Python3 operator output string, in CSV format, and converts it into a table. This table is what the SAP Analytics Cloud producer expects to create/replace/append to a dataset in SAP Analytics Cloud.

Configuration:

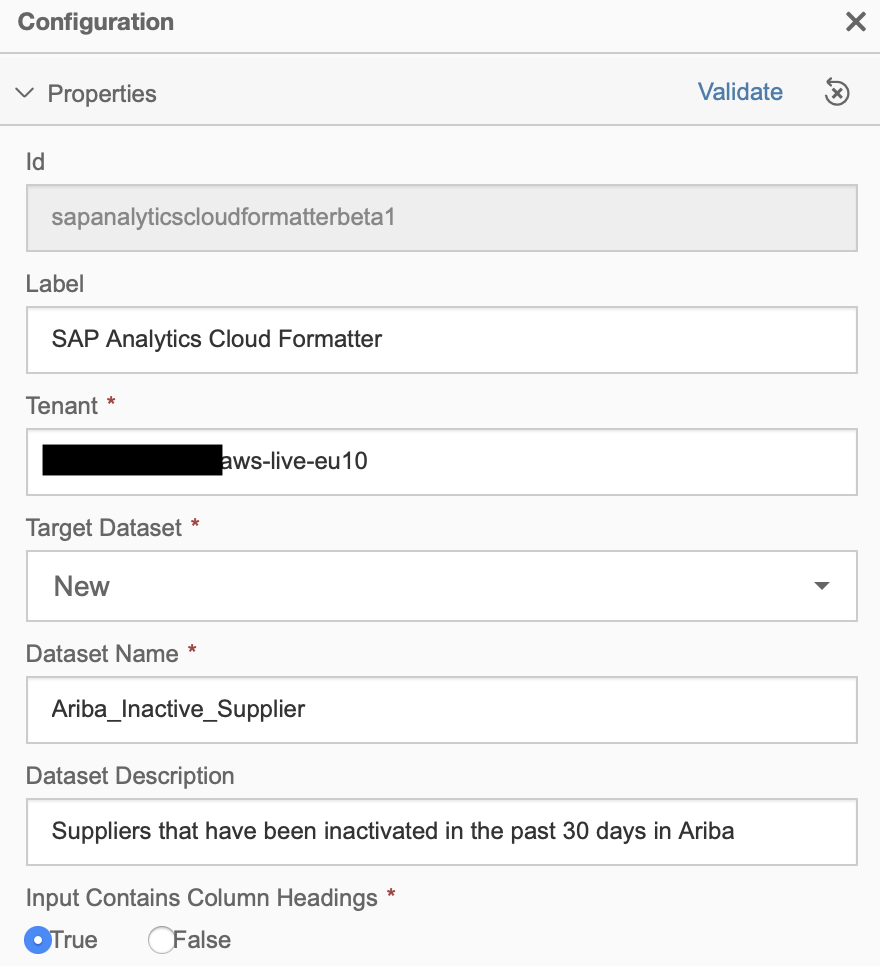

I now proceed to configure the dataset details in the SAP Analytics Cloud formatter operator. Note that in the configuration below I set the Target Dataset field as New.... after running the pipeline for the first time we can set it to Replace so that every time the pipeline runs, it will replace the dataset in SAP Analytics Cloud. I copied the dataset ID from the dataset created and set the Target Dataset option to replace and specify the dataset ID.

All I'm missing now is the SAP Analytics Cloud producer OAuth 2.0 details and we are ready to run the pipeline.

Now that all steps are completed, I run the SAP Data Intelligence pipeline and check the results in SAP Analytics Cloud.

As we can see, we have created a dataset containing only inactive suppliers in SAP Analytics Cloud. We can now use this dataset to create a report/dashboard in SAP Analytics Cloud.

To send data from SAP Ariba to SAP Analytics Cloud, we first need a few things:

- Create an application in the SAP Ariba Developer Portal and request API access. Unfortunately, there is no trial version of SAP Ariba, but I guess that if you are reading this blog post, it is because your company uses SAP Ariba :-). In a previous blog post (Using the SAP Ariba APIs to extract inactive suppliers), I covered how to create an application and request API access in the SAP Ariba Developer Portal. In this post, I will consume the same API (Operational reporting API for strategic sourcing). Check out that blog post if you need to request API access for your SAP Ariba realm or aren't familiar with the API.

- Access to an SAP Data Intelligence instance. It is possible to deploy a trial instance of SAP Data Intelligence in your favourite hyperscaler if you don’t have access to an instance. If you want to find out how, follow the Instantiate and Explorer SAP Data Intelligence tutorial available at https://developers.sap.com.

- Access to an SAP Analytics Cloud instance. SAP Analytics Cloud offers a free trial, so go ahead and create a trial account if you don’t have access to an instance. An OAuth 2.0 client in SAP Analytics Cloud is also required. Details on how to create the OAuth 2.0 client that SAP Data Intelligence needs can be found in this blog post by ian.henry.

Now that we have access to the different systems, I will proceed to explain how to get SAP Data Intelligence talking with SAP Ariba and SAP Analytics Cloud.

I will build a pipeline in SAP Data Intelligence whose goal is to extract inactive suppliers in SAP Ariba and update a dataset in SAP Analytics Cloud. To accomplish this we will do the following:

- Set up a Javascript operator to set attributes required by the OpenAPI client operator.

- Authenticate and retrieve reporting data from the Operational reporting API for strategic sourcing using the OpenAPI client operator.

- Use the Python3 operator to do some data manipulation: the API response returns both active and inactive suppliers, and we are only interested in reporting inactive suppliers.

- Use the Decode Table operator to convert the CSV output from the Python3 operator. This is required to send the data to SAP Analytics Cloud.

- Configure the SAP Analytics Cloud formatter and producer with our tenant details.

The pipeline end result is shown in the figure below.

SAP Data Intelligence pipeline

Step 1 - Set up Javascript operator

Some API-specific parameters (header and query parameters) cannot be set via the OpenAPI client operator UI, so I set them in code using the Javascript operator. The header and query parameters set in the Javascript operator are:

- Headers:

  - apiKey: The SAP Ariba Application ID is required in our API request.

- Query:

  - realm: SAP Ariba realm code.

  - includeInactive: The API response will include inactive objects.

  - filters: The date filters are dynamic, so that the request only retrieves the suppliers updated in the past 30 days.

```javascript
$.addGenerator(start);

// Build the message whose attributes configure the OpenAPI client operator
function generateMessage() {
    // Process the dates to be used in the API request filter parameter
    var currentDate = new Date();
    var dateTo = new Date(currentDate.getTime());
    var dateFrom = new Date(currentDate.getTime());
    // setDate() rolls over month boundaries when the day is <= 0
    dateFrom.setDate(currentDate.getDate() - 30);

    var updatedDateFrom = dateFrom.toISOString().slice(0, 10) + "T00:00:00Z";
    var updatedDateTo = dateTo.toISOString().slice(0, 10) + "T00:00:00Z";

    var msg = {};
    msg.Attributes = {};
    msg.Attributes["openapi.consumes"] = "application/json";
    msg.Attributes["openapi.produces"] = "application/json";
    msg.Attributes["openapi.method"] = "GET";

    // Set API header parameters
    msg.Attributes["openapi.header_params.apiKey"] = "ARIBA_API_KEY";
    msg.Attributes["openapi.path_pattern"] = "/views/InactiveSuppliers";

    // Set API query parameters
    msg.Attributes["openapi.query_params.realm"] = "realm-t";
    msg.Attributes["openapi.query_params.includeInactive"] = "true";
    msg.Attributes["openapi.query_params.filters"] = '{"updatedDateFrom":"' + updatedDateFrom + '","updatedDateTo":"' + updatedDateTo + '"}';
    msg.Attributes["openapi.form_params.grant_type"] = "openapi_2lo";

    return msg;
}

// Generator callback: emit a single message when the pipeline starts
function start(ctx) {
    $.output(generateMessage());
}
```
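The date window is the part of the snippet above that is most likely to need adjusting. As a minimal sketch in Python (not part of the pipeline; `build_filters` and its `days` parameter are hypothetical names of my own), the same `filters` string could be built as follows. It assumes `now` is expressed in UTC, so the calendar date does not shift with the local time zone.

```python
import json
from datetime import datetime, timedelta, timezone


def build_filters(now=None, days=30):
    """Return the JSON string for the `filters` query parameter.

    Hypothetical helper: the window runs from midnight `days` days ago
    to midnight of the current day, both formatted like the Javascript
    operator's output.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    date_to = now.date()
    date_from = date_to - timedelta(days=days)
    return json.dumps(
        {
            "updatedDateFrom": f"{date_from.isoformat()}T00:00:00Z",
            "updatedDateTo": f"{date_to.isoformat()}T00:00:00Z",
        },
        separators=(",", ":"),  # compact output, no spaces
    )


if __name__ == "__main__":
    print(build_filters(datetime(2020, 9, 9, 18, 49, tzinfo=timezone.utc)))
```

Passing a fixed `now` makes the window easy to check by hand before changing the number of days in the operator.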

Step 2 - Configure the OpenAPI client operator

In the OpenAPI client operator I configured two things:

- OAuth 2.0 credentials

- Operational reporting API details: Not all parameters (e.g. filters) can be specified dynamically in the operator configuration, which is why the code in Step 1 is required.

SAP Ariba OAuth 2.0 authentication details

Ariba API configuration:

SAP Ariba Operational Reporting API configuration
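To make it easier to picture what the operator sends once the attributes from Step 1 and this configuration are combined, here is a rough Python sketch of the resulting GET request. It is only an illustration: `BASE_URL` is a placeholder, not the real SAP Ariba endpoint, `build_request` is a hypothetical helper, and the OAuth 2.0 bearer token that the operator obtains is left out.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Placeholder only: use the base URL configured in the OpenAPI client operator.
BASE_URL = "https://api.example.invalid/reporting"


def build_request(base_url, realm, api_key, filters):
    """Return (url, headers) for a GET on the InactiveSuppliers view."""
    query = urlencode(
        {"realm": realm, "includeInactive": "true", "filters": filters}
    )
    url = f"{base_url}/views/InactiveSuppliers?{query}"
    # The Authorization header (OAuth 2.0 token) is deliberately omitted here.
    headers = {"apiKey": api_key, "Accept": "application/json"}
    return url, headers


if __name__ == "__main__":
    filters = '{"updatedDateFrom":"2020-08-10T00:00:00Z","updatedDateTo":"2020-09-09T00:00:00Z"}'
    url, headers = build_request(BASE_URL, "realm-t", "ARIBA_API_KEY", filters)
    print(url)
    # Decode the query string again to confirm the filters survive URL encoding
    print(parse_qs(urlsplit(url).query)["filters"][0])
```

The view name, the `realm`, `includeInactive` and `filters` query parameters, and the `apiKey` header come from Step 1; everything else in the sketch is an assumption.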

Step 3 - Data manipulation in the Python3 operator

The Python3 operator is responsible for processing the OpenAPI client response. It removes any active suppliers included in the response and outputs only inactive suppliers. For simplicity, the Python3 operator outputs a string containing the inactive suppliers in CSV format.

Below is the Python3 operator code snippet:

```python
import json


def on_input(data):
    api.logger.info(f"OpenAPI client message: {data}")

    # Convert the body from bytes to string and replace single quotes.
    # Note: this assumes field values contain no apostrophes.
    json_string = data.body.decode('utf8').replace("'", '"')

    # Convert to a Python structure
    json_struct = json.loads(json_string)

    # CSV header line
    records_csv = "SMVendorID,Name,TimeUpdated\n"

    # Track number of inactive suppliers
    count = 0

    # Check that records exist in the response
    if 'Records' in json_struct:
        for record in json_struct['Records']:
            # Process only records that are inactive
            if not record['Active']:
                count += 1

                # Prepare CSV line
                line = f'"{record["SMVendorID"]}","{record["Name"]}","{record["TimeUpdated"]}"\n'
                api.logger.info(f"Line: {line}")
                records_csv += line

    api.logger.info(f"Total inactive suppliers processed: {count}")

    api.send("output", records_csv)


api.set_port_callback("input1", on_input)
```
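The operator code above depends on the `api` object that SAP Data Intelligence injects, so it cannot be run on a laptop as-is. As a minimal sketch for trying the filtering logic locally, the standalone function below does the same job. Note the differences, which are my own choices rather than the original approach: it uses the standard `csv` module so that names containing quotes or commas are escaped, it quotes the header line too, and the sample payload is made up to match the fields the operator reads.

```python
import csv
import io
import json


def inactive_suppliers_to_csv(body):
    """Return a CSV string with only the inactive suppliers in `body`."""
    payload = json.loads(body.decode("utf-8"))
    buffer = io.StringIO()
    writer = csv.writer(buffer, quoting=csv.QUOTE_ALL, lineterminator="\n")
    writer.writerow(["SMVendorID", "Name", "TimeUpdated"])
    for record in payload.get("Records", []):
        # Records without an "Active" field are treated as active and skipped
        if not record.get("Active", True):
            writer.writerow(
                [record["SMVendorID"], record["Name"], record["TimeUpdated"]]
            )
    return buffer.getvalue()


# Made-up sample shaped like the fields the operator code reads
SAMPLE = json.dumps({
    "Records": [
        {"SMVendorID": "S1", "Name": 'Acme "East"',
         "TimeUpdated": "2020-09-01T10:00:00Z", "Active": False},
        {"SMVendorID": "S2", "Name": "Globex",
         "TimeUpdated": "2020-09-02T10:00:00Z", "Active": True},
    ]
}).encode("utf-8")

if __name__ == "__main__":
    print(inactive_suppliers_to_csv(SAMPLE))
```

Only supplier S1 ends up in the output, since S2 is still active.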

Step 4 - Convert CSV to Table

The Decode Table operator takes the CSV-formatted string output by the Python3 operator and converts it into a table. This table is what the SAP Analytics Cloud producer expects in order to create, replace, or append to a dataset in SAP Analytics Cloud.

Configuration:

Decode Table operator configuration
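Conceptually, this step turns one CSV string into column names plus rows. The sketch below is not how the Decode Table operator is implemented; it is just a small Python illustration of that conversion, with a hypothetical `decode_csv` helper and made-up sample data.

```python
import csv
import io


def decode_csv(text):
    """Split CSV text into (column names, list of row lists)."""
    reader = csv.reader(io.StringIO(text))
    columns = next(reader)  # first line holds the column names
    rows = [row for row in reader if row]  # skip empty lines
    return columns, rows


# Made-up sample in the same shape as the Python3 operator output
CSV_TEXT = (
    "SMVendorID,Name,TimeUpdated\n"
    '"S1","Acme, Inc.","2020-09-01T10:00:00Z"\n'
)

if __name__ == "__main__":
    columns, rows = decode_csv(CSV_TEXT)
    print(columns)
    print(rows)
```

The quoted `Name` value shows why the fields are wrapped in double quotes: a comma inside a supplier name would otherwise be read as a column separator.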

Step 5 - Configure the SAP Analytics Cloud components

I now configure the dataset details in the SAP Analytics Cloud formatter operator. Note that in the configuration below I set the Target Dataset field to New. After running the pipeline for the first time, we can set it to Replace so that every pipeline run replaces the dataset in SAP Analytics Cloud. To do so, I copied the dataset ID from the newly created dataset, set the Target Dataset option to Replace, and specified the dataset ID.

SAP Analytics Cloud formatter configuration

All that's missing now are the OAuth 2.0 details for the SAP Analytics Cloud producer, and then we are ready to run the pipeline.

SAP Analytics Cloud producer configuration

Now that all steps are completed, I run the SAP Data Intelligence pipeline and check the results in SAP Analytics Cloud.

SAP Analytics Cloud Ariba_Inactive_Suppliers dataset

As we can see, we have created a dataset containing only inactive suppliers in SAP Analytics Cloud. We can now use this dataset to create a report/dashboard in SAP Analytics Cloud.
