Data Intelligence integration with SAP Analytics C...


12-05-2019 5:14 PM

In this blog post I will describe how we can push datasets from SAP Data Intelligence to SAP Analytics Cloud (SAC).

In Data Intelligence, we have two pipeline operators, SAC Formatter and SAC Producer.

There is a great example graph Push to SAP Analytics Cloud, to help get you started.

To achieve this we used these capabilities

Previously I have shared How to Automate Web Data acquisition with SAP Data Intelligence, now we can extend that, by pushing that dataset to SAC.

The previous graph looks as below and runs in Data Intelligence.

Before we can push the data to SAC, we need to format the data into the new message.table format. For this we use the Decode Table operator. Set input format as CSV

Using the SAC Cloud Formatter, we specify how SAC should create the dataset.

In the exchange rate data download from the web, we do not have usable column headings, those need to be provided in the Output Schema.

Switching to SAP Analytics Cloud.

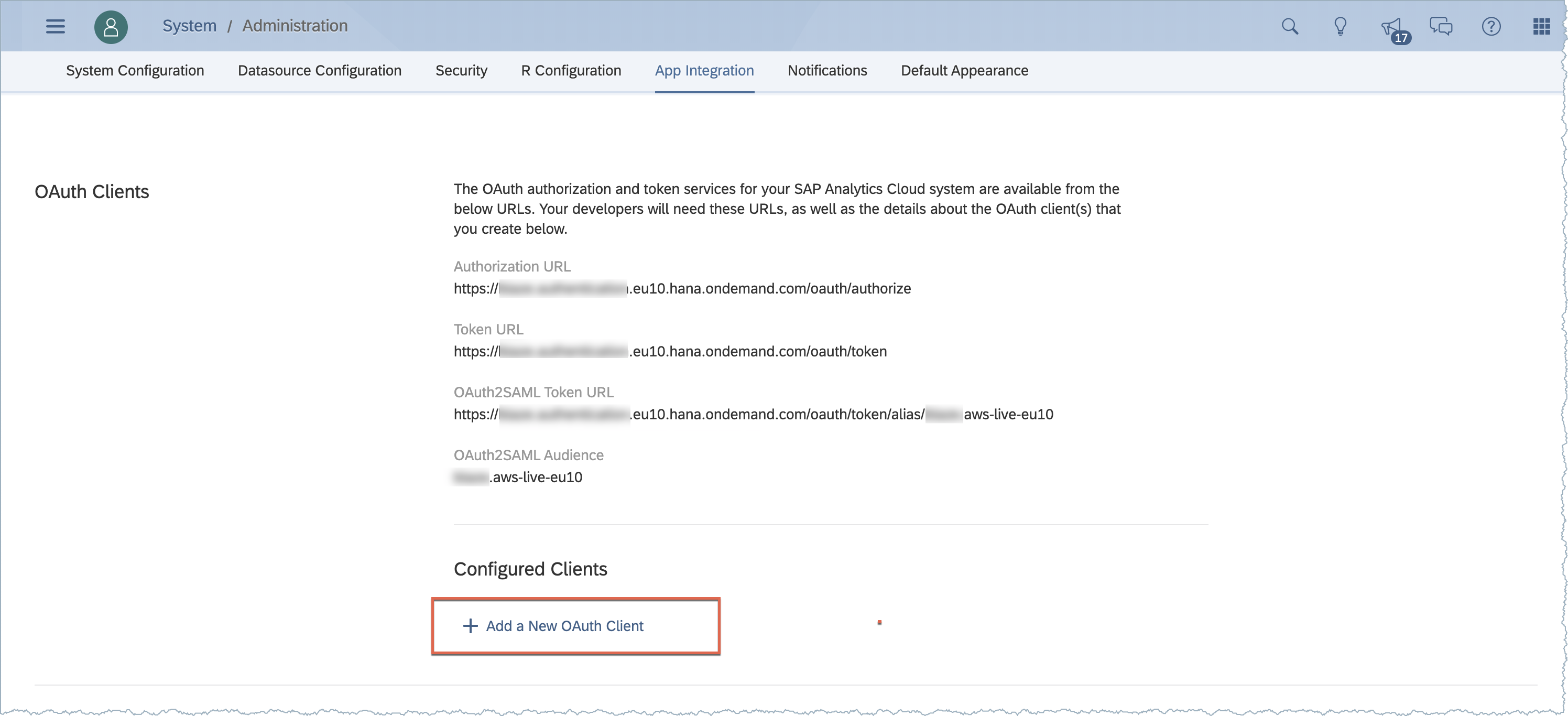

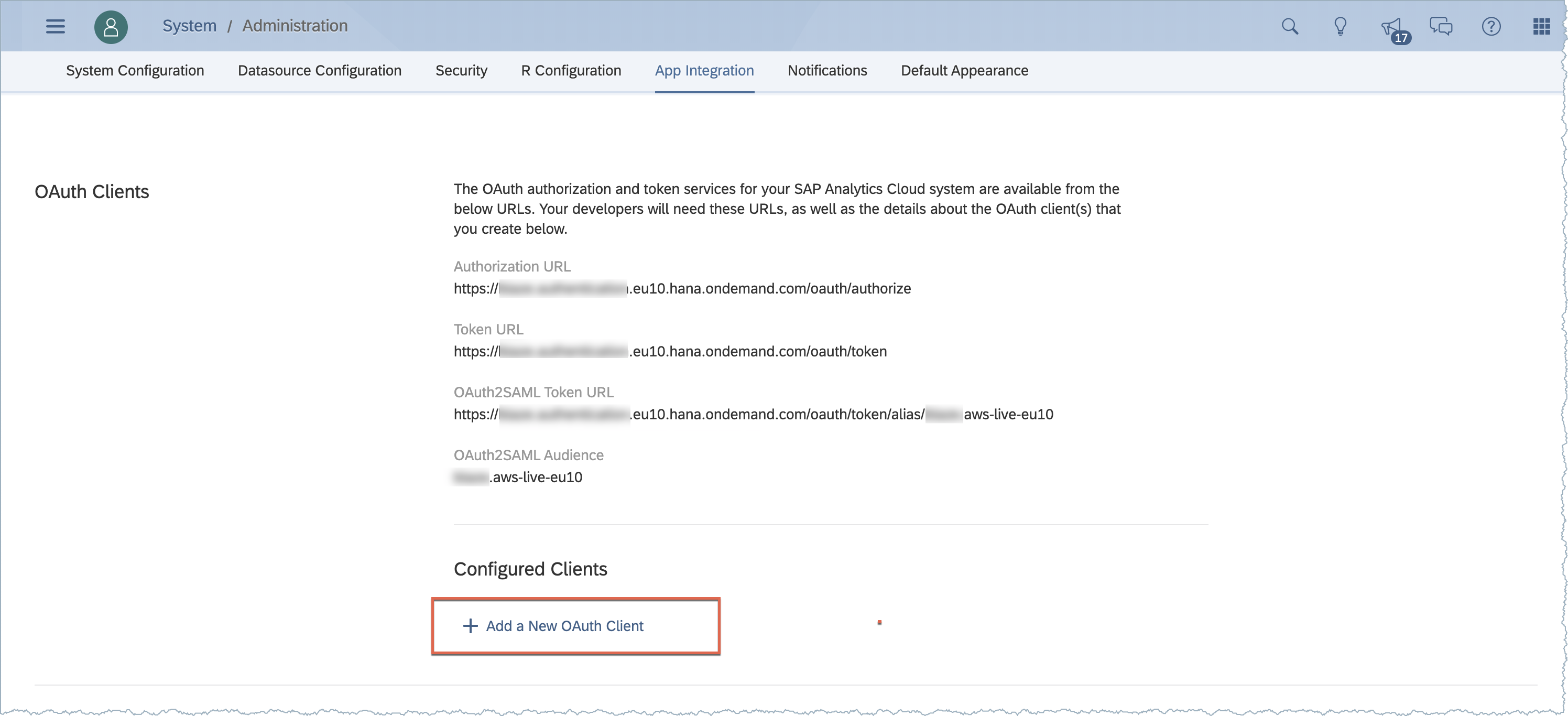

SAC has API access that we need to enable, we navigate to System Administration - App Integration.

Here we see the parameters used to authenticate.

We need to add a new client enable this access through by adding a new OAuth Client.

Complete the OAuth Client request as below, replacing the Data Intelligence host name

For CF SAC Tenants use this format for the redirect URI

For Neo Tenants use

Press the Add button, and it will generate a Client ID and Secret to use in our Data Intelligence operator.

If you have a Neo Tenant the New OAuth Client screen is slightly different, you are required to enter the OAuth Client ID and Secret that we will require in Data Intelligence.

Copy and paste your OAuth URLs from SAC and your OAuth Client ID and Secret information into the SAP Analytics Cloud Producer.

Our completed pipeline looks like this

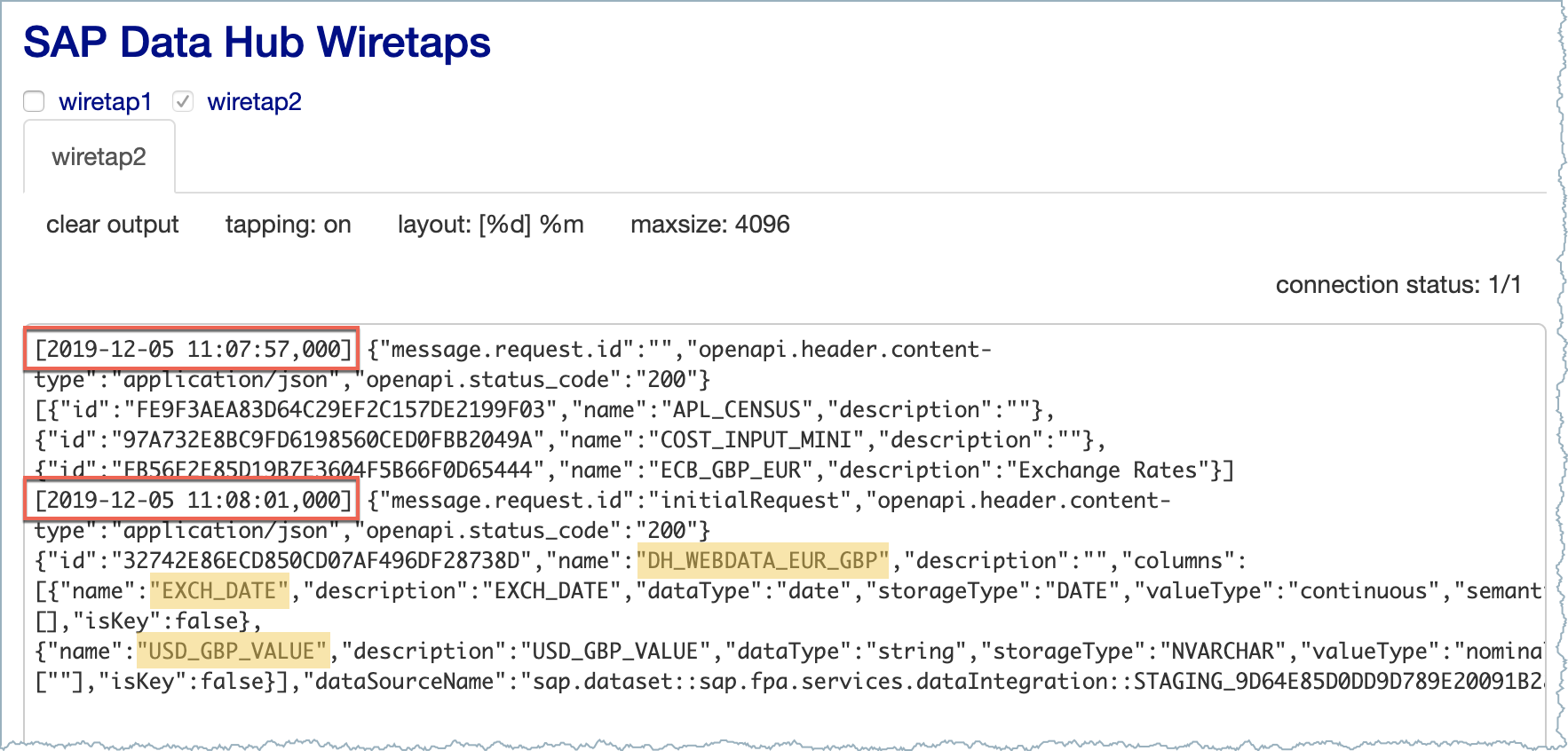

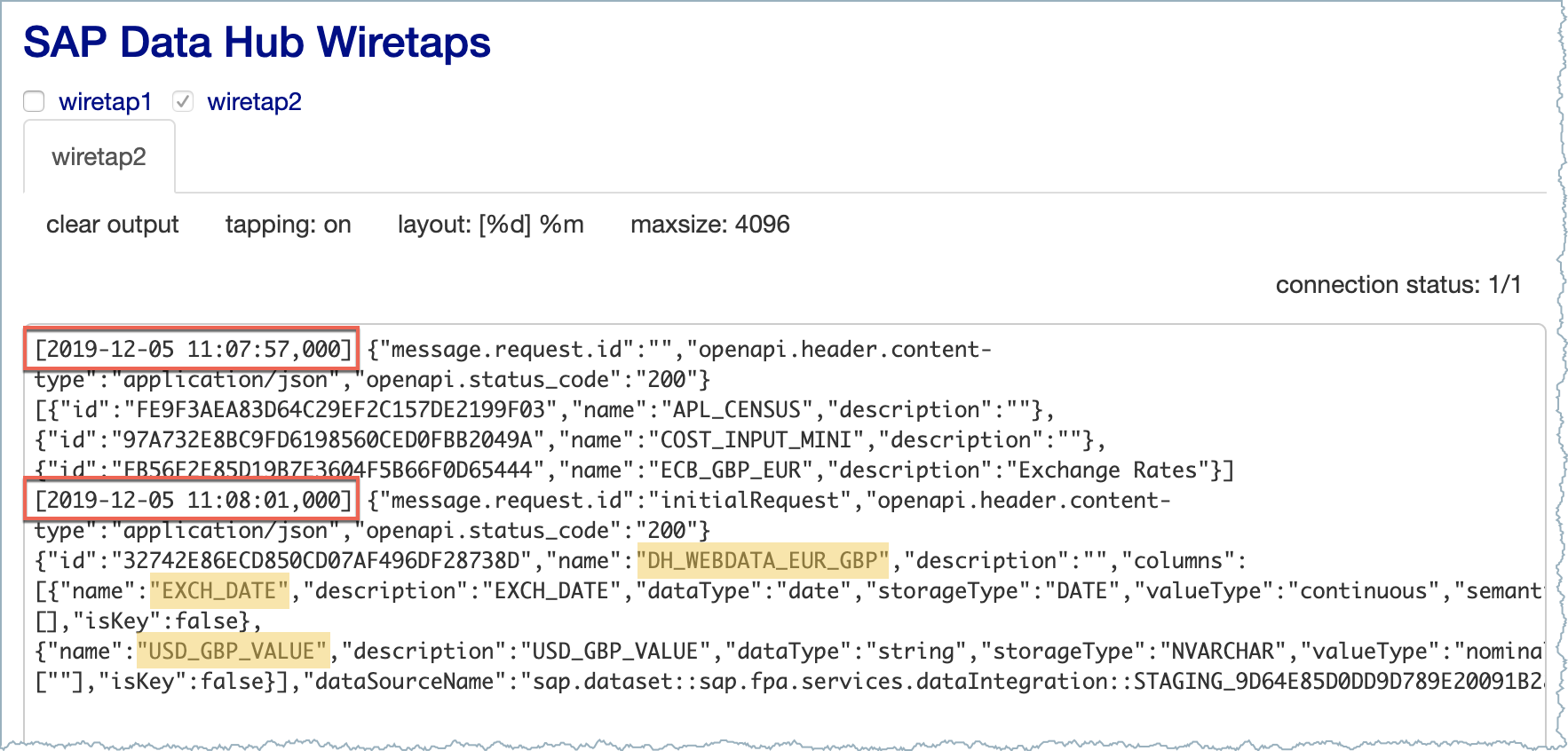

Run the pipeline and check the WireTap connected to the SAP Producer.

It shows an openapi.status_code 401 Unauthorized message.

To resolve the openapi.status_code 401 Unauthorized, we must authorize the API Token access. This is available through the Open UI option in the SAP Analytics Cloud Producer

Opening the UI, brings you here, click on the link, which grants authorization.

Granting permission shows you the Access Token

We should stop and re-run the pipeline, and check the Wiretap once more.

If you see other messages such as openapi.status_code 403 Forbidden then you will likely need to get the SAC dataset API enabled on your SAC tenant, this can be done by logging a support ticket or a Jira if you are SAP employee.

If we do have the dataset API enabled, the output should be similar to the Wiretap below showing we received 2 API responses.

At 11:07:57 we see the existing SAC datasets and their IDs.

At 11:08:01 we see the creation of our new dataset DH_WEBDATA_EUR_GBP

Switching to SAC we can verify the dataset has been created under "My Files"

Opening the dataset shows our data safely in SAC

From here we can quickly build some cool visualisation and even use that data with Smart Predict.

We can now seamlessly push data into SAC from SAP Data Intelligence Cloud or on-premises. This could be simple data movement or to integrate the output of machine learning models from Data Intelligence in SAP Analytics Cloud. I hope this blog post has helped you better understand another integration option.

In Data Intelligence, there are two pipeline operators for this: the SAC Formatter and the SAC Producer.

A good way to get started is the example graph Push to SAP Analytics Cloud.

To achieve this, we used the following capabilities:

- SAP Data Intelligence Modeler
  - Decode Table
  - SAP Analytics Cloud Formatter
  - SAP Analytics Cloud Producer
- SAP Analytics Cloud
  - App Integration - Data Set API
  - Dataset consumption
- SAC Dataset Limitations
  - 2 billion rows
  - 1,000 columns
  - 100 MB per HTTP request
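The limits above are worth checking before a large push. The sketch below is a hypothetical client-side pre-check in Python, not something the SAP Analytics Cloud Producer requires or exposes: it validates row and column counts and groups CSV lines into batches that stay under the per-request size limit. It assumes "100 MB" means 100,000,000 bytes (the more conservative reading) and measures size as UTF-8 bytes plus one newline per row; `check_shape` and `chunk_rows` are illustrative names.

```python
# Limits from the list above. Reading "100 MB" as 10**8 bytes is an assumption
# (the smaller, more conservative interpretation).
MAX_ROWS = 2_000_000_000
MAX_COLUMNS = 1000
MAX_REQUEST_BYTES = 100_000_000


def check_shape(n_rows: int, n_cols: int) -> None:
    """Raise ValueError if a dataset would exceed the documented SAC limits."""
    if n_rows > MAX_ROWS:
        raise ValueError(f"{n_rows} rows exceeds the {MAX_ROWS} row limit")
    if n_cols > MAX_COLUMNS:
        raise ValueError(f"{n_cols} columns exceeds the {MAX_COLUMNS} column limit")


def chunk_rows(rows: list[str], max_bytes: int = MAX_REQUEST_BYTES) -> list[list[str]]:
    """Group CSV lines into batches whose UTF-8 size (plus newlines) fits max_bytes."""
    batches: list[list[str]] = []
    current: list[str] = []
    size = 0
    for row in rows:
        n = len(row.encode("utf-8")) + 1  # +1 for the line terminator
        if n > max_bytes:
            raise ValueError("a single row is larger than the request limit")
        if current and size + n > max_bytes:
            batches.append(current)  # close the full batch and start a new one
            current, size = [], 0
        current.append(row)
        size += n
    if current:
        batches.append(current)
    return batches


check_shape(n_rows=1_000, n_cols=3)  # within limits, no exception
print(chunk_rows(["aaaa", "bbbb", "cccc"], max_bytes=10))  # [['aaaa', 'bbbb'], ['cccc']]
```

Batching greedily by byte size keeps each request as full as possible while never crossing the limit; whether the Producer already splits large payloads itself is not covered in this post.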

Previously I shared How to Automate Web Data Acquisition with SAP Data Intelligence; now we can extend that by pushing the resulting dataset to SAC.

The graph from that post runs in Data Intelligence and looks as below.

Before we can push the data to SAC, we need to convert it into the new message.table format. For this we use the Decode Table operator, with its input format set to CSV.
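Conceptually, Decode Table turns CSV text into rows of fields that later operators can treat as a table. The Python sketch below illustrates only that parsing step using the standard `csv` module; it does not reproduce the actual message.table structure, and `decode_csv` and the sample values are made up for illustration.

```python
import csv
import io


def decode_csv(text: str, delimiter: str = ",") -> list[list[str]]:
    """Parse CSV text into rows, each a list of string fields."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    # csv.reader yields an empty list for blank lines; drop those.
    return [row for row in reader if row]


# Invented sample input: a date and a rate per line, with no header row.
sample = "2019-01-01,0.85\n\n2019-01-02,0.86\n"
print(decode_csv(sample))  # [['2019-01-01', '0.85'], ['2019-01-02', '0.86']]
```

Using `csv.reader` rather than splitting on commas means quoted fields containing the delimiter are handled correctly.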

Using the SAP Analytics Cloud Formatter, we specify how SAC should create the dataset.

The exchange rate data downloaded from the web has no usable column headings, so we provide them in the Output Schema.
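Because the downloaded file has no header row, the column names and types have to come from the Output Schema. The sketch below mimics that idea in Python: a hypothetical schema of `(name, converter)` pairs is applied to each headerless row. The column names `RATE_DATE` and `EUR_GBP` are assumptions for illustration, not the names used in the original graph, and this is not how the SAP Analytics Cloud Formatter is implemented.

```python
from datetime import date
from decimal import Decimal

# Hypothetical output schema: (column name, function converting the raw string).
OUTPUT_SCHEMA = [
    ("RATE_DATE", date.fromisoformat),
    ("EUR_GBP", Decimal),
]


def apply_schema(rows, schema=OUTPUT_SCHEMA):
    """Name and type-convert headerless rows; return (column_names, records)."""
    names = [name for name, _ in schema]
    records = []
    for i, row in enumerate(rows):
        if len(row) != len(schema):
            raise ValueError(f"row {i} has {len(row)} fields, expected {len(schema)}")
        records.append({name: convert(value) for (name, convert), value in zip(schema, row)})
    return names, records


names, records = apply_schema([["2019-01-01", "0.85"]])
print(names)       # ['RATE_DATE', 'EUR_GBP']
print(records[0])  # {'RATE_DATE': datetime.date(2019, 1, 1), 'EUR_GBP': Decimal('0.85')}
```

`Decimal` is used for the rate so that values are not rounded through binary floating point.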

Switching to SAP Analytics Cloud.

SAC provides API access that we need to enable. To do so, navigate to System Administration - App Integration.

Here we see the OAuth parameters used for authentication.

To enable this access, we need to add a new OAuth Client.

Complete the OAuth Client request as shown below, replacing the host name with that of your Data Intelligence instance.

For SAC tenants on Cloud Foundry (CF), use this format for the redirect URI:

`https://<SAP_Data_Intelligence_Hostname>/**`

For Neo tenants, use:

`https://<SAP_Data_Intelligence_Hostname>/app/pipeline-modeler/service/v1/runtime/internal/redirect`
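Since the only variable part of the redirect URI is the host name, it can be generated from the two formats above. This small Python helper is a hypothetical convenience, not part of SAC or Data Intelligence; `di.example.com` stands in for your own Data Intelligence host.

```python
NEO_REDIRECT_PATH = "/app/pipeline-modeler/service/v1/runtime/internal/redirect"


def redirect_uri(di_host: str, tenant_type: str) -> str:
    """Return the OAuth redirect URI for a CF or Neo SAC tenant, per the formats above."""
    host = di_host.strip().rstrip("/")
    if host.startswith("https://"):
        host = host[len("https://"):]  # accept either a bare host or a full URL
    if tenant_type == "cf":
        return f"https://{host}/**"
    if tenant_type == "neo":
        return f"https://{host}{NEO_REDIRECT_PATH}"
    raise ValueError("tenant_type must be 'cf' or 'neo'")


print(redirect_uri("di.example.com", "neo"))
# https://di.example.com/app/pipeline-modeler/service/v1/runtime/internal/redirect
```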

SAC CF OAuth Client Information

Press the Add button to generate a Client ID and Secret for use in our Data Intelligence operator.

SAC CF OAuth Client Config

If you have a Neo tenant, the New OAuth Client screen is slightly different: you must enter the OAuth Client ID and Secret yourself, and these are the values we will need in Data Intelligence.

SAC Neo OAuth Client Configuration

Copy the OAuth URLs from SAC, together with your OAuth Client ID and Secret, into the configuration of the SAP Analytics Cloud Producer.

SAP Analytics Cloud Producer Node Config
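The Producer uses these values to obtain an access token. Assuming the standard OAuth 2.0 authorization code grant (RFC 6749), which matches the browser-based authorization step later in this post, the first step is a redirect to the SAC authorization URL carrying the client ID and redirect URI. The sketch below only builds that URL; the endpoint, client ID and `state` value are placeholders, and the Producer's internal behaviour may differ.

```python
from urllib.parse import urlencode


def authorization_url(authorize_endpoint: str, client_id: str,
                      redirect_uri: str, state: str) -> str:
    """Build an OAuth 2.0 authorization-code request URL (RFC 6749, section 4.1.1)."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "state": state,  # opaque value echoed back, used to detect forged redirects
    })
    return f"{authorize_endpoint}?{query}"


# Placeholder values, not real SAC or Data Intelligence endpoints.
print(authorization_url("https://sac.example.com/oauth/authorize",
                        "my-client", "https://di.example.com/cb", "xyz"))
# https://sac.example.com/oauth/authorize?response_type=code&client_id=my-client&redirect_uri=https%3A%2F%2Fdi.example.com%2Fcb&state=xyz
```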

Our completed pipeline looks like this

Run the pipeline and check the Wiretap connected to the SAP Analytics Cloud Producer.

The Wiretap shows an `openapi.status_code` 401 Unauthorized message.

To resolve the `openapi.status_code` 401 Unauthorized error, we must authorize API token access. This is available through the Open UI option of the SAP Analytics Cloud Producer.

Opening the UI brings you to the page below. Click the link to grant authorization.

Once permission is granted, the Access Token is displayed.
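Under the standard OAuth 2.0 authorization code grant (an assumption about what the Producer does internally), the access token results from exchanging the code returned to the redirect URI at the SAC token URL. The sketch below builds, but does not send, such a token request: the client credentials go in an HTTP Basic `Authorization` header and the code in a form-encoded body. `token_request` and all values are illustrative; the Producer performs this exchange itself.

```python
import base64
from urllib.parse import quote_plus, urlencode


def token_request(code: str, redirect_uri: str,
                  client_id: str, client_secret: str) -> tuple[dict, str]:
    """Return (headers, body) for an RFC 6749 section 4.1.3 token request."""
    # RFC 6749 section 2.3.1: form-encode the ID and secret before Basic encoding.
    credentials = f"{quote_plus(client_id)}:{quote_plus(client_secret)}"
    headers = {
        "Authorization": "Basic " + base64.b64encode(credentials.encode("utf-8")).decode("ascii"),
        "Content-Type": "application/x-www-form-urlencoded",
    }
    body = urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,  # must match the URI used in the authorization request
    })
    return headers, body


headers, body = token_request("abc", "https://di.example.com/cb", "id", "secret")
print(body)  # grant_type=authorization_code&code=abc&redirect_uri=https%3A%2F%2Fdi.example.com%2Fcb
```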

Now stop and re-run the pipeline, then check the Wiretap once more.

If you see other messages, such as `openapi.status_code` 403 Forbidden, you will likely need to have the SAC dataset API enabled on your SAC tenant. You can request this by logging a support ticket, or a Jira if you are an SAP employee.
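The troubleshooting steps in this post amount to a mapping from the HTTP status reported in the Wiretap to a next action. The Python function below is only a compact restatement of that advice; `diagnose` is a hypothetical helper and does not parse real Wiretap output.

```python
def diagnose(status_code: int) -> str:
    """Map the HTTP status seen in the Wiretap to the next step described in this post."""
    if 200 <= status_code < 300:
        return "ok: verify the new dataset under My Files in SAC"
    if status_code == 401:
        return "unauthorized: use Open UI on the SAP Analytics Cloud Producer, grant access, re-run"
    if status_code == 403:
        return "forbidden: the dataset API is likely not enabled on the tenant; log a support ticket"
    return f"unexpected status {status_code}: inspect the full Wiretap message"


for code in (401, 403, 201):
    print(code, diagnose(code))
```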

If the dataset API is enabled, the output should be similar to the Wiretap below, which shows that we received two API responses.

At 11:07:57 we see the existing SAC datasets and their IDs.

At 11:08:01 we see the creation of our new dataset, DH_WEBDATA_EUR_GBP.

Switching to SAC, we can verify that the dataset has been created under "My Files".

Opening the dataset shows that our data has arrived safely in SAC.

From here we can quickly build visualisations and even use the data with Smart Predict.

Conclusion

We can now push data into SAC from SAP Data Intelligence, whether it runs in the cloud or on-premises. This could be simple data movement, or a way to bring the output of machine learning models from Data Intelligence into SAP Analytics Cloud. I hope this blog post has helped you better understand another integration option.

SAP Managed Tags: SAP Analytics Cloud, SAP Data Intelligence
