Integrate SAP Data Intelligence and S/4HANA Cloud ...

04-22-2020 8:28 PM

In part one of this blog, you saw how to configure the connectivity between SAP Data Intelligence and SAP S/4HANA Cloud for Metadata Browsing and Pipeline Integration in SAP Data Intelligence.

Following on from that, in this blog you will see how to use the newly created connection as part of the pipeline integration in the Data Intelligence modeler. You will be able to create a pipeline that fetches CDS view data from S/4HANA Cloud and writes the data to a file in Cloud storage.

As some changes were applied in S/4HANA Cloud 2002, with regards to how Data Intelligence and S/4HANA Cloud integrate for CDS views, I'll provide a quick recap of the connection properties here.

In S/4HANA Cloud, use Comm Scenarios SAP_COM_0576 and SAP_COM_0532 for metadata access and pipeline integration respectively. You'll use the apps in the Communication Management group to create the required connectivity.

As detailed in Part one, start by creating a user for use in Data Intelligence. You use the Maintain Communication User app for this.

You then use the Display Communication Scenarios app to find the provided scenarios and create your own Communication Arrangements from them.

You will be prompted to create a Communication System and you provide the user you created previously. Note down the password and username as you'll use these in the Data Intelligence connection management. You can assign the same user and Communication System to both of your Communication Arrangements. This way the user you provide in Data Intelligence has both metadata and pipeline capabilities.

In S/4HANA Cloud the required whitelisting and authorisations are pre-defined with the activation of these Communication Arrangements. In an on-premise scenario, you perform these authorisations and whitelisting steps yourself.

Your Communication Arrangements should look similar to the following.

In Data Intelligence we'll use the configuration you've just created above, to create a WebSocket RFC connection. This connection will be used in the pipeline modeler and metadata explorer. The WebSocket RFC connection is secure, so requires you to upload the SSL certificate from S/4HANA Cloud into Data Intelligence Connection Management. From the S/4HANA Cloud Fiori Launchpad, export the certificate and save it as Base64-encoded ASCII, single certificate. Note 2849542 has more details about this.

In Data Intelligence Connection Management Create a connection of Type: ABAP, Protocol: WebSocket RFC in SAP Data Intelligence as follows.

You can now use this connection to browse CDS view data in metadata explorer and fetch CDS view data in a pipeline. Note: Data Preview in metadata explorer is still not enabled for S/4HANA Cloud.

To keep our experiment well structured and allow further additions at a later date, we'll create a scenario in the Machine Learning Scenario Manager.

Give the scenario a name, such as CDS_VIEW_S4HC. In the scenario, create a pipeline called CDS_READ_S4HC and use the Blank Template for the pipeline. In my scenario below, I created a second pipeline called CDS_READ_WIRETAP for troubleshooting and experiments.

When you create the pipeline, the modeler will open in a new tab. Select the Graphs tab, then select Scenario Templates in the Category Visibility dropdown.

Depending on your Data Intelligence version, you may have the pre-provided CDS View to File pipeline as well as some others. To use this pipeline, you'll need to select it, the pipeline then opens in a new tab in the modeler. You could then copy the JSON of this pipeline into the JSON of your blank pipeline and make some adjustments as detailed below.

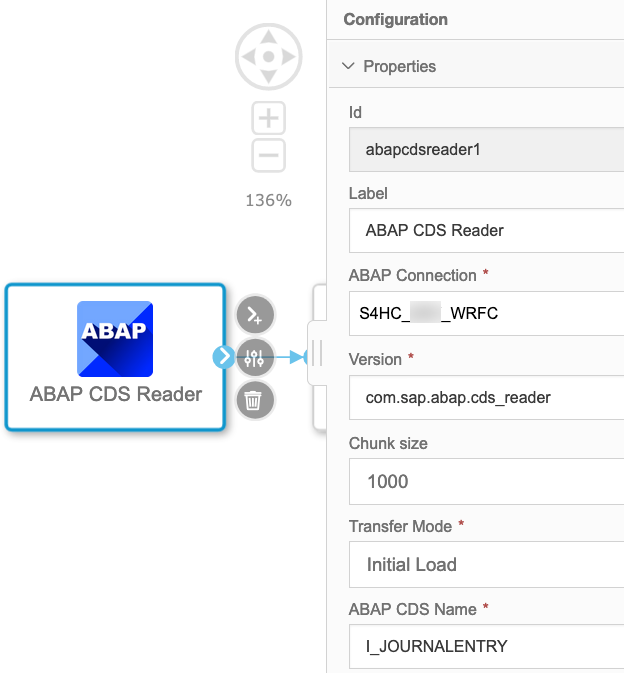

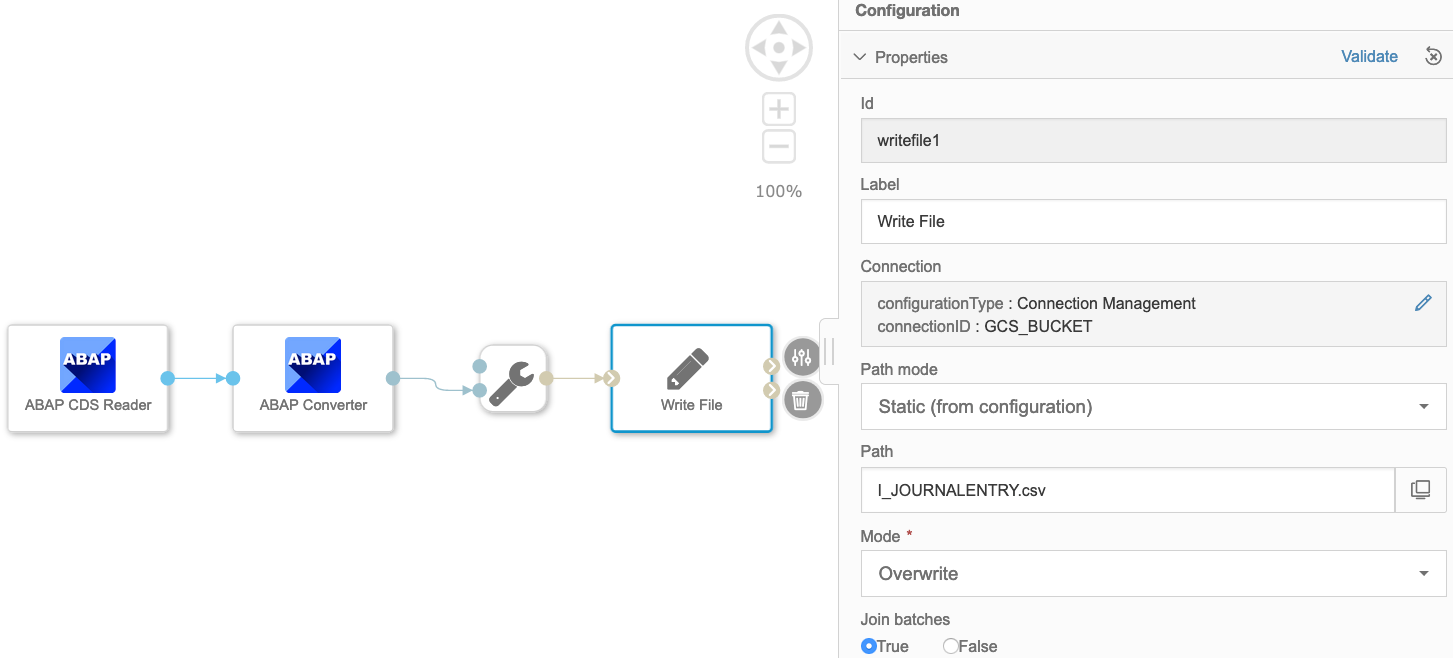

For simplicity I decided to create a simple pipeline using the available operators. The pipeline is as follows and consists of the ABAP CDS Reader operator, ABAP Converter operator and Write File operator.

In this scenario I'm writing the data to GCS, you could configure the Write File operator to write to the DI Data Lake or other storage of your choice.

Configure the ABAP CDS Reader operator using the following settings. Select the ABAP WRFC connection you created previously. Select the Chunk size, Transfer Mode and input the name of the CDS view you want to use. The name needs to be all caps and take note of additional eligibility criteria detailed later in this post. For now, use I_JOURNALENTRY as this CDS view meets the eligibility criteria for pipeline integration and data extraction.

Configure the ABAP Converter operator by selecting your S4HC connection and the required output file format.

Save the pipeline in the modeler and go back to the Scenario Manager, create a new version and then select the pipeline to be executed.

While the pipeline executes, you can monitor its status in the modeler.

After some time, in the metadata explorer, if you navigate to your Cloud storage or other target location that you specified, you'll see the CDS view file created.

View the factsheet and you see the data from your chosen CDS view.

With data from the CDS view now in Data Intelligence you could process this further in a separate pipeline or perform some ML tasks using a notebook in Data Intelligence. The opportunities are endless. I look forward to seeing what you create!

From my experiments, not all available CDS views are eligible for use in a pipeline integration scenario. Metadata access is restricted to published/released CDS views only. For pipeline integration, your CDS view should have the following annotations:

'@Analytics.dataExtraction.enabled: true' to be eligible for use with the ABAP CDS Reader operator

'@Analytics.dataExtraction.delta.changeDataCapture : automatic/mapping' to be eligible for data extraction.

For S/4HANA Cloud you can find out this information from the View Browser app.

Using the app you can search for available CDS views, view their annotations and more.

ranjitha.balaraman created a great, detailed blog on this process.

The official documentation about CDS views in S/4HANA Cloud is here.

This is an example of what the annotations look like:

The CDS_READ_WIRETAP pipeline I mentioned earlier consists of the ABAP CDS Reader operator, ABAP Converter operator and the Wiretap operator. I used this pipeline to determine if the scenario worked, if I had a correct CDS view etc. In the wiretap, you'll see data processing if the scenario and its settings are correct. The pipeline looked as follows:

In general, when i received an error like the following in the pipeline modeler, it was because the chosen CDS view did not meet the eligibility criteria.

Following on from that, in this blog you will see how to use the newly created connection as part of the pipeline integration in the Data Intelligence modeler. You will be able to create a pipeline that fetches CDS view data from S/4HANA Cloud and writes the data to a file in Cloud storage.

Recap on Functionality and Creating Connections

Because S/4HANA Cloud 2002 changed how Data Intelligence and S/4HANA Cloud integrate for CDS views, here is a quick recap of the connection properties.

In S/4HANA Cloud, use the communication scenarios SAP_COM_0576 for metadata access and SAP_COM_0532 for pipeline integration. You create the required connectivity with the apps in the Communication Management group.

As detailed in part one, start by creating a communication user for Data Intelligence with the Maintain Communication User app.

You then use the Display Communication Scenarios app to find the provided scenarios and create your own Communication Arrangements from them.

You are prompted to create a Communication System, to which you assign the user you created previously. Note down the username and password, as you'll need them in Data Intelligence Connection Management. You can assign the same user and Communication System to both Communication Arrangements, so that the user you enter in Data Intelligence has both metadata and pipeline capabilities.

In S/4HANA Cloud, the required whitelisting and authorisations are predefined and take effect when you activate these Communication Arrangements. In an on-premise scenario, you perform the authorisation and whitelisting steps yourself.
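The point about sharing one user across both arrangements can be pictured as a small data model. This is a hypothetical Python sketch, not an SAP API: the `CommunicationArrangement` class, the `capabilities_for` helper and the names `DI_SYSTEM` and `DI_USER` are invented for illustration, and the only facts it encodes are the two scenario purposes described above.

```python
from dataclasses import dataclass

# Purpose of each communication scenario, as described in this post.
SCENARIO_PURPOSE = {
    "SAP_COM_0576": "metadata access",
    "SAP_COM_0532": "pipeline integration",
}


@dataclass(frozen=True)
class CommunicationArrangement:
    """Hypothetical, simplified stand-in for a Communication Arrangement."""
    scenario: str              # communication scenario ID, e.g. "SAP_COM_0576"
    communication_system: str  # Communication System the arrangement points to
    user: str                  # communication user assigned to that system


def capabilities_for(user: str, arrangements: list[CommunicationArrangement]) -> set[str]:
    """Collect the purposes a user gains from every arrangement it is assigned to."""
    return {
        SCENARIO_PURPOSE[arrangement.scenario]
        for arrangement in arrangements
        if arrangement.user == user and arrangement.scenario in SCENARIO_PURPOSE
    }


# One user and one Communication System shared by both arrangements (placeholder names).
arrangements = [
    CommunicationArrangement("SAP_COM_0576", "DI_SYSTEM", "DI_USER"),
    CommunicationArrangement("SAP_COM_0532", "DI_SYSTEM", "DI_USER"),
]
print(sorted(capabilities_for("DI_USER", arrangements)))
# ['metadata access', 'pipeline integration']
```

If the user were assigned to only one of the arrangements, the result would contain only that arrangement's purpose.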

Your Communication Arrangements should look similar to the following.

SAP Data Intelligence WebSocket RFC Connection

In Data Intelligence, you use the configuration created above to define a WebSocket RFC connection, which is used by both the pipeline modeler and the metadata explorer. Because the WebSocket RFC connection is secure, you must upload the SSL certificate from S/4HANA Cloud into Data Intelligence Connection Management. From the S/4HANA Cloud Fiori Launchpad, export the certificate and save it as Base64-encoded ASCII, single certificate. SAP Note 2849542 has more details.
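Before uploading, you can sanity-check the exported file locally. The sketch below assumes that "Base64-encoded ASCII, single certificate" means a PEM file containing exactly one BEGIN/END CERTIFICATE block; the function name `load_single_pem_certificate` is hypothetical, and the check only confirms the file's shape, not the certificate's contents or trust chain.

```python
import base64
import binascii

BEGIN = "-----BEGIN CERTIFICATE-----"
END = "-----END CERTIFICATE-----"


def load_single_pem_certificate(text: str) -> bytes:
    """Check that `text` holds exactly one Base64 (PEM) certificate block
    and return its decoded DER bytes. Raises ValueError otherwise."""
    if text.count(BEGIN) != 1 or text.count(END) != 1:
        raise ValueError("expected exactly one certificate block")
    start = text.index(BEGIN) + len(BEGIN)
    end = text.index(END)
    if end < start:
        raise ValueError("END marker appears before BEGIN marker")
    body = "".join(text[start:end].split())  # drop line breaks and spaces
    try:
        der = base64.b64decode(body, validate=True)
    except binascii.Error as exc:
        raise ValueError("certificate body is not valid Base64") from exc
    # A DER-encoded certificate starts with an ASN.1 SEQUENCE tag (0x30).
    if not der.startswith(b"\x30"):
        raise ValueError("decoded bytes do not look like a DER certificate")
    return der
```

For example, call `load_single_pem_certificate(open("s4hc_cert.pem", encoding="ascii").read())`, where the file name is a placeholder for wherever you saved the export; a `ValueError` suggests the file was saved in a different format or contains a certificate chain.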

In Data Intelligence Connection Management, create a connection of type ABAP with protocol WebSocket RFC, as follows.


You can now use this connection to browse CDS views in the metadata explorer and to fetch CDS view data in a pipeline. Note: Data Preview in the metadata explorer is still not enabled for S/4HANA Cloud.

Consume CDS View Data

To keep our experiment well structured and allow further additions at a later date, we'll create a scenario in the Machine Learning Scenario Manager.

Give the scenario a name, such as CDS_VIEW_S4HC. In the scenario, create a pipeline called CDS_READ_S4HC and use the Blank Template for the pipeline. In my scenario below, I created a second pipeline called CDS_READ_WIRETAP for troubleshooting and experiments.

When you create the pipeline, the modeler will open in a new tab. Select the Graphs tab, then select Scenario Templates in the Category Visibility dropdown.

Depending on your Data Intelligence version, you may have the pre-provided CDS View to File pipeline among other templates. To use it, select it; the pipeline then opens in a new tab in the modeler. You can then copy the JSON of this pipeline into the JSON of your blank pipeline and make the adjustments described below.
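If you go the copy-and-adjust route, the adjustment can also be scripted. This is a minimal Python sketch under stated assumptions: it assumes the pipeline JSON keeps its operators under a top-level `processes` object, each with a `component` identifier and a `metadata.config` object holding its settings. The component IDs and the config key `cdsViewName` in the example are placeholders, so check the real names in your own pipeline JSON before relying on it.

```python
import json


def set_operator_config(graph_json: str, component: str, key: str, value) -> str:
    """Return the pipeline JSON with `key` set to `value` in every operator
    whose component ID equals `component`.

    Assumed layout (verify against your own pipeline JSON):
      {"processes": {"<name>": {"component": "...", "metadata": {"config": {...}}}}}
    """
    graph = json.loads(graph_json)
    for process in graph.get("processes", {}).values():
        if process.get("component") == component:
            config = process.setdefault("metadata", {}).setdefault("config", {})
            config[key] = value
    return json.dumps(graph, indent=4)


# Example with placeholder component IDs and config key (hypothetical).
template = json.dumps({
    "processes": {
        "cdsreader1": {"component": "example.cds.reader",
                       "metadata": {"config": {"cdsViewName": "SOME_VIEW"}}},
        "writefile1": {"component": "example.write.file",
                       "metadata": {"config": {}}},
    },
    "connections": [],
})
updated = set_operator_config(template, "example.cds.reader", "cdsViewName", "I_JOURNALENTRY")
```

Working on a parsed copy rather than editing the JSON text by hand keeps the rest of the graph, such as its connections, unchanged.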

For simplicity, I built a pipeline from the available operators. The pipeline, shown below, consists of the ABAP CDS Reader operator, the ABAP Converter operator and the Write File operator.

In this scenario I'm writing the data to Google Cloud Storage (GCS); you could instead configure the Write File operator to write to the DI Data Lake or any other storage of your choice.

Configure the ABAP CDS Reader operator with the following settings. Select the ABAP WebSocket RFC connection you created previously, choose the Chunk size and Transfer Mode, and enter the name of the CDS view you want to read. The name must be in upper case, and the view must meet the additional eligibility criteria described later in this post. For now, use I_JOURNALENTRY, as this CDS view meets the eligibility criteria for pipeline integration and data extraction.
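Because the view name must be in upper case, a small helper can normalize what you type before entering it in the operator. This is a hypothetical sketch: the function name is invented, and the accepted pattern (a letter followed by upper-case letters, digits and underscores, as in I_JOURNALENTRY) is a conservative assumption that rejects, for example, namespaced names containing slashes.

```python
import re

# Assumption: a conservative pattern for CDS view names such as I_JOURNALENTRY.
CDS_NAME_PATTERN = re.compile(r"[A-Z][A-Z0-9_]*")


def normalize_cds_view_name(name: str) -> str:
    """Trim and upper-case a CDS view name, then check it matches the pattern."""
    candidate = name.strip().upper()
    if not CDS_NAME_PATTERN.fullmatch(candidate):
        raise ValueError(f"unexpected CDS view name: {name!r}")
    return candidate
```

For example, `normalize_cds_view_name(" i_journalentry ")` returns `"I_JOURNALENTRY"`. Passing the name check says nothing about eligibility; the annotation criteria later in this post still apply.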

Configure the ABAP Converter operator by selecting your S4HC connection and the required output file format.

In the Write File operator, select the target for the output file, then set the path and Mode. In this case we used Overwrite; note that the pipeline keeps running and pushing data to the selected target.
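To make the roles of Chunk size and the Write File mode more concrete, here is a local Python simulation of the three operators. It illustrates the general idea only and is not how the Data Intelligence operators are implemented: the function names, the in-memory file store and the sample rows are all made up, and it assumes each incoming chunk is written to the same target path.

```python
import csv
import io
from typing import Iterable, Iterator


def read_in_chunks(rows: Iterable[dict], chunk_size: int) -> Iterator[list[dict]]:
    """Yield rows in lists of at most `chunk_size` (stand-in for the CDS reader)."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    chunk: list[dict] = []
    for row in rows:
        chunk.append(row)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:
        yield chunk  # last, possibly smaller, chunk


def chunk_to_csv(chunk: list[dict]) -> str:
    """Convert one non-empty chunk to CSV text (stand-in for the converter)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(chunk[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(chunk)
    return buffer.getvalue()


def write_file(store: dict[str, str], path: str, content: str, mode: str) -> None:
    """Write to an in-memory file store (stand-in for the file writer)."""
    if mode == "overwrite":
        store[path] = content  # replaces whatever the previous chunk wrote
    elif mode == "append":
        store[path] = store.get(path, "") + content
    else:
        raise ValueError(f"unsupported mode: {mode}")


rows = [{"CompanyCode": "1010", "Amount": str(i)} for i in range(5)]  # made-up sample data
store: dict[str, str] = {}
for chunk in read_in_chunks(rows, chunk_size=2):
    write_file(store, "/cds/I_JOURNALENTRY.csv", chunk_to_csv(chunk), mode="overwrite")
print(store["/cds/I_JOURNALENTRY.csv"])
# CompanyCode,Amount
# 1010,4
```

In this simulation, overwrite leaves only the last chunk. With `mode="append"` the file would hold all five rows, but this naive converter would also repeat the CSV header once per chunk, which is worth checking in your real output.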

Save the pipeline in the modeler, go back to the Scenario Manager, create a new version, and then select the pipeline to execute.

While the pipeline executes, you can monitor its status in the modeler.

After some time, navigate in the metadata explorer to your cloud storage or the other target location you specified, and you'll see the file created from the CDS view.

Open the factsheet to see the data from your chosen CDS view.

With data from the CDS view now in Data Intelligence you could process this further in a separate pipeline or perform some ML tasks using a notebook in Data Intelligence. The opportunities are endless. I look forward to seeing what you create!

A note on which CDS views are eligible

From my experiments, not all available CDS views are eligible for use in a pipeline integration scenario. Metadata access is restricted to published/released CDS views only. For pipeline integration, your CDS view should have the following annotations:

'@Analytics.dataExtraction.enabled: true' to be eligible for use with the ABAP CDS Reader operator

'@Analytics.dataExtraction.delta.changeDataCapture', with either automatic or mapping-based change data capture, to be eligible for data extraction.

For S/4HANA Cloud you can find out this information from the View Browser app.

Using the app you can search for available CDS views, view their annotations and more.
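Once you have looked up a view's annotations, for example in the View Browser app, the two checks above can be expressed as a short script. This Python sketch is an assumption-laden helper, not an SAP tool: it only understands simple one-line annotations written in dotted form (such as `@Analytics.dataExtraction.delta.changeDataCapture.automatic: true`), treats any `...changeDataCapture.mapping` annotation as mapping-based change data capture, and uses a made-up view `ZEXAMPLE` as input.

```python
import re

# One-line annotation such as "@Analytics.dataExtraction.enabled: true".
ANNOTATION_LINE = re.compile(r"^\s*@([\w.]+)\s*:\s*(.+?)\s*$")
CDC_PREFIX = "Analytics.dataExtraction.delta.changeDataCapture"


def parse_annotations(cds_source: str) -> dict[str, str]:
    """Collect simple dotted '@Name: value' annotations from CDS source text."""
    annotations = {}
    for line in cds_source.splitlines():
        match = ANNOTATION_LINE.match(line)
        if match:
            annotations[match.group(1)] = match.group(2)
    return annotations


def check_eligibility(annotations: dict[str, str]) -> dict[str, bool]:
    """Apply the two criteria from this post to parsed annotations."""
    reader_ok = annotations.get("Analytics.dataExtraction.enabled", "").lower() == "true"
    cdc_ok = (
        annotations.get(f"{CDC_PREFIX}.automatic", "").lower() == "true"
        or any(key.startswith(f"{CDC_PREFIX}.mapping") for key in annotations)
    )
    return {"abap_cds_reader": reader_ok, "data_extraction": cdc_ok}


# Made-up CDS source for illustration only.
SAMPLE_SOURCE = """
@AbapCatalog.sqlViewName: 'ZEXAMPLE'
@Analytics.dataExtraction.enabled: true
@Analytics.dataExtraction.delta.changeDataCapture.automatic: true
define view ZExample as select from zexample_table { key id, amount }
"""
print(check_eligibility(parse_annotations(SAMPLE_SOURCE)))
# {'abap_cds_reader': True, 'data_extraction': True}
```

Multi-line or structured annotation values would need a proper CDS parser; for a quick check, reading the annotations in the View Browser app remains the reliable route.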

ranjitha.balaraman created a great, detailed blog on this process.

The official documentation about CDS views in S/4HANA Cloud is here.

This is an example of what the annotations look like:

A note on troubleshooting

The CDS_READ_WIRETAP pipeline I mentioned earlier consists of the ABAP CDS Reader operator, the ABAP Converter operator and the Wiretap operator. I used this pipeline to check whether the scenario worked and whether I had chosen an eligible CDS view. If the scenario and its settings are correct, you'll see the data being processed in the Wiretap. The pipeline looked as follows:

In general, when I received an error like the following in the pipeline modeler, it was because the chosen CDS view did not meet the eligibility criteria.
