Relevant Systems

These enhancements were successfully implemented in the following systems:

- Customer Relationship Management ( CRM ) 7.02 SP 4

- SAP Business Objects Data Quality Management, version for SAP Solutions 4.0 SP2

This blog is relevant for:

- ABAP Developers who have been asked to include additional fields ( such as Date of Birth ) for duplicate matching with DQM

- ABAP Developers who have been asked to encapsulate the DQM functionality into a re-usable function / object.

This blog does not cover:

- Details of Postal Validation; we are only concerned with the Duplicate Check

Reference Material

User Guide:

http://help.sap.com/businessobject/product_guides/DQMgSAP/en/1213_dqsap_usersguide_en.pdf

Brian Kuzmanoski's Blog

Introduction

DQM is an add-on to your ERP / CRM system to provide address validation ( SAP calls it PV for Postal Validation ) and duplicate checking for Business Partners.

The technical details in this blog apply to a CRM 7.02 system, because that is where I successfully implemented the enhancements explained later. However, the enhancements should be relevant for any system covered by the DQM install guide.

In this blog entry I’ll only cover items within the CRM system. I’m trying to get my colleague Brian Kuzmanoski to write a separate blog that deals with the DQM side of things, to complete the solution overview.

Keep in mind I’m not going to explore every nook and cranny of the DQM add-on; this blog is just one answer to a couple of questions I’ve seen on SCN. This is the first time I’ve touched DQM, so I have probably gone through the same learning curve as you.

This is a great tool for address validation and formatting, plus Business Partner duplicate checks, in conjunction with an address directory. In my case that is the Australian address directory ( every address in Australia ), which is added to the DQM server.

If you install the add-on out of the box with CRM and perform the initial setup, you get address and duplicate checking via transaction BP and automatic integration into the Account BSP components.

The one catch I’ve found with DQM is that the duplicate checking is address focused, which leads nicely into our problem definition. But first, a brief look at how the duplicate check solution works.

SPRO Activities

When you’ve successfully installed DQM on your CRM system, you can find all your SPRO activities under:

SAP NetWeaver->Application Server->Basis Services->Address Management->SAP BusinessObjects Data Quality Management

SAP NetWeaver->Application Server->Basis Services->Address Management->Duplicate Check

Typical Scenario

You need to create a new customer account record in CRM. You go to your BP Account BSP or transaction BP in the backend CRM system and start entering the customer’s details. You then click Save, which triggers the duplicate check. The following process runs in CRM:

- A “Driver” record is constructed. This is the record you want to create in the SAP system ( only in memory, not in the DB yet ).

- A set of “Passenger” records is selected from the CRM database based on the Match Code.

- The “Driver” and “Passenger” records are passed to DQM, which runs the matching algorithms.

- A result set is determined with percentage weightings from the matching routines, taking into consideration your configured “Threshold” ( configured in SPRO ).

The Match Code – Table /FLDQ/AD_MTCCODE

As part of the setup of DQM you would have run three reports ( refer to the user guide ). One of these reports went through all your Business Partners, generated a Match Code for each and stored it in table /FLDQ/AD_MTCCODE.

The Match Code is just an index that reduces the number of potential records against which DQM needs to run its matching routines.

When a Driver record is created, DQM knows how to generate its Match Code. When the “Candidate Selection” occurs ( selecting all the records with the same Match Code in CRM ) you are taking a slice of possible data rather than comparing every Business Partner in your database. This is obviously for efficiency; however, there are some pitfalls, which we’ll cover later.
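To make the idea of candidate selection concrete, here is a minimal sketch. It does not use the real /FLDQ/AD_MTCCODE table; the structure ty_index_entry, the sample Match Code values and the report name are hypothetical stand-ins, and the real Match Code format is determined by DQM.

```abap
REPORT zdqm_candidate_demo.

* Hypothetical, simplified stand-in for the Match Code index table.
TYPES: BEGIN OF ty_index_entry,
         partner    TYPE c LENGTH 10,
         match_code TYPE c LENGTH 20,
       END OF ty_index_entry.

DATA: gt_index       TYPE STANDARD TABLE OF ty_index_entry WITH DEFAULT KEY,
      gt_candidates  TYPE STANDARD TABLE OF ty_index_entry WITH DEFAULT KEY,
      gs_entry       TYPE ty_index_entry,
      gv_driver_code TYPE c LENGTH 20 VALUE 'MC-0001',
      gv_count       TYPE i.

* Three stored Business Partners; two share the Driver's Match Code.
gs_entry-partner    = '0000000001'.
gs_entry-match_code = 'MC-0001'.
APPEND gs_entry TO gt_index.

gs_entry-partner    = '0000000002'.
gs_entry-match_code = 'MC-0002'.
APPEND gs_entry TO gt_index.

gs_entry-partner    = '0000000003'.
gs_entry-match_code = 'MC-0001'.
APPEND gs_entry TO gt_index.

* Candidate selection: only the slice with the same Match Code
* becomes Passenger records; everything else is never compared.
LOOP AT gt_index INTO gs_entry WHERE match_code = gv_driver_code.
  APPEND gs_entry TO gt_candidates.
ENDLOOP.

gv_count = lines( gt_candidates ).
WRITE: / 'Candidates selected:', gv_count.
```

The sketch also hints at the pitfall: a Business Partner whose Match Code differs from the Driver’s is never sent to DQM, no matter how similar its other details are.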

The Results

If there are any possible matches then you may see several screens: one for the Address Validation and one listing any possible duplicate records.

Presto, you’ve stopped a potential duplicate record entering your CRM system or you’ve validated and formatted the new customer’s address.

Notice that the duplicate results screen shows a Similarity %. This is what we’re interested in, and it raises some questions:

- How can DQM put more weight on Date of Birth and Gender than on the address details?

- How do I pass the additional fields across to DQM?

- Do I need to go through a stack of enhancements and potentially break the standard SAP solution?

- What if I have my own services to create customers in CRM, can I encapsulate the Duplicate Check functionality and re-use it in my own code?

Problem Definition

You’ve installed DQM and taken advantage of the standard functionality provided, but you realise that you have requirements to match on more than address data, such as Date of Birth, Middle Name or Gender.

You also want to create your own matching algorithms on the DQM side, based on these additional fields rather than on the address, and you want to give the additional fields higher percentage weightings than the address fields.

Remember what we talked about before: DQM is address focused, so it doesn’t support all the Business Partner fields in the Driver and Passenger records when they are passed to DQM.

In a typical scenario you probably want to match Date of Birth, Middle Name or Gender in combination with address details. Out of the box you can’t use these three fields.

You may also have custom services that create your customers, such as a web service, and therefore want to plug in the DQM Duplicate Check outside of the BAdI framework.

For this blog we’ll focus on passing across the following additional fields to DQM:

- Date of Birth

- Middle Name

- Gender

- Country of Birth

Technical Implementation

We took a path of least resistance approach to these enhancements. What we didn’t want to do was to completely re-write the duplicate checking framework just to accommodate a few additional fields from the Business Partner.

Yes, our solution can be considered a hack. What it doesn’t do is force you down a path where you completely own the solution ( insert your favourite statement about TCO here ), with enhancement after enhancement all through standard SAP code, which is a nightmare to maintain in the long run.

First let’s define some requirements:

- I want to use Date of Birth, Middle Name, Gender, Country of Birth in my driver and passenger records so DQM can match on these fields as well

- I want a class/function that encapsulates the call to DQM and I can re-use it elsewhere in my SAP System.

- I want to provide different % weightings to the other fields.

Encapsulating BAdI ADDRESS_SEARCH

This is the BAdI that is called to perform the duplicate check or address search. If you dive into the implementation you will find:

The method ADDRESS_SEARCH is where all the magic happens: SAP makes an RFC call into DQM, which runs the matching algorithms and returns some results.

Create a Class

We created a class with an attribute go_fldq_duplicate_check, which is instantiated as an object of class CL_EX_ADDRESS_SEARCH in the constructor.

We added a method called FIND_DUPLICATES where the Driver record is constructed. To keep it simple we added two structures as importing parameters for capturing the customer details:

- IS_BUILHEADER – BOL Entity Structure for BuilHeader

- IS_ADDRESS - BOL Entity Structure for BuilAddress

When constructing the Driver record, all you are doing is adding lines to lt_search_params such as:

CLEAR ls_search_param.

ls_search_param-tablename = 'ADRC'.

ls_search_param-fieldname = 'CITY1'.

ls_search_param-content = is_address-city.

APPEND ls_search_param TO lt_search_params.

Passing Your Own Attributes – Hijack Other Fields

This is where we need to pass in our other fields Date of Birth, Middle Name, Gender and Country of Birth. We do this by hijacking a couple of available fields from the supported field list. NB: We also pass in all other address information as well just to adhere to the standard functionality.

You add these field values as search parameters:

CLEAR: ls_search_param.

ls_search_param-tablename = 'ADRC'.

ls_search_param-fieldname = 'NAME1'.

CONCATENATE is_builheader-birthdate is_builheader-middlename INTO ls_search_param-content SEPARATED BY space.

CONDENSE ls_search_param-content NO-GAPS.

APPEND ls_search_param TO lt_search_params.

CLEAR: ls_search_param.

ls_search_param-tablename = 'ADRC'.

ls_search_param-fieldname = 'NAME2'.

CONCATENATE is_builheader-*** is_builheader-countryorigin INTO ls_search_param-content SEPARATED BY space.

CONDENSE ls_search_param-content NO-GAPS.

APPEND ls_search_param TO lt_search_params.

Pitfalls Here

Yes, hijacking the NAME1 and NAME2 fields is not optimal. However, it gives you an opportunity to pass in additional fields so you can unpack them on the DQM side.

There are obviously some limitations here, i.e. the field lengths. And if you have a lot of additional fields to pass in, how do you separate them logically so that you know how to unpack them in DQM correctly every time?
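One way to reduce the unpacking risk is to join the values with a fixed delimiter instead of condensing them together, and to check the result against the target field length before using it. The following is only a sketch of that idea, not what we implemented: the delimiter '|', the variable names and the report name are assumptions, and the DQM side would need to split on the same character. The 40 character length matches ADRC-NAME1.

```abap
REPORT zdqm_pack_demo.

* Hypothetical separator; it must never occur in the packed values.
CONSTANTS gc_delimiter TYPE c LENGTH 1 VALUE '|'.

DATA: gv_birthdate  TYPE d VALUE '19800115',
      gv_middlename TYPE c LENGTH 40 VALUE 'MARY ANN',
      gv_packed     TYPE string,
      gv_name1      TYPE c LENGTH 40,   " same length as ADRC-NAME1
      gv_birth_out  TYPE c LENGTH 8,
      gv_middle_out TYPE c LENGTH 40.

* Pack: a fixed delimiter keeps the field boundaries recoverable,
* and spaces inside a value ( e.g. a two-part middle name ) survive.
CONCATENATE gv_birthdate gv_middlename
       INTO gv_packed SEPARATED BY gc_delimiter.

* Guard: anything beyond the field length would be silently truncated.
IF strlen( gv_packed ) > 40.
  WRITE: / 'Packed value is too long for NAME1.'.
ELSE.
  gv_name1 = gv_packed.

* Unpack: the receiving side splits on the same delimiter.
  SPLIT gv_name1 AT gc_delimiter INTO gv_birth_out gv_middle_out.
  WRITE: / gv_birth_out, gv_middle_out.
ENDIF.
```

Note that CONDENSE ... NO-GAPS, as used in the snippets above, removes spaces inside the values as well, so a delimiter-based approach behaves differently for names containing spaces. Whichever convention you choose, apply it identically to the Driver and the Passenger records.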

Supported Field List - /FLDQ/AD_MTCCODE

This structure is used to construct the Passenger records sent to DQM. Start here, and cross-check it against the corresponding structure on the DQM side.

Call to DQM

Once the Driver record is constructed, i.e. your lt_search_params are populated, the call is constructed like this:

Sample code is provided below; we’ll just hard code a few items for demo purposes.

If IF_EX_ADDRESS_SEARCH~ADDRESS_SEARCH is executed successfully you will hopefully have some results in the exporting parameter EX_T_SEARCH_RESULTS, which contains the % weightings for your potential duplicates.

From here you can build your own return structure with these and other customer details.
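As a rough illustration of building your own return structure, the sketch below filters and sorts a result list by similarity. The type ty_result, its field names, the sample values and the threshold of 80 are all hypothetical; the real line type of EX_T_SEARCH_RESULTS should be taken from the BAdI interface in your system.

```abap
REPORT zdqm_result_demo.

* Hypothetical return structure; not the real result line type.
TYPES: BEGIN OF ty_result,
         partner    TYPE c LENGTH 10,
         percentage TYPE i,
       END OF ty_result.

DATA: gt_results   TYPE STANDARD TABLE OF ty_result WITH DEFAULT KEY,
      gs_result    TYPE ty_result,
      gv_threshold TYPE i VALUE 80.   " assumed threshold for the demo

* Sample data standing in for the results returned by DQM.
gs_result-partner    = '0000000001'.
gs_result-percentage = 95.
APPEND gs_result TO gt_results.

gs_result-partner    = '0000000002'.
gs_result-percentage = 60.
APPEND gs_result TO gt_results.

gs_result-partner    = '0000000003'.
gs_result-percentage = 85.
APPEND gs_result TO gt_results.

* Keep only results at or above the threshold, best match first.
DELETE gt_results WHERE percentage < gv_threshold.
SORT gt_results BY percentage DESCENDING.

LOOP AT gt_results INTO gs_result.
  WRITE: / gs_result-partner, gs_result-percentage.
ENDLOOP.
```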

So there you have it, you should have successfully encapsulated the DQM functionality in a class where you can now re-use it in other parts of your CRM system if you need to.

Pitfalls Here

When you encapsulate this functionality, you are constructing the Driver record manually which ensures your custom fields are passed in correctly.

In the typical scenario where you are creating a Business Partner via transaction BP or through the CRM Web UI, the Driver record is constructed using only available address fields plus First Name and Last Name.

You will need to make a small enhancement ( discussed next ) to ensure that the scenarios where you are using SAP GUI or CRM Web UI also pass the desired fields to the DQM matching algorithms, so that you get consistent results across your business processes.

Driver and Passenger Records Enhancement

Before the physical RFC call is made, a selection of candidate records is chosen based on the Match Code.

Your Passenger records will not have all the custom fields you want sent across to DQM to perform the matching algorithms.

What we need to do here is create a small implicit enhancement where we can populate the other details of the candidate records with the same hijacking idea as we saw above.

Program /FLDQ/LAD_DUPL_CHECK_SEARCHF01 ( Transaction SE38 ) FORM GET_CANDIDATES

This is where the candidate selection occurs. The selections are stored in it_cand_matches. This has a table structure /FLDQ/AD_MTCCODE.

If you look at the table structure, you will see the available fields that make up the Passenger records. As you can see, there are mainly address fields in here, including NAME1 and NAME2.

This is where we need to make a small enhancement in order to populate Date of Birth, Gender, Middle Name and Country of Birth, as we did above when you made your class. Only this time we’re dealing with actual Business Partners in the database, so we need to read their records and populate the candidate selection records.

Make an implicit enhancement at the end of FORM GET_CANDIDATES. You can use the following code as a guide:

The ZCL_DQM_PARTNER class is a simple class in order to read BUT000 and BUT020 without having to do direct SELECT statements.

When the code in the enhancement is executed, you are populating Date of Birth, Gender, Middle Name into the passenger records so on arrival to DQM your matching algorithms can use them.

By performing this enhancement you are doing two things

- All your additional fields are populated in the candidate selection so DQM can actually use those fields in the matching routines when comparing to the Driver record

- When a standard scenario is executed, such as creating a Business Partner in SAP GUI or CRM Web UI, these details are correctly passed to DQM for matching.

You are obviously placing a little more load on the system here by retrieving more details about the Business Partner. Try and be as efficient as possible in this code to reduce any performance issues.

In reality, your Match Code should have reduced the number of candidates so any impact here should be minimal.
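One way to keep the extra load small is to read the additional details for all candidates in a single database access rather than one read per candidate. The sketch below shows that idea with a direct join on BUT020 and BUT000; it is not the ZCL_DQM_PARTNER class mentioned above. It assumes the candidate records carry an address number that can be collected into lt_addr_keys, which you should verify against /FLDQ/AD_MTCCODE in your system. The type and variable names are illustrative.

```abap
REPORT zdqm_passenger_read_demo.

TYPES: BEGIN OF ty_addr_key,
         addrnumber TYPE but020-addrnumber,
       END OF ty_addr_key,
       BEGIN OF ty_bp_extra,
         addrnumber TYPE but020-addrnumber,
         partner    TYPE but000-partner,
         birthdt    TYPE but000-birthdt,
         namemiddle TYPE but000-namemiddle,
         xsexf      TYPE but000-xsexf,
         xsexm      TYPE but000-xsexm,
         xsexu      TYPE but000-xsexu,
         cndsc      TYPE but000-cndsc,
       END OF ty_bp_extra.

DATA: lt_addr_keys TYPE STANDARD TABLE OF ty_addr_key WITH DEFAULT KEY,
      lt_bp_extra  TYPE STANDARD TABLE OF ty_bp_extra WITH DEFAULT KEY.

* Assumption: lt_addr_keys has been filled from the candidate records.

* FOR ALL ENTRIES with an empty driver table would select every row,
* so the guard is essential.
IF lt_addr_keys IS NOT INITIAL.
  SELECT a~addrnumber b~partner b~birthdt b~namemiddle
         b~xsexf b~xsexm b~xsexu b~cndsc
    FROM but020 AS a
    INNER JOIN but000 AS b ON b~partner = a~partner
    INTO TABLE lt_bp_extra
    FOR ALL ENTRIES IN lt_addr_keys
    WHERE a~addrnumber = lt_addr_keys-addrnumber.

* Sorted so each candidate can be looked up with BINARY SEARCH.
  SORT lt_bp_extra BY addrnumber.
ENDIF.
```

Each candidate can then be enriched from lt_bp_extra with READ TABLE ... BINARY SEARCH, packing the values into NAME1 and NAME2 in exactly the same way as for the Driver record.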

Populating the Driver Record when creating a Business Partner via transaction BP or via CRM Web UI

As mentioned a few times earlier, when you create a Business Partner via SAP GUI ( transaction BP ) or CRM Web UI, you don’t have control over the Driver record as you did in your class with method FIND_DUPLICATES.

There is a relatively simple solution for this that doesn’t involve any more enhancements to standard SAP code.

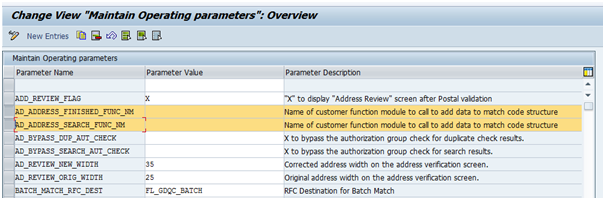

The DQM add-on in CRM allows you to implement your own code to determine the Driver record details and plug your code in via some settings in SPRO.

Under activity “Maintain Operating Parameters” you have two places where you can maintain your own function modules for some scenarios. You can refer to the User Guide for these.

The one we’re interested in is AD_ADDRESS_SEARCH_FUNC_NM.

Follow the user guide and implement your own Function Module as per those guidelines.

You place the name of your Function Module in the Parameter Value field above.

What your function module is going to do is essentially the same as what you did before in FIND_DUPLICATES except this time you need to pull the Business Partner context out of memory and populate the Driver record with any Business Partner details ( such as Date of Birth ) that have been entered by the user.

Here is a sample implementation; feel free to make improvements:

FUNCTION zdqm_match_code_duplicate_chk.

*"----------------------------------------------------------------------

*"*"Local Interface:

*" TABLES

*" IM_DRIVER STRUCTURE /FLDQ/AD_MTCCODE

*"----------------------------------------------------------------------

*LOCAL TABLES

DATA: lt_but000 TYPE TABLE OF bus000___i,

*LOCAL STRUCTURES

wa_match_codes TYPE /fldq/ad_mtccode,

ls_but000 LIKE LINE OF lt_but000,

*LOCAL VARIABLES

lv_gender TYPE char1,

lv_records_in_mem TYPE i.

LOOP AT im_driver INTO wa_match_codes.

CALL FUNCTION 'BUP_BUPA_MEMORY_GET_ALL'

EXPORTING

i_xwa = 'X'

TABLES

t_but000 = lt_but000.

DELETE lt_but000 WHERE partner IS INITIAL AND type IS INITIAL.

DESCRIBE TABLE lt_but000 LINES lv_records_in_mem.

CHECK lv_records_in_mem > 0.

IF lv_records_in_mem = 1.

READ TABLE lt_but000 INTO ls_but000 INDEX 1.

ELSE.

READ TABLE lt_but000 INTO ls_but000 WITH KEY name_first = wa_match_codes-name_first

name_last = wa_match_codes-name_last.

ENDIF.

CONCATENATE ls_but000-birthdt ls_but000-namemiddle INTO wa_match_codes-name1.

CONDENSE wa_match_codes-name1 NO-GAPS.

IF ls_but000-xsexf = abap_true.

lv_gender = '1'.

ELSEIF ls_but000-xsexu = abap_true.

lv_gender = '0'.

ELSEIF ls_but000-xsexm = abap_true.

lv_gender = '2'.

ENDIF.

CONCATENATE lv_gender ls_but000-cndsc INTO wa_match_codes-name2.

CONDENSE wa_match_codes-name2 NO-GAPS.

* READ TABLE above overwrites sy-tabix, so rely on the implicit loop index.

MODIFY im_driver FROM wa_match_codes.

CLEAR: ls_but000, lt_but000, lv_gender.

ENDLOOP.

ENDFUNCTION.

By implementing this Function Module you can now ensure that your additional fields are passed to DQM in the Driver record when a Business Partner is created via SAP GUI or CRM Web UI.

Check Point – Is it working so far?

If you have:

- Installed DQM correctly

- Performed all necessary post install configuration and setup

- Successfully performed the “Hand Shake” procedure with DQM

- Setup your matching routines in DQM

- Built your own class in CRM which can be tested in isolation

- Created your enhancement point for Passenger records ( candidate selection )

- Created a Function Module to implement the Driver record population during standard Business Partner create scenarios

You should be able to get some results back from testing your class. Just ensure you’ve actually set up some test data that makes sense in order to get results back from DQM.

Some Gotchas and Other Thoughts

With anything you work on for the first time, there are almost always downstream impacts that you didn’t consider.

Here are a couple:

BAPI_BUPA_CREATE_FROM_DATA

If you use this BAPI anywhere and you have installed DQM, then you will probably start to get failures if you’re trying to create a Business Partner that is a possible duplicate or the address is invalid if you have Postal Validation switched on. This is a problem if you have any interfaces that consume this function module.

Testing

Apart from your normal unit testing you will need to go through some regression tests because you’ve created an implicit enhancement point that affects some standard Business Partner creation scenarios.

Ensure the following behaves as expected:

- Create a business partner in SAP GUI via Transaction BP

- Create a Business Partner in CRM Web UI

- Ensure any interfaces that consume BAPI_BUPA_CREATE_FROM_DATA are working correctly now that DQM is switched on

Switching off PV and Duplicate Check

There are a few options for you to switch off the Postal Validation and Duplicate check if you need to. Although this doesn’t really tie in to the theme of this blog, we discovered some limitations here.

- You can activate / de-activate the Postal Validation and Duplicate check in a SPRO activity. This completely turns off these checks. If you do this, you will need to re-run the match-code generation when you turn it back on in case new Business Partners were created whilst DQM was switched off.

- You can assign a program or transaction code ( see screen shot below ). Here you can suppress several functions: Validation ( PV ), Search ( Duplicate Check ) and Suggestions ( postal address suggestions ).

The technical implementation of this check happens in Function Module /FLDQ/AD_CHECK_TCODE_VALIDITY.

This is where it gets interesting. The code behind this looks for the program name in SY-CPROG at runtime. Just beware that when this executes in a non-dialog process such as an RFC call or Web Service call, the value in SY-CPROG will be the overarching framework program. In the case of RFC calls this is program SAPMSSY1. What this tells me is that the “Maintain Exception Table of Transaction Codes” activity is only meant for dialog processes ( e.g. creating a Business Partner via transaction BP ).
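The effect can be illustrated with a small sketch. It does not reproduce the logic of /FLDQ/AD_CHECK_TCODE_VALIDITY; it only shows why a comparison against SY-CPROG misses an exception entry when the code runs under the RFC framework. The program name ZMY_BP_INTERFACE and the report name are hypothetical.

```abap
REPORT zdqm_cprog_demo.

CONSTANTS gc_rfc_framework TYPE sy-cprog VALUE 'SAPMSSY1'.

* Hypothetical program name that was entered in the exception table.
DATA gv_exception_entry TYPE sy-cprog VALUE 'ZMY_BP_INTERFACE'.

IF sy-cprog = gv_exception_entry.
* Dialog case: the calling program is the one that was configured.
  WRITE: / 'Exception entry matched.'.
ELSEIF sy-cprog = gc_rfc_framework.
* RFC case: the framework program is seen instead of your own
* program, so the configured entry is never matched.
  WRITE: / 'Running under the RFC framework program.'.
ELSE.
  WRITE: / 'Calling program:', sy-cprog.
ENDIF.
```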

Summary and Final Thoughts

The DQM add-on is a really good piece of kit. We had the scenarios in this blog up and running in a couple of days, including the DQM side, which hopefully my colleague Brian Kuzmanoski will blog about very soon.

There are obviously a couple of limitations that we discovered, but remember the product is address focused. We’ve demonstrated that you can include additional fields for matching, and although our solution is not entirely optimal, we’ve avoided major enhancements, which was the goal.

Just keep in mind that if you’re trying to switch off DQM via the “Exception” list, there is a limitation here for non-dialog processes.

Finally, DQM should be implemented in conjunction with an overall master data governance strategy. It is an enabling tool for that strategy, but by no means will it solve all your master data problems.

There are further things to explore here, such as how to connect DQM to an MDG system or even MDM, where you would effectively be cleansing your master data before it’s even created in an SAP system such as CRM.

Hope you enjoyed this blog. Please look out for Brian Kuzmanoski’s blog that covers the matching algorithms on the DQM side.
