This blog is a continuation of "Stumbling blocks in purchasing info record migration by LSMW IDOC method".

The project is outlined in the other blog, so I can start directly with the difficulties I had migrating the price conditions.

When you migrate info records with the IDoc method, the price conditions are not included. They have to be loaded in a separate step.

So again the question of the method: batch input vs. IDoc method.

In my last blog I explained that the IDoc method had the advantage that I can work with preassigned numbers and create a mapping directly from the conversion step in LSMW. This advantage does not apply to the conditions of an info record, because there is no additional mapping that has to be handed over to the business. The mapping already exists from the info record migration.

Still, I decided to go with the IDoc method, as it allows me to reload the data again and again if I do something wrong. With the batch input method I would need two LSMW objects in the worst case: one for the initial creation and one for changes if something goes wrong. Trust me, even I make mistakes ;-) , possibly already with the decision to use the IDoc method here. You can leave a comment.

Now I knew how I was going to load the data, but I still had to solve the question of how to get the data out of the legacy system. If you search SCN for the keywords "how send COND_A", you get 70 hits but rarely an answer. I found just one correct answer in relation to info record conditions and will spread it here again: it is possible from transaction MEK3 via the condition info. However, at the time of the migration I did not know about that. And I still think it would not have been suitable for me, as MEK3 expects the condition type before you can continue to the condition info, and I had 28 different condition types in the source system. Furthermore, I cannot even go into the condition info if no access is defined for the condition type.

In the meantime I got the idea to send the conditions together with the info record using serialization. I will try this in my next info record migration.

But in this migration I decided to download the conditions using SE16 and a simple SQVI QuickView.

The goal was to migrate only valid conditions, no historic conditions.

I started with SE16 and downloaded all valid conditions from tables A017 and A018, excluding the deleted conditions and those with a validity end date before today. (We did not load conditions at material group level, hence no download from table A025.)

As you can see, the condition record number is listed at the end.

The next step was building the QuickView.

It is just a join of tables KONH and KONP, since we decided to maintain the few scales (table KONM) manually.

The chosen fields for our migration were:

| List fields | Selection fields |
|---|---|
| KONH-KNUMH, KONH-KOTABNR, KONH-KAPPL, KONH-KSCHL, KONH-VAKEY, KONH-DATAB, KONH-DATBI, KONP-KOPOS, KONP-KAPPL, KONP-KSCHL, KONP-STFKZ, KONP-KZBZG, KONP-KSTBM, KONP-KONMS, KONP-KSTBW, KONP-KONWS, KONP-KRECH, KONP-KBETR, KONP-KONWA, KONP-KPEIN, KONP-KMEIN, KONP-KUMZA, KONP-KUMNE, KONP-MEINS | KONH-KNUMH, KONH-KOTABNR, KONH-KAPPL, KONP-LOEVM_KO, KONH-VAKEY |

As the number of records exceeded the maximum number of entries allowed in the multiple selection, I used only the table number as a selection criterion and excluded the records with a deletion indicator. Nothing else.
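For readers who want to check the QuickView result outside SAP, the same logic can be sketched as an inner join of KONH and KONP on the condition record number, skipping items whose deletion indicator is set. This is a minimal Python sketch, assuming both tables have been downloaded into lists of dicts keyed by field name and that a set deletion indicator is the value `"X"`; it is not how SQVI works internally.

```python
from typing import Dict, List

Row = Dict[str, str]


def join_konh_konp(konh: List[Row], konp: List[Row]) -> List[Row]:
    """Inner join KONH and KONP on KNUMH, dropping items flagged for deletion."""
    headers = {h["KNUMH"]: h for h in konh}  # KNUMH is the key of KONH
    result: List[Row] = []
    for item in konp:
        if item.get("LOEVM_KO") == "X":  # assumption: "X" marks a deleted item
            continue
        header = headers.get(item["KNUMH"])
        if header is None:  # item without header is not part of an inner join
            continue
        # Prefix the field names like the QuickView list (KONH-..., KONP-...)
        row = {f"KONH-{k}": v for k, v in header.items()}
        row.update({f"KONP-{k}": v for k, v in item.items()})
        result.append(row)
    return result
```

Building the header dictionary once keeps the join linear in the number of items, which matters at 100,000+ records.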

116,000 condition records produced a 44 MB file, too big to output the QuickView result directly to Excel. So it had to be downloaded as text and then imported into Excel.

Here you have to be careful: if you have about 60,000 lines or more, you can find 2 empty lines at positions 59994 and 59995.

If you continue with sorting and filtering, you will not get all records, hence you need to remove those empty lines.

As a next step the fields have to be formatted. All value fields have to be formatted as numbers with 2 decimals and without a thousands separator.
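The two clean-up steps above (dropping the stray empty lines and normalising the value columns) can also be done before the file ever reaches Excel. A minimal Python sketch, assuming a tab-separated export, value columns identified by position, and German-style numbers such as `1.234,50`; the trailing minus handling (`3,00-`) assumes SAP's usual list display of negative values.

```python
from decimal import Decimal
from typing import Iterable, List


def clean_export(lines: Iterable[str], value_cols: List[int],
                 decimal_sep: str = ",") -> List[List[str]]:
    """Drop empty lines and normalise value columns to '1234.56' style."""
    group_sep = "." if decimal_sep == "," else ","
    rows: List[List[str]] = []
    for line in lines:
        if not line.strip():  # e.g. the stray empty lines near line 59994
            continue
        fields = line.rstrip("\n").split("\t")
        for col in value_cols:
            raw = fields[col].strip().replace(group_sep, "").replace(decimal_sep, ".")
            # Assumption: negative values are exported with a trailing minus
            if raw.endswith("-"):
                raw = "-" + raw[:-1]
            fields[col] = f"{Decimal(raw):.2f}"  # 2 decimals, no grouping
        rows.append(fields)
    return rows
```

Using `Decimal` instead of `float` avoids rounding surprises when the values are later divided by 100 or 1000.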

After this I entered a VLOOKUP formula to identify the condition records that match a condition record number from the A017 table download. Only those were kept for the next processing step.

If you wonder why this is needed, have a closer look at the validity end date in table KONH. It is almost always 31.12.9999. When you enter a new price in the info record, you usually care only about the valid-from date, and the condition is valid until a new condition is entered. But entering a new condition does not change the valid-to date of the old condition. SAP selects the valid condition based only on the valid-from date.
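To make the rule described above concrete: when every KONH record runs until 31.12.9999, the record that applies on a given date is the one with the latest valid-from date on or before that date. The Python sketch below models only that rule, as stated in this blog, for the conditions of a single key (vendor, material, and so on); it is a simplification and not SAP's condition access logic.

```python
from datetime import date
from typing import List, Optional, Tuple


def valid_condition(conditions: List[Tuple[date, str]], key_date: date) -> Optional[str]:
    """Return the KNUMH with the latest valid-from date on or before key_date."""
    candidates = [(datab, knumh) for datab, knumh in conditions if datab <= key_date]
    if not candidates:
        return None  # no condition valid yet on key_date
    return max(candidates, key=lambda c: c[0])[1]
```

This is also why filtering KONH by DATBI alone does not find the current conditions: all records pass that filter.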

And here I was lucky that I only had to load the currently valid conditions. I tried it a few times with all conditions, but this caused even bigger chaos, because I was not able to process the IDocs in the required sequence: the oldest condition first, the newest condition last. Even though I had the correct sequence in the CONV file in LSMW, the order got mixed up when the IDocs were generated and processed.

As mentioned, I kept only the latest conditions identified with VLOOKUP in the file. Be aware that a VLOOKUP over that many records can take hours, depending on your PC and its number of processors. With 24 processors it was a job of a little more than 5 minutes.
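The VLOOKUP in this step is effectively a semi-join: keep every KONH/KONP row whose condition record number also appears in the A-table download. If Excel is too slow, a hash set does the same in a single pass. A minimal Python sketch, assuming both downloads have been read into dicts with a `KNUMH` field:

```python
from typing import Dict, Iterable, List

Row = Dict[str, str]


def keep_current(condition_rows: Iterable[Row], a_table_rows: Iterable[Row]) -> List[Row]:
    """Keep only condition rows whose KNUMH appears in the A017/A018 download."""
    current = {r["KNUMH"] for r in a_table_rows}  # built once, O(1) membership test
    return [r for r in condition_rows if r["KNUMH"] in current]
```

Unlike a VLOOKUP, which scans the lookup range for every row, this runs in time proportional to the total number of rows.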

Still, I had to do a bit more cosmetic work to get the file into a loadable shape.

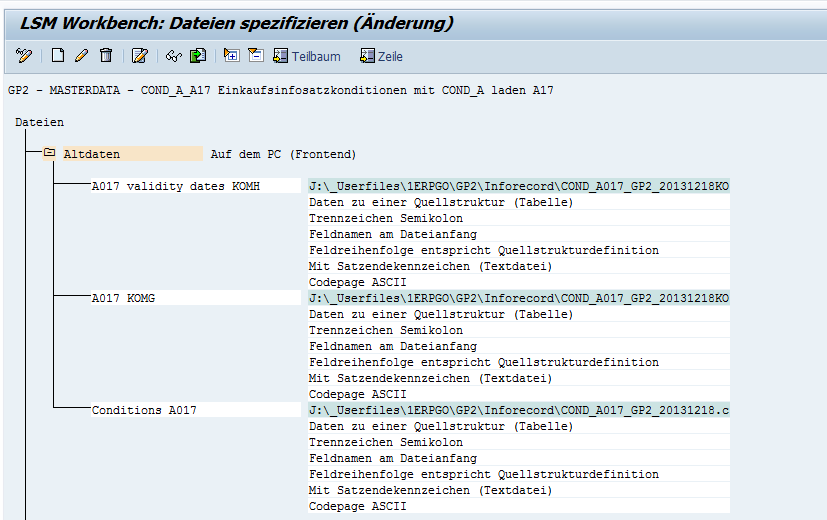

In this picture from LSMW you can see the IDoc structure: a header, the validity period and the conditions.

The source file has to be prepared to deliver data for each of these segments in the structure.

The starting point is my download file, which now contains only the valid records. It will be used for the detail, the KONP segment of the structure.

For the header segment I just copy columns A to E into a new Excel tab.

For the validity period segment I copy columns A to G into a new Excel tab.

From both new tabs I remove the duplicate records.
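The tab preparation above can be sketched in a few lines: the header and validity structures are just de-duplicated prefixes of the detail rows. A minimal Python sketch, assuming each row is a tuple in the column order of the download (so columns A to E are the first 5 fields and A to G the first 7); the function name is hypothetical.

```python
from typing import List, Sequence, Tuple

Row = Tuple[str, ...]


def split_for_lsmw(detail: Sequence[Row]) -> Tuple[List[Row], List[Row], List[Row]]:
    """Derive header (columns A-E) and validity (columns A-G) rows from the detail file."""

    def unique_prefix(width: int) -> List[Row]:
        seen = set()
        out: List[Row] = []
        for row in detail:
            key = tuple(row[:width])
            if key not in seen:  # like Excel's "Remove Duplicates": first occurrence wins
                seen.add(key)
                out.append(key)
        return out

    return unique_prefix(5), unique_prefix(7), list(detail)
```

Keeping the first occurrence preserves the original sort order, which keeps the three files easy to compare side by side.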

Now I save each tab as a CSV file and assign the CSV files in LSMW to my source structures.

When you execute the step READ DATA, SAP joins those 3 files based on the common fields at the beginning of each structure.
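Conceptually, READ DATA nests the records of the three files by their shared leading fields. The Python sketch below imitates that grouping so a file set can be checked before loading, for example for items without a matching header. It assumes header rows share their first 5 fields and validity rows their first 7 fields with the detail rows; it does not reproduce LSMW's actual implementation.

```python
from typing import Dict, List, Sequence, Tuple

Row = Tuple[str, ...]


def nest(headers: Sequence[Row], validity: Sequence[Row], items: Sequence[Row],
         h_len: int = 5, v_len: int = 7) -> Dict[Row, Dict[Row, List[Row]]]:
    """Group items under their validity row and validity rows under their header."""
    tree: Dict[Row, Dict[Row, List[Row]]] = {h[:h_len]: {} for h in headers}
    for v in validity:
        tree[v[:h_len]][v[:v_len]] = []  # KeyError: validity row without header
    for it in items:
        tree[it[:h_len]][it[:v_len]].append(it)  # KeyError: orphan item
    return tree
```

Letting the lookup fail with a `KeyError` is deliberate: an orphan record in the source files is exactly what you want to find before the IDocs are generated.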

The rest is just the standard process of executing LSMW.

Important in the field mapping is the VAKEY field. SAP uses a variable key (VAKEY) to access the conditions. This is a concatenated field that starts with the vendor number, followed by the material number, the purchasing organisation, the plant (only for A017 conditions) and the info record type. As usual in a migration, you have to replace the old values with the new ones so that SAP can find those conditions again.

This means you have to create a data declaration to split this VAKEY field into its parts, like this:

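```abap
* Layout of the variable key VAKEY for A017 conditions
* (for A018 conditions the plant is not part of the key)
DATA: BEGIN OF zzvarkeya,
        zlifnr  LIKE lfm1-lifnr,  " vendor
        zmatnr  LIKE marc-matnr,  " material
        zekorg  LIKE lfm1-ekorg,  " purchasing organisation
        zwerks  LIKE marc-werks,  " plant (A017 only)
        zinftyp LIKE eine-esokz,  " info record type
      END OF zzvarkeya.
```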

You may want to do this for both the source field and the target field.

And then you need a little ABAP coding to replace the individual values. I keep this old-to-new mapping in a Z-table with 3 fields: the identifier (e.g. EKORG for purchasing organisation), the old value and the new value. I use a SELECT statement to retrieve the value from this Z-table.
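The split-and-replace logic can be illustrated outside ABAP as well. A minimal Python sketch, assuming the ECC field lengths LIFNR 10, MATNR 18, EKORG 4, WERKS 4 and ESOKZ 1 (MATNR is longer in S/4HANA, which would change the layout), and a dictionary keyed by (identifier, old value) standing in for the Z-table; the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Layout = List[Tuple[str, int]]

# Assumed ECC field lengths; MATNR has 40 characters in S/4HANA
A017_LAYOUT: Layout = [("LIFNR", 10), ("MATNR", 18), ("EKORG", 4), ("WERKS", 4), ("ESOKZ", 1)]
A018_LAYOUT: Layout = [f for f in A017_LAYOUT if f[0] != "WERKS"]  # no plant in A018


def split_vakey(vakey: str, layout: Layout) -> Dict[str, str]:
    """Cut the fixed-width VAKEY into its parts."""
    parts: Dict[str, str] = {}
    pos = 0
    for name, length in layout:
        parts[name] = vakey[pos:pos + length]
        pos += length
    return parts


def remap_vakey(vakey: str, layout: Layout, mapping: Dict[Tuple[str, str], str]) -> str:
    """Replace each part via the (identifier, old value) -> new value mapping."""
    parts = split_vakey(vakey, layout)
    new_key = ""
    for name, length in layout:
        old = parts[name].rstrip()
        new = mapping.get((name, old), old)  # keep the value if there is no mapping entry
        new_key += new.ljust(length)  # pad back to the fixed width
    return new_key
```

Padding each part back to its fixed width matters: a value that is too short would shift every following field of the key.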

The value field KBETR can also cause some headaches, especially if you have conditions in currencies without decimals like JPY, or where the value is a percentage. In both cases SAP stores the values in the table in a strange way.

The value field is defined in the data dictionary with 2 decimals. An amount in a currency like JPY does not get decimals when it is stored; it is moved as is into this value field. So a price of 123 JPY is stored as 1.23 JPY.

A percentage may have 3 decimals, but stored in a field with 2 decimals it looks odd too.

And last but not least, you may have negative condition values in the source file because of discount conditions.

All these cases have to be taken care of in the conversion routine, which in my case looks like this:

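```abap
* Because of the CSV file format the source value is
* 100 times the normal condition value
g_amount = zkonp-kbetr / 100.

* Percentages: divide the source value by 1000
IF zkonp-konwa = '%'.
  g_amount = zkonp-kbetr / 1000.
ENDIF.

* Zero-decimal currency: take the source value as is
IF zkonp-konwa = 'JPY'.
  g_amount = zkonp-kbetr.
ENDIF.

* Discount conditions are negative in the source,
* but the IDoc can only carry positive values
IF zkonp-kschl1 = 'RA00'
  OR zkonp-kschl1 = 'RA01'
  OR zkonp-kschl1 = 'RB01'
  OR zkonp-kschl1 = 'RC00'.
  g_amount = g_amount * -1.
ENDIF.

* 2 decimals without thousands separator (independent of the
* user's decimal notation), then a comma decimal becomes a dot
WRITE g_amount TO e1konp-kbetr DECIMALS 2 NO-GROUPING.
REPLACE ALL OCCURRENCES OF ',' IN e1konp-kbetr WITH '.'.
```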

Because of the CSV file format, I have to divide the value by 100 to get the normal condition value.

In case of a percentage, the source value has to be divided by 1000.

For zero-decimal currencies (JPY in my case), I can take the value from the source as is.

If the condition value is negative, I need to multiply it by -1 to get a positive value, as I can only carry positive values in this IDoc.

The last lines take care of dots and commas as decimal separators. In Germany we use the comma as the decimal separator, while in the US the dot is used. And as I often get Excel files from all over the world for migrations, I always have to pay attention to the decimal separators.
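The same conversion rules can be written as a small test-friendly function, which makes it easy to check a few sample values before running LSMW. A minimal Python sketch, assuming the raw source value is the number read from the CSV (100 times the condition value, 1000 times for percentages, unscaled for JPY as described above) and reusing the discount condition types from the routine; the function and constant names are hypothetical.

```python
from decimal import Decimal

# Discount condition types as listed in the conversion routine above
DISCOUNT_TYPES = {"RA00", "RA01", "RB01", "RC00"}
# Assumption: JPY is the only zero-decimal currency in scope, as in the routine
ZERO_DECIMAL_CURRENCIES = {"JPY"}


def convert_kbetr(raw: int, konwa: str, kschl: str) -> str:
    """Turn the raw source value into the KBETR string for the IDoc."""
    if konwa == "%":
        amount = Decimal(raw) / 1000
    elif konwa in ZERO_DECIMAL_CURRENCIES:
        amount = Decimal(raw)
    else:
        amount = Decimal(raw) / 100
    if kschl in DISCOUNT_TYPES:
        amount = -amount  # the IDoc carries only positive values
    # 2 decimals, dot as decimal separator, no thousands grouping
    return f"{amount:.2f}"
```

For example, `convert_kbetr(-3000, "%", "RA01")` gives `"3.00"`, i.e. a 3 % discount.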

And finally I want to share an issue that came up during our tests, because I had not yet completed the condition migration.

The info records themselves were loaded as explained in the previous blog, including the information about the price and effective price, even when they were zero.

You can see that both fields are protected, as they are when conditions exist. However, the conditions had not been loaded at that time.

When the user clicked the condition button, nothing happened. An inconsistent situation: the info record itself has the information that conditions exist while they are not there, hence the condition button does not work.

The only workaround is to change a value in another field and save the info record. Then open it again; now you can click the condition button as usual. (Don't forget to reset the previously changed field afterwards.)
