Migration to BPC on HANA considerations and how to handle large amounts of transaction data during Migration

With growing customer demand for HANA, SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA (BPC on HANA) has gained momentum and is being implemented by a number of customers. In this blog we share some of our experiences from BPC on HANA implementations: the issues we faced, the migration approach we followed, best practices for migrating BADIs, and how we loaded about 125 million transaction data records from the backend BW system.

1: Technical and Functional considerations while migrating to SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA

A customer planning to move to BPC on HANA needs to meet the system requirements below. Please also refer to the Product Availability Matrix for more details.

- SAP NetWeaver ABAP 7.3 SP06 (or above) Unicode Application Server. SAP NetWeaver 7.31 is not currently supported by BPC 10 on HANA but support is planned for the near future.

- Database: SAP HANA SPS3 Platform Edition or above

- Operating System: Any that is supported by SAP NetWeaver 7.3

Note: SAP HANA typically runs as an appliance on certified hardware. For step-by-step instructions on how to migrate from BPC 7.5 to SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA, please refer to the HTG published by Rob Marshall: https://scn.sap.com/docs/DOC-33863 . Also refer to the Technical Considerations for Migrating to BPC 10 on HANA HTG published by Bruno Ranchy: https://scn.sap.com/docs/DOC-34745n .

Migration to SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA:

- The first step when migrating to SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA is to back up your BPC 7.X Appset. You can back up the metadata and master data first and the transaction data afterwards, or back up everything at once if the transaction data volume is not a concern.

- Copy the Appset backup to the SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA system and restore the Appset (called an Environment in BPC 10 and BPC on HANA) with transaction code UJBR. You can restore metadata and master data first, followed by transaction data, or restore everything at once.

2: Commonly encountered errors during migration to BPC on HANA and post-migration steps:

Make sure the BPC content is properly installed on the BPC server, including the activation of EnvironmentShell. To validate the installation, make a copy of EnvironmentShell and test the basic functionality. If the content is not installed properly, you might get the error message below while restoring the custom Environment.

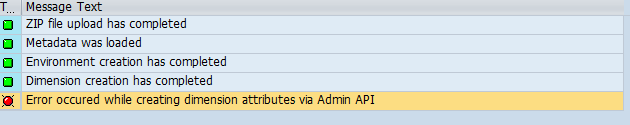

1. “Error occurred when creating Dimension Attributes via Admin API”. The root cause is that the EnvironmentShell in the system was not activated.

Run the program UJS_ACTIVATE_CONTENT with only the "Activate BI content" option selected to activate the EnvironmentShell. Once the EnvironmentShell is activated, UJBR will restore the custom Environments successfully.

2. Sometimes during the EnvironmentShell restore you will also encounter an error with dimension formulas. In that case, make sure SAP Note 1782923 is implemented, or upgrade to BPC on HANA SP09, which already includes the note.

3. When restoring transaction data with transaction code UJBR, make sure work status is disabled in BPC Web Admin; otherwise the restore fails with an error message.

Steps to follow after custom Environment is restored on SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA:

- Once the Environment is restored, run the program UJT_MIGRATE_75_TO_10, which converts the BPC 7.5 Appset to a BPC 10 Environment.

- Add the users to the Environment as described in the installation guide, then log in to the Environment through Web Admin and process the dimensions.

- You can also optimize the Model (if you are OK with the technical name being changed).

- Run the program BPC_HANA_MIGRATE_FROM_10 with transaction code SE38 to make a standard BPC 10 InfoCube HANA-optimized. This program creates the HANA hierarchy table/view to facilitate master data queries and SQE optimizations. It also migrates BPC-related BW cubes to in-memory optimized cubes and creates additional HANA-specific tables and SQL scripts used by the HANABPC component.

- Activate the Global, Environment and Model accelerators in SPRO.

3: Functions and tasks handled and not handled by the migration programs UJT_MIGRATE_75_TO_10 and BPC_HANA_MIGRATE_FROM_10:

- The migration program automatically migrates Comments, Journals, Business Rules, Validations (now called Controls), and Administration settings and configurations (parameters).

- The Content Library and BPFs are migrated automatically, with some limitations.

- Manual migration steps that are not handled by the program UJT_MIGRATE_75_TO_10:

  - BADIs: these need to be moved from BPC 7.5 to 10 via a transport; BADI migration is not handled by the migration tool. In BPC 10 the REST APIs have been rewritten, which means that any custom ABAP solution that calls the internal APIs of 7.5 needs to be tweaked or rewritten.

  - Custom Data Manager packages/process chains: these also need to be moved from BPC 7.5 to 10 via a transport.

  - Templates that call the BPC 7.5 API via a macro or Visual Basic need to be modified to call the new BPC 10 API. Visit the EPM Add-in Academy for examples of how this can be done: http://wiki.sdn.sap.com/wiki/display/CPM/EPM+Add-in+Academy

  - Live reports, dashboards, and custom web pages are NOT migrated.

BADI migration best practices and API mapping examples:

- Try to use the APIs from CL_UJK_MODEL, CL_UJK_READ and CL_UJK_WRITE. These three classes wrap many useful UJA and UJO interfaces and are easy to use.

- Use CL_UJK_LOGGER to write the log.

- Inside the BADI:

  1. Try to use READ TABLE ... WITH TABLE KEY instead of READ TABLE ... WITH KEY.

  2. Try to read from a hashed table or sorted table instead of a standard table.

  3. When using ASSIGN COMPONENT, try to use ASSIGN COMPONENT <number> instead of ASSIGN COMPONENT <component name>.

  4. Try to call ASSIGN COMPONENT once before the LOOP and use LOOP ... INTO, instead of calling ASSIGN COMPONENT inside the LOOP and using LOOP ... ASSIGNING.

- Use E2E and SE30 to analyze performance and identify the bottleneck.
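
The advice above to read from hashed or sorted tables by key comes down to avoiding a row-by-row scan for every lookup. The following is only an illustration of that idea in Python, not ABAP code: a list scan stands in for a free-key read on a standard table, and a dictionary built once stands in for a hashed table. The member IDs and descriptions are made-up sample data.

```python
# Illustration only: Python stand-ins for ABAP internal tables.
# The member IDs and descriptions are hypothetical sample data.
rows = [("ACC%05d" % i, "Account %d" % i) for i in range(50_000)]


def read_linear(table, member_id):
    """Scan row by row, like a free-key read on a standard table."""
    for row in table:
        if row[0] == member_id:
            return row
    return None


# Build the keyed structure once, outside any loop (like filling a hashed table).
hashed = {row[0]: row for row in rows}


def read_hashed(index, member_id):
    """Single keyed access whose cost does not grow with the table size."""
    return index.get(member_id)
```

Both functions return the same row; the difference is that `read_linear` may touch every row on each call, which adds up quickly when a BADI performs a lookup per transaction record.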

API Mapping from BPC 7.5 to BPC 10:

| BPC 7.5 interface | BPC 7.5 method | BPC 10 interface | BPC 10 method |
|---|---|---|---|
| IF_UJ_MODEL | CREATE_MD_DATA_REF | IF_UJA_MEMBER_MANAGER | CREATE_DATA_REF |
| IF_UJA_APPSET-DATA | GET_APPSET_INFO | IF_UJA_APPSET_MANAGER | GET |
| IF_UJA_DIM_DATA | GET_INFO | IF_UJA_DIMENSION_MANAGER | GET |
| IF_UJA_DIM_DATA | GET_DEFAULT_MBR | IF_UJA_MD_READER | GET_DEF_MBR |
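
When many custom BADIs have to be reviewed, a mapping like the one above can be used to flag lines that still call a 7.5 method. The sketch below is one possible helper, written in Python and run against ABAP source exported as text. The function name `find_legacy_calls` is hypothetical, the interface and method names are copied verbatim from the mapping above, and matching on the method name alone is a rough heuristic that will also flag comments or unrelated methods with the same name.

```python
import re

# (BPC 7.5 interface, 7.5 method) -> (BPC 10 interface, BPC 10 method),
# copied verbatim from the mapping listed above.
API_MAP = {
    ("IF_UJ_MODEL", "CREATE_MD_DATA_REF"): ("IF_UJA_MEMBER_MANAGER", "CREATE_DATA_REF"),
    ("IF_UJA_APPSET-DATA", "GET_APPSET_INFO"): ("IF_UJA_APPSET_MANAGER", "GET"),
    ("IF_UJA_DIM_DATA", "GET_INFO"): ("IF_UJA_DIMENSION_MANAGER", "GET"),
    ("IF_UJA_DIM_DATA", "GET_DEFAULT_MBR"): ("IF_UJA_MD_READER", "GET_DEF_MBR"),
}


def find_legacy_calls(abap_source):
    """Return (line_no, old_interface, old_method, new_interface, new_method) hits."""
    hits = []
    for line_no, line in enumerate(abap_source.splitlines(), start=1):
        for (old_if, old_method), (new_if, new_method) in API_MAP.items():
            # Heuristic: match the 7.5 method name as a whole word, ignoring case.
            pattern = r"\b" + re.escape(old_method) + r"\b"
            if re.search(pattern, line, re.IGNORECASE):
                hits.append((line_no, old_if, old_method, new_if, new_method))
    return hits
```

Each hit is only a candidate for manual review; the parameters of the BPC 10 methods still have to be checked by the developer.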

4: How to move very large amounts of Transaction data during migration process:

If the transaction data volume is very large and UJBR runs out of memory or times out, there is another way to get the transaction data into the model. In this section we discuss a scenario where one Application/Model holds over 120 million transaction records, and how that data is moved to the SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA environment using Open Hub and flat file data loading.

In this scenario only the environment is restored, with metadata and master data, and the transaction data is uploaded to the Model directly from the backend BW system.

There are three ways to upload transaction data into your environment:

- Use transaction code UJBR to upload the transaction data from the Environment backup. This is usually ideal for environments with up to 10-15 million transaction records.

- Export the transaction data from the 7.5 Appset to a flat file using a Data Manager package, then upload it into the SAP Planning and Consolidation, version for SAP NetWeaver - Powered by SAP HANA environment using the “Import transaction data from Flat file” Data Manager package. This is ideal for around 1 million records. Larger volumes also work but may take a long time to finish.

- Load the transaction data directly into the BPC cube/model from the backend BW system, an option that works very well: use Open Hub to transfer the transaction data to flat files, then load the files into the cube/model using flat file data loading from the backend.
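
The rough volume guidance above can be written down as a small decision helper. This is only a sketch: the function name is hypothetical, and the cut-offs (1 million and 15 million records) are the approximate figures from this blog, not hard product limits.

```python
def suggest_load_methods(record_count):
    """Suggest load options for a given transaction record count.

    Thresholds are the rough figures from the text, not SAP limits.
    """
    if record_count < 0:
        raise ValueError("record_count must not be negative")
    if record_count <= 1_000_000:
        # Small volumes: either the backup restore or the Data Manager package.
        return ["UJBR restore", "Data Manager flat file package"]
    if record_count <= 15_000_000:
        # Medium volumes: restore from the Environment backup.
        return ["UJBR restore"]
    # Large volumes: export with Open Hub and load flat files in BW.
    return ["Open Hub export plus BW flat file load"]
```

For the scenario in this blog, `suggest_load_methods(124_000_000)` falls into the last branch.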

Steps to Create Open Hub Destination:

- Log in to the BW system.

- Go to transaction RSA1.

- Select the “Open Hub Destination” option under Modeling.

- Expand the Business Planning and Consolidation node.

- Select the Environment/Appset from which you would like to extract the transaction data.

- From the context menu of the Environment, select Create Open Hub Destination.

- In the Create Open Hub Destination window, enter the Environment name as the InfoArea, select InfoCube as the object type, and enter the technical name of the cube/model from which data will be extracted.

- Make sure to select the “Create Transformation and DTP” option and click “OK”.

- When the Open Hub Destination change window opens, on the Destination tab select “File” as the Destination Type and enter the server details: the Server Name, “File Name” as the type of file name, and “Separated by Separator (CSV)” as the data format.

- On the Field Def tab, keep the fields (which are the dimensions in BPC) that are included in the Model and delete the rest. In our model, 14 fields were selected in total.

- Save and activate your Open Hub Destination.

- Select your Open Hub Destination and right-click to create the DTP with the generated transformation rules and the extraction mode set to Full. You can choose the package size, for example 50,000, 100,000 or 250,000.

- Activate and run the DTP. Once the DTP has completed, check the server path you specified in the Open Hub Destination for the flat files.

- You can break down the transaction data by year, for example all 2010 transactions in one flat file, all 2011 in the next and all 2012 in a third. This way the data is logically divided and easy to maintain.
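
If the Open Hub run produced a single large file, one way to divide it by year afterwards is a small script outside BW. The sketch below makes several assumptions that are not from this blog: the file has no header row, the time member sits in a known column (`time_col`), and its first four characters are the year (for example `2010.JAN`). The function name and output file naming are hypothetical.

```python
import csv
from pathlib import Path


def split_by_year(src, out_dir, time_col, delimiter=","):
    """Write one CSV per year and return the row count per year.

    Assumes the first four characters of the time column are the year.
    """
    src = Path(src)
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    handles, writers, counts = {}, {}, {}
    try:
        with src.open(newline="") as fh:
            for row in csv.reader(fh, delimiter=delimiter):
                if not row:
                    continue  # skip empty lines
                year = row[time_col][:4]
                if year not in writers:
                    # Open the per-year output file on first use.
                    target = out_dir / f"{src.stem}_{year}.csv"
                    handles[year] = target.open("w", newline="")
                    writers[year] = csv.writer(handles[year], delimiter=delimiter)
                    counts[year] = 0
                writers[year].writerow(row)
                counts[year] += 1
    finally:
        for handle in handles.values():
            handle.close()
    return counts
```

The returned counts per year are handy later for checking that every file was loaded completely.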

Steps to load the transaction data into the BPC on HANA model/ cube using flat file data loading:

Once you have your transaction data ready in the flat files. Move the Flat files to the BPC on HANA server and login into the BW system.

- In the BW system , login into RSA1 transaction code.

- Under Modeling select Source system and create the Source System under the “File” since the source is Flat File .

- 3. Select the newly created Source system and on context menu select the “Display Datasource Tree” option. This would take you a Datasource screen.

1. 4. Create a DataSource for the Flat File Source system.

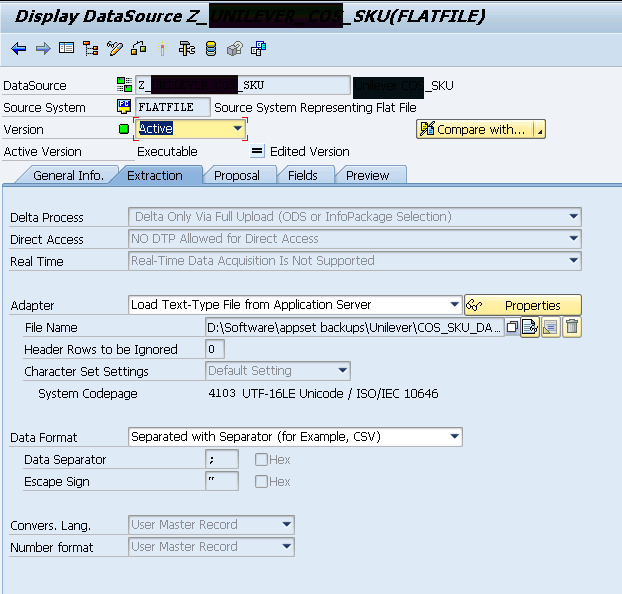

5. When creating the DataSource, make sure under the “Extraction Tab” , the Adapter and Data Format are selected properly. Adapter is the location where the Flat File is located and for Data Format select the “CSV” format as our Flat Files have CSV extension.

1. 6. Under the Fields tab, it would be easy if you could copy and paste the technical names of the dimensions under InfoObject field and press enter button (You can find the technical names by going to Cube – right click – Display – expand dimension folder) . This way when creating the DTP’s, the transformation rules are created and 1:1 mapping will be done for you automatically. You don't have to worry about manually mapping the source and target fields.

1. 7. Once the DataSource is created, right click on DataSource and Select “Create InfoPackage”. InfoPackage will transfer the data from Flat File to Persistence Storage Area “PSA”. I would suggest to run the Infopackage as a background job.

1. 8. Once the data is loaded into the PSA, now create a DTP. In order to create DTP, go to your cube – right click – Select Create Data Transfer Process” . Make sure the source and target fields are correct. DTP would also create Transformation rules for you with all the fields of source properly mapped to targets fields.

1. 9. Once the DTP is created, under the Extraction tab, make sure to use the “Parallel Extraction” option so that data will be loaded into the cube through parallel jobs. This way data will be loaded quickly.

1. 10. Under the Execution tab, select the Processing mode as mentioned in the screenshot

1. 11. Once the DTP job is finished successfully, go to cube – right click – select manage – under request tab, you can see all the requests and how many records are loaded by each request.

1. 12. To see the total number of records in the cube , go to cube – right click – select manage – select Contents tab – click on Fact table – select Number of entries and it would show the no: of records in the Cube / Model. Here we have loaded around 124 million of records.
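
Before comparing against the number of entries shown for the cube, it can help to know how many records the flat files actually contain. The following is a minimal sketch, assuming headerless CSV files. The function names are hypothetical, and the cube figure has to be read manually from the Manage screen. A difference is not necessarily an error, because the cube may aggregate rows that share the same dimension values.

```python
import csv


def count_records(path, delimiter=","):
    """Count non-empty CSV rows in one flat file."""
    with open(path, newline="") as fh:
        return sum(1 for row in csv.reader(fh, delimiter=delimiter) if row)


def reconcile(flat_files, cube_entries):
    """Compare the total rows in the flat files with the cube's entry count."""
    file_total = sum(count_records(path) for path in flat_files)
    return {
        "file_total": file_total,
        "cube_entries": cube_entries,
        "difference": file_total - cube_entries,
    }
```

Call `reconcile` with the list of exported files and the number read from the cube; a large unexplained difference is a signal to check the individual requests.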


(Special thanks to my colleague Cloudy for sharing the BADI best practices info.)
