SAP Community / Products and Technology / Additional Blogs by Members

Effective Cloud-based Software Testing

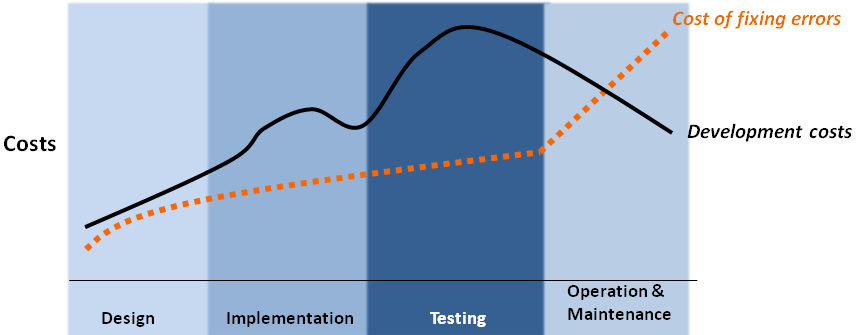

This blog discusses the motivation behind the work my colleague Carmelo Ragusa and I are doing in Belfast on Cloud-based Software Testing. Some of this work is being carried out and trialed within an EU-funded project called BonFIRE. The topic matters now for two main reasons. First, software testing has traditionally been an expensive part of the development process. It demands people, time, resources, rigour and infrastructure, which have been estimated at 30-50% of the development cost [1]. Quality Assurance (QA) teams are known to require 30-50% of the available infrastructure, yet these resources are only 50-80% utilized for 50% of their lifetime [2]. This is significant waste. However, compromising and taking shortcuts in QA does not pay off: it results in unreliable software, unhappy customers and bugs that are more expensive to fix during operations [3]. Moreover, software bugs can lead to critical failures that are expensive to recover from, functionally, financially and reputationally.

Cloud computing therefore presents an opportunity to reduce the cost and time of acquiring infrastructure, testing tools and skills, while maximizing the coverage and quality of software tests. Software testing could even be a killer application for cloud computing, because testing can be decoupled from critical or sensitive data, applications and processes. When these three are coupled, organizations are less inclined to consider cloud computing a viable operating environment for their business.

A second reason to investigate cloud-based software testing is the increased complexity of the cloud software stack and the additional capabilities (e.g. elasticity) that it promises. Network performance and security also become even more critical properties to test, because applications and data are hosted and accessed remotely during operations. The traditional enterprise software stack is typically described as the database, application server, business logic and presentation logic (either a web server or a compiled graphical user interface), together with the operating system and management capabilities. Software deployed in the cloud, however, operates in an environment with more components and dependencies, so more test cases are required before signing off on its quality. For example, virtualization introduces new software layers and execution properties that can alter the behavior observed when the same software runs on real hardware. The platforms that manage the cloud, and the elasticity of application instances and virtual machines, can fail, and those failures can propagate to application services and the user experience. Furthermore, unexpected security or performance bugs can be introduced by running a particular guest operating system on a particular host operating system.
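One way to see why the cloud stack demands more test cases is to enumerate a full-factorial test matrix. The sketch below is a minimal illustration under stated assumptions: the dimension names (`database`, `app_server`, `guest_os`, `host_os`, `scaling_event`), their values and the helper `build_test_matrix` are hypothetical placeholders, not part of any testing tool. Each configuration dimension contributed by the cloud layer multiplies the number of combinations to cover.

```python
from itertools import product

# Hypothetical configuration dimensions; names and values are placeholders only.
TRADITIONAL_STACK = {
    "database": ["db-a", "db-b"],
    "app_server": ["server-a", "server-b"],
}

# Extra dimensions introduced by the cloud layer (also hypothetical).
CLOUD_LAYERS = {
    "guest_os": ["guest-os-1", "guest-os-2"],
    "host_os": ["host-os-1", "host-os-2"],
    "scaling_event": ["none", "scale-out", "scale-in"],
}


def build_test_matrix(dimensions: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one configuration per combination of dimension values (full factorial)."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in product(*(dimensions[n] for n in names))]


on_premise_matrix = build_test_matrix(TRADITIONAL_STACK)
cloud_matrix = build_test_matrix({**TRADITIONAL_STACK, **CLOUD_LAYERS})

print(len(on_premise_matrix))  # 4  (2 * 2)
print(len(cloud_matrix))       # 48 (2 * 2 * 2 * 2 * 3)
```

In practice, teams often prune such matrices with pairwise (all-pairs) or risk-based selection rather than running every combination.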

Using cloud computing for software testing is hence both technically and economically motivated. The immediate notion of cloud-based software testing tends to be the Testing as a Service (TaaS) model, offered by the likes of Cloud9, Sogeti, LoadStorm and HP LoadRunner in the cloud. However, four other models can also be labeled cloud-based software testing, and they should be considered when deciding how the cloud can best be exploited to reduce the software testing budget.

To justify transitioning to the cloud, we take as the baseline testing software on premise, without relying on remote servers hosted at a cloud provider. The five cloud-based models can then be described as patterns:

- Pattern 1 - Testing in the Cloud: a single cloud infrastructure provider hosts both the software under test and the test suite.
- Pattern 2 - System under test in the Cloud: the tester places the system under test (SuT) on a cloud provider's infrastructure but hosts the test suite locally. The test payloads are sent over the Internet to the remote SuT, which could be either software owned by the tester or a SaaS solution.
- Pattern 3 - Test suite in the Cloud: the TaaS model mentioned earlier. Providers offer testing software in the cloud, so the test suite is hosted and controlled outside the domain of the SuT.
- Pattern 4 - Multi-site testing in the Cloud: more than one provider is used, distributing the SuT and the test suite across different domains.
- Pattern 5 - Brokered multi-site testing in the Cloud: the same as Pattern 4, with the addition of an intermediary that brokers test and infrastructure management requests and operations. The broker [B] also provides support functions for resource selection, reservation and deployment.
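The five patterns differ mainly in where the SuT and the test suite are hosted and whether a broker sits between them. As a minimal sketch, assuming two hypothetical providers (`PROVIDER_A`, `PROVIDER_B`) and hypothetical names `Site` and `CloudTestPattern`, the patterns can be captured as data so that properties such as the number of providers involved or cross-domain test traffic can be compared side by side. Placing Pattern 3's SuT on premise is an assumption made for illustration only.

```python
from dataclasses import dataclass
from enum import Enum


class Site(Enum):
    ON_PREMISE = "on-premise"
    PROVIDER_A = "cloud provider A"  # hypothetical provider
    PROVIDER_B = "cloud provider B"  # hypothetical provider


@dataclass(frozen=True)
class CloudTestPattern:
    name: str
    sut_site: Site           # where the system under test (SuT) runs
    suite_site: Site         # where the test suite is hosted and controlled
    brokered: bool = False   # whether a broker mediates test and infrastructure requests

    @property
    def crosses_domains(self) -> bool:
        """True when test payloads travel between different domains (e.g. over the Internet)."""
        return self.sut_site != self.suite_site

    @property
    def providers(self) -> set[Site]:
        """Cloud providers involved, excluding the on-premise site."""
        return {s for s in (self.sut_site, self.suite_site) if s is not Site.ON_PREMISE}


PATTERNS = [
    CloudTestPattern("Baseline: on-premise", Site.ON_PREMISE, Site.ON_PREMISE),
    CloudTestPattern("1: Testing in the Cloud", Site.PROVIDER_A, Site.PROVIDER_A),
    CloudTestPattern("2: SuT in the Cloud", Site.PROVIDER_A, Site.ON_PREMISE),
    # Assumption: the SuT stays on premise; the text only says it is outside the suite's domain.
    CloudTestPattern("3: Test suite in the Cloud (TaaS)", Site.ON_PREMISE, Site.PROVIDER_B),
    CloudTestPattern("4: Multi-site testing", Site.PROVIDER_A, Site.PROVIDER_B),
    CloudTestPattern("5: Brokered multi-site testing", Site.PROVIDER_A, Site.PROVIDER_B, brokered=True),
]

for p in PATTERNS:
    print(f"{p.name:36} providers={len(p.providers)} "
          f"cross-domain={p.crosses_domains} brokered={p.brokered}")
```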

Given these different cloud testing models, how can an organization or its software testers decide which one best fits their situation? We have developed, and continue to evaluate, a process for selecting and assessing cloud testing models, shown in the diagram below.

First, the System under Test (SuT) is specified to create a profile. This identifies the classes of software components, the containers for deploying them, the nature of requests, estimates of concurrent users, and the targeted cloud model (SaaS, PaaS or IaaS). Second, the test environment and infrastructure are selected from a specification of the target infrastructure's properties and requirements, including the operating system, distribution, network access and sizing estimates. Third, the types of tests and test cases to be performed are defined, drawing on predefined test suites, scripts and tools. Tests may include functional, integration, connectivity, performance, scalability, security and regression testing, each of which requires different scaffolding and tools.
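The three steps can be captured as structured input to the selection process. The sketch below is one possible shape under stated assumptions: the class names (`SutProfile`, `InfraSpec`, `CloudTestPlan`), the field names and the validation rule are hypothetical, chosen to mirror the items listed above, and are not taken from BonFIRE or any specific tool.

```python
from dataclasses import dataclass, field
from enum import Enum

# Test types named in the text; treating them as a closed set is an assumption.
KNOWN_TEST_TYPES = {
    "functional", "integration", "connectivity", "performance",
    "scalability", "security", "regression",
}


class CloudModel(Enum):
    SAAS = "SaaS"
    PAAS = "PaaS"
    IAAS = "IaaS"


@dataclass
class SutProfile:
    """Step 1: profile of the system under test."""
    component_classes: list[str]
    containers: list[str]
    request_types: list[str]
    concurrent_users: int
    cloud_model: CloudModel


@dataclass
class InfraSpec:
    """Step 2: target infrastructure properties, requirements and sizing."""
    operating_system: str
    distribution: str
    network_access: str
    vm_count: int
    vcpus_per_vm: int

    @property
    def total_vcpus(self) -> int:
        return self.vm_count * self.vcpus_per_vm


@dataclass
class CloudTestPlan:
    """Step 3: the test types to run against the profiled SuT in the chosen environment."""
    profile: SutProfile
    environment: InfraSpec
    test_types: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject test types outside the list given in the text.
        unknown = set(self.test_types) - KNOWN_TEST_TYPES
        if unknown:
            raise ValueError(f"unknown test types: {sorted(unknown)}")


# Hypothetical example values.
plan = CloudTestPlan(
    profile=SutProfile(
        component_classes=["web front end", "business logic", "database"],
        containers=["web server", "application server"],
        request_types=["HTTP"],
        concurrent_users=500,
        cloud_model=CloudModel.IAAS,
    ),
    environment=InfraSpec("Linux", "example-distro", "public internet", vm_count=4, vcpus_per_vm=2),
    test_types=["functional", "performance", "scalability"],
)
print(plan.environment.total_vcpus)  # 8
```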

To determine whether a selected cloud-based testing model is truly applicable to the situation, we have derived a set of metrics tied to the overall objectives of reducing cost and increasing software quality. These are shown in the figure below.

First, we define “simplicity” and “cost effectiveness” as our two metrics for cost reduction. Simplicity can be measured by the number of tasks, screens and resources, and the amount of information, that must be managed for an end-to-end testing process. Cost effectiveness considers the difference in software testing expenditure between the on-premise case and the selected cloud-based testing model. Depending on how resources are utilized, the aggregate rental and usage fees of cloud resources may, over time, exceed the purchase and maintenance costs of on-premise resources. Another factor in cost effectiveness is waste reduction: the selected model should reduce the amount of wasted storage, CPU and bandwidth compared with the on-premise case.

The second set of metrics indicates the potential increase in quality assurance for the software under test. “Predictability” and “Reproducibility” capture how little the plan execution, environment and results deviate over successive runs. Unless instability is a deliberate property of the test environment, an unstable environment can cause failures in the test process and inaccuracies in the test results and the inferences drawn from them, making the results unreliable. In addition, if the environment lacks “Target Representation”, the test results may still be inconclusive. Good target representation means a reasonable match between the test environment's properties and those of the intended production environment. Again, cloud-based software introduces new software layers for operations, maintenance and dynamic scaling, and with them the potential for new bugs. Because conventional testing processes do not provide strong coverage of these layers, the test environment and test cases also have to include these features.
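The cost-effectiveness and reproducibility metrics lend themselves to simple calculations. The following is a minimal sketch under deliberately simplified assumptions: on-premise cost is modeled as a one-off purchase plus flat monthly maintenance, cloud cost as a flat hourly rate billed only for hours used, and reproducibility as the coefficient of variation (standard deviation divided by mean) of one result, such as response time, across successive runs. All function names and figures are hypothetical and illustrate the trade-off rather than any measured result.

```python
import statistics
from typing import Optional


def on_premise_cost(months: int, purchase: float, monthly_maintenance: float) -> float:
    """Cumulative on-premise cost: one-off purchase plus maintenance per month."""
    return purchase + monthly_maintenance * months


def cloud_cost(months: int, hourly_rate: float, hours_per_month: float) -> float:
    """Cumulative pay-per-use cost: only the hours actually used are billed."""
    return hourly_rate * hours_per_month * months


def break_even_month(purchase: float, monthly_maintenance: float,
                     hourly_rate: float, hours_per_month: float,
                     horizon: int = 120) -> Optional[int]:
    """First month in which cumulative cloud fees exceed on-premise cost, or None."""
    for month in range(1, horizon + 1):
        if cloud_cost(month, hourly_rate, hours_per_month) > on_premise_cost(
                month, purchase, monthly_maintenance):
            return month
    return None


def coefficient_of_variation(samples: list[float]) -> float:
    """Relative spread of a result across successive runs; lower is more reproducible."""
    return statistics.stdev(samples) / statistics.mean(samples)


# Illustrative numbers only, not measurements.
print(break_even_month(20_000, 500, hourly_rate=2.0, hours_per_month=400))  # 67
print(break_even_month(20_000, 500, hourly_rate=2.0, hours_per_month=100))  # None
print(round(coefficient_of_variation([100, 102, 98, 100]), 4))              # 0.0163
```

With 400 billed hours a month, cumulative cloud fees overtake the on-premise cost in month 67; at 100 hours a month they never do, because the monthly cloud fee stays below the on-premise maintenance cost. This is the dependence on utilization that the cost-effectiveness metric has to account for.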

In summary, cloud-based software testing has clear potential for a positive technical and economic impact on organizations that develop, integrate and adapt software. The topic is gaining visibility in the cloud media and in research, but it still lacks established best practices and tools.

[1] M-C. Ballou, "Improving Software Quality to Drive Business Agility", IDC Survey and White Paper (sponsored by Coverity), 2008.

[2] G. Tassey, "The Economic Impacts of Inadequate Infrastructure for Software Testing", NIST Planning Report, 2002.

[3] B. Gauf and E. Dustin, "The Case for Automated Software Testing", Journal of Software Technology, vol. 10, no. 3, October 2007.