- SAP Community

- Products and Technology

- Additional Blogs by Members

- SAP BW and Business Content Datasources: in pursui...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

In the middle of the night, lying on the bed, I am woken up by feeble voices whispering “Can someone tell us how to locate an R/3 table-field mapped to an infoobject in my (std) transfer structure?”.

To be honest I have no haunted bedroom and I usually sleep the sleep of the just, but, speaking seriously, if I have to think to one of the most unanswered post in BI Forums, certainly my thought goes to the question “how can I find the R/3 source of a specific object in BW ?!”.

Sometimes some good Samaritan (as How to locate which R/3 table-field is mapped to BW) arms himself with patience and tries to give a good answer.

But often this is not so easy. And just think SAP invests a lot of efforts to give as many info as possible...but, as usual, a serious chef rarely discloses his secret ingredient !!!

SAP BUSINESS INFORMATION WAREHOUSE: WHAT and HOW ?

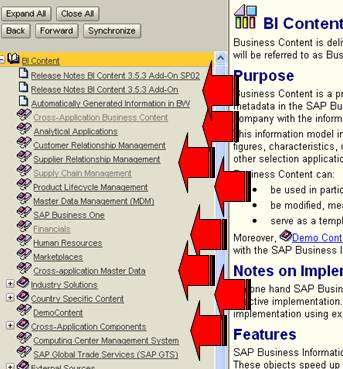

As already discussed in one of my previous LOGISTIC COCKPIT - WHEN YOU NEED MORE - First option: enhance it ! Business Content (BC) has represented (one of) huge added value(s) for a customer (often already owner of a SAP product - usually R/3 - and inclined to buy a Business Intelligence solution), and it played (and still plays) a leading role in SAP Business Warehouse’s success.

Into SAP Glossary, BC is defined as “predefined role and task-related information models that can be suited to enterprise-specific requirements. BC makes the supply of information available to roles in an enterprise, that require this to complete tasks. BC consists of roles, workbooks, queries, InfoCubes, InfoObjects, InfoSources and update rules, as well as extractors, for SAP R/3, SAP New Dimension Applications and for additional selected applications.”.

So, on one hand BW's comprehensive BC enables a quick and cost-effective implementation. On the other hand, it provides a model that can be used as a guideline during implementation using experience gained from other implementations.

Ok, but what about BC related documentation ? In order to have this quick and cost-effective implementation (due to a drastic reduction of the needed development phase), it’s pleonastic saying that I have to know (once identified my functional and technical requirements) WHAT I have to use (from BC) and HOW using it to satisfy my requirements.

About WHAT, SAP Help Portal is the kingdom of this kind of info.

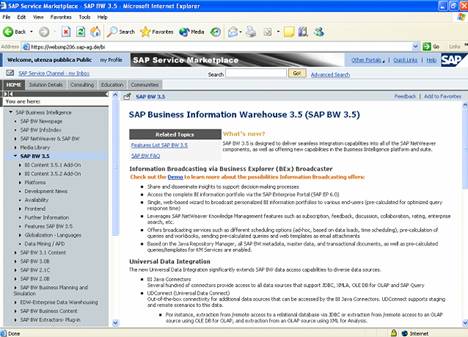

About HOW SAP Service Marketplace is the best source in which news, product documentation, technology articles and many others useful things are featured.

Often not holden in due consideration, there are also the SAP Best Practices for Business Intelligence, that contain the documentation for the installation and configuration of solutions based on a lot of ‘functional’ scenarios.

Just put it to the test !

(Sure, if you want to know WHAT and HOW all in one go, you have to come to /community [original link is broken]...but, if you are here, maybe you already know that and agree with me !!!)

If you have correctly understood what you have to do and how, you arrived at a good point, but now some philosophical question can arise.

Indeed, especially if the number/value you see arriving in your BW seems not so familiar, you can wonder:...

...WHERE DOES IT COME FROM ?!

(or “Desperately seeking something with which reconcile”)

Anyone working inside the BW world knows that very often implementing a flow can be difficult, but we all would rather build hundreds of ODS than see our boss rushing into our room and requiring BW data perfectly reconciled with R/3 (“...to the last penny, please !”).

Fortunately, SAP acknowledges the relevance of this issue and gives us some useful tool, first of all the Data Consistency section in the Service Marketplace and, new entry, a very scheming How To Paper.

Last, but not least, from PI-BASIS 2005.1 SAP plans to deliver reconciliation datasources, gradually for every application.

The problem is that this kind of reconciliation tricks takes into account BW data (after integration, transformation and other typical datawarehousing amenities) compared to R/3 data, but what if we don’t know with which we have to compare BW data ?

Not always this is so banal, especially if you don’t have deeply functional knowledgment of the involved area/module.

This is the reason for what it’s more probable to see questions as “How can we determine the origin tables of datasource X” rather than “Which method can we use to reconcile our BW data”...

SOMETIMES LIFE IS EASY: WHEN AN HELP COMES FROM THE HELP

That is, sometimes (but anyway this method should be the first step to take into account...) it’s enough to go to help.sap.com, insert the datasource name in the search engine and, if you are lucky, you can find a this ready-to-use hint:

But, unfortunately, not always we can thank our lucky stars and, sometimes, we can also find, after a similar search, not so intelligible informations...

As you can see from the above picture, in this case the origin table information has been replaced by a mysterious source...and I challenge anyone to try to understand from where (in R/3) this data comes (if you don’t have any experience in FI-AA, clearly...).

EVERYTHING IN A TABLE: ROOSOURCE WORLD

In the underground world of BW-worms, there is a table that is a real source of useful information, a sort of X-ray of every available datasource in the system: ROOSOURCE table.

Let’s start to have a quick look to it.

The most important fields are contained in the include ROEXTRACT:

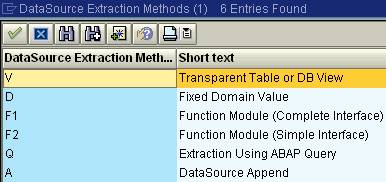

- DataSource Extraction Methods (EXMETHODS)

Just knowing what is the extraction method of a datasource and our search of the origins achieves a vast improvement!

A datasource can extract data in several ways:

'V': over a transparent table or a database view.

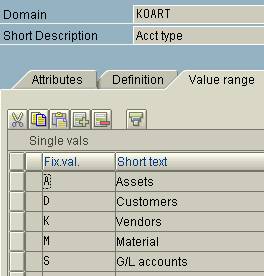

'D': using the fixed values of a domain (useful for very small amounts of data).

'F1': using a function module, which distributes the data in packages by itself (useful for large quantities of data).

'F2': as above, using a function module, but with a more simplified interface compared to 'F1' (see documentation for data element ROFNAME_S in SE11 for more details).

'Q': using a functional area of an ABAP query or using an infoset of the infoset query (depending from the release).

'A': with an append-datasource via BADI.

- DataSource Extractor (ROEXTRACTO)

This field contains, depending on the extraction method, what we need to know (or, at least, the place where we can look for...) !!!

'V': name of transparent table or database view using which (generic) extraction is to take place. In this case it’s enough to go to SE16 with this name and the game is over !

'D': name of domain whose texts are to be extracted (as in the picture below for KOART domain, inside 0ACCT_TYPE_TEXT datasource, via SE11).

'F1': name of function module for extraction. Go to SE37 to check the extraction code (take a look to a nice template in function group RSAX, FB RSAX_BIW_GET_DATA).

'F2': as above but the template, always in function group RSAX, is FB RSAX_BIW_GET_DATA_SIMPLE)

'Q': name of functional area or infoset query (look at SQ01 transaction).

'A': DDIC name of append structure for reasons of consistency

- Name of the provider structure for an InfoSource (ROSTRUC)

Here you will find the name of the structure that will pass data after the extraction and this is what we see when R/3 data arrives in BW (even if there could be also a userexit in the middle, the structure of every record will remain the same).

- DataSource uses generic interfaces (ROGENFLAG)

In this case the datasource uses the generic transfer structure to transfer data through the table parameter e_t_data, typed at runtime. This category includes only datasources generated by the customer (as in LIS or CO-PA).

- DataSource Delivers Consistent Data with Synchronous Access (ROVIRTFL)

This field shows if the datasource supports direct access from a virtual infocube in BW.

Now, some consideration.

Anything is custom is not involved in this weblog: if something has been built by us (or by someone else, with related technical documentation...), we should know from where this datasource extracts data (apart from loss of memory...)!

On a total of 1512 delivered (std) datasources (PI 2003_1_470), we have this distribution:

A (1), Q (3), F2 (7).

Most of A, Q and F2 datasources are verification and test ones (practically speaking, not relevant).

And, on the podium, D (162, all text master datasource), F1 (623) and V (716).

We already saw that there is no problem with domains and tables/views, so the only obstacle in finding out what we need (the tables of origin) is represented by the extraction done through a function module. And this is the case of the most used transactional extractors.

Often, a single function module can be used by several (and functional similar) datasources: MCEX_BW_LO_API for every LC datasources (more than 60!), MCS_BIW_LIS_API for the extraction of LIS (more than 20), MDEX_MATERIAL_MD (for all datasource extracting from knvk, knvv, kna1, knb1) and so on...

So, which tables are involved in a function module and how can I find some useful info ?

Apart from reading the ‘static code’ from SE37 (but this might be applicable not always), there are some other option...

SQL TRACE (when you flirt with your DB...)

If you want to know from which tables the extraction program pulls out the data and if you have a friendly relation with your DB, you can try your fortune with a reading of the SQL trace, a database-oriented tool provided by SAP as a performance trace function for monitoring and analyzing SQL statements used in reports and transactions (as glossary says).

You can start from RSA3 transaction, that allows to check the extraction content of every active datasource in BW simply in simulation mode (by the way, do you know that in RSA3 you can also, but only from BW 4.0 release, enter the ID of an existing request in your BW to have all the information for that request transfered to the extractor checker via an RFC call from the specified BW system ? Cool and really useful !)...

To activate the SQL trace choose from the menu path System, Utilities, Performance Trace or go directly to ST05 transaction; then turn the SQL trace on.

As already suggested, go to RSA3 and execute an extraction with the datasource for which you need more info.

Now you can return to ST05, turn your trace off (otherwise your system will continue to record every breath you take on your R/3 !!!) and click on the button ‘List trace’ to view the report and analyze the trace the system has been generated during the extraction simulation.

If you look at the column ‘Object’ you can find a list of all the tables hit with the actual values used for the selection.

DEBUGGING (when the truth is at the end of a winding road)

Ok.

Do you try with SQL trace ?

Are you amazed to see how many tables a system can potentially read during an extraction (and not everything is strictly related to BW) ?

Indeed, now you know what are the tables involved, but this doesn’t mean that you will be able to link a field to a table!

More: how could you understand that a BW-field content comes not simply from a specific R/3 table, but after several more or less complex calculation logics, alternative scenarios (if-clauses) and so on ?

This is paradoxical, but, in this case, last chance is the best chance: debug the extraction !

Generally speacking (from any transaction), it’s enough to enter /H in the transaction code field and press enter: this puts you into debugging mode (the same if you choose from the menu path System, Utilities, Debug).

But in our situation (we are in RSA3 transaction) SAP gives us the possibility to have easier life: just flag the checkbotton ‘Debug Mode’ (shown in the previous picture) and start your extraction.

On the first screen of your debug go to Breakpoints, Breakpoints at, Statement and enter the statement ‘SELECT’, then click on the ‘Continue’ button. In this way the system will stop the program at the first (and at every) ‘SELECT’ statement.

Clearly it’s necessary to know a little about ABAP, in order to understand where it’s useful to stop (by analyzing the processing blocks in the Overview screen) or to avoid unuseful and exhausting loops (in which a single select can be performed many many times!).

But, overall, only by following this way you can find how a specific field is calculated or why a certain record is not taken into account !

Debugging an extraction is surely not by any means an easy task, it is a job which requires a great deal of patience, but only through this method you will be able to see behind the matrix !!!

OTHER TIPS AND TRICKS...

Sometimes the solution is just in the extract structure name of a datasource (you can see it via RSA5/RSA6 transactions with a double-click on a specific datasource): for example, 0ABCPROCESS_ATTR datasource has BIW_CBPR as extract structure and, in this case, CBPR table (CO-ABC: Activity Master Table) is the source of extracted records.

Besides, you can look at the function module RSA1_SINGLE_OLTPSOURCE_GET that, after having inputed the datasource in OLTPSOURCE parameter, returns all tables linked to that datasource(OLTPSOURCE).

In the end, it’s evident that finding what’s the source of a specific info can be sometimes very easy and requires titanic efforts in other cases. Other different requirements can arise (as happened ETDAT), but there is always a common denominator: the pursuit of the origins. And, in my opinion, what do you get out of having everything ready-made?

- SAP ERP Functionality for EDI Processing: UoMs Determination for Inbound Orders in Enterprise Resource Planning Blogs by Members

- Exploring Integration Options in SAP Datasphere with the focus on using SAP extractors in Technology Blogs by SAP

- Modellierung ‘24 Conference: A Glimpse At The Future of Process Modeling in Technology Blogs by SAP

- API Composition with Graph: customizing your Business Data Graphs with Model Extensions in Technology Blogs by SAP

- SAP Datasphere Connectivity With S/4 HANA System & SAP Analytics Cloud : Technical Configuration in Technology Blogs by Members

| User | Count |

|---|---|

| 1 | |

| 1 | |

| 1 | |

| 1 |