- How to Implement the New Speech-to-Text in Chatbot...


03-31-2022

9:03 AM

SAP Conversational AI has added speech-to-text support to its chatbot, but what is included in this support, and what are the different ways you can use it?


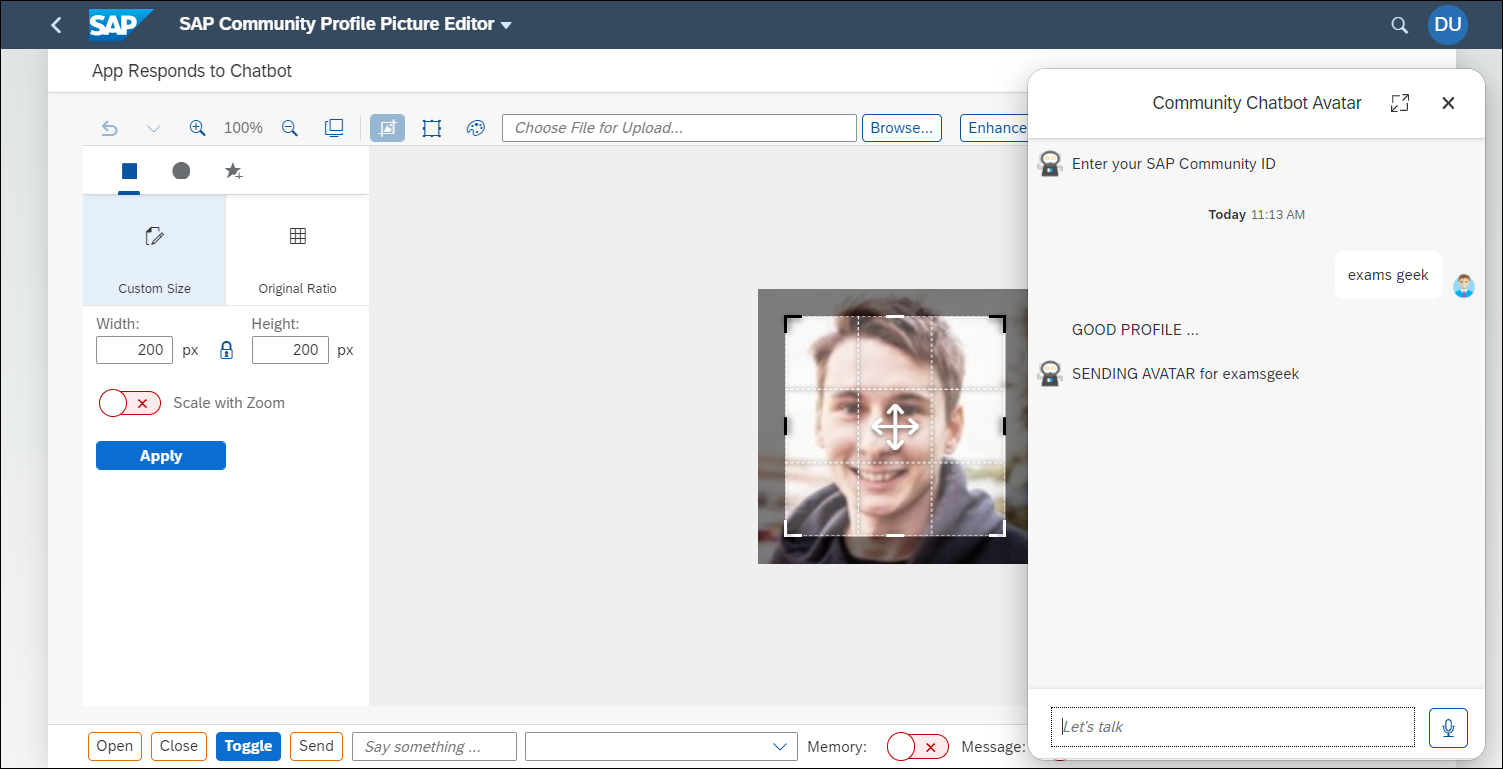

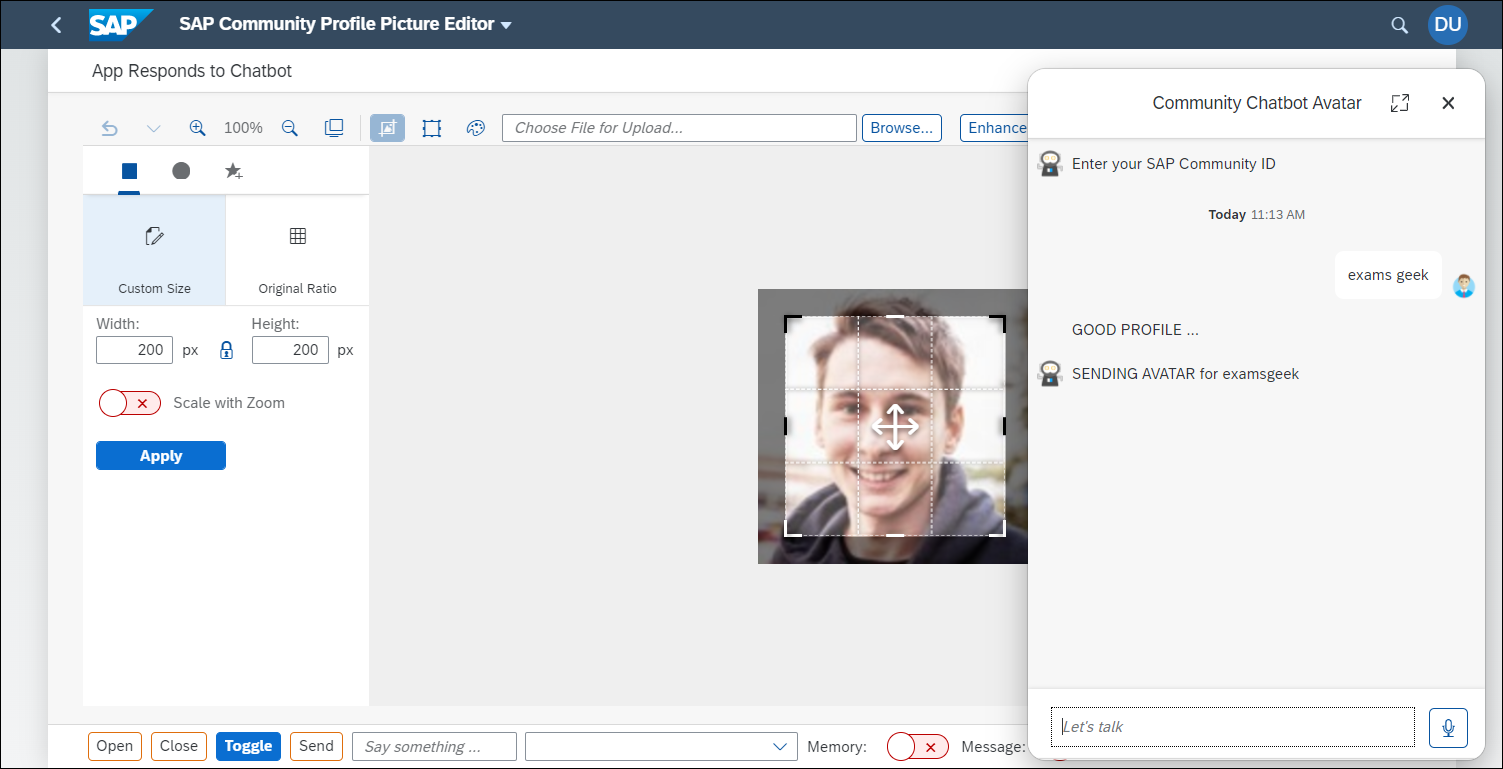


The documentation is available on GitHub (nice information, including about other Web Client APIs), but below is my deconstruction, plus an example of how I implemented speech-to-text. You can also work through the 2 new speech-to-text tutorials:

- Add Speech-to-Text to Your Chatbot, where a JavaScript library works with the browser to handle speech recognition and transcription

- Add Speech-to-Text to Your Chatbot (with recorder), where the chatbot handles the speech recognition and sends audio to the STT service.

You can also check out the Tech Byte video that explains/shows you how to do all this.

What's available with STT?

The speech-to-text capability provides a set of features, and you can use some of them or all of them. Here they are:

Microphone Button

Simply by implementing the window.sapcai.webclientBridge.sttGetConfig method so that it returns an object, you get the microphone displayed.

The object can be empty and you will still get the microphone. It may not do anything, but you'll get it.
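As a minimal sketch of that idea, the snippet below registers a bridge whose sttGetConfig returns an empty object. The `root` fallback and the `minimalBridge` name are assumptions added here so the snippet can also run outside a browser; only the bridge location and method name come from this post.

```javascript
// Use the browser's window when available; fall back to globalThis so the
// sketch can also be exercised outside a browser (an assumption of this sketch).
const root = typeof window !== 'undefined' ? window : globalThis;

// Hypothetical name for the smallest possible bridge.
const minimalBridge = {
  // Returning an object, even an empty one, is what enables the microphone button.
  sttGetConfig: async () => ({}),
};

// Merge into any bridge that already exists rather than overwriting it.
root.sapcai = root.sapcai || {};
root.sapcai.webclientBridge = {
  ...(root.sapcai.webclientBridge || {}),
  ...minimalBridge,
};
```

With only this in place the button appears, but pressing it does nothing until the other bridge methods are implemented.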

Trigger UI Events

Along with the microphone, you can capture when someone presses it by implementing window.sapcai.webclientBridge.sttStartListening.

In this method, you can start your speech-to-text service, set up callback methods for when audio is transcribed, open the browser microphone, and do any start-up tasks you need.
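The start-up work described above could be organized roughly as follows. This is only a sketch: `createSttBridge`, `sttClient`, its `start` method, and `isListening` are hypothetical names invented for illustration, and only the two bridge method names come from this post.

```javascript
// Hypothetical factory that wires a bridge to any STT client exposing
// start(onResult). Neither the factory nor the client shape is part of the
// Web Client API; they only illustrate where start-up work would go.
function createSttBridge(sttClient, onResult) {
  let listening = false;

  return {
    // Returning an object enables the microphone button.
    sttGetConfig: async () => ({}),

    // Called when the user presses the microphone.
    sttStartListening: async () => {
      if (listening) return;        // ignore repeated presses
      listening = true;
      sttClient.start(onResult);    // start the service and register the callback
    },

    // Helper for this sketch only, handy when debugging.
    isListening: () => listening,
  };
}
```

Keeping the service behind a small client object like this makes it easier to swap one STT provider for another without touching the bridge.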

Display Interim Results

If your STT service provides interim results while the user talks, the chatbot provides a place to put these interim transcriptions.

The Web Client provides a method you can call, onSTTResult. The method takes the text you want to display and a boolean to indicate whether these are interim results (displayed in the interim transcription window) or final (sent to the chatbot conversation as the next utterance).

The interim transcription window contains the abort and stop buttons, and you can also capture when the user clicks these and do any needed cleanup:

- sttAbort

Capture Audio (Media Recorder)

If you want, the chatbot can handle interacting with the browser to capture the audio. This is configured in the window.sapcai.webclientBridge.sttGetConfig method. The example below uses this option by returning useMediaRecorder: true.

If the chatbot does handle the audio, then you can implement a few methods for handling this:

- sttOnInterimAudioData, which receives an interim blob that you can pass on to your STT service

- sttOnFinalAudioData, which receives a final blob when the user stops talking and that you can pass on to your STT service

- sttStopListening, which is called when the user stops talking and in which you close websockets and do any other cleanup work
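Whichever way the audio is captured, transcripts reach the chatbot through onSTTResult. Here is a small sketch of that hand-off. The `forwardTranscript` helper is hypothetical, and the object shape with `text` and `final` properties is taken from the IBM example later in this post rather than from the official documentation.

```javascript
// Hypothetical helper: forwards one transcript to the Web Client.
// `webclient` is assumed to expose onSTTResult, the way
// window.sap.cai.webclient does in the IBM example in this post.
function forwardTranscript(webclient, transcript, isFinal) {
  const text = (transcript || '').trim();
  if (!text) return false;            // skip empty results

  // final === false -> shown in the interim transcription window
  // final === true  -> sent to the conversation as the next utterance
  webclient.onSTTResult({ text, final: Boolean(isFinal) });
  return true;
}
```

In a browser you would call it as forwardTranscript(window.sap.cai.webclient, "exams geek", false) while the user is still talking, and with true once the service reports a final result.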

Using IBM Speech-to-Text Service

I used the recent SAP Community Code Challenge image editor project as the starting point. One of my added features was to enable a chatbot to select a community profile avatar to be loaded into the application.

- You open the chatbot and type the community ID, and the image editor loads the corresponding avatar.

- The chatbot checks if it is a valid ID and lets the user know. If not valid, the chatbot still loads the default avatar.

- The chatbot also fixes some formatting by eliminating spaces and converting to lowercase.
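The formatting fix in the last bullet happens inside the chatbot, and its actual logic is not shown in this post. As a rough illustration only, the same cleanup could look like this in JavaScript, where `normalizeCommunityId` is a hypothetical name:

```javascript
// Hypothetical helper mirroring the cleanup described above:
// remove all whitespace, then convert to lowercase.
function normalizeCommunityId(spoken) {
  return String(spoken).replace(/\s+/g, '').toLowerCase();
}

console.log(normalizeCommunityId('Exams Geek')); // prints "examsgeek"
```

This matters for speech input in particular, because a transcription service typically returns separate words rather than a single ID.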

Here's how I enabled speech to text for the chatbot. If you want to replicate this, you would need to get an IBM Cloud account, get a service plan for speech to text (there is a free one), and generate tokens for connecting.

I created a file called webclient.js with just the tokens I needed. In real life I would have hidden the tokens, and created a service to generate the token for calling the service, which expires every 30 minutes.

const data_expander_preferences = "<chatbot preferences>";
const data_channel_id = "<chatbot channel ID>";
const data_token = "<chatbot token>";
const ibmtoken = "<IBM token>";
const ibmurl = "<IBM service URL for your tenant>";

I then created a file called webClientBridge.js, which defines the bridge object with all the STT methods and forwards each call to an implementation object if one is registered.

const webclientBridge = {

  // ALL THE STT METHODS
  //--------------------

  // Forward a call to the implementation object, if one is registered.
  callImplMethod: async (name, ...args) => {
    console.log(name)
    if (window.webclientBridgeImpl && window.webclientBridgeImpl[name]) {
      return window.webclientBridgeImpl[name](...args)
    }
  },

  // if this function returns an object, WebClient will enable the microphone button.
  sttGetConfig: async (...args) => {
    return webclientBridge.callImplMethod('sttGetConfig', ...args)
  },

  sttStartListening: async (...args) => {
    return webclientBridge.callImplMethod('sttStartListening', ...args)
  },

  sttStopListening: async (...args) => {
    return webclientBridge.callImplMethod('sttStopListening', ...args)
  },

  sttAbort: async (...args) => {
    return webclientBridge.callImplMethod('sttAbort', ...args)
  },

  // only called if useMediaRecorder = true in sttGetConfig
  sttOnFinalAudioData: async (...args) => {
    return webclientBridge.callImplMethod('sttOnFinalAudioData', ...args)
  },

  // only called if useMediaRecorder = true in sttGetConfig
  sttOnInterimAudioData: async (...args) => {
    // send interim blob to STT service
    return webclientBridge.callImplMethod('sttOnInterimAudioData', ...args)
  },

  // OTHER BRIDGE METHODS
  //--------------------

  // called on each message
  onMessage: (payload) => {
    payload.messages.forEach(element => {
      if (element.participant.isBot && element.attachment.content.text.startsWith("SENDING AVATAR")) {
        // The avatar ID starts at character 19 of the bot's message text.
        const profile = element.attachment.content.text.substring(19);
        window.sapcai.webclientBridge.imageeditor.setSrc("https://avatars.services.sap.com/images/" + profile + ".png")
      }
    });
  }
}

window.sapcai = {
  webclientBridge,

}

And the last new file I created was webClientBridgeImpl.js with the implementation (I could have combined the last 2 files). This file opens a websocket to the IBM service when the user clicks the microphone and sends interim and final audio blobs to the service. The websocket callbacks put the transcribed texts into the interim transcription area or the conversation.

const IBM_URL = ibmurl

const access_token = ibmtoken
let wsclient = null

const sttIBMWebsocket = {
  // Ask the Web Client to record the audio and hand over the blobs.
  sttGetConfig: async () => {
    return {
      useMediaRecorder: true,
      interimResultTime: 50,
    }
  },

  // Called when the user clicks the microphone: open the websocket to IBM.
  sttStartListening: async (params) => {
    const [metadata] = params
    const sttConfig = await sttIBMWebsocket.sttGetConfig()
    const interim_results = sttConfig.interimResultTime

    wsclient = new WebSocket(`wss://${IBM_URL}?access_token=${access_token}`)

    // Once connected, tell the service to start recognizing.
    wsclient.onopen = (event) => {
      wsclient.send(JSON.stringify({
        action: 'start',
        interim_results,
        'content-type': `audio/${metadata.audioMetadata.fileFormat}`,
      }))
    }

    // Pass the latest transcript to the Web Client.
    // _ is lodash, which must be loaded on the page.
    wsclient.onmessage = (event) => {
      const data = JSON.parse(event.data)
      const results = _.get(data, 'results', [])
      if (results.length > 0) {
        const lastresult = _.get(results, `[${results.length - 1}]`)
        const m = {
          text: _.get(lastresult, 'alternatives[0].transcript', ''),
          final: _.get(lastresult, 'final'),
        }
        window.sap.cai.webclient.onSTTResult(m)
      }
    }

    wsclient.onclose = (event) => {
      console.log('OnClose')
    }

    wsclient.onerror = (event) => {
      console.log('OnError', JSON.stringify(event.data))
    }
  },

  // Called when the user stops talking: close the socket after a delay
  // so the final results can still arrive.
  sttStopListening: async () => {
    const client = wsclient
    setTimeout(() => {
      if (client) {
        client.close()
      }
    }, 5000)
  },

  // Called when the user clicks abort: close the socket immediately.
  sttAbort: async () => {
    if (wsclient) {
      wsclient.close()
      wsclient = null
    }
  },

  // Send each interim audio blob to the service.
  sttOnInterimAudioData: async (params) => {
    if (wsclient) {
      const [blob, metadata] = params
      wsclient.send(blob)
    }
  },

  // Send the last audio blob, then tell the service to stop.
  sttOnFinalAudioData: async (params) => {
    if (wsclient) {
      const [blob, metadata] = params
      wsclient.send(blob)
      wsclient.send(JSON.stringify({
        action: 'stop',
      }))
    }
  },
}

window.webclientBridgeImpl = sttIBMWebsocket

In the SAPUI5 application, I did the following:

- Loaded these JavaScript files in the sap.ui.define.

- Loaded the chatbot script after rendering the view.

- Saved a reference to the image editor in the window object, so I could reference it within the chatbot client APIs. I imagine there is a better way to do this.

If you want to see all the details, see the tutorial at Add Speech-to-Text to Your Chatbot (with recorder).
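The example hardcodes its tokens in webclient.js, although the IBM token expires every 30 minutes. One way to approach the "real life" version is a small cache that asks a token service for a new token shortly before the old one runs out. The sketch below assumes a hypothetical `fetchToken` function (for instance a call to your own backend), and `createTokenCache`, the 30-minute default, and the one-minute safety margin are illustrative choices, not part of the original project.

```javascript
// Hypothetical token cache. fetchToken is an assumed async function that
// returns a fresh token string, for example by calling your own backend.
// `now` is injectable so the cache can be tested without waiting.
function createTokenCache(fetchToken, ttlMs = 30 * 60 * 1000, now = Date.now) {
  const safetyMs = 60 * 1000;   // refresh one minute before expiry
  let token = null;
  let expiresAt = 0;

  return async function getToken() {
    if (!token || now() >= expiresAt - safetyMs) {
      token = await fetchToken();
      expiresAt = now() + ttlMs;
    }
    return token;
  };
}
```

sttStartListening could then await getToken() right before opening the websocket instead of reading a constant.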

Result

I open the Community Contest image editor, and open the chatbot.

Then click on the microphone.

I say the name of a community ID, like "exams geek". The transcribed words go into the interim transcription area.

When I stop talking, the text is transferred into the conversation, and my client-side onMessage method captures the text, checks what it says, and then updates the image editor picture with the proper avatar.
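The check that onMessage performs can be pulled into a small function that is easier to test and tolerates messages without text. This is a sketch: `avatarUrlFromMessage` is a hypothetical name, while the marker text, the offset of 19 characters, and the avatar URL are copied from the onMessage code above. The exact wording of the bot's message is not shown in this post, so the offset is taken on trust.

```javascript
const AVATAR_BASE = 'https://avatars.services.sap.com/images/'; // from the code above
const MARKER = 'SENDING AVATAR';                                // from the code above
const ID_OFFSET = 19;                                           // from substring(19) above

// Hypothetical helper: returns the avatar URL for a bot message that
// announces an avatar, or null for any other message.
function avatarUrlFromMessage(element) {
  const text = element?.attachment?.content?.text;
  const isBot = Boolean(element?.participant?.isBot);
  if (!isBot || typeof text !== 'string' || !text.startsWith(MARKER)) {
    return null;
  }
  const profile = text.substring(ID_OFFSET).trim();
  return profile ? `${AVATAR_BASE}${profile}.png` : null;
}
```

Inside onMessage, a non-null result would be passed straight to the image editor's setSrc, and anything else would be ignored.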

