SAP Community, Technology Blogs by SAP: Lumira MongoDB Connectivity with External data sou...


Introduction:

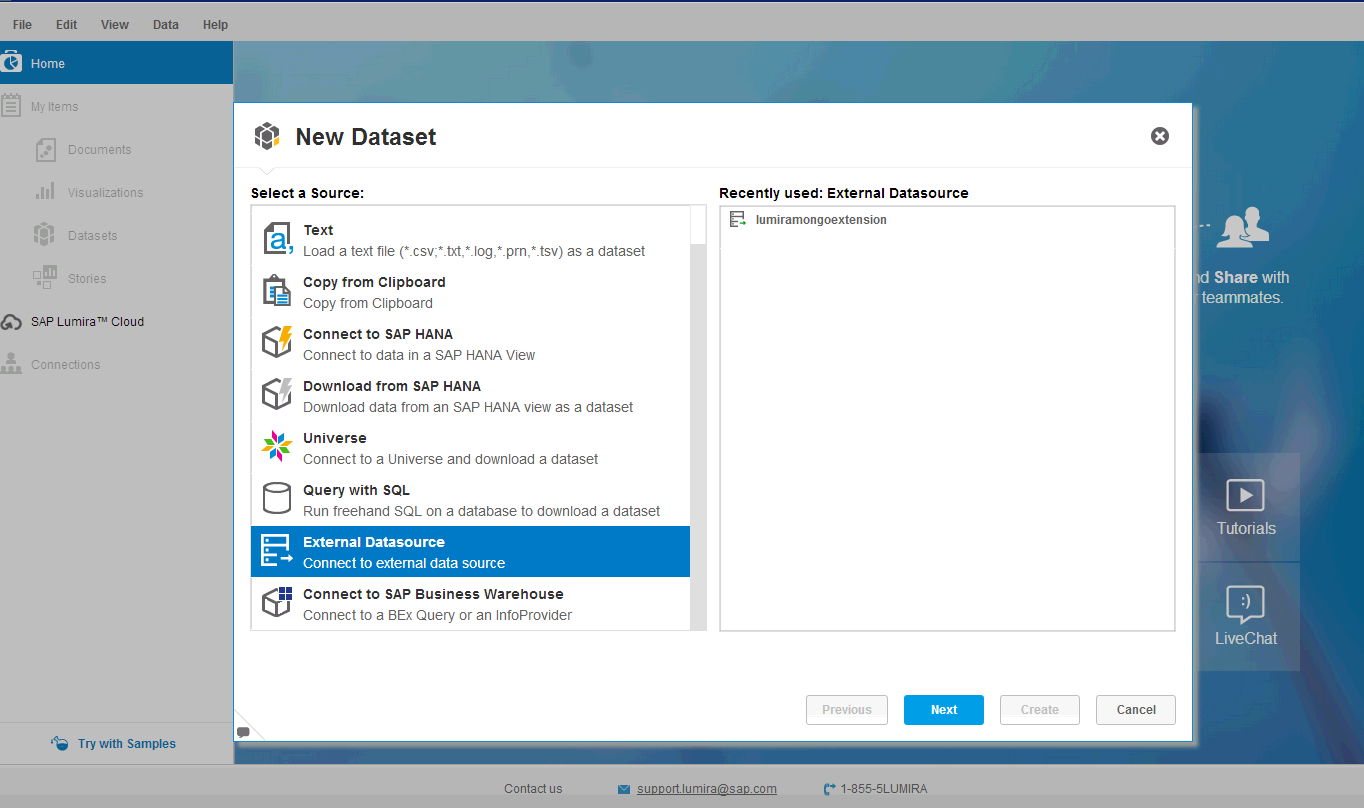

With SAP Lumira 1.17, you can connect to your custom data sources in addition to the standard data source options, using the new "External datasource" option. Let me show you how to visualize MongoDB data inside SAP Lumira using this feature.

What we are going to achieve:

To demonstrate MongoDB and SAP Lumira connectivity, I chose to visualize a tag cloud of words from popular blogs inside Lumira, with the data coming from MongoDB. MongoDB is a natural choice for social data, and aggregations over such data can easily be done using MongoDB’s MapReduce feature.

Technologies chosen:

To demonstrate the Lumira-MongoDB connectivity, I have chosen the following technologies: 1) SAPUI5, 2) JavaFX, 3) the Mongo Java Driver and 4) MongoDB.

Background:

To get started, a short introduction to “External Data source extensions” is necessary. Lumira comes with a standard set of data source options: a) CSV b) Excel c) clipboard d) HANA (online) e) HANA (offline) f) a plethora of SQL data sources using Freehand SQL g) BW h) Universe

If you have a data source that doesn’t fall into the above categories, then external datasource extensions could be for you. You build an executable and put it under the “daextensions” folder inside your Lumira installation path (something like “C:\Program Files\SAP Lumira\Desktop\daextensions\”). Your executable should listen for commands passed over stdin and stream the data out over stdout. The commands from Lumira are currently 1) Edit, 2) Preview and 3) Refresh, which correspond to the stages of data availability in Lumira.
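The three commands can be pictured as a small dispatch skeleton. This is a hypothetical sketch, not the article's UI5Webview.java: the class name `ExtensionSkeleton`, the `parseMode` helper and the assumption that the mode arrives as a token such as `preview` among the program arguments (for example `-mode preview`) are illustrative guesses that follow the later description of the Main method parsing its arguments; the real command format is defined in the Lumira "External datasource extensions" documentation.

```java
import java.util.Locale;

// Hypothetical skeleton of an external data source executable.
// ASSUMPTION: the mode is one token among the program arguments
// (e.g. "-mode preview"); check the Lumira extension documentation.
class ExtensionSkeleton {

    // The three stages of data availability named in the post
    enum Mode { EDIT, PREVIEW, REFRESH }

    // Return the first argument token that names a known mode (case-insensitive)
    static Mode parseMode(String[] args) {
        for (String arg : args) {
            String token = arg.trim().toUpperCase(Locale.ROOT);
            for (Mode m : Mode.values()) {
                if (m.name().equals(token)) {
                    return m;
                }
            }
        }
        throw new IllegalArgumentException("No mode (edit/preview/refresh) in arguments");
    }

    public static void main(String[] args) {
        // Fall back to a sample argument list when run without arguments
        String[] input = args.length > 0 ? args : new String[] {"-mode", "preview"};
        Mode mode = parseMode(input);
        switch (mode) {
            case PREVIEW:
                // In this article's code, preview opens the SAPUI5 page in a JavaFX WebView
                System.out.println("preview requested");
                break;
            case EDIT:
                System.out.println("edit requested");
                break;
            case REFRESH:
                System.out.println("refresh requested");
                break;
        }
    }
}
```

Matching whole tokens rather than a fixed flag position keeps the sketch tolerant of whatever argument layout Lumira actually uses.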

For in-depth information, please read the Lumira documentation on “External datasource extensions”. Note that the External data sources option may not appear when you create a new dataset unless you add the following entries to SAPLumira.ini:

-Dactivate.externaldatasource.ds=true

-Dhilo.externalds.folder=C:\Program Files\SAP Lumira\Desktop\daextensions

The source code for this article is also published (see the link at the end of this post); it should help you understand the details and get your hands dirty.

To build the executable, generate a runnable JAR and give it as input to Launch4j.

MH370 Flight:

Data from three blogs on the internet was pulled and inserted into MongoDB. To make things simpler, I have the data ready for you in JSON format; you can find it in the “WebpageDB” folder of the accompanying source code. Run the following command to import it into MongoDB:

mongoimport --db MongoWebpageDB --collection webpage --file webpage.json

This command creates a database named “MongoWebpageDB” and loads the data from webpage.json into the webpage collection. Note that I removed stopwords from the data, so very common words (such as “the” or “and”) are not present.
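The post does not show how the stopwords were removed. As a hypothetical sketch only, a filter like the following drops common words before they are counted; the `StopwordFilter` class and its tiny stopword list are illustrative assumptions, not the list used to prepare webpage.json.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical stopword filter. ASSUMPTION: the stopword list is a small
// illustrative sample, not the one used for the article's data.
class StopwordFilter {

    static final Set<String> STOPWORDS = new HashSet<>(Arrays.asList(
            "a", "an", "and", "the", "of", "to", "in", "is", "it", "on", "for"));

    // Lower-case the text, split on runs of characters that are not letters or digits,
    // and keep the non-empty tokens that are not stopwords
    static List<String> filter(String text) {
        List<String> kept = new ArrayList<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+")) {
            if (!token.isEmpty() && !STOPWORDS.contains(token)) {
                kept.add(token);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        System.out.println(filter("The tag cloud shows the most frequent words in the blogs"));
    }
}
```

Running `main` prints `[tag, cloud, shows, most, frequent, words, blogs]`.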

Data preview & Mongo Connectivity UI:

UI5Webview.java has the “Main” function, which is the entry point: the arguments (commands) passed from Lumira hit the “Main” method first. The arguments are then parsed by the parsearguments function to check what Lumira is requesting. Once the mode is recognized as preview, the JavaFX WebView component is initialized with the SAPUI5-based HTML file as its URL. All the required SAPUI5 resources are in the webcontent folder.

The HTML file contains input elements where the user enters the MongoDB-specific information required to connect to the database.

If selecting the data source requires a wizard (multiple screens), you can build that in SAPUI5 as well; however, the UI technology can be anything of your choice.

Here I have used SAPUI5 UI elements for the MongoDB database name, collection name and port. These correspond to the mongoimport command we ran earlier. On pressing “Fetch data” in the UI, this metadata is streamed to Lumira; see the StreamDSInfo method in the streamhelper class for an overview of how it is passed. The metadata passed to Lumira looks something like this:

beginDSInfo

csv_separator;,;true

csv_date_format;M/d/yyyy;true

csv_number_grouping;,;true

csv_number_decimal;.;true

csv_first_row_has_column_names;true;true

lumongo_host;localhost;true

lumongo_port;27017;true

lumongo_user;abc;true

lumongo_pass;[C@14b746c8;true

lumongo_collection;testcollection;true

lumongo_dbname;test;true

endDSInfo
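The metadata block is a simple line format: `beginDSInfo`, one `name;value;true` line per parameter, then `endDSInfo`. The sketch below produces lines in that shape; `DsInfoWriter` is a hypothetical helper rather than the article's StreamDSInfo method, and since the meaning of the trailing `true` is not explained in this post, it is simply copied. One detail in the sample is worth noticing: `[C@14b746c8` is what Java's default `toString()` returns for a `char[]` (such as a password field's value), so the sample carries the array's identity string rather than the password characters; `new String(chars)` converts the array into its contents. Either way, the password travels in plain text on stdout.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper producing the DSInfo lines shown in the sample above.
// ASSUMPTION: values must not contain ';' or line breaks, since ';' separates
// the fields and each parameter occupies one line.
class DsInfoWriter {

    static List<String> lines(Map<String, String> params) {
        List<String> out = new ArrayList<>();
        out.add("beginDSInfo");
        for (Map.Entry<String, String> e : params.entrySet()) {
            String value = e.getValue();
            if (value.contains(";") || value.contains("\n") || value.contains("\r")) {
                throw new IllegalArgumentException("Unsupported character in " + e.getKey());
            }
            // Trailing "true" copied from the sample; its meaning is not covered in this post
            out.add(e.getKey() + ";" + value + ";true");
        }
        out.add("endDSInfo");
        return out;
    }

    public static void main(String[] args) {
        char[] password = {'s', 'e', 'c', 'r', 'e', 't'}; // illustrative value
        Map<String, String> params = new LinkedHashMap<>();
        params.put("lumongo_host", "localhost");
        params.put("lumongo_port", "27017");
        // new String(char[]) yields the characters; password.toString() would
        // yield an identity string like "[C@14b746c8"
        params.put("lumongo_pass", new String(password));
        params.put("lumongo_collection", "webpage");
        params.put("lumongo_dbname", "MongoWebpageDB");
        for (String line : lines(params)) {
            System.out.println(line);
        }
    }
}
```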

Connecting to MongoDB & MapReduce:

Connectivity to MongoDB is achieved using the MongoDB Java driver. Words from the database are mapped and their counts aggregated using the wc_map and wc_reduce JavaScript files, and the mapreduce command is fired against the webpage collection. The result set of the mapreduce command is then looped through and each result is written to stdout (System.out.println). Here is the mapreduce code snippet from QueryAndStreamToLumira.java:

<code>

import com.mongodb.DBCollection;
import com.mongodb.DBObject;
import com.mongodb.MapReduceCommand;
import com.mongodb.MapReduceOutput;

// Method from QueryAndStreamToLumira.java (legacy MongoDB Java driver 2.x API).
// m_db, m_Collection and readFile(...) belong to the enclosing class (not shown).
public void mapReduce() {
    // Notify Lumira that data streaming starts
    System.out.println("beginData");
    try {
        // Access the input collection
        DBCollection collection = m_db.getCollection(m_Collection);

        // Read the map and reduce JavaScript functions
        String map = readFile("wc_map.js");
        String reduce = readFile("wc_reduce.js");

        // Prepare the mapreduce command: no output collection, results returned
        // INLINE, no query filter (the whole collection is processed)
        MapReduceCommand cmd = new MapReduceCommand(collection, map, reduce,
                null, MapReduceCommand.OutputType.INLINE, null);

        // Fire the mapreduce command
        MapReduceOutput out = collection.mapReduce(cmd);

        // CSV header streamed to Lumira
        System.out.println("word,count");

        // Loop through the result set and stream each row to Lumira
        for (DBObject o : out.results()) {
            DBObject idObj = (DBObject) o.get("_id");
            String word = (String) idObj.get("word");
            DBObject valueObj = (DBObject) o.get("value");
            // Counts computed in JavaScript come back as numbers (typically Double)
            Number count = (Number) valueObj.get("count");
            System.out.println(word + "," + count.intValue());
        }
    } catch (Exception e) {
        // Send the error information to Lumira over stderr
        System.err.println(e.toString());
    }
    // Notify Lumira that data streaming has ended
    System.out.println("endData");
}

</code>
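The wc_map.js and wc_reduce.js files are not reproduced in this post. To illustrate what a word-count map/reduce does, here is an in-memory, plain-Java sketch of the same pattern; it does not use MongoDB, and `WordCountMapReduce` with its whitespace tokenization is a hypothetical stand-in for the JavaScript functions. Map emits `(word, 1)` for every word, the emitted values are grouped by word, and reduce sums each group. In MongoDB, reduce may be called repeatedly on partial results, so a real reduce function must return a value of the same shape as the values map emits.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory illustration of word-count map/reduce. ASSUMPTION: words are
// whitespace-separated; this is not the content of wc_map.js / wc_reduce.js.
class WordCountMapReduce {

    // Map phase: emit (word, 1) for every word in one document
    static List<Map.Entry<String, Integer>> map(String document) {
        List<Map.Entry<String, Integer>> emitted = new ArrayList<>();
        for (String word : document.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                emitted.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return emitted;
    }

    // Reduce phase: combine all values emitted for one key into a single count
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Run map over every document, group emitted values by key, then reduce each group
    static Map<String, Integer> run(List<String> documents) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String doc : documents) {
            for (Map.Entry<String, Integer> kv : map(doc)) {
                grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            result.put(e.getKey(), reduce(e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = run(Arrays.asList("flight search ocean", "ocean search", "search"));
        System.out.println("word,count");
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            System.out.println(e.getKey() + "," + e.getValue());
        }
    }
}
```

Running `main` prints the header `word,count` followed by `flight,1`, `ocean,2` and `search,3`.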

CSV format:

As you may have noticed in the code above, the data is streamed to Lumira in CSV format. This is important; otherwise Lumira will not be able to understand your data. First, signal to Lumira that data streaming is starting by printing “beginData” to stdout. Next, send your comma-separated header names, and only after this start streaming the comma-separated tabular data. Once you have sent your whole result, notify Lumira that data streaming has ended by printing “endData”.
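The streaming order (beginData, header, rows, endData) can be wrapped in a small helper. `LumiraCsvStreamer` is a hypothetical sketch, not code from the article. It also quotes fields following RFC 4180, because the snippet above writes `word + "," + count` unquoted, and a word containing a comma would shift the columns; whether Lumira's CSV reader honours quoted fields is an assumption.

```java
import java.io.PrintStream;
import java.util.Arrays;
import java.util.List;

// Hypothetical streaming helper. ASSUMPTION: Lumira accepts RFC 4180 quoting.
class LumiraCsvStreamer {

    // Quote a field containing a comma, double quote, CR or LF; double embedded quotes
    static String escape(String field) {
        if (field.contains(",") || field.contains("\"") || field.contains("\n") || field.contains("\r")) {
            return "\"" + field.replace("\"", "\"\"") + "\"";
        }
        return field;
    }

    // Join escaped fields with commas
    static String row(List<String> fields) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < fields.size(); i++) {
            if (i > 0) {
                sb.append(',');
            }
            sb.append(escape(fields.get(i)));
        }
        return sb.toString();
    }

    // beginData, header row, data rows, endData, in that order
    static void stream(PrintStream out, List<String> header, List<List<String>> rows) {
        out.println("beginData");
        out.println(row(header));
        for (List<String> r : rows) {
            out.println(row(r));
        }
        out.println("endData");
        out.flush();
    }

    public static void main(String[] args) {
        stream(System.out, Arrays.asList("word", "count"),
                Arrays.asList(Arrays.asList("ocean", "2"), Arrays.asList("a,b", "1")));
    }
}
```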

Once the data is streamed to Lumira, you should be able to preview it, as shown in the picture below.

After finishing the acquisition, go to the Visualize room and drag and drop the required dimension (word) and measure (count). Select Tag Cloud as the chart type.

That's it: you now have MongoDB data visualized in Lumira.

Source code: https://github.com/devicharan/saplumiramongodb
