- Proxy Third-Party Python Library Traffic - Using S...

04-24-2023

11:50 AM

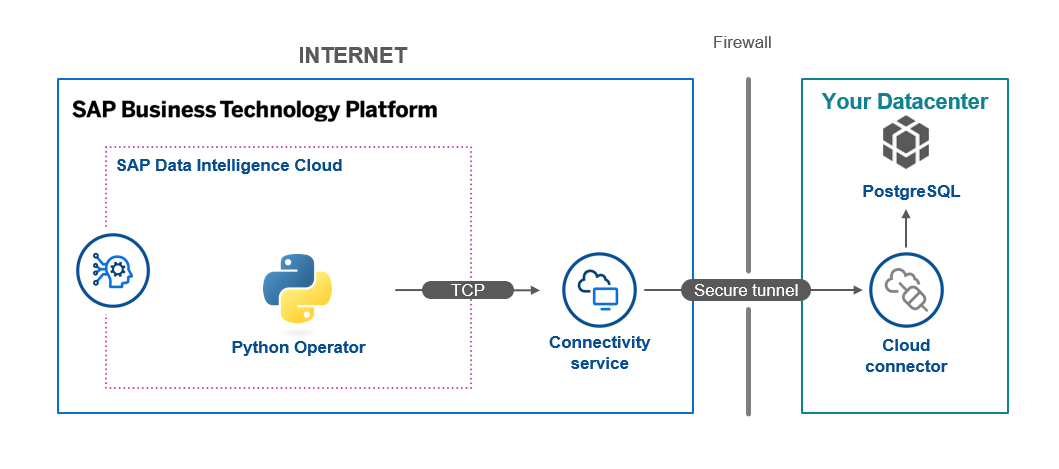

Custom Python operators are the most convenient way to develop non-standard integrations in SAP Data Intelligence, and the range of Python libraries available is virtually unlimited. In this blog post, I will demonstrate how to proxy the TCP traffic of third-party libraries through the SAP Cloud Connector, so that integrating on-premises systems becomes as effortless as integrating internet-accessible ones.

Please note that this blog post is a continuation of my previous post, "Proxy Third-Party Python Library Traffic - General", where I provided a more comprehensive explanation of the approach and libraries used.

Scenario Architecture

Scenario: In this example, I will show how to connect to a PostgreSQL database via the Cloud Connector. The database is running on my Windows machine, and the Cloud Connector is connected to that machine. This replicates a real-world on-premises setup.

Prerequisite:

Before proceeding with the steps outlined in this guide, it is essential to have an instance of the SAP Cloud Connector installed. While it is possible to install the cloud connector on a server, for the purposes of this demonstration, we will be using a Windows machine. We recommend following the instructions provided in this blog (https://blogs.sap.com/2021/09/05/installation-and-configuration-of-sap-cloud-connector/) to install and configure the cloud connector.

The second requirement is a BTP subaccount with a Data Intelligence cluster. We will connect the Cloud Connector to this subaccount.

1. Configuration:

The first step is to create a configuration in the Cloud Connector that connects to our subaccount and exposes the PostgreSQL host as a TCP resource.

Cloud Connector configuration

On the BTP end, we can check the cockpit and the connected cloud connectors in the respective menu tabs. If you cannot see this tab, you may be missing some roles. It is important to note that we see the LocationID “FELIXLAPTOP”, which is an identifier that distinguishes multiple cloud connectors connected to the same subaccount.

Registered Cloud Connector Resources

2. Creating a Data Intelligence Connection:

For our purposes, we do not want to hard-code the connection details, because we need the Connection Management to give us access to the Connectivity Service of BTP. In the Connection Management application of SAP Data Intelligence, we can create connections of all types. We create a connection of type HTTP with a host, a port, and SAP Cloud Connector as the gateway.

There, we specify the internal host of the Cloud Connector resource, its port, and the Postgres username and password.

Connection with Cloud Connector Gateway

Note: Not all connection types allow you to access via the Cloud Connector. See the official product documentation for details.
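Inside the operator, the connection arrives as a nested dictionary. The following is a minimal sketch of pulling out the four fields that the socket factory later in this post reads. The key names are taken from that factory; `extract_proxy_params` is a hypothetical helper, and the values in `sample_connection` are made-up placeholders rather than real output from Data Intelligence.

```python
def extract_proxy_params(connection):
    """Collect the fields needed to open a socket via the Cloud Connector.

    Raises ValueError with the missing key name if the dictionary does not
    have the expected shape.
    """
    try:
        props = connection["connectionProperties"]
        gateway = connection["gateway"]
        return {
            "dest_host": props["host"],          # virtual host of the resource
            "dest_port": int(props["port"]),     # accept "5432" as well as 5432
            "proxy_host": gateway["host"],       # connectivity service proxy
            "token": gateway["authentication"],  # auth token for the proxy
        }
    except KeyError as missing:
        raise ValueError(f"connection is missing key {missing}") from missing


# Hypothetical sample, shaped like the dictionary the socket factory expects.
sample_connection = {
    "connectionProperties": {"host": "virtualhost", "port": "5432"},
    "gateway": {"host": "connectivity-service-proxy", "authentication": "<token>"},
}

params = extract_proxy_params(sample_connection)
print(params["dest_host"], params["dest_port"])
```

Failing early with the name of the missing key can make a misconfigured connection easier to spot than a `KeyError` raised deep inside the proxy process.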

3. Developing a Custom Operator:

In the operators menu of Data Intelligence we create a new custom operator based on the Python3 operator.

We build a custom Dockerfile with the required libraries. We use psycopg2 to connect to Postgres, SocketSwap as a local proxy, and sapcloudconnectorpythonsocket to open a socket via the Cloud Connector.

```dockerfile
FROM $com.sap.sles.base

RUN python3 -m pip --no-cache-dir install 'psycopg2-binary' --user
RUN python3 -m pip --no-cache-dir install 'sapcloudconnectorpythonsocket' --user
RUN python3 -m pip --no-cache-dir install 'SocketSwap' --user
```

Now to the key part, the custom script:

```python
import psycopg2
from SocketSwap import SocketSwapContext
import multiprocessing
from operators.<your_operator>.socket_factory import socket_factory

# Needed because the DI Python operator is itself executed in threads
multiprocessing.set_start_method("spawn")

postgres_connection = api.config.http_connection
api.logger.info(str(postgres_connection))


def connect_postgres():
    """
    This function demos how to easily set up the local proxy using the SocketSwapContext manager.
    It exposes a local proxy on localhost (127.0.0.1), port 2222.
    The socket factory is provided to handle the creation of a socket to the remote target.
    """
    with SocketSwapContext(socket_factory, [postgres_connection], "127.0.0.1", 2222):
        api.logger.info("Proxy started!")

        # Set up a connection to the PostgreSQL database through the local proxy
        conn = psycopg2.connect(
            host="127.0.0.1",
            database="postgres",
            user="postgres",
            password="password",
            port=2222
        )
        api.logger.info("Postgres connected successfully!")

        # Create a cursor object to execute SQL queries
        cur = conn.cursor()

        # Execute a SELECT query that returns the current timestamp
        cur.execute("SELECT CURRENT_TIMESTAMP;")

        # Fetch all the rows returned by the query
        rows = cur.fetchall()

        # Write the rows to the operator log
        for row in rows:
            api.logger.info(str(row))

        # Close the cursor and connection
        cur.close()
        conn.close()


def gen():
    api.logger.info("Generator Start")
    connect_postgres()


api.add_generator(gen)
```

We also need a second file that holds the function we pass into the SocketSwap proxy. Note: This function is placed in a separate file, at the top level of that file, to make it pickleable.

```python
from sapcloudconnectorpythonsocket import CloudConnectorSocket


def socket_factory(postgres_connection):
    cc_socket = CloudConnectorSocket()
    cc_socket.connect(
        dest_host=postgres_connection["connectionProperties"]["host"],  # virtual host
        dest_port=postgres_connection["connectionProperties"]["port"],  # 5432
        proxy_host=postgres_connection["gateway"]["host"],  # connectivity-service-proxy
        proxy_port=20004,  # SOCKS5 proxy port of the connectivity service
        token=postgres_connection["gateway"]["authentication"],  # auth token
        location_id="FELIXLAPTOP"
    )
    return cc_socket
```

In the custom operator script, we just use a generator to start the connect_postgres function. Inside that function, we use the standard psycopg2 functions to run a small SQL query against the database. The only difference here: We wrap it in the SocketSwapContext to redirect its traffic through a CloudConnectorSocket.
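The note about pickleability can be illustrated with the standard library alone. The sketch below is not part of SocketSwap: `is_picklable` is a hypothetical helper, and `socket.create_connection` merely stands in for any function that can be imported from the top level of a module. It assumes, as the note suggests, that the factory has to be pickled so that it can be handed over to the proxy's background process.

```python
import pickle
import socket


def is_picklable(obj):
    """Return True if pickle can serialize obj, False otherwise."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, AttributeError, TypeError):
        return False


# A function defined at the top level of an importable module is pickled
# by reference (module name plus function name), so this works.
print(is_picklable(socket.create_connection))

# A lambda has no importable name, so pickle cannot look it up again.
print(is_picklable(lambda: socket.socket()))
```

This is why a factory defined inline, for example as a lambda or a nested function, would likely not survive the hand-over, whereas one that lives at the top level of its own file does.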

Note: The SocketSwapContext takes four main arguments: socket_factory (a callable that returns a connected socket), socket_factory_args (the list of arguments passed to that callable), the local proxy host, and the local proxy port. As we can see in the example, I pass the socket_factory function to the context manager. This function uses the CloudConnectorSocket introduced earlier to open a Python socket that uses the Cloud Connector as a proxy server. Check out my previous post about TCP and the SAP Cloud Connector to learn more about this.

The local proxy is set up automatically in a background daemon process and listens on the given host and port. Usually this will be localhost and a free port. In your third-party library, you can then connect to that proxy, and its traffic will be redirected automatically to the address specified as the destination in the Cloud Connector socket.
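To make the idea of a local proxy with a socket factory more tangible, here is a minimal sketch built only on the standard library. It is not SocketSwap's implementation: it uses threads instead of a background process, and all function names (`pipe`, `start_local_proxy`, `start_echo_server`, `demo_roundtrip`) are hypothetical. A local echo server stands in for the remote target, and `socket.create_connection` plays the role of the socket factory.

```python
import socket
import threading


def pipe(src, dst):
    """Copy bytes from src to dst until src closes, then half-close dst."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def start_local_proxy(socket_factory, factory_args, host="127.0.0.1", port=0):
    """Listen locally and forward each client to a socket from the factory."""
    listener = socket.create_server((host, port))

    def serve():
        while True:
            try:
                client, _ = listener.accept()
            except OSError:
                return  # listener was closed
            remote = socket_factory(*factory_args)
            # One thread per direction keeps the forwarding logic simple.
            threading.Thread(target=pipe, args=(client, remote), daemon=True).start()
            threading.Thread(target=pipe, args=(remote, client), daemon=True).start()

    threading.Thread(target=serve, daemon=True).start()
    return listener


def start_echo_server():
    """Stand-in for the remote system: echoes back whatever it receives."""
    server = socket.create_server(("127.0.0.1", 0))

    def serve():
        conn, _ = server.accept()
        with conn:
            while data := conn.recv(4096):
                conn.sendall(data)

    threading.Thread(target=serve, daemon=True).start()
    return server


def demo_roundtrip(payload=b"ping"):
    """Send payload through the proxy and return what comes back."""
    echo = start_echo_server()
    proxy = start_local_proxy(socket.create_connection, [echo.getsockname()])
    reply = b""
    with socket.create_connection(proxy.getsockname(), timeout=5) as client:
        client.sendall(payload)
        while len(reply) < len(payload):
            chunk = client.recv(4096)
            if not chunk:
                break
            reply += chunk
    proxy.close()
    echo.close()
    return reply


print(demo_roundtrip())
```

The client only ever talks to the local listener. Where the bytes actually end up is decided entirely by the factory, which is what allows a library such as psycopg2 to stay unaware of the Cloud Connector.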

4. Test the custom operator:

We can wrap the new operator in a Data Intelligence Graph and fill the configuration parameter with the POSTGRES_ONPREM_SYSTEM connection ID.

Custom Operator Parameters

In this example, we can check the logs and see the following:

```text
Generator Start
Proxy started!
Postgres connected successfully!
(datetime.datetime(2023, 4, 24, 10, 10, 52, 593842, tzinfo=datetime.timezone.utc),)
```

The proxy registered successfully and was able to redirect the traffic coming to localhost:2222 to the on-premises PostgreSQL. As a result of the query, we get the current_timestamp as a Python datetime object.

I hope this blog post gave you an idea of the possibilities you have for interacting with any system, even through the Cloud Connector. If you have any questions or scenarios for the SocketSwap package, feel free to leave a comment.

SAP Managed Tags: SAP Data Intelligence, Python, SAP Connectivity service, Cloud Connector
