- Migrating CDS projects from HANA Service or HANA 2...

Problem:

With CAP CDS (SAP Cloud Application Programming Model, Core Data Services) one would expect projects to work with HANA Cloud out of the box. Unfortunately that is not the case, but a small configuration switch fixes it. Originally, CAP CDS for HANA generated HDBCDS artifacts. At a time when HANA Cloud did not yet exist, HDBCDS was also seen as the future format for it. Today we know better: HANA Cloud does not support HDBCDS, which leads to compatibility issues. On HANA 2 and HANA Service this was not a problem, but customers now want to migrate to HANA Cloud.

Today, the Self-Service Migration helps you migrate the database content from the source systems to HANA Cloud. But you also want to migrate your development artifacts (the design-time objects) into the new world, so that you can continue and extend the work you started several years ago.

The Self-Service Migration assistant reports errors in HDI containers that use HDBCDS artifacts. If you try to migrate such containers to HANA Cloud you will run into problems, because HANA Cloud has no plug-in for HDBCDS. SAP publishes a full list of unsupported plug-ins.

Solutions:

You have to convert your CAP project from HDBCDS generation to HDBTABLE generation. This is "just" a CDS setting in the project. CDS is smart enough to generate the same outcome in the database in the end. The difference is that HDBCDS is more of a logical data model than a pure data model. For CAP applications, the resulting run-time objects in the database are equivalent.

Changes in .cdsrc.json (the Java-style solution)

    {
      "requires": {
        "db": {
          "kind": "hana"
        }
      },
      "hana": {
        "deploy-format": "hdbtable"
      }
    }

Alternatively:

Changes in package.json at project level (Node.js style)

    "cds": {
      "hana": {
        "deploy-format": "hdbtable"
      },
      "requires": {
        "db": {
          "kind": "hana"
        }
      }
    }

And the winner is? package.json is the stronger definition. In CAP CDS it does not really matter where you set the directive, but it has to be unambiguous. If package.json contains the setting, it overrides the other formats. My personal recommendation is to use package.json, so that you are always sure what you get. The CAP CDS generator will now produce the proper artifacts, which run in HANA Cloud as well.

Once you have switched the CAP generator from HDBCDS format to HDBTABLE format, one thing remains: clean up!
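The precedence described above can be illustrated with a small Node.js sketch. It is a simplified model for illustration only, not CAP's actual configuration resolution; the function name `effectiveDeployFormat` is hypothetical, and the inputs are assumed to be the already parsed contents of .cdsrc.json and package.json.

```javascript
// Simplified model of the precedence described in the text.
// This is NOT the real CAP resolution logic; names are hypothetical.
// cdsrc: parsed .cdsrc.json (or null), pkg: parsed package.json (or null).
function effectiveDeployFormat(cdsrc, pkg) {
  // In .cdsrc.json the settings sit at the top level.
  const fromCdsrc = cdsrc?.hana?.["deploy-format"];
  // In package.json the same settings sit under the "cds" key.
  const fromPackage = pkg?.cds?.hana?.["deploy-format"];
  // package.json wins when both files define the value.
  return fromPackage ?? fromCdsrc ?? null;
}

// Example: an old setting in .cdsrc.json, the new one in package.json.
const cdsrc = { hana: { "deploy-format": "hdbcds" } };
const pkg = { cds: { hana: { "deploy-format": "hdbtable" } } };
console.log(effectiveDeployFormat(cdsrc, pkg)); // prints "hdbtable"
```

A check like this can help spot a project in which two files disagree about the deploy format before the build runs.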

HDI deploys all artifacts into the database and typically calculates the difference between the previous and the new state.

Because you deployed the HDBCDS artifacts before, they are still in your design-time storage.

In Web IDE/BAS this is not a problem, because they automatically attach the option

--auto-undeploy

(see the NPMJS documentation and search for "Delta Deployment and Undeploy Allowlist")

to the deploy command. The xs/cf deploy, however, does not do that.

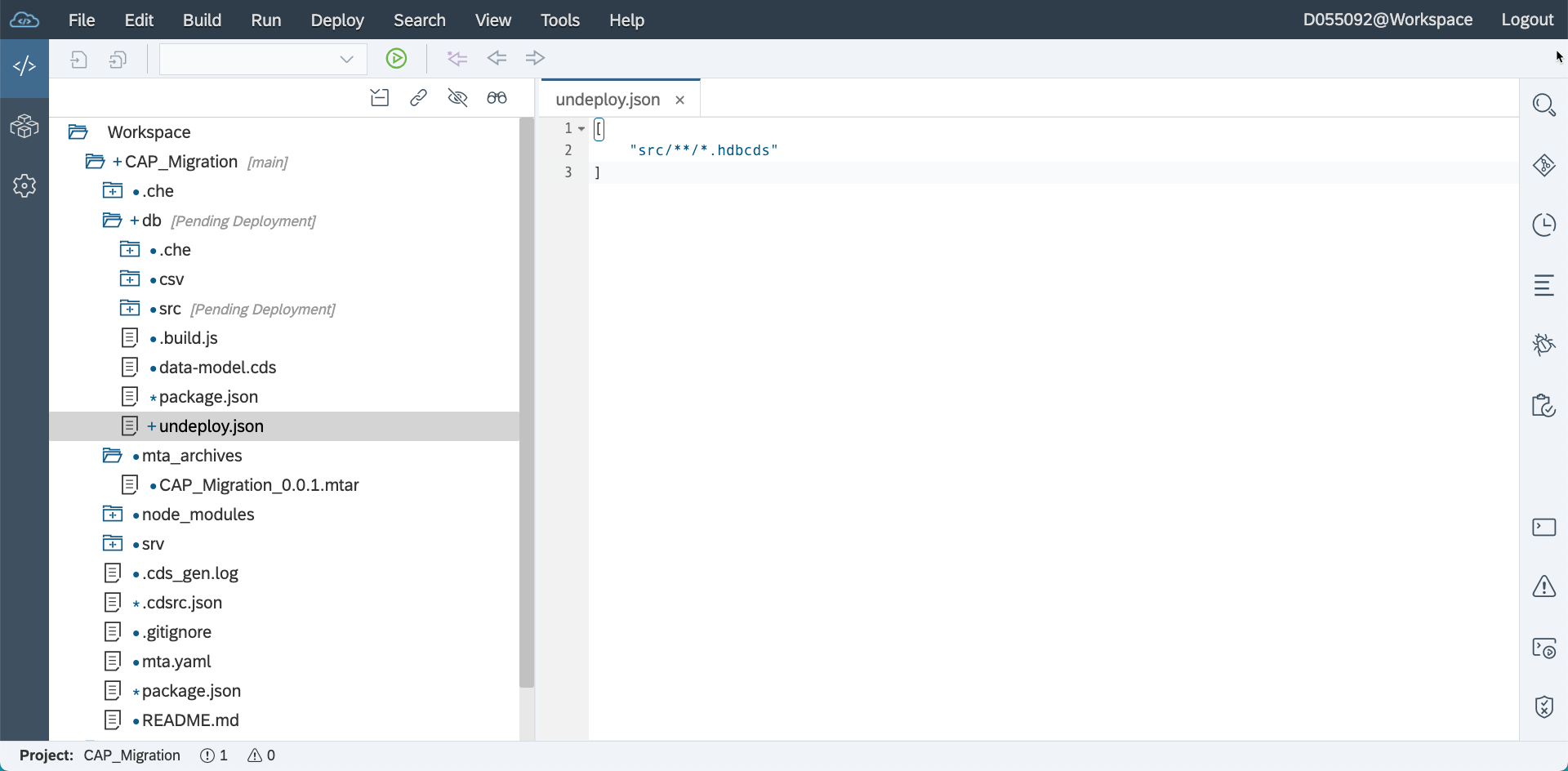

It is therefore strongly recommended to maintain an undeploy.json file:

    [ "src/**/*.hdbcds" ]

Why should you do this?

1.) You may get errors due to double definitions of objects (HDBCDS and HDBTABLE), even if you do not migrate.

2.) The Self-Service Migration will warn you in the pre-migration checker. Cleaning up eliminates these warnings and ensures a safe migration.
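For unattended deployments, a small guard can verify that the undeploy list actually covers the old artifacts before the deploy step runs. The following is a minimal sketch; the function name `checkUndeployList` and the messages are hypothetical, and it only checks for an entry ending in `.hdbcds` rather than evaluating the patterns the way HDI does.

```javascript
// Hypothetical pre-deploy guard for CI pipelines (illustrative only).
// undeployEntries: the parsed content of undeploy.json.
function checkUndeployList(undeployEntries) {
  if (!Array.isArray(undeployEntries)) {
    return { ok: false, reason: "undeploy.json must contain a JSON array" };
  }
  // Look for at least one entry that targets .hdbcds files.
  const coversHdbcds = undeployEntries.some(
    (entry) => typeof entry === "string" && entry.endsWith(".hdbcds")
  );
  return coversHdbcds
    ? { ok: true, reason: "" }
    : { ok: false, reason: "no .hdbcds entry found; old artifacts would stay deployed" };
}

// Example with the undeploy.json content shown in this post.
const result = checkUndeployList(JSON.parse('[ "src/**/*.hdbcds" ]'));
console.log(result.ok); // prints true
```

In a pipeline, the script would read undeploy.json from the database module and stop the job when `ok` is false.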

Summary:

Changing the CAP CDS generation directives gives you code that also runs in HANA Cloud.

Do not forget to clean up your code with the "undeploy.json" file. This prevents errors in unattended environments such as batch deployments, CTS+, or CI/CD pipelines.
