- SAP Integration Suite - Code your own Cloud Integr...

Technology Blogs by SAP

04-17-2023

3:12 PM

You may remember the cringey performance of Steve Ballmer yelling "Developers!" on stage.

Well, sometimes I would like to yell "APIs!" because they truly are at the heart of every cloud system and definitively deserve that shoutout. But I am not Steve, so I stay behind my keyboard and write blogs - lucky you.

You may have an SAP Cloud Integration in place and you would like to have a way to precisely analyse what is going on there in terms of usage. You can already do a lot with our internal monitoring or the predefined reports, but if you want to do more you have to rely on coding.

Hence this blog will provide an example of how to use the Cloud Integration APIs ("APIs! APIs! APIs!") in order to build your own report in Python.

Especially, the code I share with you will report on the information about the "Edit Status" of an iflow, if it is deployed and how often it has been used in the last month.

As a disclaimer: I am no developer and this is my first coding attempt in Python. So you may see optimization potential here - please refrain from blaming me publicly 🙂

1- Install all the necessary Python extensions in Visual Studio Code.

This is pretty easy and lets you simply run and debug your code.

2- Get acquainted with the details of the SAP Cloud Integration APIs ("APIs! APIs! APIs!"). They are pretty easy to use but you need to get your head around them a little.

Life hack: once you have found the REST API documentation in the SAP API Business Hub, you can add it to your favourites so you find it quickly later.

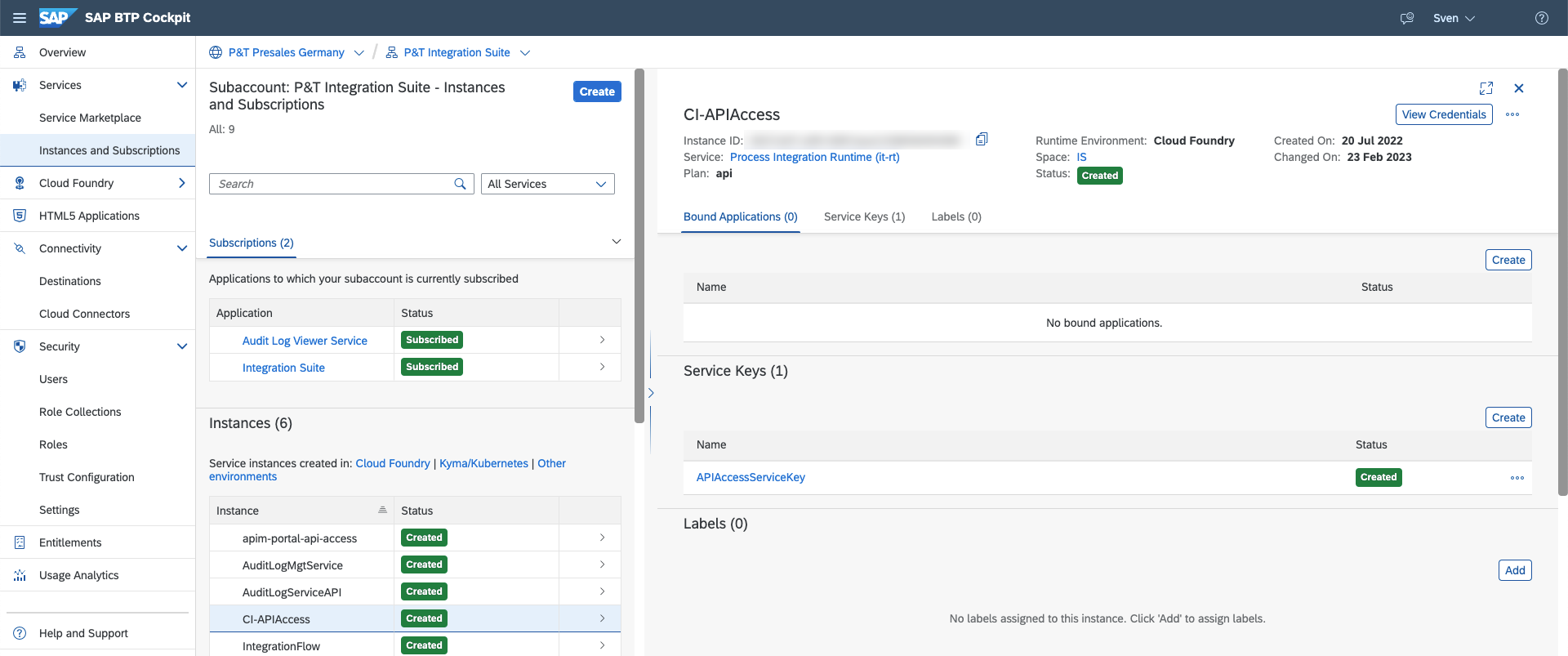

3- If you have not done it yet, create an API Service Key in your Integration Suite sub-account, using the plan "api".

The most interesting part of this blog is the actual code - that you can copy from below. Yes, it is quite long, but pretty easy to understand.

Still, I will quickly explain what I did there and how to use that code hereunder.

Basically, there are 2 methods in the code:

GetAccessToken: this method will access your Cloud Integration information through the Cloud Integration API. In order to do so, it needs the content of your API Service Key you created previously.

Hence, please copy the content of the sub-account service key into a file named "config.json" in the same folder as your python file.

analyzeIFlows:

This main method will loop through all integration packages of your sub-account and create an output file in the desired format (CSV or JSON). Set this at line 125.

Feel free to modify the code to suit your needs - that is is main idea of it!

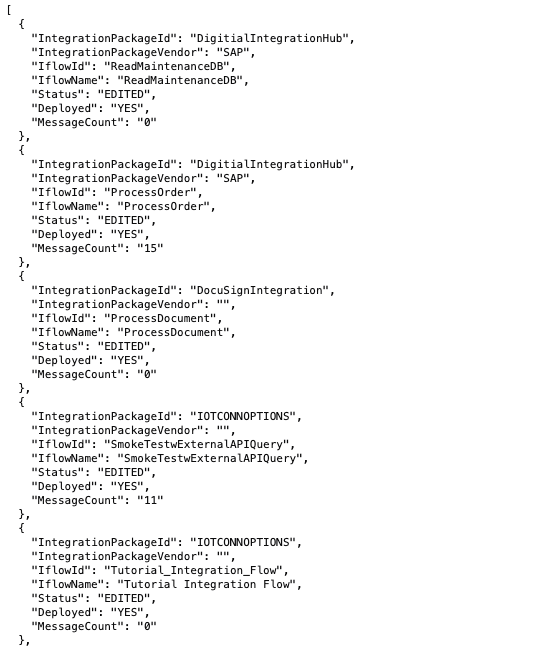

Once you run the code (it may take a while), it will generate either a CSV or a JSON file.

All the best, happy coding, and shoutout to APIs!

Well, sometimes I would like to yell "APIs!" because they truly are at the heart of every cloud system and definitely deserve that shoutout. But I am not Steve, so I stay behind my keyboard and write blogs - lucky you.

What is this blog about?

You may have an SAP Cloud Integration in place and you would like to have a way to precisely analyse what is going on there in terms of usage. You can already do a lot with our internal monitoring or the predefined reports, but if you want to do more you have to rely on coding.

Hence this blog will provide an example of how to use the Cloud Integration APIs ("APIs! APIs! APIs!") in order to build your own report in Python.

Specifically, the code I share with you reports the "Edit Status" of each iflow, whether it is deployed, and how many messages it processed in the last month.

As a disclaimer: I am no developer and this is my first coding attempt in Python. So you may see optimization potential here - please refrain from blaming me publicly 🙂

Get started

1- Install all the necessary Python extensions in Visual Studio Code.

This is pretty easy and lets you simply run and debug your code.

2- Get acquainted with the details of the SAP Cloud Integration APIs ("APIs! APIs! APIs!"). They are pretty easy to use but you need to get your head around them a little.

Life hack: once you have found the REST API documentation in the SAP API Business Hub, you can add it to your favourites so you find it quickly later.

3- If you have not done it yet, create an API Service Key in your Integration Suite sub-account, using the plan "api".

The code

The most interesting part of this blog is the actual code, which you can copy from below. Yes, it is quite long, but pretty easy to understand.

Still, after the code I will quickly explain what I did there and how to use it.

```python
import json
import os
from datetime import date, datetime, timedelta

import requests
from requests.auth import HTTPBasicAuth


def getAccessToken(apiEndpoint: str, clientId: str, clientSecret: str) -> str:
    # Request an OAuth token with the client credentials of the service key
    tokenEndpoint = apiEndpoint + "?grant_type=client_credentials"
    r = requests.get(url=tokenEndpoint, auth=HTTPBasicAuth(clientId, clientSecret))
    if r.status_code == 200:
        return r.json()["access_token"]
    # Stop here: without a token, none of the later requests can succeed
    raise RuntimeError("Error in generating access token: " + str(r.status_code))


def analyzeIFlows(url: str, accessToken: str, outputFileType: str):
    # Check if output is correctly set
    if outputFileType not in ("CSV", "JSON"):
        print("Please use 'CSV' or 'JSON' as outputFileType")
        return

    # The same headers are sent with every request
    headers = {
        "Authorization": "Bearer " + accessToken,
        "Content-Type": "application/json",
        "Accept": "application/json",
    }

    # Get all integration packages of the Cloud Integration tenant
    r = requests.get(url=url + "/api/v1/IntegrationPackages", headers=headers)
    if r.status_code != 200:
        print("Error in getting Integration Packages: " + str(r.status_code))
        return

    # First and last day of the previous month, formatted for the MPL query.
    # Going back from the 1st of the current month also works in January.
    lastDayOfLastMonth = date.today().replace(day=1) - timedelta(days=1)
    periodStart = lastDayOfLastMonth.replace(day=1).strftime("%Y-%m-%dT00:00:01")
    periodEnd = lastDayOfLastMonth.strftime("%Y-%m-%dT23:59:59")

    # CSV response string
    csvResponse = "Integration package ID;Integration package vendor;Iflow ID;IFlow Name;Status;Deployed;Messagecount (last month)\n"
    # JSON response array
    jsonResponseArray = []

    # Loop through all integration packages
    for integrationPackage in r.json()["d"]["results"]:
        packageId = integrationPackage["Id"]
        vendor = integrationPackage.get("Vendor") or ""  # guard against an empty vendor

        # Get the integration flows for each integration package
        r = requests.get(url=url + "/api/v1/IntegrationPackages('" + packageId + "')/IntegrationDesigntimeArtifacts", headers=headers)
        if r.status_code != 200:
            print("Error in getting iflows of package " + packageId + ": " + str(r.status_code))
            continue

        # Loop through all integration flows
        for integrationFlow in r.json()["d"]["results"]:
            iFlowId = integrationFlow["Id"]

            # For every iflow, check if it is deployed or not
            r = requests.get(url=url + "/api/v1/IntegrationRuntimeArtifacts('" + iFlowId + "')", headers=headers)
            iFlowDeployed = "YES" if r.status_code == 200 else "NO"

            # For every iflow, get the number of messages from the MPL
            messageCount = "N/A"  # kept if the MPL query fails
            r = requests.get(url=url + "/api/v1/MessageProcessingLogs?$inlinecount=allpages&$filter=LogEnd ge datetime'" + periodStart + "' and LogStart le datetime'" + periodEnd + "' and IntegrationFlowName eq '" + iFlowId + "'", headers=headers)
            if r.status_code == 200:
                messageCount = str(r.json()["d"]["__count"])

            # Check if creation date equals modification date
            if abs(int(integrationFlow["CreatedAt"]) - int(integrationFlow["ModifiedAt"])) <= 1:
                status = "NOT EDITED"
            else:
                status = "EDITED"

            # Add the details of the iflow to both output formats
            csvResponse += ";".join([packageId, vendor, iFlowId, integrationFlow["Name"], status, iFlowDeployed, messageCount]) + "\n"
            jsonResponseArray.append({
                "IntegrationPackageId": packageId,
                "IntegrationPackageVendor": vendor,
                "IflowId": iFlowId,
                "IflowName": integrationFlow["Name"],
                "Status": status,
                "Deployed": iFlowDeployed,
                "MessageCount": messageCount,
            })

    # Write the data to file
    fileName = "CIAnalysisResults " + datetime.now().strftime("%m-%d-%Y %H-%M-%S") + "." + outputFileType
    with open(fileName, "w") as f:
        f.write(csvResponse if outputFileType == "CSV" else json.dumps(jsonResponseArray))


# Read the service key from config.json, located next to this script
configFilePath = os.path.join(os.path.dirname(os.path.abspath(__file__)), "config.json")
with open(configFilePath, "r") as configFile:
    configObj = json.load(configFile)

# Get Access Token
accessToken = getAccessToken(
    configObj["oauth"]["tokenurl"], configObj["oauth"]["clientid"], configObj["oauth"]["clientsecret"])

# Set the output mode (CSV or JSON)
outputFileType = "CSV"

# Run the analysis
analyzeIFlows(configObj["oauth"]["url"], accessToken, outputFileType)
```

Basically, there are 2 methods in the code:

- getAccessToken: this method simply connects to the BTP sub-account and retrieves an OAuth token to be used in the later requests.

- analyzeIFlows: this is the main method which connects to the API of your sub-account in order to retrieve the necessary information.

getAccessToken: to obtain the token, this method needs the content of the API Service Key you created previously (token URL, client ID and client secret).

Hence, please copy the content of the sub-account service key into a file named "config.json" in the same folder as your python file.
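For orientation, the script reads four values from the "oauth" section of config.json: tokenurl, clientid, clientsecret and url. Here is a minimal sketch of a sanity check you could run before calling the API. The helper name missing_service_key_fields is hypothetical, and the sample values are placeholders rather than real endpoints.

```python
import json

# Fields the script reads from the "oauth" section of the service key
REQUIRED_OAUTH_FIELDS = ("tokenurl", "clientid", "clientsecret", "url")


def missing_service_key_fields(config: dict) -> list:
    """Return the names of required oauth fields that are absent or empty."""
    oauth = config.get("oauth") or {}
    return [field for field in REQUIRED_OAUTH_FIELDS if not oauth.get(field)]


# Placeholder service key content (hypothetical values, for illustration only)
sample_config = json.loads("""
{
  "oauth": {
    "clientid": "my-client-id",
    "clientsecret": "my-client-secret",
    "tokenurl": "https://example.invalid/oauth/token",
    "url": "https://example.invalid"
  }
}
""")

print(missing_service_key_fields(sample_config))  # prints []
```

An empty list means all four values are present. Anything else tells you which entries to look for in your service key.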

analyzeIFlows:

This main method will loop through all integration packages of your sub-account and create an output file in the desired format (CSV or JSON). Set the format with the outputFileType variable near the end of the script.

For each integration package, the code will retrieve the list of integration flows.

For each integration flow, the code will retrieve the number of messages for the past month.
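The "past month" window can be computed on its own, which makes it easier to test. The sketch below is one possible way to do it: it steps back from the first day of the current month, so it also works in January. The function names previous_month_range and mpl_filter are hypothetical; the filter fields (LogEnd, LogStart, IntegrationFlowName) are the ones the script uses.

```python
from datetime import date, timedelta


def previous_month_range(today: date) -> tuple:
    """Return (first_day, last_day) of the month before `today`."""
    last_day = today.replace(day=1) - timedelta(days=1)
    return last_day.replace(day=1), last_day


def mpl_filter(iflow_id: str, today: date) -> str:
    """Build the $filter expression for one iflow and the previous month."""
    first_day, last_day = previous_month_range(today)
    start = first_day.strftime("%Y-%m-%dT00:00:01")
    end = last_day.strftime("%Y-%m-%dT23:59:59")
    return (
        f"LogEnd ge datetime'{start}' "
        f"and LogStart le datetime'{end}' "
        f"and IntegrationFlowName eq '{iflow_id}'"
    )


print(previous_month_range(date(2023, 1, 15)))
print(mpl_filter("MyIflow", date(2023, 4, 17)))
```

For 15 January 2023 the range is 1 to 31 December 2022, which is the case a plain "month minus one" calculation cannot handle.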

Also, for each integration flow, the code will check if the iflow was edited.

Here, I am using the "ModifiedAt" and the "CreatedAt" information. If they were created and modified at almost the same time (give or take a second), I consider that they have not been modified. I have not found any reliable information telling precisely if an iflow has been modified, so this is a small workaround.
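The workaround boils down to a small comparison, sketched here as a stand-alone function. The name edit_status and the tolerance parameter are hypothetical. The sketch assumes, as the script does, that both timestamps arrive as numeric strings in the same unit. Which unit that is (seconds or milliseconds) is worth checking against your own tenant, because the tolerance is expressed in that same unit.

```python
def edit_status(created_at: str, modified_at: str, tolerance: int = 1) -> str:
    """Classify an iflow as edited or not from its two timestamps.

    Both values are expected as numeric strings in the same unit;
    `tolerance` is expressed in that unit as well.
    """
    if abs(int(created_at) - int(modified_at)) <= tolerance:
        return "NOT EDITED"
    return "EDITED"


# Hypothetical timestamp values, for illustration only
print(edit_status("1681740000000", "1681740000001"))  # NOT EDITED
print(edit_status("1681740000000", "1681826400000"))  # EDITED
```

Keeping the tolerance as a parameter lets you widen it if your tenant turns out to write the two timestamps slightly further apart.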

Feel free to modify the code to suit your needs - that is the main idea of it!

Run it!

Once you run the code (it may take a while), it will generate either a CSV or a JSON file.
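Once the file exists, you can analyse it further. As a small, hedged example, this sketch lists all iflows that are not deployed. It assumes the semicolon-separated CSV layout and the header line written by the script; the function name undeployed_iflows and the sample rows are made up for illustration.

```python
import csv
import io


def undeployed_iflows(csv_text: str) -> list:
    """Return the IDs of all iflows whose 'Deployed' column is 'NO'."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=";")
    return [row["Iflow ID"] for row in reader if row["Deployed"] == "NO"]


# Hypothetical report content using the header line the script writes
sample_report = (
    "Integration package ID;Integration package vendor;Iflow ID;IFlow Name;"
    "Status;Deployed;Messagecount (last month)\n"
    "PackageA;SAP;FlowOne;Flow One;EDITED;YES;42\n"
    "PackageA;SAP;FlowTwo;Flow Two;NOT EDITED;NO;0\n"
)

print(undeployed_iflows(sample_report))  # ['FlowTwo']
```

To use it on a real report, read the generated file into a string and pass that string to the function.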

All the best, happy coding, and shoutout to APIs!

- SAP Managed Tags:

- SAP Integration Suite,

- Cloud Integration,

- SAP Business Technology Platform
