Handle High Frequency Inbound Processing into SAP ...

Technology Blogs by SAP


03-07-2023

11:32 PM

Background Information

With the migration to SAP BW/4HANA, source systems of type “Web Service” are no longer available. Instead, DataStore Objects offer a new capability, the “Write Interface”, which allows data to be pushed into the inbound queue tables of Staging DataStore Objects and Standard DataStore Objects. It replaces the push capability of the PSA tables of DataSources in Web Service source systems. See SAP Note 2441826 - BW4SL & BWbridgeSL - Web Service Source Systems.

There are two loading scenarios supported via the Write Interface:

- For every call, a new internal request is opened ("One-step"), i.e., BW opens and closes an RSPM Request internally around the transferred records.

- The external system administrates the RSPM Request explicitly and sends Data Packages into this Request ("Write into Request"), i.e., the external system opens the RSPM Request, sends data n times (with information on request and package), and closes it.

For systems where new data arrives at a low frequency, you can use the “One-step” procedure. Each time a set of new data records arrives, it is sent to the BW system and an RSPM request is generated.

For systems where new data arrives at a high frequency (with low volume), you can use the “Write into Request” procedure: first open an RSPM request, then send each new batch of data with the corresponding request and package information as it arrives, and close the request at the end.

“Write into Request” requires the external system to administrate the RSPM request and to know how to package the data records. However, you may face high-frequency, low-volume data from an external system that cannot handle RSPM requests and therefore has to use “One-step” sending, where each call creates a new RSPM request. This results in a large number of RSPM requests being generated in a short period. Note that, in general, a large number of RSPM requests (several thousand requests in the same DataStore Object) requires the execution of RSPM housekeeping to avoid further performance issues.

To avoid this performance limitation caused by the many RSPM requests that “One-step” sending generates, you may consider writing the data into an alternative object that serves as temporary storage, and loading the needed data from this object via a DataSource.

ODP CDS DataSource based on ABAP CDS View

In this approach, we create an ABAP CDS view and first accept the high-frequency incoming data in its underlying table. We then generate an ODP CDS DataSource based on this ABAP CDS view and load data from the DataSource at a custom frequency. This way, you avoid generating too many RSPM requests for the target ADSO in a short period. In addition, the process chain that loads data from the ABAP CDS view can run at a frequency that matches the business requirements, for example, hourly.

The ABAP CDS view and the DataSource act like an extra inbound table (without request generation) for this ADSO. Instead of writing data directly into the target ADSO, the system first writes the data to the underlying table of the ABAP CDS view, and the BW system then loads it from the ODP that represents the ABAP CDS view.

Basically, the procedure looks like this:

1. Create a database table. All fields (except technical fields such as REQTSN, DATAPAKID, RECORD, and RECORDMODE) are copied from the inbound table of the target ADSO. In addition, we add two fields: one as the key field and one for the delta mechanism. In the example below, RECORD_ID is used as the key field and DELTA_TIMESTAMP is used for the delta mechanism.

2. Create an ABAP CDS view. This step does not take much effort either. With a CDS view, you can easily configure the delta mechanism, as well as any other custom settings you need, through annotations. The example uses the generic delta extraction annotation Analytics.dataExtraction.delta.byElement.name.

After that, all other steps are much easier. Since we use exactly the same fields as in the target ADSO, the fields of the DataSource are detected automatically from the ABAP CDS view, and the mappings in the transformation between the DataSource and the target ADSO are generated automatically as direct assignments.

3. Create an ODP CDS DataSource. Since data extraction is already enabled in the ABAP CDS view, the view is automatically released (exposed) for ODP usage, so you can easily create an ODP CDS DataSource based on it.

4. Create a transformation and a DTP between the DataSource and the target ADSO. As mentioned before, the semantic fields of the ABAP CDS view are the same as the fields of the target ADSO inbound table, so the DataSource derived from the view has the same semantic fields as the target ADSO. As a result, the system maps them automatically in the transformation.

This completes the data flow, and you can use it in your process chain.

In the example, the target ADSO is named ADSO_0004 and has the following key fields and non-key fields.

1. Enable Writing to Table and ABAP CDS View

1.1 Create a DDIC Structure with the Semantic Fields of the ADSO

These fields can be found in the inbound table /BIC/AADSO_00041 of DataStore object ADSO_0004. Ignore the technical key fields (REQTSN, DATAPAKID, and RECORD) as well as the record mode field RECORDMODE, and copy the (modeled) semantic fields into a structure created in transaction SE11.

The field list of the inbound queue of the ADSO can be copied over, as shown below:

1.2 Create a Database Table as Message Queue

You can create a database table that includes the DDIC structure from 1.1. For data processing, add a custom technical key field that defines the order and a delta timestamp field, as shown below:

- The field RECORD_ID is a unique record identifier that also defines the order across all records.

- The field DELTA_TIMESTAMP is used later to enable a generic delta.

- The .INCLUDE contains the semantic fields from the structure created in 1.1.
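Why does RECORD_ID define the order? In the function module of 1.3 it is built from fixed-width digit strings (the 14-digit UTC timestamp of the call's TSN, the next 6 TSN digits, and a zero-padded 6-digit record counter), so a plain character comparison of two IDs matches their arrival order. The stand-alone report below is a sketch that illustrates this; the report name and the two 23-digit sample TSNs are hypothetical values that only mimic that layout, not real TSNs.

```abap
REPORT z_record_id_order_demo.

" Hypothetical sample TSNs of two consecutive calls. Only the layout is mimicked:
" 14-digit UTC timestamp followed by further digits (not real TSN values).
DATA: l_tsn_call_1 TYPE c LENGTH 23 VALUE '20230307233200000001000',
      l_tsn_call_2 TYPE c LENGTH 23 VALUE '20230307233200000002000',
      l_record     TYPE n LENGTH 6,
      l_id_1a      TYPE c LENGTH 26,
      l_id_1b      TYPE c LENGTH 26,
      l_id_2a      TYPE c LENGTH 26.

" First call: records 1 and 2, composed as in the function module of 1.3.
l_record = 1.
l_id_1a = |{ l_tsn_call_1(14) }{ l_tsn_call_1+14(6) }{ l_record(6) }|.
l_record = 2.
l_id_1b = |{ l_tsn_call_1(14) }{ l_tsn_call_1+14(6) }{ l_record(6) }|.

" Second, later call: the record counter starts at 000001 again.
l_record = 1.
l_id_2a = |{ l_tsn_call_2(14) }{ l_tsn_call_2+14(6) }{ l_record(6) }|.

" All parts are fixed-width and zero-padded, so character order = arrival order.
WRITE: / l_id_1a, / l_id_1b, / l_id_2a.
```

Because the counter restarts with every call, it is the TSN part (not the counter) that orders records across calls; the counter only orders records within one call.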

1.3 Create an RFC Function Module to Receive and Store the Pushed Message

Whenever new data arrives, at whatever frequency, you can use code like the example below to write the new data records, generating a unique value for the custom key field RECORD_ID and a value for the delta field DELTA_TIMESTAMP (the same for all records of one call).

The data is received in the structure of the semantic fields modeled in 1.1 and then moved to the database table format. The order of the incoming data is preserved in the field RECORD_ID.

Note that parallel calls of this function module can receive data in parallel. However, the order of two such calls cannot be predicted: one data chunk is inserted with a “lower” RECORD_ID and the other with a “higher” one, but which call gets which is not determined in advance.
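On the sender side, the function module is called via RFC with the records in the structure from 1.1, and the classic exception FAILED has to be evaluated. The following is a minimal sketch for an ABAP-based sender; the report name and the RFC destination BW4_PUSH are hypothetical, and it assumes the structure ZOT_ADSO_0004 is also known in the sender system.

```abap
REPORT z_push_adso_0004_sender.

" Minimal sender-side sketch. BW4_PUSH is a hypothetical RFC destination;
" ZOT_ADSO_0004 is the structure from 1.1 and must exist in the sender system too.
DATA: lt_data TYPE STANDARD TABLE OF zot_adso_0004,
      l_msg   TYPE c LENGTH 255.

" ... fill lt_data with the new records in the order in which they occurred ...

CALL FUNCTION 'ZPUSH_ADSO_0004'
  DESTINATION 'BW4_PUSH'
  TABLES
    it_data               = lt_data
  EXCEPTIONS
    failed                = 1
    system_failure        = 2 MESSAGE l_msg
    communication_failure = 3 MESSAGE l_msg
    OTHERS                = 4.
DATA(l_rc) = sy-subrc.

CASE l_rc.
  WHEN 0.
    " The records are stored in the message queue table in BW.
  WHEN 1.
    " FAILED: the BW system rejected the data (e.g. a conversion error).
    " Show the message raised together with FAILED; correct the data and resend.
    MESSAGE ID sy-msgid TYPE 'I' NUMBER sy-msgno
            WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4.
  WHEN OTHERS.
    " Technical RFC problem: report it and decide whether to retry the call.
    MESSAGE l_msg TYPE 'I'.
ENDCASE.
```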

Code Example

- A “TSN” (transaction sequence number) is used to define the order of the messages.

- The variable l_record is used to keep the order within the records sent in a function call.

- Invalid conversions are detected during MOVE-CORRESPONDING:

  - If an exception is raised, it has to be recognized and handled on the sender side (as for the push DataStore Object).

  - The data has to be sent again; in case of a persistent error, the data type etc. needs to be corrected first.

- The non-key field DELTA_TIMESTAMP is filled “redundantly” to enable the generic delta pointer.

```abap
FUNCTION zpush_adso_0004.
*"----------------------------------------------------------------------
*"*"Local Interface:
*"  TABLES
*"      IT_DATA STRUCTURE  ZOT_ADSO_0004   (structure from 1.1)
*"  EXCEPTIONS
*"      FAILED
*"----------------------------------------------------------------------

  " Nothing to store for an empty call.
  CHECK NOT it_data[] IS INITIAL.

  DATA: lt_dbdata TYPE STANDARD TABLE OF zadso_0004, " DB table from 1.2
        l_record  TYPE n LENGTH 6,                  " position within this call
        l_timest  TYPE rstimestmp.                  " value for DELTA_TIMESTAMP

  " One TSN per call defines the order of the calls (messages).
  DATA(l_tsn) = cl_rspm_tsn=>tsn_odq_to_bw( cl_odq_tsn=>get_tsn( ) ).
  l_timest = l_tsn(14).                             " UTC timestamp short

  TRY.
*     Convert to DB structure
      LOOP AT it_data ASSIGNING FIELD-SYMBOL(<ls_data>).
        l_record = l_record + 1.
        APPEND INITIAL LINE TO lt_dbdata ASSIGNING FIELD-SYMBOL(<ls_dbdata>).
        " Conversion errors raise an exception that is handled below.
        MOVE-CORRESPONDING <ls_data> TO <ls_dbdata>.
        " RECORD_ID = TSN timestamp (14) + next 6 TSN digits + record counter (6)
        <ls_dbdata>-record_id       = |{ l_tsn(14) }{ l_tsn+14(6) }{ l_record(6) }|.
        <ls_dbdata>-delta_timestamp = l_timest.
      ENDLOOP.

*     Insert message records to DB
      INSERT zadso_0004 FROM TABLE lt_dbdata.
      CALL FUNCTION 'DB_COMMIT'.

    CATCH cx_root INTO DATA(lrx_root).
      " Return the deepest exception text to the sender as message of FAILED.
      CALL FUNCTION 'RS_EXCEPTION_TO_SYMSG'
        EXPORTING
          i_r_exception = lrx_root
          i_deepest     = 'X'.
      MESSAGE ID sy-msgid TYPE sy-msgty NUMBER sy-msgno
              WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4
              RAISING failed.
  ENDTRY.

ENDFUNCTION.
```

2. Processing the Data Within BW Related Objects

2.1 Create an ABAP CDS View with Generic Delta Definition on the Message Queue

You can use Analytics annotations to enable data extraction and the delta mechanism in ABAP CDS views. For detailed information, see Analytics Annotations | SAP Help Portal.

In our example, we use Analytics.dataExtraction.enabled to mark the view as suitable for data replication and Analytics.dataExtraction.delta.byElement.name to enable generic delta extraction. See the example code below:

```
@AbapCatalog.sqlViewName: 'ZADSO_0004_DS'
@AbapCatalog.compiler.compareFilter: true
@AbapCatalog.preserveKey: true
@AccessControl.authorizationCheck: #NOT_REQUIRED
@EndUserText.label: 'adso 4'
@Analytics:{ dataCategory: #FACT,
             dataExtraction: { enabled: true,
                               delta.byElement: { name: 'DeltaTimestamp',
                                                  maxDelayInSeconds : 60
                                                }
                             }
           }
define view ZADSO_0004_CDS
  as select from zadso_0004
{
  key record_id       as RecordId,
      @Semantics.systemDateTime.lastChangedAt: true
      delta_timestamp as DeltaTimestamp,
      carrid          as Carrid,
      connid          as Connid,
      fldate          as Fldate,
      bookid          as Bookid,
      customid        as Customid,
      custtype        as Custtype,
      smoker          as Smoker,
      luggweight      as Luggweight,
      wunit           as Wunit,
      invoice         as Invoice,
      class           as Class,
      forcuram        as Forcuram,
      forcurkey       as Forcurkey,
      loccuram        as Loccuram,
      loccurkey       as Loccurkey,
      order_date      as OrderDate,
      counter         as Counter,
      agencynum       as Agencynum,
      cancelled       as Cancelled,
      reserved        as Reserved,
      passname        as Passname,
      passform        as Passform,
      passbirth       as Passbirth
}
```

2.2 Create DataSource Based on the ABAP CDS View

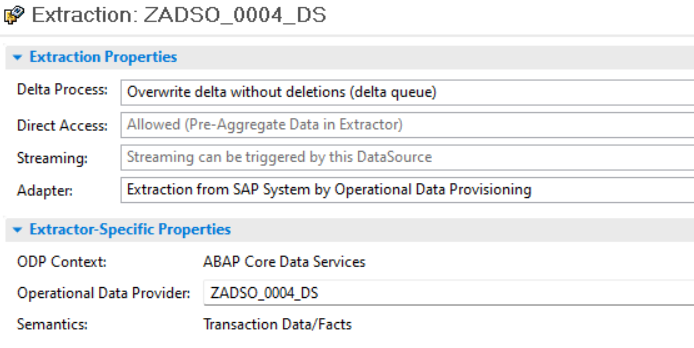

Since the ABAP CDS view is enabled for data extraction, it is automatically released (exposed) for ODP. In the ODP source system with context ABAP_CDS that points to the same BW/4HANA system, you can create a new DataSource and select the corresponding operational data provider.

After creation, you can see in the extraction properties that the DataSource is already delta-enabled.
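Since the CDS view is just a read on the message queue table, it can also be queried with ABAP SQL, for example to check which records are stored in the queue before the first DTP run. Note that delta extraction does not remove records from the table; that is the job of the cleanup program described later. A minimal sketch (the report name is hypothetical) that lists the ten oldest entries in RecordId order:

```abap
REPORT z_adso_0004_queue_peek.

" Minimal sketch: read the ten oldest records in the message queue through the
" CDS view from 2.1. RECORDID reflects the arrival order (see 1.2 and 1.3).
SELECT FROM zadso_0004_cds
  FIELDS recordid, deltatimestamp, carrid, connid, fldate, bookid
  ORDER BY recordid
  INTO TABLE @DATA(lt_queue)
  UP TO 10 ROWS.

LOOP AT lt_queue INTO DATA(ls_queue).
  WRITE: / ls_queue-recordid, ls_queue-deltatimestamp, ls_queue-carrid,
           ls_queue-connid, ls_queue-fldate, ls_queue-bookid.
ENDLOOP.
```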

2.3 Create DTP/Transformation

Then you can create a transformation and a DTP to load data from the DataSource to the target ADSO.

Note that there is no need to map the technical fields RECORDID (RECORD_ID in the table) and DELTATIMESTAMP. However, to enforce the correct order, a start routine is needed in the transformation:

```abap
SORT source_package BY recordid ASCENDING.
```

Use this together with an extraction grouped by the key fields of the active table of the ADSO (in the DTP), so that all records with the same key are processed in the same data package and in arrival order.

All other fields are mapped by direct assignment, since they are the same fields.

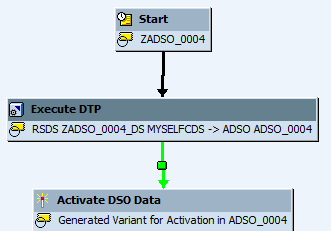
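Why the sort matters: the same semantic key (for example, one booking) can arrive in several calls, and the later record has to win. The stand-alone report below is a simplified model, not the generated transformation class and not the actual ADSO activation: it reduces SOURCE_PACKAGE to RECORDID, one key field, and one non-key field, sorts it as in the start routine, and then applies the records with "last record per key wins" semantics. The report name, the reduced field list, and the sample values are hypothetical.

```abap
REPORT z_start_routine_sort_demo.

" Simplified stand-in for SOURCE_PACKAGE (hypothetical, reduced field list):
" RECORDID, one key field (BOOKID) and one non-key field (SMOKER).
TYPES: BEGIN OF ty_rec,
         recordid TYPE c LENGTH 26,
         bookid   TYPE n LENGTH 8,
         smoker   TYPE c LENGTH 1,
       END OF ty_rec,
       ty_recs TYPE STANDARD TABLE OF ty_rec WITH EMPTY KEY.

" Two versions of the same booking; the later one (higher RECORDID) comes first.
DATA(lt_package) = VALUE ty_recs(
  ( recordid = '20230307233205000002000001' bookid = '00000042' smoker = 'X' )
  ( recordid = '20230307233200000001000001' bookid = '00000042' smoker = ' ' ) ).

" Same statement as in the start routine: restore the arrival order.
SORT lt_package BY recordid ASCENDING.

" Simplified model of overwrite semantics: each record replaces the earlier
" record with the same key, so the last one in the package wins.
DATA lt_active TYPE SORTED TABLE OF ty_rec WITH UNIQUE KEY bookid.
LOOP AT lt_package INTO DATA(ls_rec).
  DELETE TABLE lt_active WITH TABLE KEY bookid = ls_rec-bookid.
  INSERT ls_rec INTO TABLE lt_active.
ENDLOOP.

DATA(ls_result) = lt_active[ 1 ].
WRITE: / ls_result-bookid, ls_result-smoker.
```

Without the SORT, the older record (SMOKER initial) would be applied last and would overwrite the newer one.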

2.4 Maintain Process Chain

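Depending on the requirements of further processing, you can run the DTP and activate the data in the target ADSO with a process chain scheduled hourly, daily, or at any other custom frequency.

Thus, the frequency of DTP executions, each of which creates an RSPM request in the target ADSO, is completely decoupled from the frequency of the incoming data calls. For example, a sender that pushes one call per second would create 86,400 RSPM requests per day with “One-step” sending, whereas an hourly DTP creates 24.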

Additionally: Clean Up Data in the Table

Over time, data accumulates in the DB table that we created for temporary storage. You can therefore add an extra program that deletes old data from the DB table. You can put the program at the end of the process chain, so that old data in the DB table is deleted whenever new data records are loaded and activated, or you can run the program on its own schedule.

The program code below can be used for different inbound tables. For each process variant in a process chain, you have to create a program variant that specifies:

- the table name p_tab, here ZADSO_0004, and

- the number of days after which data records are considered outdated and are deleted (here, for example, 7 days).

Of course, you could also create a separate program for each inbound table instead of this generic approach, in which the table name is passed as a parameter.
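Before scheduling the deletion, it can help to see how many rows a given retention period would remove. The following dry-run sketch (the report name is hypothetical, and the table ZADSO_0004 is hard-coded) reuses the cutoff calculation of the cleanup program, including its cl_abap_tstmp call: the number of days is converted to seconds (7 × 86,400 = 604,800 seconds) and subtracted from the current UTC timestamp.

```abap
REPORT z_inbound_cleanup_preview.

" Minimal dry-run sketch for table ZADSO_0004 (hard-coded): count the rows that
" the cleanup program would delete, using the same cutoff calculation.
PARAMETERS p_days TYPE i DEFAULT 7.

DATA: l_now    TYPE rstimestmp,
      l_secs   TYPE i,
      l_cutoff TYPE rstimestmp.

START-OF-SELECTION.
  " Days to seconds, e.g. 7 * 24 * 60 * 60 = 604800
  l_secs = p_days * 24 * 60 * 60.

  " Cutoff = current UTC timestamp minus the retention period
  GET TIME STAMP FIELD l_now.
  l_cutoff = cl_abap_tstmp=>subtractsecs_to_short( tstmp = l_now
                                                   secs  = l_secs ).

  " Rows with an older DELTA_TIMESTAMP would be deleted by the cleanup program
  SELECT COUNT(*) FROM zadso_0004
    WHERE delta_timestamp < @l_cutoff
    INTO @DATA(l_count).

  WRITE: / |{ l_count } rows older than { p_days } days (cutoff { l_cutoff })|.
```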

Example Code

```abap
REPORT z_inbound_message_cleanup.

PARAMETERS: p_tab  TYPE tabname DEFAULT '',
            p_days TYPE i DEFAULT 7.

PERFORM main USING p_tab
                   p_days.

FORM main USING i_tabname         TYPE tabname
                i_older_than_days TYPE i.

  DATA: lt_dfies TYPE STANDARD TABLE OF dfies,
        l_now    TYPE rstimestmp,
        l_secs   TYPE i.

  CHECK i_older_than_days GT 0.

* ---- Read the field list of the table
  CALL FUNCTION 'DDIF_NAMETAB_GET'
    EXPORTING
      tabname   = i_tabname
    TABLES
      dfies_tab = lt_dfies
    EXCEPTIONS
      not_found = 1
      OTHERS    = 2.
  IF sy-subrc <> 0.
    " The exceptions are not necessarily raised with a message, so use a text
    MESSAGE |Table { i_tabname } not found or not readable| TYPE 'I'.
    RETURN.
  ENDIF.

* ---- Only tables with a packed DELTA_TIMESTAMP field are processed
  READ TABLE lt_dfies ASSIGNING FIELD-SYMBOL(<ls_dfies>)
       WITH KEY fieldname = 'DELTA_TIMESTAMP'.
  IF sy-subrc NE 0.
    MESSAGE 'No field DELTA_TIMESTAMP found' TYPE 'I'.
    RETURN.
  ELSEIF <ls_dfies>-inttype NE 'P'.
    MESSAGE 'DELTA_TIMESTAMP not of type P' TYPE 'I'.
    RETURN.
  ENDIF.

* ---- Days to seconds
  l_secs = i_older_than_days * 24 * 60 * 60.

* ---- Timestamp now
  GET TIME STAMP FIELD l_now.

  TRY.
*     ---- Cutoff timestamp: now minus x days
      DATA(l_timestamp) = cl_abap_tstmp=>subtractsecs_to_short( tstmp = l_now
                                                                 secs  = l_secs ).

*     ---- Delete messages "older than x days"
      DELETE FROM (i_tabname) WHERE delta_timestamp LT l_timestamp.
      IF sy-subrc EQ 0.
        MESSAGE |Messages older than { i_older_than_days } days deleted from table { i_tabname }| TYPE 'I'.
      ELSE.
        MESSAGE |No (further) messages older than { i_older_than_days } days deleted from table { i_tabname }| TYPE 'I'.
      ENDIF.

      CALL FUNCTION 'DB_COMMIT'.

    CATCH cx_root INTO DATA(lrx_root).
      CALL FUNCTION 'RS_EXCEPTION_TO_SYMSG'
        EXPORTING
          i_r_exception = lrx_root
          i_deepest     = 'X'.
      MESSAGE ID sy-msgid TYPE 'I' NUMBER sy-msgno
              WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4.
      RETURN.
  ENDTRY.

ENDFORM.
```

Epilogue

In this article, we used an ABAP CDS view and an ODP CDS DataSource as an alternative way to achieve high-frequency inbound queue processing. You might also consider other options, such as a HANA view and a HANA DataSource. The basic concept is the same: first write the high-frequency incoming data into a temporary object, and then load the data from this object at a suitable frequency.
