- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Data flow using Python – in SAP "Data Warehouse Cl...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Overview :

In real world Data Warehouse Implementations, Business requirment demands a need to cleanse, consolidate and integrate the data to suit the Analysis / Reporting for the Organizations.The data flow in "SAP Data Warehouse Cloud" Provides us a possibility to transfer data from a source to Target destinations(DWC Tables) and cleanse, consolidate and integrate the data so that it can be used for analysis, reporting and possibly also for planning.

We can Design a data flow to easily transport and transform data through a graphical user interface, before publishing the data to the destination table. you can drag and drop sources from the Source Explorer, combine them as necessary, add additional operators to remove or add columns, aggregate the data, and run Python scripts.

This Blog Tries to answer some of the Python scripts required to be used in real world DWC implementations and help us to transform data for the dynamic requirments of the business.

#Sample Data Flow Screen :

- What is the Key Take Away from this Blog :

- Guides you to design DWC Dataflow

- Guides you to Transform data using Python scripts

- Guides you step by step way to Implement DML operations in python.

- Insert New Record using script

- Modify Specific Field Contents.

- Updating Values in specific Row number.

- Concatenating Field Values.

- Deleting specific Record

Step by Step Approach:

- Step 1: In DWC Home Page -> Data Builder Option will Present the following screen

The above Dataflow option - select the New data Data flow, which will provide us the option to select the source tables/extractors.

- Step 2: Drag the source and Target Objects from the left Panel.

As shown above, select the source from the left panel and also select projections and select Target objects in to the design area. Please note for target tables , we need to select the above shown option and deploy it.

Please insert SCRIPT operator box from the top panel to transform data with some logics and conditions. Here in we are going to see step by sep usage of python scrip in dataflow.

- Scenario 1 : Insert New Record using script

- Scenario 2 : Modify Specific Field Contents.

- Scenario 3 : Updating Values in specific Row number.

- Scenario 4 : Concatenating Field Values.

- Scenario 5 : Deleting specific Record

- Step 3: In Script Operator box - Select the Change Button from Properties

- Step 4: Insert a New record using Python script ( Scenario 1)

For Demonstration purpose - we have considered source table with the column names shown below :

Once you navigate to script editor, for inserting new records - data.append code allows us to insert a new record in the recordset. please note as per our scenario we have to copy the last record values, for this purpose line no 19 : data(-1) is used in the code. It captures the last record field values in a new dataframe called newRow.

Once we have the above code in place of the script editor , please save the code and deploy and 'RUN' the dataflow. as demonstrated here , the resultsets after the dataflow run - looks like this below screen.

The same process need to be followed for any script implementation. for demonstration purpose in scenario 1 used for Inserting new records.

- Scenario 2 : Modify Specific Field Contents.

As demonstrated in the above step by step approach . follow step 3 & 4 for this scenario2, to modify the field contents of the existing recordset. Please note same process need to be followed for any script implementation.

In this , we have modified the Rownum, company code by incrementing 1. as discussed earlier the 'append' command helps us to add records. in the above screen line no - 33 & 34 performs value increment.

As we can see the output above , which modifies the rownum and company code values.

- Scenario 3 : Updating Values in specific Row number.

This scenario - we will change the field contents base on condition. here we wanted to update the 'Remarks' for row no 2.

The code which is used in line no : 16. The key command is to perform this activity is data.at[ ]. here we have to mention the row position. as we all know in Array record count starts from 0. hence to replace the row no 2 : we need to provide 1.

The output of the operation is shown above. row number 2 'Remarks' has been modified.

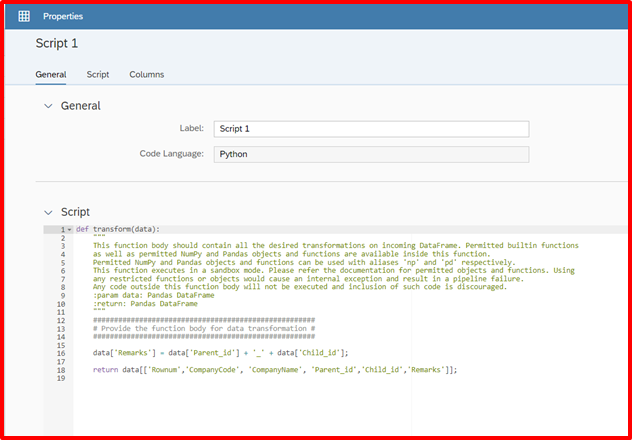

- Scenario 4 : Concatenating Field Values.

In this scenario - we will concatenate values extracted from other fields. Here the 'Remarks' Column is updated with 'Parent_id' and 'Child_id" with column values.

The Line no : 16 performs this operation.

The output shown above . Field values are concatenated in the last field. Using the '+' operator we can combine and concatenate fields and store it in required fields. Please note we must use str('integer value') , if we want to concatenate the integer values.

- Scenario 5 : Deleting a Specific Record from Result set.

In this scenario - we will delete the complete record based on conditions. we wanted to remov rownum less than 3. Hence we have copied the required rows to the new dataframe and re-assigned the filtered dataset to the original one.

Line No : 17 - the required results are copied to df_filtered data frame. and in line no : 18 , this is re-assigned to the actual data frame "data".

As demonstrated the we can the output rownumber 3 and above. These codes will be very handy for transforming datas during live implementation projects.

Conclusion:

Data Warehouse Cloud - is a powerful tool to re-vitalize the datawarehousing solution from SAP. In this Blog We managed to demonstrate one of the key features from DWC dataflow in combination with Python scripts.

- Handy python code to help in Implementations.

- Provides us confidence to transform data based on logics.

- Guides us to filter and update the recordsets for dynamic requirments.

- Giri Raaj. PMP -

- SAP Managed Tags:

- SAP Datasphere

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

93 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

66 -

Expert

1 -

Expert Insights

177 -

Expert Insights

299 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

344 -

Replication Flow

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

422 -

Workload Fluctuations

1

- Checking HANA Cloud Vector Engine performances in Technology Blogs by SAP

- LLM, RAG and Cloud Foundry: No space left on device in Technology Q&A

- Error while HANA Cloud tenant in Python on SAP HANA Cloud in Technology Q&A

- Python RAG sample for beginners using SAP HANA Cloud and SAP AI Core in Technology Blogs by SAP

- New Machine Learning features in SAP HANA Cloud in Technology Blogs by SAP

| User | Count |

|---|---|

| 40 | |

| 25 | |

| 17 | |

| 13 | |

| 7 | |

| 7 | |

| 7 | |

| 6 | |

| 6 | |

| 6 |