Fluid Tele-collaboration in Virtual Reality using the Metaverse Today:

An SAP Recruitment Event Case Study

Authors: Nikolaos Mavridis, Kai Wussow

Especially during the pandemic, creative collaboration through virtual means became a key question for the future of companies, which is why we created a series of experiments concerning Virtual Reality and the Metaverse. A full-blown business simulation exercise was designed and implemented together with SAP Skill Management in order to test creative collaboration in the Metaverse. It was implemented as a case study exercise and used in a recruiting event addressing “How it feels to be an SAP consultant.” The results were quite encouraging, informative, and in some respects also surprising!

Introduction

Although Virtual Reality (VR) has existed in basic forms for more than 25 years, it is only now starting to mature, with the advent of affordable high-quality devices such as the Oculus Quest 2, which costs a few hundred euros. Together with this development, the "Metaverse", as a concept but also as a buzzword, has started to appear everywhere around us, including the famous renaming of the very successful Facebook company to "Meta". But beyond the buzz, these two developments might well start to fundamentally change the way we work, socialize, interact with professionals, collaborate, and more. They might even create a much stronger distribution of activity across the physical-virtual “realms”, as well as within their fuzzy border areas: the physical realm, where humanity has primarily existed since its beginnings; the virtual realm, which we are just starting to "physicalize" more and more realistically, but also to move beyond physical limitations; and the "augmented" / blended realm, where blended physical/virtual reality starts to exist, sometimes even blurring the boundaries between the two.

Figure 1: The Oculus Quest 2 Virtual Reality Headset

Figure 2: From Physical Reality towards Virtual Reality passing through Augmented Reality based on Paul Milgram [1]

Further developments might increase the blending variations of the physical-virtual world, when combined with the ever-increasing physical sensing taking place through the Internet-of-Things (IoT), and with the creation of increasingly extensive, detailed, and accurate Digital Twins, as well as with more fluid blending of Artificial with Natural Intelligence as well as Individual with Collective Intelligence.

For example, when speaking to an Avatar in the Metaverse, one might be speaking to an AI-driven entity, or to a human-driven (in real time) entity, or to a Hybrid-entity which is sometimes algorithmically driven but sometimes relinquishes control to a human (for example, when the AI is incapable of addressing a specific request): a technique that is already widely used in customer-service chatbots. Furthermore, such a technique enables the creation of situated “training sets” which through Machine Learning can then increase the autonomy of AI agents. Also, note that through the right collaboration of teams consisting both of human as well as AI elements, interacting in real-time or off-line, and then assisting a single human, one might also blend effectively individual with collective intelligence.

Figure 3: A text message conversation (Left) and a conversation with a Metaverse Avatar (Right): Am I speaking to a human, to an AI, or maybe both? (some sentences from AI, some from human)
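The hybrid hand-over described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of any SAP product: the class `HybridAvatar`, the 0.6 confidence threshold, and the toy `toy_ai` / `toy_human` functions are all hypothetical names invented for this example. The avatar lets the AI answer when it is confident enough, otherwise relinquishes control to a human, and stores each human-answered request as a situated training example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HybridAvatar:
    """Avatar that answers via AI when confident, otherwise defers to a human."""
    ai_answer: Callable[[str], Tuple[str, float]]  # returns (reply, confidence in [0, 1])
    human_answer: Callable[[str], str]             # called only on hand-over
    min_confidence: float = 0.6                    # hypothetical hand-over threshold
    training_set: List[Tuple[str, str]] = field(default_factory=list)

    def reply(self, request: str) -> Tuple[str, str]:
        """Return (who_answered, reply_text)."""
        text, confidence = self.ai_answer(request)
        if confidence >= self.min_confidence:
            return "ai", text
        # Hand-over: the human answers, and the pair is kept as a training example.
        human_text = self.human_answer(request)
        self.training_set.append((request, human_text))
        return "human", human_text


# Toy stand-ins for a real model and a real human operator (assumptions).
KNOWN_ANSWERS = {"where is the meeting room?": "Second floor, next to the terrace."}


def toy_ai(request: str) -> Tuple[str, float]:
    answer = KNOWN_ANSWERS.get(request.lower())
    return (answer, 0.9) if answer else ("", 0.1)


def toy_human(request: str) -> str:
    return "Let me check that for you personally."


avatar = HybridAvatar(ai_answer=toy_ai, human_answer=toy_human)
first = avatar.reply("Where is the meeting room?")
second = avatar.reply("Can you reschedule my interview?")
print(first)
print(second)
print(avatar.training_set)
```

From the outside, the person talking to the avatar sees only the reply text; who produced it is visible only in the first element of the returned pair, which is exactly the ambiguity that Figure 3 illustrates.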

The big question though is: In what ways can these developments be beneficial? And what are the foreseeable next steps and viable-feasible-desirable applications that will massively start to be used? Towards that direction, SAP, already a world-class leader in IT and especially in ERP and transactional systems, but also with a strong presence in innovative technologies through its Business Technology Platform (BTP), is starting to experiment towards using Virtual Worlds:

Together with the SAP Practice and Skills Management Team, we looked for a use case that would allow us to test the potential of VR technologies for creative collaboration. In recent years, virtual meetings and workshops have gained a lot of traction and quite some success due to the pandemic, but they have also shown clear restrictions in terms of creative quality and social engagement in teams. For SAP, creative collaboration in Design Thinking, Life Centered Design and other collaborative formats is of high value. We therefore settled on creating an SAP Recruitment Event Case Study. It involves a collaborative team effort, includes an active review by jurors by definition, and has a controllable setup in terms of participants and available content. It also has clear experimental peers, since this type of creative collaboration had already been repeated in many in-person and virtual formats. Our experimental study took place with Karlsruhe Institute of Technology (KIT) students and had the form of a Business Simulation Exercise: an “Introduction to what it feels like to be an SAP consultant”. Importantly, the event took place twice, in two versions: one in the Physical World and one in Virtual Reality, so that certain direct comparisons can be made.

It is worth noting some of the other relevant activities at SAP regarding the Metaverse, for example, the "Metaverse One" page and the relevant parts of the "Oven Innovation Enablement" program, as well as the (not really Digital Twin, yet interesting Simulation) "Clover" program, and the "Metaverse Global Challenge" of the d-shop. [Kind note to readers: Some of these links might not yet be accessible outside SAP]

In this post, not only will we discuss both the structure as well as the key findings of this event, but we also provide a wider background, as well as a wider discussion including the future. This post is structured around a number of key questions, namely:

Q1) What was the Purpose of the event?

Q2) What are Relevant Ideas on the future of Recruiting leveraging the Metaverse?

Q3) What are Relevant Ideas on the future of Team Collaboration?

Q4) What were the Methods used in the Experimental Event? (including Software and Hardware Choices, Process Design: Temporal & Organizational Structure of the Event, Roles and Team Structure, Expected Deliverables, Juror's Evaluation and Live Feedback, Results Derivation)

Q5) What were the preliminary Take-Home Messages from this experimental event?

Q6) What is the wider Discussion around the event, including Future Steps?

Q1) What was the Purpose of the event?

On the 22nd of June of 2022, a prototype event was organized by SAP, which took place in the Metaverse, and more specifically in the Raum.APP space, and was accessed through Oculus Quest VR Headsets. This event had the form of an:

“Introduction to what it feels like to be an SAP consultant”

through an appropriate simulation exercise (the “Brews Brothers Coffee Company”) which was administered to KIT students (through fuks.org) that might also be prospective recruits for SAP. A somewhat-analogous physical event took place (some days before).

Figure 4: Some screenshots from our Experiment: The Recruiting Case Study using the “Brews Brothers Coffee Company” case – where you can get a first glimpse of the space, avatars, objects

The purpose of the experiment was to get experience in how such events (as well as with a wider scope of purposes) can take place in Virtual Spaces in the Metaverse, through designing a specific timeplan and stages, and recording various evidence/log materials including:

M1) AV-recordings from various angles and

M2) Specially designed questionnaires administered at key stages, and also following up the event with a

M3) 1-hour interview session where the participants freely voiced their opinions and gave important feedback.

As a result of this, a number of interesting take-home messages arose, as well as enhancements to the process; and most importantly further events are planned in order to obtain further experience, achieve statistical significance in some of the findings, and test the v2 of the event (as improved after the first run), towards creating a further improved v3.

Q2) What are relevant Ideas on the future of Recruiting leveraging the Metaverse?

Recruitment of appropriate potential full-time employees, as well as part-time employees or even interns, is of primary importance to companies worldwide. Especially in IT, where tele-working can nowadays cover an impressively large fraction of required working activities, the advantages of an international workforce can be numerous; and the need for physical relocation of this workforce is starting to decrease, even more so as several of the legal and taxation-related obstacles are starting to be resolved.

On the basis of the above developments, but anyway even within national- or even city-boundaries, moving recruitment events to the virtual realm, has started to become increasingly attractive; if not for the totality of such events, at least for a good fraction. In the past, beyond for example physical university-campus events or job fairs, SAP has utilized videoconferencing tools with break-out room functionality in order for small teams to collaborate and then rejoin the main session, and even online design-thinking tools such as Mural, as part of its virtual recruitment events.

Q3) What are relevant Ideas on the future of Team Collaboration?

Yet design-thinking techniques are just one form of team collaboration (among many others!), which are usually utilized towards brainstorming and deliberations. There is a wealth of design-thinking techniques that exist in the literature; a classic selection being part of IDEO's "The Field Guide to Human-Centered Design". Can we try to create a Metaverse-version of this handbook, without just copying the physical analogues, but rather by optimally adapting them to the Metaverse, and even creating new techniques which have no existing physical-world analogue?

Figure 5: “The Field Guide to Human-Centered Design” – the classic reference book from IDEO. Can we create an analogous guide for the case of the Metaverse?

But thinking beyond just design thinking, and centering on team collaboration, one can ask a fundamental (yet broad) question: How can one facilitate effective team formation and collaboration in different settings, i.e. moving from physical co-location in a room, to traditional tele-collaboration using audio or video-conference and offline tools, to immersive tele-collaboration using VR in the Metaverse?

And here, it is important to note the differences between the Physical World and the Metaverse, in its current state but also in its future states. It might be true, on the one hand, that embodied co-presence in the Metaverse still lacks many of the qualities and capabilities of physical embodied co-presence (for example, fluid haptic interaction, fidelity and realism of visual percepts, and much more!). But it is also true, on the other hand, that the Metaverse contains capabilities that go beyond the limitations of the physical world (for example: instant teleportation; absolutely accurate "memory" through replay of past situations; fluid change of embodiments and human-object interactions which are not physically possible, and more!). But then the key questions arise:

KQ1) So how can we utilize these extra "affordances" that only the Metaverse has, in order to facilitate better team collaboration, while at the same time compensating/modifying so that the physical qualities that the Metaverse lacks do not create disadvantages? (i.e. Maximize the utility of the capabilities that the Metaverse has but which the Physical World doesn't, while Minimizing the negative effects of the capabilities that the Physical World offers that the Metaverse doesn't)

And then, other relevant questions follow:

KQ2) So how can we create the right team-collaboration setups (layout, objects and their interactive capabilities, UX capabilities for users, toolboxes of multiple kinds, process flows / ceremonies etc) in order to create highly effective tele-collaboration in the Metaverse?

KQ3) How should the above be "tailored"/"customized" to specific types of tele-collaboration?

KQ4) And given the choice, which types of collaborative work should one choose to do in the Metaverse vs. the Physical World vs. other media and all their hybrid combinations?

Yet another direction is concerned with measuring, describing, and enhancing team structures, patterns, and collaboration effectiveness. There is a wealth of existing results in relevant scientific disciplines; yet only recently have we been able to create very detailed logs of team collaboration using electronic means and to effectively start to analyze them. For example, one could mention the pioneering work of Sandy Pentland's group at the MIT Media Lab: Tanzeem Choudhury's "Sociometer" wearable devices [2] which analyzed interactions between team members, and generated link structures of groups - showing the way towards the empirical augmentation of existing theories of organizational behavior and social networks. Furthermore, numerous results exist when it comes to e-exchanges between group members and their patterns: For example, Peter Gloor's work on "Collaborative Innovation Networks" [3] from the MIT Center for Collective Intelligence, and subsequent work on finding collaborative innovation networks through correlating performance with social network structure. But by following the stream of the above three lines of research, one can notice that the Metaverse enables us to have very detailed logs of interactions of the team members with one another as well as with objects, and also logs of their bodily states. Therefore, a new set of key questions arises:

KQ5) Can we identify the relations between (A): {bodily and interaction measurables} and (B): {team collaboration types, stages, significant events during teamwork, leadership styles}? (And many more such types of "team analytics" as well as interpretations/classifications)

KQ6) Can we utilize the above analytics towards increased self- and team-awareness and improving team-members individually as well as team collaboration as a whole?

KQ7) Can we thus evaluate and improve individual- and team-level collaborative skills, and can we predict and measure the effectiveness of combinations of specific individuals in teams, thus aiding towards effective team-building too?
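As a rough illustration of the simplest kind of "team analytics" that KQ5 hints at, the sketch below turns a log of who interacted with whom into a weighted link structure, in the spirit of the group link structures mentioned above. Everything here is an assumption made for illustration: the log format (timestamp, acting member, addressed member), the member names, and the function names are hypothetical, and this is not the analysis pipeline used in the event.

```python
from collections import Counter

# Hypothetical log: (seconds since start, who acted, who was addressed).
interaction_log = [
    (12, "anna", "ben"),
    (15, "ben", "anna"),
    (31, "anna", "carla"),
    (47, "carla", "ben"),
    (52, "anna", "ben"),
]


def build_link_structure(log):
    """Count interactions per unordered pair of team members."""
    edges = Counter()
    for _timestamp, source, target in log:
        if source != target:  # ignore self-directed events
            edges[frozenset((source, target))] += 1
    return edges


def interactions_per_member(edges):
    """Sum the weights of all links that each member takes part in."""
    totals = Counter()
    for pair, weight in edges.items():
        for member in pair:
            totals[member] += weight
    return totals


links = build_link_structure(interaction_log)
totals = interactions_per_member(links)

for pair, weight in links.most_common():
    print(sorted(pair), weight)
print(dict(totals))
```

Per-member totals like these are one candidate for the "interaction measurables" in (A); relating them to the team qualities in (B) would still require the questionnaire data and a far larger sample.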

Interestingly enough, for other simpler tasks (for example, training people to deliver talks and presentations to an audience seated at a meeting table), tools have started to appear that operate along similar lines, for example “VR Easy Speech”, which offers rhetoric and presentation coaching through Virtual Reality. However, tools that aim at team building and team collaboration, offering measurable analytics and guided, tracked improvement training, are yet to appear; although, arguably, they could have immense importance towards optimizing the leap from individual intelligence & effectiveness to team collective intelligence & effectiveness.

Figure 6: “VR Easy Speech” offering rhetoric and presentations coaching through Virtual Reality

Q4) What were the Methods used in the Experimental Event?

In this section, we will cover Software and Hardware Choices, the Process Design - i.e. the Temporal & Organizational Structure of the Event, The Roles of different participants and the Structure of the Team, Expected Deliverables, the specially-designed Questionnaires for the Juror's Evaluation and the participants as well as the Live Feedback session, and we will close with the qualitative and quantitative results derivation.

Q4.1) Software and Hardware Choices

In terms of the choice of Virtual Reality (VR) world supporting tele-meetings and tele-collaboration, there was initially some experimentation with "Spatial". Quite importantly, some of the manipulable-object-creation capabilities of this choice were appreciated. Later, for a mixture of not necessarily technical reasons, it was decided to switch to "Raum", which was somewhat inferior in the aforementioned capabilities but was nevertheless preferable in certain respects. A beautiful two-floor-plus-terrace villa surrounded by sea, containing various rooms (some with glass doors) as well as staircases, was chosen as the environment. This mapped nicely to the need for break-out rooms for team collaboration, an area where the "interviews" with the simulated client would take place, and areas for the initial "introduction and training" session, among others. Special objects were also created, for example to serve as referents to be indexically pointed to while the "Introduction to SAP's Business Technology Platform" is taking place and the presenter is speaking about it, potentially with small dialogic segments from the participants.

Figure 7: The “Raum” office where the event took place; A beautiful two-floor-plus-terrace villa surrounded by sea, containing various rooms as well as manipulable/reformable objects

In terms of VR Headsets the choice of the Oculus Quest 2 was made, and SAP acquired a number of these devices in their stripped-down "business" version, and these were distributed to the participants.

As per deliverable M1 in section Q1, audiovisual (AV) recording of the sessions was one of the deliverables that could also be used for further cross-analysis. Two different forms of recording were used: Classic AV screen recording (through screen video capture software), as well as in-VR recording through special avatars that played the role of "Cameramen", both in fixed positions (for example in suitable places in the break-out rooms of the teams), as well as actual walking cameramen controlled by humans that had that role.

Moving from deliverable M1 to M2 as per section Q1, specially designed questionnaires were administered at key stages, and also following up the event. In more detail, the following questionnaires were administered (through Qualtrics) and analyzed later (through Qualtrics and through exported files that were imported in appropriate BTP products):

Qu1) Initial participants questionnaire

Qu2) During-the-event short progress questionnaires

Qu3) Main Participant Questionnaire (16 questions, including leadership-style questions as well as TMX, the “Team Members Exchange” standard questionnaire measuring the quality of reciprocal exchange among team members, as per [4])

Qu4) VR-Only Questionnaire (administered only in Metaverse event and not in Physical Event)

Qu5) Jurors Questionnaire (16 questions)

Qu6) Post-Event Questionnaire

Finally, as per M3, freeform end-of-event interviews took place. The main interviewer was Kai Wussow, but judges and other organizers also participated. A fraction of the participants (8 of 13) as well as the two jurors took part in the interview. The total duration was on the order of one and a half hours, starting with open questions such as: “How was your experience?” and “What were the good things and the negative parts?”

Q4.2) Process Design: Temporal & Organizational Structure of the Event

In order to limit the actual VR recruitment session to less than four hours (which, as we found out, was already quite lengthy regarding comfort levels), an introduction and training workshop for the Oculus headset and the Raum software took place earlier, in March 2022, and lasted 1.5 hours. The actual events took place in June 2022, with the Metaverse version lasting 4 hours and the Physical version lasting 3 hours.

The detailed agenda of the Metaverse event can be seen below:

Figure 8: The Detailed Agenda of VR Metaverse Case Study Event

Q4.3) Roles and Team Structure

The different roles that existed in the event were R1-R5:

R1) Participants

The participants were 13 students of the Karlsruhe Institute of Technology (KIT). All of them were affiliated with the organization fuks.org. On the day of the event, one could not attend, and another had a hardware crash early on after joining the meeting, so the remaining 11 were the fully active participants, organized as 3 groups.

R2) Judges

There were three SAP personnel serving as judges (a Strategic Advisor, a Consulting Director and an Intern for Human Factors Research).

R3) Brews-brothers CEO & CTO Impersonators

Kai Wussow, Chief Enterprise Business Architect at SAP, played the role of CEO of the fictional company “Brews Brothers”, which was the client of the consultancy simulation, while another SAP employee played the role of its CTO.

R4) Coaches & Introducers

Three SAP personnel played the role of participant team “coaches”, while Nikolaos Mavridis and another SAP person introduced the participants to the fundamentals of the Intelligent Enterprise of SAP and to the SAP Business Technology Platform, its purpose and products, so that they could use these in the proposed solutions for “Brews Brothers”.

R5) Cameramen

Last but not least, two externals at SAP served as human “cameramen”, moving around the virtual space and performing video recordings, in conjunction with the fixed cameras that were placed in the rooms.

Also, it is worth noting that other senior members from SAP were also invited in preparation meetings, and that Dr. Evanthia Dimara, Assistant Professor at Utrecht University, also helped significantly during the experimental design.

Q4.4) Expected Deliverables

The expected deliverable that each team was preparing was a presentation to be given to the “Brews Brothers” C-level executive impersonators, to be observed by the judges too. This presentation was prescribed not to simply be a “powerpoint” presentation given in VR; but rather to utilize a toolchest for creating 3-D elements that will then form the “referents” to be pointed at while the teams are narrating their presentation: And this toolchest we called the “Walled Garden”. Notice that this is one of the most important differences for VR presentations as compared to videoconference and real life: It is much easier to create and utilize complex (and sometimes even interactive) 3D objects that will then serve as “prompts”/”referents” around which the presentation can be structured, and around which the participants/audience as well as the presentation givers/presenters can be physically walking around and examining/interacting with while the presentation is taking place. Of course, the appropriate content and capabilities of such a toolchest, remains to be optimized; as well as the skills and knowledge required by the creators, in order to utilize it effectively.

The total duration of each team’s presentation was fixed at approx. 12 minutes, including time for Q/A from the jurors. In Figure 9 you can see a scene from the presentation of one of the teams:

Figure 9: A scene from the presentation of one of the teams

It is worth noting two different types of elements constructed from the “walled garden” that were used in this presentation: 2-D notes (like giant “Post-It” stickers that are often used for design thinking sessions), as well as 3-D floating elements (in this case “floating rectangles”, such as the ones in the right corner of the room).

Figure 10: Other elements from the “Walled Garden” that can be used in presentations: Cartoon characters, “spoken word” bubbles, paper drawings, and walking paths

There exist many more elements that can be used beyond these two. For example, in Figure 10 you can see cartoon characters with “spoken word clouds” above them, and paper pictures, arranged in an area where a “virtual tour guide” can walk clients’ avatars around while narrating, making stops at each cartoon character. The cartoon characters could represent personas; they could even become interactive and dialogic, or be replaced by AI- or human-controlled avatars, thus enabling memorable interactive “walk-through” experiences for the guests.

Q4.5) Scenes from the event

Now, let us move to some screenshots from the actual event, together with some basic descriptions and commentary (note that the RAUM office where the event took place was shown earlier in Figure 7):

Figure 11: A scene from team building, where each team member posts a note on the wall, with their photo and their name – here you can see all three groups in the left, middle, and right areas.

Figure 12: A scene from the case study intro: Jacob (CTO) presenting the case study, as taken through the eyes of Kai (CEO) upper left, and the participants watching

Figure 13: Introducing the Intelligent Enterprise Strategy: Narrator presenting, participant audience around the “referent” 3D elements that had been created beforehand in order to illustrate basic concepts of the SAP Intelligent Enterprise

Figure 14: Learning more about SAP Solutions

Figure 15: Teams start working on the Case Study

Figure 16: Coming Closer to the final solution

And finally, as shown before in Figure 9, the teams gave the Final Case Study presentations.

Q4.6) Juror's Evaluation, Participant Questionnaires, and Live Feedback

The questionnaire Qu5 (which can be found in Appendix 5) was completed by the jurors around session 05 (as per Figure 8), following the participant teams' presentations and the Q/A. Several more Qualtrics questionnaires were administered: the Initial Participants Questionnaire (Qu1), administered at the beginning of the event, and the during-the-event short progress questionnaires (Qu2), which tracked team progress and sentiment and were administered at two points during the period allocated for the teams to prepare their presentations. Most importantly, a post-event questionnaire was also submitted (period 07), as well as two lengthy questionnaires, namely the main participant questionnaire (Qu3, at period 08) and the VR-only questionnaire (Qu4, at period 08). It is noteworthy that the VR-only questionnaire, which was designed by Kai Wussow, was not administered in the physical version of the event, and aimed at explicating the differences between the physical-world and virtual-world versions. Also, the Main Participant Questionnaire (Qu3) contained 16 questions, including leadership-style questions as well as TMX, the standard “Team Members Exchange” questionnaire measuring the quality of reciprocal exchange among team members, as per [4].

Q4.7) From Videos and Questions to Results

Following the conclusion of the events, the analysis process started. SAP Qualtrics software was used to administer and to perform the initial analysis of the questionnaires:

Figure 17: The Questionnaires design screen, in SAP Qualtrics software

Figure 18: Example of the automated answer analysis available through SAP Qualtrics

Furthermore, comments were collected from all the people playing roles in the event, where such comments existed, and the 1-hour post-event interview was digested.

Most importantly, Data Science techniques using Python and SAP HANA were used in order to analyze the questionnaires and find further statistical patterns. In the future, given richer movement/interaction data through logs derived from Mozilla Hubs (as Raum was not providing such logs), further data-science analysis will take place, in order to find connections between the behavioral/interaction data and the overall team and personal qualities.

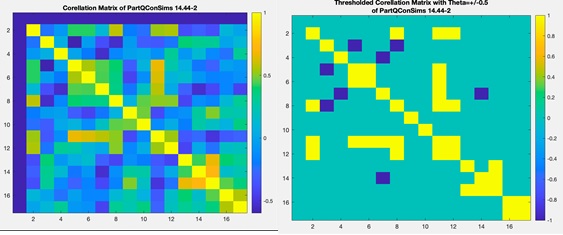

Regarding the Data Science analysis external to Qualtrics, CSV files were exported and imported, and, among other things, a correlation analysis of the answers to the relevant questions of the main participant questionnaire took place. In Figure 19, the color-coded initial correlation results can be seen, in non-thresholded and double-side-thresholded versions.

Figure 19: Color-coded correlations between answers to questions: non-thresholded (left) versus thresholded (right) result matrices.
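To make the idea behind Figure 19 concrete, here is a minimal Python sketch of a correlation matrix over questionnaire answers, followed by a double-sided threshold that keeps only strong positive or strong negative correlations. It is a sketch under assumptions, not the analysis code used for the event: the column names `Q1`..`Q4`, the answer values, and the 0.5 cutoff are made up, and the choice of Pearson correlation is an assumption, since the coefficient used is not specified here. In practice, a library call such as pandas' `DataFrame.corr` would do the first step.

```python
import csv
import io
import math

# Made-up stand-in for an exported questionnaire file (one row per participant).
EXPORTED_CSV = """Q1,Q2,Q3,Q4
1,2,5,3
2,4,4,1
3,6,2,4
4,8,3,2
5,10,1,3
"""


def load_columns(text):
    """Read CSV text into {question: [numeric answers]}."""
    rows = list(csv.DictReader(io.StringIO(text)))
    return {name: [float(row[name]) for row in rows] for name in rows[0]}


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")  # constant answers: correlation is undefined
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Pairwise correlations between all questions."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


def threshold_two_sided(matrix, cutoff):
    """Keep entries with |r| >= cutoff; set weaker (or undefined) ones to 0."""
    return {
        a: {b: (r if abs(r) >= cutoff else 0.0) for b, r in row.items()}
        for a, row in matrix.items()
    }


columns = load_columns(EXPORTED_CSV)
matrix = correlation_matrix(columns)
thresholded = threshold_two_sided(matrix, cutoff=0.5)

for name, row in thresholded.items():
    print(name, {other: round(r, 2) for other, r in row.items()})
```

The thresholded matrix is easier to read because only the strong relationships remain, but with very few respondents even a strong correlation can arise by chance, which is one reason why more runs of the experiment are needed.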

Q5) What were the preliminary Take-Home messages from this experimental event?

A number of interesting take-home messages arose from the events. In particular:

H1) 20% or so of the participants dropped out: They reported that this was due to headache or tiredness, and also sometimes due to technical problems (one VR headset stopped working due to overheating).

H2) Jurors reported that deliverables were better in VR than in the real-world, both in terms of content as well as in terms of appearance

H3) Participants reported that deliverables were of lower quality in VR as compared to the real-world

H4) Participants reported that they experienced a steep learning curve, as well as a satisfying feeling while doing the work in VR, and while filling the walls with content

Now, moving on to the 16-Question VR & Physical Questionnaire (Qu3, including TMX), and starting with the statistically significant differences between the Physical vs. Virtual cases:

H5) Suggestions about better work to other team members took place more often in physical

H6) In virtual, participants reported more understanding of their problems and needs by other team members, as compared to physical

H7) In virtual, participants reported more recognition of their potential by other team members, as compared to physical
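For readers who want to try a comparison like H5-H7 on their own data, the sketch below shows one way of testing whether answers to a single question differ between a physical and a virtual group. It uses a two-sided permutation test on the difference of group means, which makes few assumptions and is usable with small samples. This particular test is our choice for the illustration and is not necessarily the one used in the analysis above; the answer values, the function name `permutation_test`, and its parameters are hypothetical.

```python
import random


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the absolute difference of group means.

    Returns an estimated p-value: the share of random relabelings whose
    difference is at least as large as the observed one.
    """
    def mean(values):
        return sum(values) / len(values)

    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            hits += 1
    # The +1 correction counts the observed labeling itself.
    return (hits + 1) / (n_permutations + 1)


# Made-up 1-5 Likert answers to one question, for illustration only.
physical_answers = [1, 1, 2, 1, 2, 1]
virtual_answers = [5, 4, 5, 5, 4, 5]

p_different = permutation_test(physical_answers, virtual_answers)
p_identical = permutation_test([3, 4, 3, 4], [3, 4, 3, 4])
print(p_different, p_identical)
```

A small p-value suggests that the difference between the two groups is unlikely to be due to chance alone; with group sizes like the ones in this event, such results should still be treated as preliminary.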

Furthermore, in terms of correlations between answers:

H8) Other team members were more likely to recognize your potential if:

- Strongly concentrated non-consultative leadership did not exist

- More collective decision-making style existed

H9) The event was reported to be worth the participants' time when:

- Strong top-down leadership did not exist

H10) If there was flexibility about switching job responsibilities:

- Then you often volunteered your efforts to help others

H11) The more other team members asked you to help out in busy situations:

- The more did you volunteer your efforts to help others, and vice-versa

- The more collective the decision-making style was, and vice-versa

And now, let us move on to the 26-question VR-only questionnaire (Qu4):

H12) I believe VR Events to be more efficient than Physical Events:

- The more my learning curve & comprehension improved when actively engaged in the challenge

H13) I believe VR Events to be more effective than Physical Events:

- The easier it was for me to walk around and understand the space in VR.

H14) The easier it was for me to generate new ideas for problem-solving:

- The more I deeply enjoyed today’s workshops

- The less I needed guidance in the workshop

- The more I felt inspired to think outside of the box during the session

H15) My emotions in VR being amplified compared to Physical Events:

- Correlated with VR being a positive experience for me

H16) I had a strong feeling of working together and human interaction when:

- VR was a positive experience for me

- My emotions in VR were amplified as compared to physical events

Q6) What is the wider discussion around the event, including future steps?

First of all, it is worth considering the take-home messages H1-H16 above, as they arose from the analysis of the deliverables. Some of them are somewhat trivial, while others provide valuable insights. What is apparently highly important, though, is to take the following future steps:

F1) Run the experiment more times, in order to gather a richer data set enabling more statistical strength in the observations, while making sure that the physical vs. virtual versions are as aligned as possible.

F2) Make sure that the automated behavioral/interaction log (possibly through Mozilla Hubs, for example as per [5]) is indeed taken and appropriately analyzed, alone as well as in conjunction with the questionnaires and other observations, in order to reveal correlations and patterns across these two realms of data.

F3) Further refine the experimental setup, questionnaires, and activities, in order to provide further insights and/or test explanatory hypotheses for the observations that arose from the data so far.

F4) Extend the events from simulation-exercise student recruitment events to other genres, such as purely team collaboration events, customer walk-through events, and so on, in order to derive insights towards the understanding and improvement of all of these cases.

F5) Explore connections between this event’s setup and other relevant SAP activities in the Metaverse as well as in related 4th Industrial Revolution technologies, for example digital twins, chatbots that could be driving interactive avatars within the Metaverse, and more.
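To illustrate why step F1 (more runs, richer data) matters, the sketch below shows how the uncertainty around an observed correlation shrinks as the number of participants grows. It uses the standard Fisher z-transformation to build an approximate 95% confidence interval. This is strictly valid for Pearson correlations of roughly normal data and only an approximation for rank correlations of questionnaire answers. The correlation value 0.5 and the sample sizes are hypothetical, not figures from the study.

```python
from math import atanh, sqrt, tanh


def correlation_ci(r, n, z_crit=1.96):
    """Approximate confidence interval for a correlation r observed on n pairs.

    Uses the Fisher z-transformation; z_crit=1.96 gives a ~95% interval.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("need more than 3 observations")
    z = atanh(r)                      # transform r to the z scale
    half_width = z_crit / sqrt(n - 3)  # standard error is 1 / sqrt(n - 3)
    return tanh(z - half_width), tanh(z + half_width)


# Hypothetical: the same observed correlation at different sample sizes.
for n in (10, 30, 100):
    low, high = correlation_ci(0.5, n)
    print(f"n = {n:3d}: 95% CI approx. [{low:.2f}, {high:.2f}]")
```

Under these assumptions, the interval for ten participants still includes zero, whereas for a hundred participants it does not, which is one way of making the "statistical strength" argument of F1 concrete.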

CONCLUSION

The design, running, and analysis of these experiments were a very pleasant experience for all involved, and the overall consensus is that this was a very promising first run from which we clearly learned a few things. It was also clear that further involvement of SAP in the giant steps being taken towards shaping the Metaverse’s future and uses should be of primary importance; this initiative explores one of these directions, and can furthermore bring immediate utility.

Thus, with appropriate continuation of this initiative given the future steps F1-F5 outlined above, and in conjunction with other relevant initiatives, let us conclude with a hope (which also forms a reachable vision, and not just a hope!):

Let us continue our efforts, so that we can indeed reach a state in the near future in which “Flexible teams from all over the world can have Natural, Effective and Exciting collaborations in the Metaverse, opening up avenues of Creativity and Innovation that were impossible before, creating new and fulfilling jobs, while achieving team-building and team-membership-skills improvement and ever-increasing self-awareness and other-awareness, and also widely-encompassing empathy and friendship with humans from all locations and walks of life!”

REFERENCES

[1] Milgram, P. and Kishino, F., 1994. A taxonomy of mixed reality visual displays. IEICE TRANSACTIONS on Information and Systems, 77(12), pp.1321-1329.

[2] Choudhury, T. and Pentland, A., 2003, October. Sensing and modeling human networks using the sociometer. In Seventh IEEE International Symposium on Wearable Computers, 2003. Proceedings. (pp. 216-222). IEEE.

[3] Gloor, P.A., 2006. Swarm creativity: Competitive advantage through collaborative innovation networks. Oxford University Press.

[4] Seers, A., 1989. Team-member exchange quality: A new construct for role-making research. Organizational Behavior and Human Decision Processes, 43, pp.118-135. https://doi.org/10.1016/0749-5978(89)90060-5

[5] Shamma, D.A. (2020) “VR Research Collection in Mozilla Hubs”, available online (as per 10/01/23) https://ayman.medium.com/vr-research-in-mozilla-hubs-63fd3002eedf

