- How to run a HANA Stored Procedure in SAP Datasphe...

01-09-2023

3:09 PM

There are situations where one might need to run backend calculations from a story in SAP Analytics Cloud (SAC), for example to apply complex logic or calculations to data or parameters that have just been updated.

In this post I will show how to use the multi-actions in SAP Analytics Cloud to call a Stored Procedure residing in SAP HANA Cloud or SAP Datasphere (DSP).

For this purpose I will use the recently published blog about how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo.... In that post I created a stored procedure that adds a record to a table CALL_HISTORY with the current timestamp and some additional information. The same procedure will be used here, but deployed in SAP Datasphere. I will use the Multi-Actions in a SAP Analytic Cloud story to call that API. A view will be created in SAP DSP to display the content of CALL_HISTORY in the same SAC story.

The HANA stored procedure will be deployed in a HDI container that can reside in SAP HANA Cloud or within SAP Datasphere. This container will have the HANA native artefacts: table and procedures.

On top of that, SAP Cloud Application Programming model will be used to provide the API service as well as the user authentication in Cloud Foundry.

This scenario is fully covered in the blog: how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo....

In the next sections I will used the name DWC_API_Service_Test for the application and services, which is exactly the same as the HANA_API_Service_Test created in that blog, but pointing to a HANA Cloud instance within SAP Datasphere, as described in the next option.

In this case the same blog (how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo...), will be used as baseline. However, as the project will be deployed in the HANA Cloud instance within SAP Datasphere, there are some additional considerations to take into account:

All these considerations are explained in the blog: Develop on SAP Data Warehouse Cloud using SAP HANA Deployment Infrastructure (HDI), by nidhi.sawhney

Once the API service is deployed in Cloud Foundry, it can be found in the BTP Cockpit, within the space applications. My application is named DWC_API_Service_Test-srv, which appears like:

By opening the DWC_API_Service_Test-srv application, the API URL can be found in the Application Routes.

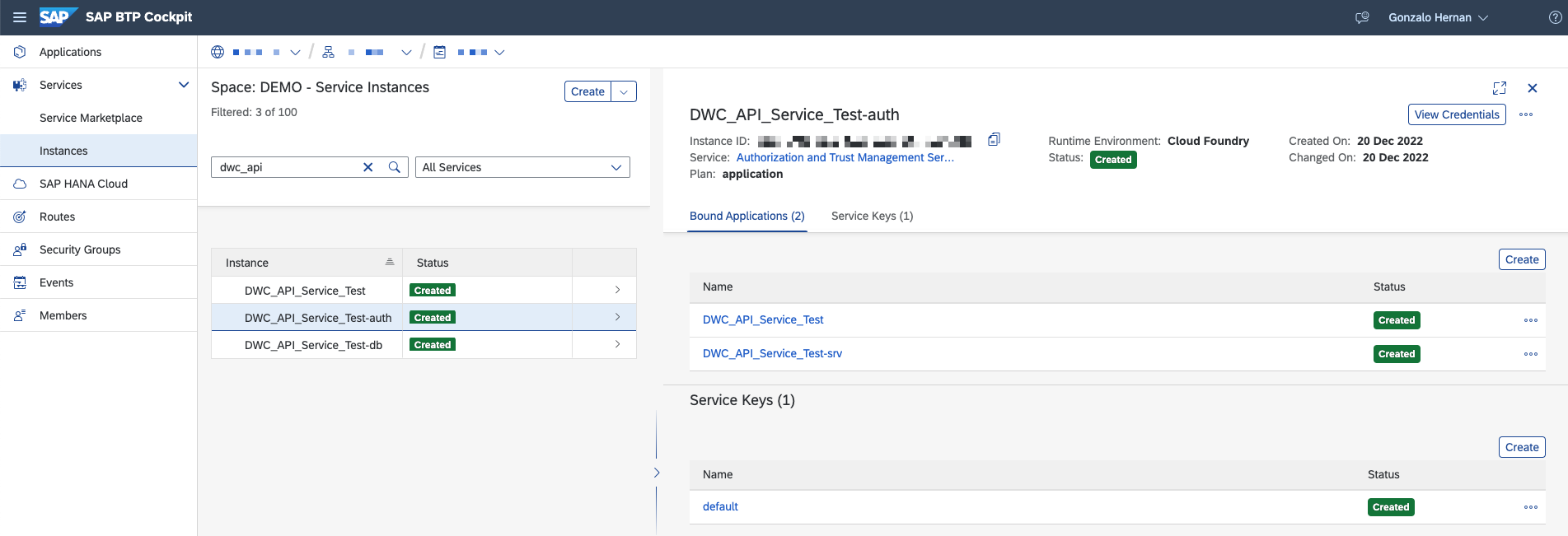

Apart from that, the user authentication information will be needed, which is described in the services instances. In my case, this is the DWC_API_Service_Test-auth service:

By clicking on View Credentials (top-right), all the details are displayed. Only the first three parameters are needed: clientid, clientsecret, and URL.

With all these information we are ready to create a connection to the API in SAC.

In SAP Analytics Cloud I will create a new connection for HTTP API:

The Data Service URL is the Application URL from the previous step.

For this API, the authentication type is OAuth 2.0 Client Credentials. The next three parameters are from the authentication service:

After clicking OK, the connection should be successfully created.

The next step is to create a new Multi-Actions, by clicking on Multi-Actions on the left menu. I named it DWC_API_Service_Test. Then I added an API step,

A name should be given to the API step. In my case I used Stored_Procedure_API.

At this moment in time, only POST APIs are allowed in Multi-Actions. Therefore, I use the service: act_register_call_with_param (see previous blog: how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo...)

In the API URL, the application URL is written with the addition of /catalog/

All parameters should be in the body:

For the execution results, I selected “Synchronous Return”, and I saved it

In order to access the table CALL_HISTORY, the HDI container needs to be added to the SAP DSP space:

Once the HDI container is added, a graphical view can be created in the Data Builder. When creating a view, the table should be visible by going to sources. I created an analytical view with VALUE as a Measure in order to consume it from SAP Analytics Cloud.

After its deployment, it should be accessible from SAP Analytics Cloud.

In SAP Analytics Cloud, I am going to create a basic story that connects to SAP Datasphere. After creating the new canvas, I added data from data source, in this case live data from SAP Datasphere.

In this example I just created a table to display the data and the Multi-Actions. The Multi-Action appears in the insert options as a Planning Trigger.

In the Multi-Actions settings I selected the Multi-Action name previously defined.

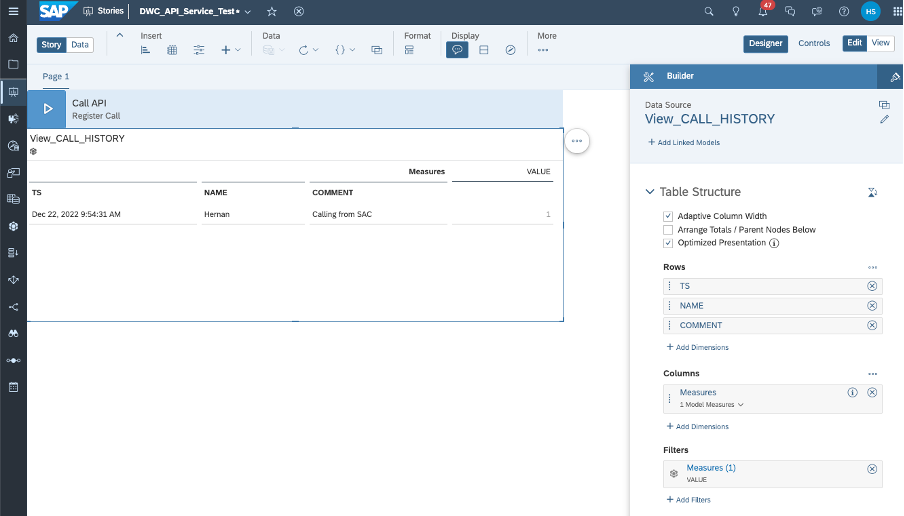

Then I added a table to display the View_CALL_HISTORY from SAP DSP.

Now, by clicking on the Multi-Action control, the API will be triggered. Once its execution finished, the data can be refreshed by clicking the Refresh button in the toolbar.

This blog post showcase an example on how to trigger the execution of a HANA Stored Procedure via API from SAP Analytics Cloud. The Stored Procedure can reside in SAP Datasphere as well as in an independent SAP HANA Cloud.

In this way, business users can execute logic in the backend directly from a report. Therefore, more complex use cases can be implemented. For example, Monte Carlo simulations leveraging the SAP HANA Predictive Analysis Library (PAL) could be triggered directly from SAP Analytics Cloud.

I want to thank ian.henry, maria.tzatsou2 and nekvas75 for all the discussions, shared experiences and received support in relation to this blog post!

Check community resources and ask questions on

In this post I will show how to use Multi-Actions in SAP Analytics Cloud to call a stored procedure residing in SAP HANA Cloud or SAP Datasphere (DSP).

For this purpose I will build on the recently published blog about how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo.... In that post I created a stored procedure that adds a record to the table CALL_HISTORY with the current timestamp and some additional information. The same procedure is used here, but deployed in SAP Datasphere. I will use a Multi-Action in an SAP Analytics Cloud story to call that API, and a view created in SAP DSP will display the content of CALL_HISTORY in the same SAC story.

Project Setup

The HANA stored procedure is deployed in an HDI container that can reside in SAP HANA Cloud or within SAP Datasphere. This container holds the HANA native artefacts: the table and the procedures.

On top of that, the SAP Cloud Application Programming Model (CAP) provides the API service as well as the user authentication in Cloud Foundry.

Option 1: HDI container in SAP HANA Cloud

This scenario is fully covered in the blog: how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo....

In the next sections I will use the name DWC_API_Service_Test for the application and services. It is exactly the same as the HANA_API_Service_Test created in that blog, but points to the HANA Cloud instance within SAP Datasphere, as described in the next option.

Option 2: HDI container within SAP Datasphere

In this case the same blog (how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo...) is used as the baseline. However, because the project is deployed in the HANA Cloud instance within SAP Datasphere, there are some additional considerations:

- The BTP space needs to be connected to the SAP Datasphere tenant (formerly SAP Data Warehouse Cloud, DWC).

- The HDI container needs to be deployed in the HANA Cloud instance of that tenant.

- The HDI content (table and stored procedures) needs to become accessible from SAP DSP by adding roles to the project. These roles should provide the SELECT and SELECT METADATA privileges to read the table and the EXECUTE privilege to run the stored procedures.

All these considerations are explained in the blog: Develop on SAP Data Warehouse Cloud using SAP HANA Deployment Infrastructure (HDI), by nidhi.sawhney
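As a rough illustration of the third consideration, the sketch below generates the JSON for a role definition that grants the three privileges at schema level. The role name `CALL_HISTORY_ACCESS` is hypothetical, and the layout follows the usual `.hdbrole` shape (`role`, `name`, `schema_privileges`) as an assumption; the referenced blog remains the authority on the exact roles SAP Datasphere expects.

```python
import json


def build_hdbrole(role_name: str) -> dict:
    """Build a role definition granting read and execute access on the container schema.

    Assumption: the dictionary mirrors the common .hdbrole JSON layout.
    """
    return {
        "role": {
            "name": role_name,  # hypothetical role name, chosen for illustration
            "schema_privileges": [
                # SELECT and SELECT METADATA to read the table,
                # EXECUTE to run the stored procedures.
                {"privileges": ["SELECT", "SELECT METADATA", "EXECUTE"]}
            ],
        }
    }


role = build_hdbrole("CALL_HISTORY_ACCESS")
# The printed JSON could be saved as a .hdbrole file in the HDI project.
print(json.dumps(role, indent=2))
```

Granting at schema level keeps the sketch short; privileges could also be granted per object if the container holds artefacts that should stay hidden.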

API credentials

Once the API service is deployed in Cloud Foundry, it can be found in the BTP Cockpit, under the applications of the space. My application is named DWC_API_Service_Test-srv and appears as follows:

SAP BTP Cockpit - Applications

By opening the DWC_API_Service_Test-srv application, the API URL can be found in the Application Routes.

SAP BTP Cockpit - API URL

Apart from that, the user authentication details are needed. They are available in the service instances; in my case, this is the DWC_API_Service_Test-auth service:

SAP BTP Cockpit - Services Instances

By clicking on View Credentials (top-right), all the details are displayed. Only the first three parameters are needed: clientid, clientsecret, and url.

SAP BTP Cockpit - Service Credentials

With all this information we are ready to create a connection to the API in SAC.
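For readers who prefer to copy the values programmatically, here is a minimal sketch that picks the three needed fields out of the credentials JSON. The sample document and all its values are placeholders made up for illustration; only the key names clientid, clientsecret, and url come from the text above.

```python
import json

# Hypothetical excerpt of the JSON shown under "View Credentials";
# the values are placeholders, not real credentials.
SAMPLE_CREDENTIALS = """
{
  "clientid": "sb-example-client",
  "clientsecret": "example-secret",
  "url": "https://example.authentication.eu10.hana.ondemand.com",
  "xsappname": "example-app"
}
"""


def pick_connection_fields(raw_json: str) -> dict:
    """Return only the three fields needed for the SAC connection."""
    credentials = json.loads(raw_json)
    return {key: credentials[key] for key in ("clientid", "clientsecret", "url")}


fields = pick_connection_fields(SAMPLE_CREDENTIALS)
# Print the key names only, so the secret does not end up in a log.
print(sorted(fields))
```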

HTTP API Connection in SAP Analytics Cloud

In SAP Analytics Cloud I will create a new connection for HTTP API:

SAP Analytics Cloud - New Connection

The Data Service URL is the Application URL from the previous step.

For this API, the authentication type is OAuth 2.0 Client Credentials. The next three parameters are from the authentication service:

- OAuth Client ID = clientid

- Secret = clientsecret

- Token URL = the url value of the authentication service with /oauth/token appended

SAP Analytics Cloud - HTTP API connection details

After clicking OK, the connection should be successfully created.
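To make the connection settings more concrete, the following sketch builds the kind of token request an OAuth 2.0 Client Credentials flow sends. It is an illustration under assumptions, not a description of what SAC does internally: the host name, client ID, and secret are placeholders, and passing the client credentials in a Basic Authorization header is one common variant of the flow.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(auth_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) a client-credentials token request."""
    # Token URL = authentication URL with /oauth/token appended.
    token_url = auth_url.rstrip("/") + "/oauth/token"
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode("utf-8")).decode("ascii")
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


# Placeholder values for illustration only.
token_request = build_token_request(
    "https://example.authentication.eu10.hana.ondemand.com",
    "sb-example-client",
    "example-secret",
)
print(token_request.full_url)
```

Sending the request with `urllib.request.urlopen(token_request)` against a real tenant would return a JSON document; the bearer token is typically found under its `access_token` key.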

Multi-Actions in SAP Analytics Cloud

The next step is to create a new Multi-Action by clicking on Multi-Actions in the left menu. I named it DWC_API_Service_Test. Then I added an API step.

SAP Analytics Cloud - Multi-Action

A name should be given to the API step. In my case I used Stored_Procedure_API.

At the time of writing, only POST APIs are allowed in Multi-Actions. Therefore, I use the service act_register_call_with_param (see previous blog: how to create an API in Cloud Foundry to call Stored Procedures in SAP HANA Cloud, using the SAP Clo...).

In the API URL, the application URL is written with the addition of /catalog/

All parameters should be in the body:

    {
      "comment": "Calling from SAC",
      "name": "Hernan",
      "value": 1
    }

For the execution results, I selected “Synchronous Return” and saved the Multi-Action.
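The API step can also be reproduced outside SAC, which helps when testing the service. The sketch below builds the equivalent POST request with the same JSON body. Two things are assumptions here: that the action name act_register_call_with_param follows directly after /catalog/ in the path, and the host name and token, which are placeholders.

```python
import json
import urllib.request


def build_action_request(app_url: str, action: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request for the service action."""
    # Assumption: the action is exposed directly under /catalog/.
    url = f"{app_url.rstrip('/')}/catalog/{action}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same body as in the Multi-Action API step.
payload = {"comment": "Calling from SAC", "name": "Hernan", "value": 1}

# Placeholder host and token for illustration only.
action_request = build_action_request(
    "https://example-srv.cfapps.eu10.hana.ondemand.com",
    "act_register_call_with_param",
    "example-access-token",
    payload,
)
print(action_request.get_method(), action_request.full_url)
```

Keeping all parameters in the JSON body, rather than in the URL, matches how the step is configured in the Multi-Action.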

SAP Analytics Cloud - Multi-Action API Step

View in SAP Datasphere

In order to access the table CALL_HISTORY, the HDI container needs to be added to the SAP DSP space:

SAP Datasphere - Space Management

Once the HDI container is added, a graphical view can be created in the Data Builder. When creating the view, the table should be visible under the sources. I created an analytical view with VALUE as a measure in order to consume it from SAP Analytics Cloud.

SAP Datasphere - New Graphical View

After its deployment, it should be accessible from SAP Analytics Cloud.

Story in SAP Analytics Cloud

In SAP Analytics Cloud, I am going to create a basic story that connects to SAP Datasphere. After creating the new canvas, I added data from a data source, in this case live data from SAP Datasphere.

SAP Analytics Cloud - Data Source

In this example I only added a table to display the data and a Multi-Action trigger. The Multi-Action appears in the insert options as a Planning Trigger.

SAP Analytics Cloud - Insert Multi-Action

In the Multi-Actions settings I selected the Multi-Action name previously defined.

Then I added a table to display the View_CALL_HISTORY from SAP DSP.

SAP Analytics Cloud Story - Table configuration

Now, by clicking on the Multi-Action control, the API is triggered. Once its execution has finished, the data can be refreshed by clicking the Refresh button in the toolbar.

SAP Analytics Cloud story - Refresh data

Summary

This blog post showcases an example of how to trigger the execution of a HANA stored procedure via an API from SAP Analytics Cloud. The stored procedure can reside in SAP Datasphere as well as in an independent SAP HANA Cloud instance.

In this way, business users can execute logic in the backend directly from a report. Therefore, more complex use cases can be implemented. For example, Monte Carlo simulations leveraging the SAP HANA Predictive Analysis Library (PAL) could be triggered directly from SAP Analytics Cloud.

I want to thank ian.henry, maria.tzatsou2 and nekvas75 for all the discussions, shared experiences and received support in relation to this blog post!

Additional Resources

Check community resources and ask questions on
