After simulation, try out validation. Identity Pro...
11-23-2022

9:31 AM

Working with Identity Provisioning transformations requires some knowledge of JSON data format and JSONPath syntax. A good understanding of the system-specific user and group attributes is also a must.

But even if you feel confident in your expertise, managing transformations can be tough at times.

If you recognize yourself in these questions, keep reading. The Identity Provisioning service released a validate job to make your life easier. You can use it to test the attribute mapping of the entities and see if, how and which of them will be provisioned to the target system without modifying it. It is similar to the simulate job (released in July), which estimates the number of entities to be provisioned. But unlike it, the validate job only verifies their content, that is, the attributes.

By implementing validation along with simulation, Identity Provisioning (IPS) closes the loop with customers who were very much looking for solution to “dry run” their changes.

The Validate Job is displayed on the Jobs tab of the given source system in the Identity Provisioning admin console.

Note: This job is available only for Identity Provisioning tenants running on SAP Cloud Identity infrastructure. If your tenant is running on Neo environment, you won't be able to use it until you migrate first. See: Go for your quick win! Migrate Identity Provisioning tenants to SAP Cloud Identity infrastructure.

Choosing Run Now however does not trigger the job literally. It just opens a dialog box where you must import CSV (comma-separated values) files – one for users and/or one for groups, each of them containing a maximum of 10 entities.

The cool thing about it is that you don’t even need to configure the connection to your source and target systems to validate the transformations. You only need to enable them in the IPS admin console and link the source system to the target ones.

There is no doubt however that you have to make initial effort when creating the CSV input files. But if you get used to it, you’ll see how much it pays off.

Use a text editor, preferably Notepad or Notepad++.

Note: If you choose Microsoft Excel, be aware that it adds special characters, which IPS cannot escape. These characters will produce incorrect validation results.

The 1st row must contain the attribute names of the respective user or group entity as defined in the read transformation of the source system. These are the sourcePath values.

The next (up to 10) rows must contain the attribute values of particular users or groups. Each row lists the values of 1 particular user or group.

The number of attribute values (fields) must match the number of attribute names (headers) in the file. Otherwise, you will get an error when importing it and won't be able to proceed.

Needless to say, attributes and values must be comma separated. The file must be saved as .csv text file.

Tip: If the backend system provides the option to download users and groups in CSV files, you can take advantage and use it, like this one for example: Download a list of users in Azure Active Directory portal. But you need to ensure the content of the file fulfils the requirements above. It might take more time to adjust the file than create it on your own.

Let’s test user provisioning from MS Azure AD source to Identity Authentication (IAS) and SAP Commissions target systems using the default transformations of the three systems.

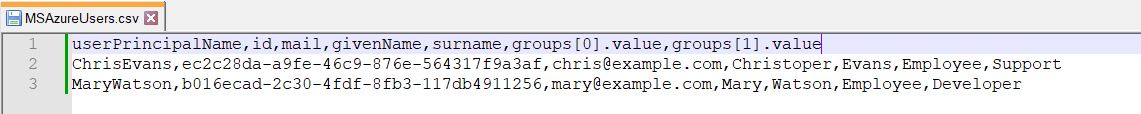

1. Create and save a CSV file containing 2 users with a few attributes (2nd and 3rd row).

2. Open the MS Azure AD source system and choose: Jobs -> Validate Job -> Run Now. Import the CSV file in the Import Users field and choose Validate.

3. You are informed that the result of a validate job is provided in a downloadable ZIP file. It contains two CSV files - one for each of the target systems connected to the source.

4. Open the files and view how the users defined in MS Azure AD would be provisioned to the respective target system.

Identity Authentication:

The 1st row contains the attribute names as defined in the write transformation of the target system. These are the targetPath values. The following attributes are listed:

The 2nd and the 3rd rows contain the attribute values of the users. The following values are listed:

Let’s now test user provisioning from SAP SuccessFactors to Identity Authentication when the read transformation is modified. A condition is set to skip reading of users without emails:

"condition": "($.email EMPTY false)",

This will ensure that only users with emails will be provisioned to IAS, where this is a required attribute.

1. Create and save a CSV file containing 3 users with a few attributes.

2. Open the SAP SuccessFactors source system and choose: Jobs -> Validate Job -> Run Now. Import the CSV file in the Import Users field and choose Validate.

3. You are informed that there are failed or skipped entities in the traces.log file.

4. Open the traces.log file and view what’s wrong.

The result shows that:

5. Open the .csv file and view how the users defined in SAP SuccessFactors would be provisioned to IAS.

Now, it’s your turn to play around. You must have come across lots of use cases in your work with IPS and can’t help but start testing.

Just one more thing, there is no right or wrong validation. As long as the validate job provides correct results, it is always successful even if it shows failed or skipped entities only.

But even if you feel confident in your expertise, managing transformations can be tough at times.

- “How do I map user or group attributes from source to target systems?”

- “If my users don’t have emails, can I map the email attribute to another one?”

- “Is my condition to provision only users that are members of a particular group feasible?”

If you recognize yourself in these questions, keep reading. The Identity Provisioning service has released a validate job to make your life easier. You can use it to test the attribute mapping of the entities and see whether, how, and which of them would be provisioned to the target system, without modifying that system. It is similar to the simulate job (released in July), which estimates the number of entities to be provisioned. Unlike the simulate job, however, the validate job verifies only the content of the entities, that is, their attributes.

By offering validation along with simulation, Identity Provisioning (IPS) closes the loop with customers who were looking for a way to “dry run” their changes.

How to Run

The Validate Job is displayed on the Jobs tab of the given source system in the Identity Provisioning admin console.

Note: This job is available only for Identity Provisioning tenants running on SAP Cloud Identity infrastructure. If your tenant runs on the Neo environment, you can't use the job until you migrate the tenant. See: Go for your quick win! Migrate Identity Provisioning tenants to SAP Cloud Identity infrastructure.

However, choosing Run Now does not actually start the job. It opens a dialog box where you import CSV (comma-separated values) files: one for users, one for groups, or both. Each file can contain a maximum of 10 entities.

The cool thing about it is that you don’t even need to configure the connection to your source and target systems to validate the transformations. You only need to enable them in the IPS admin console and link the source system to the target ones.

Admittedly, creating the CSV input files takes some initial effort. But once you get used to it, you'll see how much it pays off.

How to Create a CSV File

Use a text editor, preferably Notepad or Notepad++.

Note: If you choose Microsoft Excel, be aware that it adds special characters, which IPS cannot escape. These characters will produce incorrect validation results.

The 1st row must contain the attribute names of the respective user or group entity as defined in the read transformation of the source system. These are the sourcePath values.

- The attribute names use the JSONPath dot-notation.

- Sub-attributes of complex attributes are separated with ".", for example: name.familyName

- Arrays of simple attributes must have numeric index, for example: schemas[0]

- Arrays of complex attributes must have numeric index and sub-attributes separated with ".", for example: emails[0].value

- Extension schema attributes are separated from schema name with ":", for example: urn:ietf:params:scim:schemas:extension:enterprise:2.0:User:manager.value, where urn:ietf:params:scim:schemas:extension:enterprise:2.0:User is the schema name.
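To make the notation concrete, here is a minimal Python sketch that turns one header row and one value row into the nested entity they describe. It only illustrates the rules listed above and is not how IPS parses the file. The rule that an extension schema name is everything before the last ":" is an assumption inferred from the example, and the function names and sample values are made up.

```python
import json
import re

# One dot-separated path segment, optionally with a numeric index, e.g. "emails[0]"
SEGMENT = re.compile(r"^(?P<name>[^\[\]]+)(?:\[(?P<index>\d+)\])?$")


def parse_header(header):
    """Turn a CSV header such as "emails[0].value" into (name, index) steps."""
    steps = []
    if ":" in header:
        # Assumption: everything before the last ":" is the extension schema name
        schema, header = header.rsplit(":", 1)
        steps.append((schema, None))
    for segment in header.split("."):
        match = SEGMENT.match(segment)
        if match is None:
            raise ValueError(f"unsupported path segment: {segment!r}")
        index = match.group("index")
        steps.append((match.group("name"), None if index is None else int(index)))
    return steps


def set_path(entity, steps, value):
    """Write value into nested dicts/lists following the parsed steps."""
    node = entity
    for position, (name, index) in enumerate(steps):
        is_last = position == len(steps) - 1
        if index is None:
            if is_last:
                node[name] = value
            else:
                node = node.setdefault(name, {})
            continue
        items = node.setdefault(name, [])
        items.extend([None] * (index + 1 - len(items)))  # pad the array up to index
        if is_last:
            items[index] = value
        else:
            if items[index] is None:
                items[index] = {}
            node = items[index]
    return entity


def row_to_entity(headers, values):
    """Build one nested user or group entity from a header row and a value row."""
    entity = {}
    for header, value in zip(headers, values):
        set_path(entity, parse_header(header), value)
    return entity


headers = [
    "name.givenName",
    "emails[0].value",
    "schemas[0]",
    "urn:ietf:params:scim:schemas:extension:enterprise:2.0:User:manager.value",
]
values = ["Chris", "chris@example.com",
          "urn:ietf:params:scim:schemas:core:2.0:User", "mary"]
print(json.dumps(row_to_entity(headers, values), indent=2))
```

The schema name becomes a top-level key, complex attributes become nested objects, and indexed attributes become arrays.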

The next rows (up to 10) must contain the attribute values, with each row listing the values of one particular user or group.

The number of attribute values (fields) must match the number of attribute names (headers) in the file. Otherwise, you will get an error when importing it and won't be able to proceed.

Needless to say, attributes and values must be comma-separated, and the file must be saved as a .csv text file.
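Before importing, you could check a file against these rules with a small script. The following Python sketch covers only the rules stated here: a header row, at most 10 entities, and the same number of fields in every row. The function name is hypothetical, and ignoring completely blank lines is an assumption. The csv module treats a quoted value such as "Smith, John" as one field; whether IPS does the same is not covered here.

```python
import csv
import io

MAX_ENTITIES = 10  # per-file limit of the validate job, as stated above


def check_validate_csv(text):
    """Return a list of problems in a validate-job input file; [] means it looks fine."""
    # Assumption: completely blank lines are ignored rather than counted as entities
    rows = [row for row in csv.reader(io.StringIO(text)) if row]
    if not rows:
        return ["the file has no header row"]
    header, entities = rows[0], rows[1:]
    problems = []
    if len(entities) > MAX_ENTITIES:
        problems.append(f"{len(entities)} entities, but at most {MAX_ENTITIES} are allowed")
    for number, row in enumerate(entities, start=1):
        if len(row) != len(header):
            problems.append(f"entity {number}: {len(row)} fields, expected {len(header)}")
    return problems


sample = (
    "userName,name.givenName,emails[0].value\n"
    "chris,Chris,chris@example.com\n"
    "mary,Mary\n"  # one field missing on purpose
)
print(check_validate_csv(sample))
# prints ['entity 2: 2 fields, expected 3']
```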

Tip: If the backend system lets you download users and groups as CSV files, you can take advantage of this. For example: Download a list of users in Azure Active Directory portal. However, make sure that the content of the file fulfils the requirements above. Adjusting such a file might take more time than creating one on your own.

Use Case 1 – Validate Default Transformations

Let’s test user provisioning from MS Azure AD source to Identity Authentication (IAS) and SAP Commissions target systems using the default transformations of the three systems.

1. Create and save a CSV file containing 2 users with a few attributes (in the 2nd and 3rd rows).

2. Open the MS Azure AD source system and choose: Jobs -> Validate Job -> Run Now. Import the CSV file in the Import Users field and choose Validate.

3. You are informed that the result of the validate job is provided in a downloadable ZIP file. It contains two CSV files, one for each target system connected to the source.

4. Open the files and view how the users defined in MS Azure AD would be provisioned to the respective target system.
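If you want to inspect the downloaded result programmatically instead of opening each file, a minimal Python sketch could read every CSV file in the ZIP archive into rows keyed by the targetPath headers. The function name is hypothetical, the names of the files inside the archive are not assumed, entries that are not CSV files are skipped, and UTF-8 encoding is an assumption. The demo builds a made-up archive in memory so that the snippet runs on its own; whether the real archive also contains a log file is not stated here.

```python
import csv
import io
import zipfile


def read_validation_result(zip_file):
    """Return {csv file name: list of rows as dicts keyed by targetPath}.

    zip_file can be a path or a binary file object.
    """
    results = {}
    with zipfile.ZipFile(zip_file) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".csv"):
                continue  # skip anything that is not a CSV result file
            # Assumption: UTF-8, with or without a byte order mark
            text = archive.read(name).decode("utf-8-sig")
            results[name] = list(csv.DictReader(io.StringIO(text)))
    return results


# Demo with an in-memory archive; file names and contents are made up
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("target-ias.csv", "userName,emails[0].value\nchris,chris@example.com\n")
    archive.writestr("traces.log", "...")
buffer.seek(0)
for name, rows in read_validation_result(buffer).items():
    print(name, rows)
```

With the real download, pass the path of the saved ZIP file to read_validation_result instead of the in-memory buffer.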

Identity Authentication:

SAP Commissions:

The 1st row contains the attribute names as defined in the write transformation of the target system. These are the targetPath values. The following attributes are listed:

- Required and optional attributes supported in the respective write transformation for which a value is provided (the group[0].value and group[1].value attributes are listed only in the SAP Commissions validation result, as they are not supported for the user resource in the IAS write transformation).

- Optional attributes for which a default value is provided in the transformation (externalId in SAP Commissions)

- Attributes mapped to constants (urn:ietf:params:scim:schemas:extension:sap:2.0:User:mailVerified in IAS)

The 2nd and the 3rd rows contain the attribute values of the users. The following values are listed:

- Values of required and optional attributes supported in the respective write transformation, which are read from the source system (Chris and Mary’s groups Employee, Support and Developer are listed only in the SAP Commissions validation result, as they are not supported for the user resource in the IAS write transformation).

- Default values of optional attributes which are provided in the transformation (Chris and Mary do not have a displayName in MS Azure AD, but as this attribute has "defaultValue": "" in SAP Commissions, an empty value, shown as double commas, appears in the result).

- Constant values ("constant": false, "constant": "disabled")
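The following Python sketch mimics, in a heavily simplified way, how these kinds of values end up in a result row. The mapping list and function names are hypothetical: they use plain keys instead of JSONPath, and they combine a defaultValue mapping (as in SAP Commissions) with a constant mapping (as in IAS) in one list. This is not the IPS transformation engine, but it shows why an empty default value appears as two consecutive commas.

```python
import csv
import io

# Hypothetical, simplified write mappings: plain keys instead of JSONPath
MAPPINGS = [
    {"sourcePath": "userName", "targetPath": "userName"},
    {"sourcePath": "displayName", "targetPath": "displayName",
     "optional": True, "defaultValue": ""},
    {"constant": False, "targetPath": "mailVerified"},
]


def apply_mappings(user, mappings):
    """Build the target entity for one user from a list of mappings."""
    target = {}
    for mapping in mappings:
        if "constant" in mapping:
            # Constants ignore the source entity entirely
            target[mapping["targetPath"]] = mapping["constant"]
            continue
        if mapping["sourcePath"] in user:
            target[mapping["targetPath"]] = user[mapping["sourcePath"]]
        elif "defaultValue" in mapping:
            target[mapping["targetPath"]] = mapping["defaultValue"]
        elif not mapping.get("optional"):
            raise ValueError(f"required attribute {mapping['sourcePath']!r} is missing")
        # An optional attribute with no value and no default is not listed at all
    return target


def to_csv(entities):
    """Write entities as CSV; columns follow the order in which attributes appear."""
    columns = []
    for entity in entities:
        for key in entity:
            if key not in columns:
                columns.append(key)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=columns, restval="", lineterminator="\n")
    writer.writeheader()
    for entity in entities:
        # Render booleans the way JSON does (false/true)
        writer.writerow({k: str(v).lower() if isinstance(v, bool) else v
                         for k, v in entity.items()})
    return out.getvalue()


users = [{"userName": "chris"}, {"userName": "mary"}]  # no displayName in the source
print(to_csv([apply_mappings(user, MAPPINGS) for user in users]), end="")
# prints:
# userName,displayName,mailVerified
# chris,,false
# mary,,false
```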

Use Case 2 – Validate Modified Transformations

Let’s now test user provisioning from SAP SuccessFactors to Identity Authentication with a modified read transformation. A condition is added to skip users without emails:

"condition": "($.email EMPTY false)",

This ensures that only users with emails are provisioned to IAS, where email is a required attribute.

1. Create and save a CSV file containing 3 users with a few attributes.

2. Open the SAP SuccessFactors source system and choose: Jobs -> Validate Job -> Run Now. Import the CSV file in the Import Users field and choose Validate.

3. You are informed that failed or skipped entities are listed in the traces.log file.

4. Open the traces.log file and view what’s wrong.

The result shows that:

- User 1 - John Smith is skipped, because he doesn't have an email address and therefore does not match the condition.

- User 2 - Although Julie Armstrong matches the condition, she doesn't have the required userId and therefore reading her user failed.

5. Open the .csv file and view how the users defined in SAP SuccessFactors would be provisioned to IAS.

- User 3 - Emily Brown is the only user that will be provisioned successfully to IAS.
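This outcome can be reproduced with a short Python sketch that applies an EMPTY condition and then a required-attribute check to each user. The function names, the attribute names firstName and lastName, the sample values, and the evaluation order (condition first, then required attributes) are assumptions for illustration. The sketch does not reproduce the IPS condition language beyond this single EMPTY check.

```python
def is_empty(value):
    """Assumption: a missing attribute, None, "" and [] all count as empty."""
    return value is None or value == "" or value == []


def matches_empty_condition(user, attribute, expect_empty):
    """Sketch of a condition of the form "($.<attribute> EMPTY <true|false>)"."""
    return is_empty(user.get(attribute)) == expect_empty


def triage(users, condition_attribute="email", required=("userId",)):
    """Sort users into skipped, failed and provisioned.

    Assumption: the condition is checked before the required attributes.
    """
    outcome = {"skipped": [], "failed": [], "provisioned": []}
    for user in users:
        label = f"{user.get('firstName', '')} {user.get('lastName', '')}".strip()
        if not matches_empty_condition(user, condition_attribute, expect_empty=False):
            outcome["skipped"].append(label)  # read condition not met
        elif any(is_empty(user.get(name)) for name in required):
            outcome["failed"].append(label)  # required attribute missing
        else:
            outcome["provisioned"].append(label)
    return outcome


# Hypothetical attribute values for the three users in the example
users = [
    {"userId": "jsmith", "firstName": "John", "lastName": "Smith"},
    {"email": "julie@example.com", "firstName": "Julie", "lastName": "Armstrong"},
    {"userId": "ebrown", "email": "emily@example.com",
     "firstName": "Emily", "lastName": "Brown"},
]
print(triage(users))
# prints {'skipped': ['John Smith'], 'failed': ['Julie Armstrong'], 'provisioned': ['Emily Brown']}
```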

Now, it’s your turn to play around. You must have come across lots of use cases in your work with IPS and can’t help but start testing.

Just one more thing: there is no right or wrong validation. As long as the validate job provides correct results, it is always successful, even if it shows only failed or skipped entities.
