Bringing the power of Optimization Libraries to SA...


10-18-2022

11:18 AM

Do you wonder whether mathematical optimization software, such as the MIP codes from FICO Xpress or Gurobi, can be easily deployed on SAP BTP runtimes? This two-part blog walks through quick and easy steps to deploy on 1. SAP AI Core via SAP AI Launchpad and 2. SAP Kyma (this blog post).

Steps to create the docker are the same in the blog post on deploying to SAP AI Core.

Now which deployment option is best suited depends very much on the use-case and how the optimization results results need to be integrated in the business process and the overall technology landscape. I will provide some guidelines at the end of the blog but the choice of deployment option would need a wider consideration depending on the use-case you have in mind.

Mathematical Optimization techniques are widely needed to solve a wide range of problems in decision making and finding optimal solution(s) when many exist.Besides the many real world complex problems that depend on Mathematical Optimization, these techniques are also increasingly being used to enhance Machine Learning solutions to provide explainability and interpretability as shown by for example

These techniques are useful when for example it is not sufficient to classify credit scores or health scores into good or bad but also provide what can be changed to make the classification go from bad to good.

SAP Products like SAP Integrated Business Planning incorporate optimization in the supply chain solutions. For other problems where you need to extend the solutions and embed ML or Optimization, this blog post walks through the steps of easily installing third-party libraries on BTP so these solutions can be integrated with the enterprise solutions which needs these capabilities.

Incase the usecase has optimization models which are expressible with small code foot print, using the Kyma Serverless functions might be an option too. For this approach you can follow the blog post Deploying HANA ML with SAP Kyma Serverless Functions

and add (or replace instead of hana-ml) your chosen optimization library in the function dependencies.

To go through the steps described in the blog make sure you already have the following

SAP Kyma provides many equivalent capabilities via SAP Kyma Cockpit or programmatically via kubectl (as described here).In this blog post I will primarily use SAP Kyma Cockpit as it is easier to get started with minimal coding effort.

The subsequent step is to include the development artifacts into an existing project structure, for which the kubectl way might be better suited. Since this step is highly project dependent it is not covered in this blog post.

Follow the steps to create the docker image as described in blog post on deploying to SAP AI Core.

For example I had previously pushed the docker image to docker.io. I can test the image locally by pulling it from it. I will use the same image to now deploy the optimization example on SAP Kyma which is at sapgcoe/sap-optiml-example1.0.

The docker image has 4 files and the rest are installed via pip, please refer to the blog post on deploying to SAP AI Core for the code snippets for :

Here we would like to use our own docker image, hence we will follow through the steps of creating a full deployment and not a Serverless Function in Kyma.

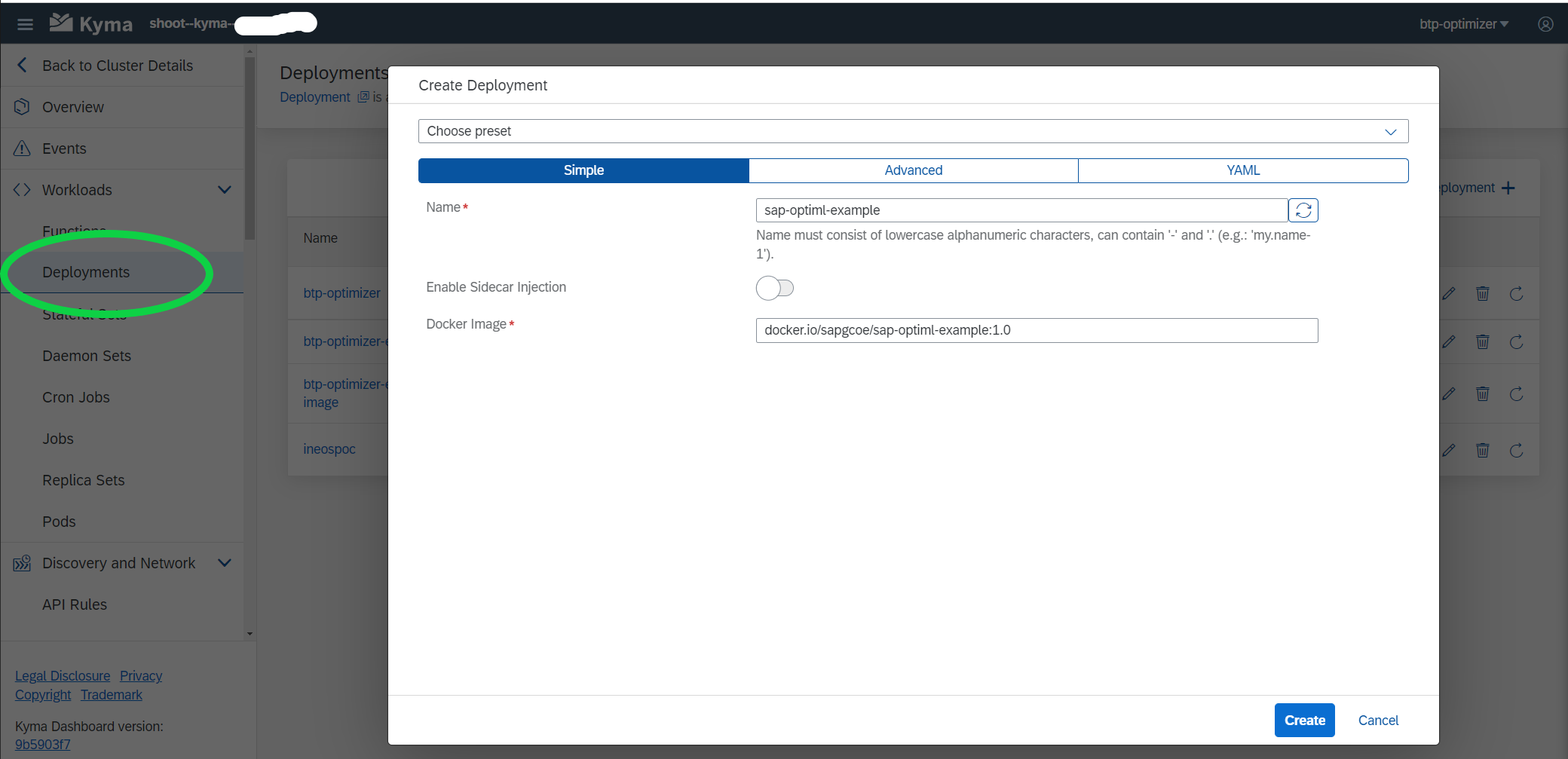

On the Kyma console go to Deployments and press Create Deployment. We will start with Simple from the Create Deployment pop-up

Now click on YAML in the above pop-up and add the commands to specify what should run in the docker (it is the same command we gave in the workflow deployment for SAP AI Core Deployment)

Code snippet to add after image in the yaml file as shown above

If the Docker image is public you would not need to specify a docker secret. If it Private you would need to add Docker Secret with kubectl create secret docker-registry. You can either patch to default to above secret when pulling from docker repo or specify the secret in the yaml file above with :

Once you click create you will see the deployment running as below

Once we have a deployment running as above we will now create a service which is then subsequently exposed via API Rule to be used by client code. In the Kyma Cockpit go to Discovery and Network -> Services and click on Create Service. Then edit the pop-up yaml to specify the app, which is the name of the deployment created in Step above, in our example sap-optiml-example. You need to specify the protocol to TCP. You can keep the default port as shown in the Service or specify your own for example 9001

Now that we have a Service we are at the last step of deployment which is to create an API Rule. This creates the url that client code can call to run the Optimization. You can Create API Rule from above Service screen or go to Discovery and Network -> API Rules and Click on Create API Rule

For the sub-domain specify a name to make this API uniquon your cluster. Currently our app.py code in the Docker image exposes the functions via GET only. You can specify POST if your functions would need POST.

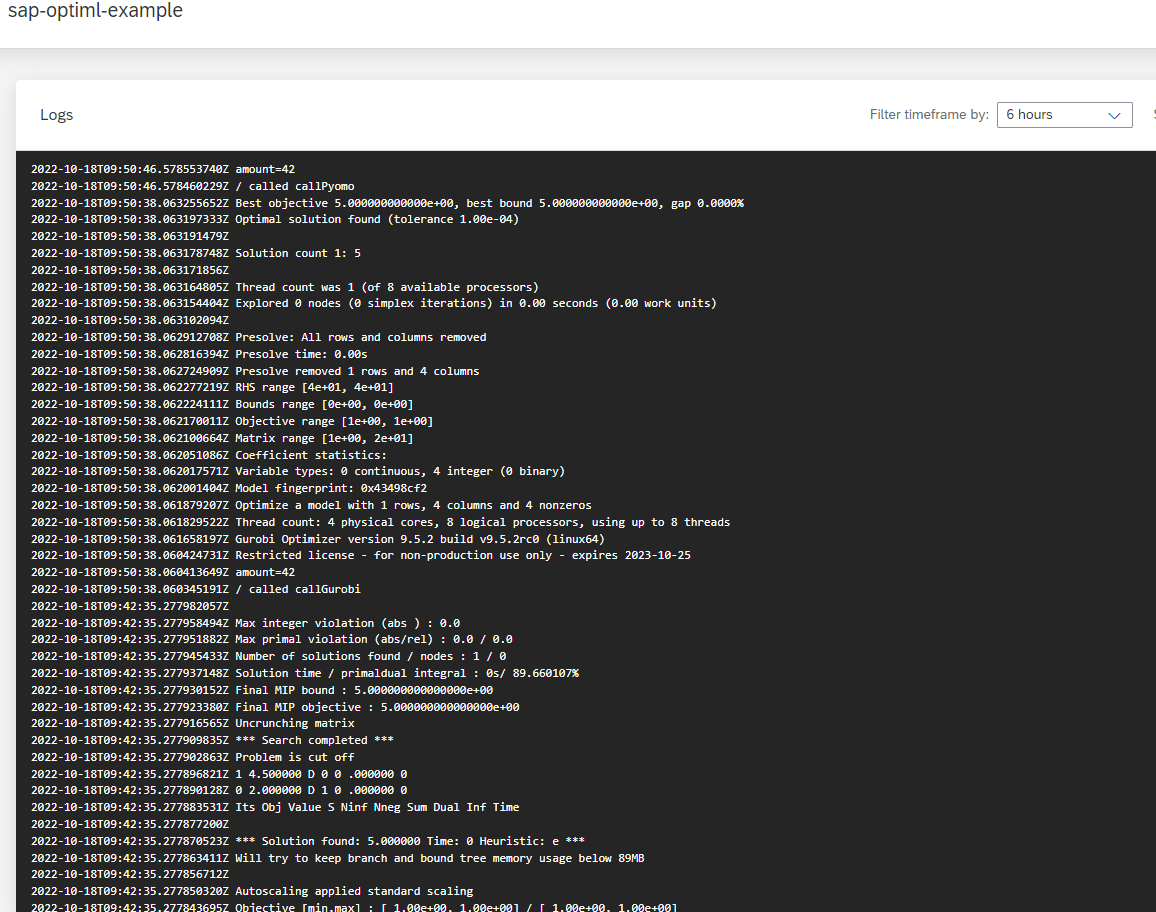

One the API rule is created you can test the API as usual for example via Postman call or since we have only implemented GET and not added authentication mechanism, you can open the url created via the API in the browser and test the endpoints:

You can similarly test the other 2 endpoints /v1/callGurobi or /call/Pyomo. You will get the same answer and the logs will give details of the corresponding execution

When to use SAP Kyma for Optimization Deployment

When to use SAP Kyma for Optimization loads depends heavily on your use-case. Some guidelines on when this can make the overall integration easier :

This blog post and the one on deploying on SAP AI Core show how easily one can deploy Optimization loads on SAP BTP Runtimes. The dockerized approach facilitates the testing of optimization functionality specific to your use-case and enabling the deployment with ease into a productive landscape.

Happy Optimizing development for your OptiML enabled applications on SAP BTP!

The steps to create the Docker image are the same as in the blog post on deploying to SAP AI Core.

Which deployment option is best suited depends very much on the use case and on how the optimization results need to be integrated into the business process and the overall technology landscape. I will provide some guidelines at the end of the blog, but the choice of deployment option needs wider consideration depending on the use case you have in mind.

Background

Mathematical optimization techniques are needed to solve a wide range of decision-making problems and to find the optimal solution(s) when many exist. Besides the many complex real-world problems that depend on mathematical optimization, these techniques are also increasingly used to enhance machine learning solutions with explainability and interpretability, as shown, for example, by:

- Interpretable AI and develop more accurate machine learning models with MIP

- Use cases on finding counterfactuals, i.e. options that could make an ML classifier classify differently: Counterfactual Analysis for Functional Data

These techniques are useful when, for example, it is not sufficient to classify credit scores or health scores as good or bad, and you also need to show what can be changed to move the classification from bad to good.

SAP products such as SAP Integrated Business Planning incorporate optimization in their supply chain solutions. For other problems where you need to extend the solutions and embed ML or optimization, this blog post walks through the steps of easily installing third-party libraries on BTP, so that these solutions can be integrated with the enterprise applications that need these capabilities.

If your use case has optimization models that can be expressed with a small code footprint, Kyma Serverless Functions might be an option too. For this approach, you can follow the blog post Deploying HANA ML with SAP Kyma Serverless Functions and add your chosen optimization library to the function dependencies (in addition to, or instead of, hana-ml).
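Here is a rough sketch of that alternative: a Kyma Function resource that lists an optimization library in its dependencies. It assumes the serverless.kyma-project.io/v1alpha2 schema and a python312 runtime. Check what your Kyma version supports, because older versions used a different layout. The function name and the model code are hypothetical placeholders. Any solver binaries and licenses your library needs must also be available to the function.

```yaml
apiVersion: serverless.kyma-project.io/v1alpha2
kind: Function
metadata:
  name: optim-function          # hypothetical name
  namespace: default            # assumption: replace with your namespace
spec:
  runtime: python312            # assumption: check the runtimes your Kyma version offers
  source:
    inline:
      # requirements.txt-style list: add your optimization library here (or swap it for hana-ml)
      dependencies: |
        pyomo
      source: |
        import pyomo.environ as pyo

        def main(event, context):
            # Hypothetical placeholder: build and solve your (small) model here
            model = pyo.ConcreteModel()
            model.x = pyo.Var(bounds=(0, 10))
            return "model created"
```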

Prerequisites

To go through the steps described in this blog, make sure you already have the following:

- Docker Desktop for building docker images

- A BTP account with the SAP Kyma runtime set up, as described in Create Kyma Environment on BTP. If you are new to Kyma, you can start with the SAP Discovery Mission.

SAP Kyma offers many capabilities either through the SAP Kyma Cockpit or programmatically through kubectl (as described here). In this blog post I will primarily use the SAP Kyma Cockpit, as it is easier to get started with minimal coding effort.

The next step would be to include the development artifacts in an existing project structure, for which the kubectl approach might be better suited. Since this step is highly project-dependent, it is not covered in this blog post.

Create the Docker Image

Follow the steps to create the Docker image as described in the blog post on deploying to SAP AI Core.

For example, I had previously pushed the Docker image to docker.io, so I can test it locally by pulling it from there. I will now use the same image, sapgcoe/sap-optiml-example1.0, to deploy the optimization example on SAP Kyma.

The Docker image contains 4 files, and everything else is installed via pip. Please refer to the blog post on deploying to SAP AI Core for the code snippets of:

- Dockerfile

- requirements.txt

- optimizer.py (Sample code for 3 optimization libraries: FICO Xpress, Gurobi, Pyomo)

- app.py (Sample Flask server code that calls the sample code in optimizer.py)

Create Deployment on Kyma

Since we want to use our own Docker image, we will go through the steps of creating a full Deployment rather than a Serverless Function in Kyma.

On the Kyma console, go to Deployments and press Create Deployment. We will start with Simple in the Create Deployment pop-up.

Create Kyma Deployment

Now click YAML in the pop-up above and add the commands that specify what should run in the container (the same command we used in the workflow for the SAP AI Core deployment).

Specify how to run the Docker Image

Code snippet to add after the image entry in the YAML file, as shown above:

```yaml
command:
  - /bin/sh
  - '-c'
args:
  - > # Use the gunicorn runtime to serve the Flask object "app" from app.py
    set -e && echo "Starting" && gunicorn --chdir /app app:app -b 0.0.0.0:9001
ports:
  - containerPort: 9001
    protocol: TCP
```

If the Docker image is public, you do not need to specify a Docker secret. If it is private, you need to create a Docker registry secret with kubectl create secret docker-registry. You can then either patch the default service account so that it uses this secret when pulling from the Docker repository, or specify the secret in the YAML file above with:

```yaml
imagePullSecrets:
  - name: <your docker repo secret>
```

Once you click Create, you will see the deployment running as below.

Kyma Deployment Running
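A complete Deployment equivalent to the steps above could look roughly like the manifest below. You could also apply it with kubectl apply -f. This is a sketch under assumptions: the namespace, the secret name my-docker-secret and the image placeholder are hypothetical. The resources and readinessProbe sections are optional additions that this post does not cover. Note that imagePullSecrets belongs at the pod level, next to containers, and not inside the container entry.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sap-optiml-example
  namespace: default                  # assumption: replace with your namespace
  labels:
    app: sap-optiml-example
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sap-optiml-example         # must match the pod template labels below
  template:
    metadata:
      labels:
        app: sap-optiml-example       # the Service selects pods by this label
    spec:
      # Only needed for a private registry; remove for a public image
      imagePullSecrets:
        - name: my-docker-secret      # hypothetical secret name
      containers:
        - name: sap-optiml-example
          image: <your-docker-image>  # placeholder for the image built in the AI Core blog post
          command:
            - /bin/sh
            - '-c'
          args:
            - >
              set -e && echo "Starting" && gunicorn --chdir /app app:app -b 0.0.0.0:9001
          ports:
            - containerPort: 9001
              protocol: TCP
          # Optional, illustrative values: size these for your optimization models
          resources:
            requests:
              cpu: 500m
              memory: 512Mi
            limits:
              cpu: "2"
              memory: 2Gi
          # Optional: use the /v1/status endpoint of app.py as a readiness check
          readinessProbe:
            httpGet:
              path: /v1/status
              port: 9001
            initialDelaySeconds: 5
            periodSeconds: 10
```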

Create Service on Kyma

Once the deployment is running as above, we create a Service, which is subsequently exposed via an API Rule for use by client code. In the Kyma Cockpit, go to Discovery and Network -> Services and click Create Service. Then edit the pop-up YAML to specify the app, which is the name of the deployment created in the step above (in our example, sap-optiml-example). Set the protocol to TCP. You can keep the default port shown in the Service or specify your own, for example 9001.

Create Kyma Service for Deployment

When you click Create, you will see a Service like this:

Kyma Service Created
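The Service created in the pop-up corresponds roughly to the manifest below. It is a sketch that assumes the default namespace and pods labeled app: sap-optiml-example, the label a Deployment named sap-optiml-example would typically carry. The targetPort must match the containerPort 9001 that gunicorn binds to.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sap-optiml-example
  namespace: default            # assumption: same namespace as the Deployment
  labels:
    app: sap-optiml-example
spec:
  selector:
    app: sap-optiml-example     # routes traffic to pods carrying this label
  ports:
    - name: http
      protocol: TCP
      port: 9001                # port exposed by the Service (referenced by the API Rule)
      targetPort: 9001          # containerPort that gunicorn listens on
```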

Create API Rule on Kyma to expose the Optimization Functionality

Now that we have a Service, the last step of the deployment is to create an API Rule. This creates the URL that client code can call to run the optimization. You can create the API Rule from the Service screen above, or go to Discovery and Network -> API Rules and click Create API Rule.

Create Kyma API Rule

For the sub-domain, specify a name that makes this API unique on your cluster. Currently, the app.py code in the Docker image exposes its functions via GET only; you can also select POST if your functions need it.
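An equivalent API Rule written as YAML might look like this. It is a sketch that assumes the gateway.kyma-project.io/v1beta1 schema. Newer Kyma releases use a revised APIRule version with different fields, so check which version your cluster serves. The cluster domain is a placeholder. The noop handler means no authentication, which matches the GET-only, unauthenticated test setup in this post, but it is not suitable for production.

```yaml
apiVersion: gateway.kyma-project.io/v1beta1
kind: APIRule
metadata:
  name: sap-optiml-example
  namespace: default                                # assumption: same namespace as the Service
spec:
  gateway: kyma-system/kyma-gateway
  host: sap-optiml-example.<your-cluster-domain>    # sub-domain must be unique on your cluster
  service:
    name: sap-optiml-example
    port: 9001                                      # the Service port, not necessarily the container port
  rules:
    - path: /.*
      methods: ["GET"]                              # add POST if your endpoints need it
      accessStrategies:
        - handler: noop                             # no authentication: for testing only
```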

Test the Deployment

Once the API Rule is created, you can test the API as usual, for example with a Postman call. Since we have only implemented GET and have not added an authentication mechanism, you can also open the URL created for the API in the browser and test the endpoints:

- /v1/status : This should return "Container is up & running" as specified in app.py

- /v1/callXpress?amount=42 : This returns {"dime":1,"nickel":1,"penny":2,"quarter":1}

Call Optimizer for given amount

More details are available in the Kyma Cockpit logs for the pods in this deployment.

- If we now test the /v1/callOptimizer API endpoint, it returns an error because the environment variable for the default optimizer, which app.py requires, is not yet defined. To define it, open the deployment YAML and edit it to add the env variable as follows (here we use Xpress as the default; you can also use 'Gurobi' or 'Pyomo'):
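The env snippet itself is not reproduced in this post. As a rough sketch of its shape only, an env entry in the container spec of the Deployment might look like the following. DEFAULT_OPTIMIZER is a hypothetical name, so use the variable name that app.py actually reads.

```yaml
# Fragment for the container entry of the Deployment (same level as image, command, args)
env:
  - name: DEFAULT_OPTIMIZER   # hypothetical name: use the variable app.py actually reads
    value: "Xpress"           # or "Gurobi" / "Pyomo"
```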

You can similarly test the other 2 endpoints, /v1/callGurobi and /v1/callPyomo. You will get the same answer, and the logs will show details of the corresponding execution.

Test API Endpoints

When to use SAP Kyma for Optimization Deployment

Whether to use SAP Kyma for optimization workloads depends heavily on your use case. Some guidelines on when it can make the overall integration easier:

- You need to embed optimization workloads in events from SAP applications: you can then use the event subscription functionality in SAP Kyma to trigger the workload.

- You need to scale optimization workloads dynamically, for example when there are periods in which many optimization runs need to execute.

- You need to specify the compute resources for the Optimization jobs

- You have other workloads on Kyma and would like to manage intelligence-enabled apps from a central cockpit.
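For the scaling and compute-resource points above, here is a minimal sketch of a standard Kubernetes HorizontalPodAutoscaler (autoscaling/v2) for the deployment. It assumes the deployment name sap-optiml-example and a container that declares CPU requests, because utilization is measured relative to them. The replica bounds and the target utilization are illustrative values, not recommendations.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: sap-optiml-example
  namespace: default                # assumption: same namespace as the Deployment
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sap-optiml-example        # the Deployment whose replica count is adjusted
  minReplicas: 1
  maxReplicas: 5                    # illustrative upper bound for busy periods
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70    # scale out when average CPU use exceeds 70% of requests
```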

Conclusion

This blog post and the one on deploying to SAP AI Core show how easily optimization workloads can be deployed on SAP BTP runtimes. The dockerized approach makes it easy to test optimization functionality specific to your use case and then deploy it into a productive landscape.

Happy optimizing with your OptiML-enabled applications on SAP BTP!
