HANA Workload Management deep dive part I


jgleichmann


09-08-2022

4:49 AM

last updated: 2023-04-11 10:15 CEST

We all know that HANA needs a lot of system resources due to its in-memory design. If the production systems are not monitored well, a system can get overloaded by overly parallelized applications, a memory leak, or an SQL statement that was not restrictive enough, ending up in an OOM (out of memory) dump. This behavior is nothing special and can, and will, happen to any system. It is not limited to user workload: system workload such as delta merges, partitioning and consistency checks consumes resources too, and can be controlled as well.

There are several automatic mechanisms that are triggered by threshold events. Other actions are managed by dynamic workload management or parameters.

How can you manage it?

To handle the workload of a system correctly,

you have to understand how HANA manages its resources (part I),

how you can identify bottlenecks (part II) and

how to configure the right parameters / workload classes (part III).

This can save money if you previously solved such problems with more hardware, and it helps you avoid bottlenecks for your important workload in peak situations.

HANA workload management deep dive part II

HANA workload management deep dive part III

Workload - holistic view

Every system should be sized carefully regarding CPU and memory resources, and it should be monitored frequently for database growth and CPU peaks. To understand how workload management works, you first have to know how the CPU resources are treated internally. On the Intel platform, which is the most widespread one, you have 2 threads per physical core when Hyper-Threading (HT) is enabled; otherwise you have only one. This also applies to the hyperscalers. SMT (simultaneous multi-threading) on IBM Power (and on the non-Intel servers in the IBM Cloud) depends on the setup. With Power9 you normally have 8 threads per core, and with Power10 you have 4 when you want to run HANA workload.

But don't get confused by these threads. If one thread of a core is 100% utilized by pure calculation load, the other thread(s) cannot add capacity beyond this 100%. More threads per core are only useful if the running thread is interrupted by waits for memory, network or disk access. This means that a pure CPU calculation load with a lot of threads is handled best by more physical cores rather than by more logical threads. If the physical core is overloaded, you get wait events. In the end, HT only helps if there are unused processor cycles due to such interrupts. Lightly utilized systems, or workloads that are not highly multi-threaded, may not benefit from enabling SMT. It always depends on your workload!

Intel: has more physical cores (Hyper-Threading gains about 15% performance on average, depending on the load)

Power: has a better single thread performance due to the higher clock frequency and higher memory bandwidth

Source : hardwareinside.de

OK, now we know more about cores and threads. But how does HANA handle the CPU resources?

To get an overview you can run the "infrastructure overview" script from the SQL collection (note 1969700).

You will get something like this:

HOST  CPU_CLOCK CORES THREADS SOCKETS CPU_MANUFACTURER CPU_MODEL

===== ========= ===== ======= ======= ================ ===============================================

host1 2693      96    192     4       GenuineIntel     Intel(R) Xeon(R) Platinum 8280M CPU @ 2.70GHz

One socket means one NUMA node. Each NUMA node has its own attached memory, connected via the bus system. The NUMA scoring is also an important aspect and has a big influence on your performance. Pay attention when you are running your systems virtualized!

In our example we have 96 cores with HT on => 192 threads.

96(cores) / 4(sockets) = 24

This means we have 24 cores per socket / NUMA node, resulting in 48 threads per node.
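The arithmetic above can be sketched in a few lines of Python. This is only an illustration using the values of the example host; the class and property names are made up for this sketch and are not part of any SAP tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuTopology:
    """Hypothetical container for the values shown in the infrastructure overview."""
    cores: int
    threads: int
    sockets: int

    @property
    def threads_per_core(self) -> int:
        # 2 with Hyper-Threading enabled on Intel, 1 without
        return self.threads // self.cores

    @property
    def cores_per_socket(self) -> int:
        # one socket is treated as one NUMA node in this example
        return self.cores // self.sockets

    @property
    def threads_per_socket(self) -> int:
        return self.threads // self.sockets


# values taken from the example output for host1
host1 = CpuTopology(cores=96, threads=192, sockets=4)
print(host1.threads_per_core, host1.cores_per_socket, host1.threads_per_socket)
```

For the example host this gives 2 threads per core, 24 cores per NUMA node and 48 threads per NUMA node.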

HANA detects the visible CPUs, i.e. the logical cores, at every startup. Changes to the topology must not be published from the OS to HANA while it is running (IBM PPC: /proc/powerpc/topology_updates should be set to off). Otherwise the DB can crash due to NUMA changes.

If you want to know more about NUMA, its possibilities and how to analyze and tune it, please have a look at the documentation.

The default_statement_concurrency_limit is set to 48; therefore a single application request may consume up to 25% of the available CPU threads in our scenario (192 threads).

If, however, the application submits 4 such requests via 4 different sessions simultaneously, this workload may easily exhaust the CPU resources. This illustrates the importance of finding the right workload management balance for your individual system; there is no "one-configuration-fits-all" approach and you may therefore need to change the workload management related configuration parameters to fit your own specific application requirements. In the given scenario, for example, the solution may be to further decrease the concurrency degree of individual database requests by adjusting default_statement_concurrency_limit to even lower levels.

If you want to find out which statements might be affected by adjusting this parameter, run "HANA_Threads_ThreadSamples_StatisticalRecords" and provide 25% (our 48 threads) for MIN_PX_MAX in the modification section. All statements in the result set might be affected by this configuration change.
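To make the numbers tangible, here is a minimal sketch of the calculation. It is a simplified worst-case model that assumes every statement really uses its full concurrency limit, which real statements often do not; the function names are hypothetical.

```python
import math


def statement_share(limit: int, cpu_threads: int) -> float:
    """Share of all CPU threads one statement may occupy at most."""
    return limit / cpu_threads


def sessions_to_saturate(limit: int, cpu_threads: int) -> int:
    """Number of fully parallel statements needed to occupy all CPU threads."""
    return math.ceil(cpu_threads / limit)


cpu_threads = 192  # example host from above
limit = 48         # default_statement_concurrency_limit in the example

print(statement_share(limit, cpu_threads))       # share of one statement
print(sessions_to_saturate(limit, cpu_threads))  # statements until saturation
print(sessions_to_saturate(24, cpu_threads))     # same with a halved limit
```

With the limit of 48, four concurrent statements are enough to occupy all 192 threads in this model; halving the limit to 24 doubles that number to eight.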

By default, HANA takes the number of these so-called logical threads as the base value for:

|

In this case we have 192 threads to handle the workload of the system. Some of them are frequently used by internal system actions like savepoints, disk flushes, system replication snapshots, delta merges etc.

The rest can be occupied by the user workload:

OLAP workload, for example reporting in BW systems or with SAP Analytics Cloud live connections.

OLTP workload, for example transactions in an ERP system.

Mixed workload, meaning both OLAP and OLTP, for example modern ERP systems with transactions and analytical reporting.

Every database session (1:1 relation to one work process inside the application server) can have multiple threads.

If the thread state is "Job Exec Waiting", the thread is waiting for a JobWorker thread to execute the actual work. The reason can be a limitation of the system (e.g. admission control / dynamic workload), a limitation of the SQL thread itself (workload class or user parameter) or a system bottleneck. In general, waiting threads should not exceed 5% over a longer time frame. Otherwise this shows up as queueing findings in the mini checks, such as M0882 "Max. parked JobWorker ratio", M0883 "Queued jobs" or M0888 "Job queueing share (%, short-term)".
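The 5% rule of thumb can be checked with a small calculation once you have a list of sampled thread states. The sketch below assumes the states were already exported into a plain Python list; the sample data is invented for illustration and the function name is hypothetical.

```python
from collections import Counter

WAITING_STATE = "Job Exec Waiting"
THRESHOLD = 0.05  # rule of thumb from the text: at most 5% over a longer time frame


def waiting_share(thread_states: list[str]) -> float:
    """Fraction of sampled threads that are in state 'Job Exec Waiting'."""
    counts = Counter(thread_states)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return counts[WAITING_STATE] / total


# invented sample: 100 thread samples, 8 of them waiting for a JobWorker
sample = ["Running"] * 90 + [WAITING_STATE] * 8 + ["Network Read"] * 2
share = waiting_share(sample)
print(f"{share:.0%} waiting, above threshold: {share > THRESHOLD}")
```

A single sample says little; the share should be evaluated over a longer time frame before drawing conclusions.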

Dyn. Workload

If a high number of JobWorkers consume CPU resources that would be better used by SqlExecutors or request threads processing OLTP load, the number of JobWorkers is automatically reduced, down to 30-40%. You can influence this behavior by setting some parameters (check the section below or note 2222250).

If there are many active but waiting threads in the system, these settings can significantly reduce the dynamic concurrency. Even with a value of 100 for max_concurrency_dyn_min_pct, it was observed that the dynamic concurrency was reduced to around 20 % of max_concurrency when hundreds of waiting threads were permanently active in the system. In general, you should eliminate the root cause of the waiting threads in these scenarios, but in some cases it can be beneficial to lower the two parameter values in order to limit the concurrency reductions.

With SAP HANA >= 2.00.059.03 and >= 2.00.063 an improved dynamic calculation reducing the risk of too low values is available.

Parameters

###########

General CPU

###########

indexserver.ini -> [sql] -> sql_executors

<service>.ini -> [sql] -> max_sql_executors

<service>.ini -> [session] -> busy_executor_threshold

<service>.ini -> [execution] -> max_concurrency

<service>.ini -> [execution] -> default_statement_concurrency_limit

global.ini -> [execution] -> other_threads_act_weight = 40

global.ini -> [execution] -> load_factor_sys_weight_pct (in %, default: 10)

global.ini -> [execution] -> load_factor_job_weight_pct (in %, default: 20)
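Parameters like these are usually changed with an ALTER SYSTEM ALTER CONFIGURATION statement. The following sketch only builds such a statement as a string so that it can be reviewed before it is executed; the helper name and the value 24 are hypothetical, and the exact syntax should be verified against the SQL reference of your revision.

```python
def alter_configuration_sql(ini_file: str, section: str, key: str,
                            value: object, layer: str = "SYSTEM") -> str:
    """Build an ALTER SYSTEM ALTER CONFIGURATION statement (string only, not executed)."""

    def quote(text: object) -> str:
        # single quotes inside a SQL string literal are doubled
        return "'" + str(text).replace("'", "''") + "'"

    return (
        f"ALTER SYSTEM ALTER CONFIGURATION ({quote(ini_file)}, {quote(layer)}) "
        f"SET ({quote(section)}, {quote(key)}) = {quote(value)} WITH RECONFIGURE"
    )


# hypothetical example: lower the statement concurrency limit to 24
stmt = alter_configuration_sql(
    "global.ini", "execution", "default_statement_concurrency_limit", 24
)
print(stmt)
```

Generating the statement first and executing it in a second step makes it easier to document every parameter change.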

Since Rev. 56, hdblcm calculates some of these parameters during installation or an update/upgrade to that revision. This is enabled by the parameter --apply_system_size_dependent_parameters=on, which is the default.

indexserver.ini -> [parallel] -> tables_preloaded_in_parallel = MAX(5, 0.1 * CPU_THREADS)

indexserver.ini -> [optimize_compression] -> row_order_optimizer_threads = MAX(4, 0.25 * CPU_THREADS)

global.ini -> [execution] -> default_statement_concurrency_limit = 0.25 * CPU_THREADS (only applied if HANA has at least 16 CPU threads)
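The formulas can be reproduced with a short function to estimate which values to expect on a given host. This is a sketch and not the hdblcm implementation: the function name is hypothetical, and rounding down to whole threads is an assumption because the rounding behavior is not documented here.

```python
def system_size_dependent_parameters(cpu_threads: int) -> dict[str, int]:
    """Estimate the size-dependent values from the formulas above.

    Assumption: fractional results are rounded down to whole threads.
    """
    params = {
        # MAX(5, 0.1 * CPU_THREADS)
        "tables_preloaded_in_parallel": max(5, cpu_threads // 10),
        # MAX(4, 0.25 * CPU_THREADS)
        "row_order_optimizer_threads": max(4, cpu_threads // 4),
    }
    # only applied if HANA has at least 16 CPU threads
    if cpu_threads >= 16:
        params["default_statement_concurrency_limit"] = cpu_threads // 4
    return params


print(system_size_dependent_parameters(192))  # example host
print(system_size_dependent_parameters(8))    # small system below 16 threads
```

For the example host with 192 threads this yields the default_statement_concurrency_limit of 48 used earlier; on a system with 8 threads only the two lower bounds (5 and 4) apply.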

################

General Memory

################

global.ini -> [memorymanager] -> statement_memory_limit

global.ini -> [memorymanager] -> statement_memory_limit_threshold

global.ini -> [memorymanager] -> total_statement_memory_limit


Details: 3202692 - How to set Memory Limit for SQL Statements

##################

Delta Merges

##################

indexserver.ini -> [indexing] -> parallel_merge_part_threads

indexserver.ini -> [indexing] -> parallel_merge_threads

indexserver.ini -> [mergedog] -> num_merge_threads

indexserver.ini -> [mergedog] -> max_cpuload_for_parallel_merge


The default should only be adjusted if you are sure that you have enough system resources besides the normal workload.

##################

Optimize compression

##################

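indexserver.ini -> [optimize_compression] -> change_compression_threads

indexserver.ini -> [optimize_compression] -> estimate_compression_threads

indexserver.ini -> [optimize_compression] -> get_candidates_threads

indexserver.ini -> [optimize_compression] -> prepare_threads

indexserver.ini -> [optimize_compression] -> row_order_optimizer_threads (10 % - 25 % of available CPU threads, at least 4)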

##################

Partitioning

##################

indexserver.ini -> [partitioning] -> bulk_load_threads

indexserver.ini -> [partitioning] -> split_threads

Takeaway

It can happen that a single query or several SQL statements consume all CPU resources of the system if your parameters or workload classes are defined incorrectly. With proper parametrization or workload classes this won't happen. Be aware of the load your system has to handle. Run frequent health checks to verify that your system can still handle the load as the data volume grows. With every resizing, your parameters and workload classes have to be reviewed.

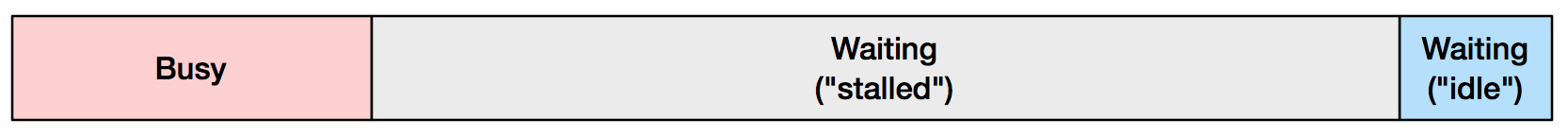

Most monitoring tools just display the number of occupied CPUs but do not take SMT into account, let alone waiting ("stalled") cycles. Stalled means the processor was not making forward progress with instructions, which usually happens because it is waiting on memory I/O.

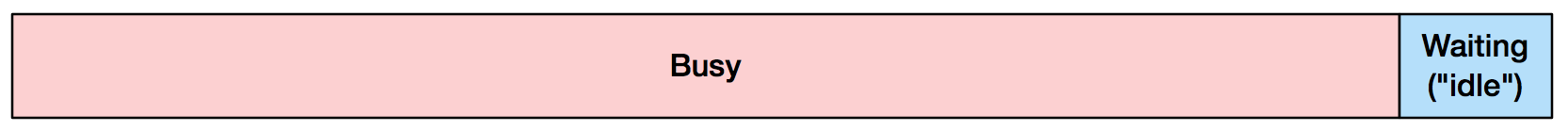

What is displayed:

What is really the case:

Source: CPU Utilization is Wrong

Check the real values with tools like perf or tiptop, which can display the IPC (instructions per cycle). If this value is below 1, it can be an indicator that you are memory stalled. For more insights on this topic, read the publications of Brendan Gregg.
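The IPC itself is just the ratio of two hardware counters. The sketch below assumes you already have the instruction and cycle counts for the same time interval (for example from a perf run); the counter values are invented and the function names are hypothetical.

```python
def instructions_per_cycle(instructions: int, cycles: int) -> float:
    """IPC = retired instructions / CPU cycles, measured over the same interval."""
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    return instructions / cycles


def likely_memory_stalled(ipc: float, threshold: float = 1.0) -> bool:
    """Rule of thumb from the text: an IPC below 1 hints at memory stalls."""
    return ipc < threshold


# invented counter values for illustration only
ipc = instructions_per_cycle(instructions=4_200_000_000, cycles=6_000_000_000)
print(f"IPC = {ipc:.2f}, likely memory stalled: {likely_memory_stalled(ipc)}")
```

The threshold of 1 is only an indicator; what counts as a good IPC depends on the processor and on the workload.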
