Deploy your own custom image classification model ...


former_member81


09-06-2022

6:11 PM

Introduction

In this blog, we look at how to use SAP AI Core to deploy an image classification model that is stored on your local computer. Together with our SAP AppGyver blog, this blog lets you use your very own image classification model in an SAP AppGyver app and make live predictions on images.

Business Context

This blog is directly linked to the SAP Ariba mobile application, which uses the “Aribot” model to classify images. The SAP Ariba mobile application helps field technicians identify the type of a mechanical part using image recognition. It retrieves the part's details, enabling users to create a purchase request on the spot and automating this usually tedious process. In this blog, we tackle the image modelling part that goes into SAP Ariba.

What you will learn

By the end of this blog, you will know how to deploy your own Keras model on SAP AI Core and use it to make inferences on images. You will also know how to retrieve a deployment URL that exposes prediction API endpoints for mobile applications to use.

Prerequisites

- SAP AI Core service keys, obtained via your SAP BTP account

- AWS S3 access keys

- Python (we shall be using the SAP AI Core SDK)

- Jupyter Notebook, installed with Anaconda

- A Docker Desktop account synced with SAP AI Core. If you have not synced Docker with SAP AI Core, find out how here by following steps 1, 2 and 7.

- A GitHub account synced with SAP AI Core. If you have not synced GitHub with SAP AI Core, find out how here by following steps 1 to 3.

- A pre-built Keras model.

It is highly recommended that you watch the SAP AI Core tutorials to familiarize yourself with SAP AI Core.

Let’s begin!

Step 1

Launch a Jupyter notebook and connect to SAP AI Core by copying the code below. Replace the placeholders with the values from your SAP AI Core service key.

from ai_core_sdk.models import Artifact
from ai_core_sdk.ai_core_v2_client import AICoreV2Client

ai_core_client = AICoreV2Client(
    # `AI_API_URL`
    base_url="<base url>" + "/v2",  # The present SAP AI Core API version is 2
    # `URL`
    auth_url="<auth url>" + "/oauth/token",
    # `clientid`
    client_id="<client id>",
    # `clientsecret`
    client_secret="<client secret>"
)
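If you downloaded the service key as a JSON file, you can read the four values from that file instead of pasting them by hand. The sketch below assumes the key uses the field names shown in the comments above, with `AI_API_URL` nested under `serviceurls`. The helper name `load_ai_core_credentials` is hypothetical.

```python
import json
from pathlib import Path


def load_ai_core_credentials(key_path):
    """Map an SAP AI Core service key JSON file to AICoreV2Client keyword arguments.

    Assumption: the key contains serviceurls.AI_API_URL, url, clientid and clientsecret.
    """
    key = json.loads(Path(key_path).read_text(encoding="utf-8"))
    return {
        "base_url": key["serviceurls"]["AI_API_URL"] + "/v2",  # API version 2, as above
        "auth_url": key["url"] + "/oauth/token",
        "client_id": key["clientid"],
        "client_secret": key["clientsecret"],
    }
```

With this helper, the client can be created with `AICoreV2Client(**load_ai_core_credentials("<path to service key>.json"))`. Keep the key file out of any repository you share.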

Step 2

Create a resource group with the ID “aribot”. We named it aribot after the Aribot model, which was previously created with SAP AI Core, but you can choose any name you want.

response = ai_core_client.resource_groups.create("aribot")

Step 3

Upload your Keras model to an AWS S3 bucket by running the following function. Ensure that your model file is located in your present working directory.

import logging
import os

import boto3
from boto3.exceptions import S3UploadFailedError
from botocore.exceptions import ClientError

def upload_file_to_s3(file_name, bucket, object_name=None):
    """Upload a file to an S3 bucket

    :param file_name: File to upload
    :param bucket: Bucket to upload to
    :param object_name: S3 object name. If not specified then file_name is used
    :return: True if file was uploaded, else False
    """
    # If S3 object_name was not specified, use the file's base name
    if object_name is None:
        object_name = os.path.basename(file_name)

    # Upload the file; upload_file can raise either exception on failure
    s3_client = boto3.client('s3')
    try:
        s3_client.upload_file(file_name, bucket, object_name)
    except (ClientError, S3UploadFailedError) as e:
        logging.error(e)
        return False
    return True

model_filename = 'keras_model.h5'
res = upload_file_to_s3(model_filename, '<S3 BUCKET NAME>', f'aribot/model/{model_filename}')
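You can also check the upload from the notebook. The sketch below uses boto3's `list_objects_v2`, which reads only the first page of up to 1,000 keys. It takes the S3 client as a parameter so it can be tried without AWS access. The function names `list_s3_keys` and `model_uploaded` are hypothetical helpers, not part of boto3.

```python
def list_s3_keys(s3_client, bucket, prefix):
    """Return the object keys under `prefix`, similar to `aws s3 ls s3://bucket/prefix`.

    `s3_client` is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    Only the first page of results is read; use a paginator for larger prefixes.
    """
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    # 'Contents' is absent from the response when no object matches the prefix
    return [obj["Key"] for obj in response.get("Contents", [])]


def model_uploaded(s3_client, bucket, model_filename, prefix="aribot/model/"):
    """True if <prefix><model_filename> exists in the bucket."""
    return f"{prefix}{model_filename}" in list_s3_keys(s3_client, bucket, prefix)
```

For example, `model_uploaded(boto3.client("s3"), "<S3 BUCKET NAME>", model_filename)` should return `True` after a successful upload.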

To see your model in S3, run the following command in your terminal:

aws s3 ls s3://<S3 BUCKET NAME>/aribot/model/

Step 4

Create an object store secret to connect your AWS S3 bucket to SAP AI Core. Note that the path_prefix parameter should point to the parent folder of the folder that holds your model in AWS S3. Here, aribot is the parent of aribot/model. Fill in the placeholders with your bucket details and your AWS access keys.

response = ai_core_client.object_store_secrets.create(
    resource_group='aribot',
    type="S3",
    name="aribot-secret",
    path_prefix="aribot",
    endpoint="<fill up>",
    bucket="<fill up>",
    region="<fill up>",
    data={
        "AWS_ACCESS_KEY_ID": "<fill up>",
        "AWS_SECRET_ACCESS_KEY": "<fill up>"
    }
)
print(response.__dict__)

You should see a response with the message ‘secret has been created’.
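You can avoid pasting access keys into the notebook by reading them from environment variables. This sketch builds the `data` argument for `object_store_secrets.create` from the standard AWS variable names `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`. The helper name `s3_secret_data_from_env` is hypothetical.

```python
import os


def s3_secret_data_from_env(env=None):
    """Build the `data` dict for the S3 object store secret from environment variables.

    `env` defaults to os.environ; a plain dict can be passed for testing.
    """
    env = os.environ if env is None else env
    names = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise ValueError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in names}
```

You would then pass `data=s3_secret_data_from_env()` in the call above instead of the literal dictionary.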

Step 5

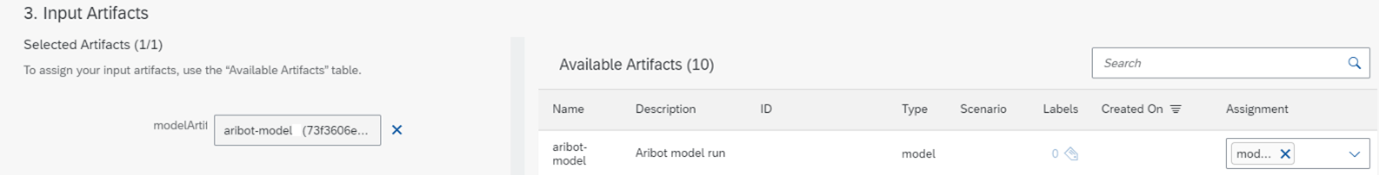

Register the model as an artifact and save the artifact ID in a variable. Make a note of this ID, as it uniquely identifies your artifact.

response = ai_core_client.artifact.create(
    resource_group='aribot',
    name="aribot-model",
    kind=Artifact.Kind.MODEL,
    url="ai://aribot-secret/model",
    scenario_id="aribot-run-1",
    description="Aribot model run 1"
)
print(response.__dict__)
artifact_id = response.__dict__['id']
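The artifact URL `ai://aribot-secret/model` names the object store secret and a path. Our understanding is that this path is resolved relative to the secret's path_prefix, which is why path_prefix points to the parent of the model folder. The illustrative sketch below shows that mapping. The function `resolve_ai_url` and the `secrets` dictionary are hypothetical and not part of the SAP AI Core SDK.

```python
from urllib.parse import urlparse


def resolve_ai_url(ai_url, secrets):
    """Illustrate how ai://<secret>/<path> maps to an S3 location.

    `secrets` maps secret names to (bucket, path_prefix) pairs, mirroring the
    values passed to object_store_secrets.create in Step 4.
    """
    parsed = urlparse(ai_url)
    if parsed.scheme != "ai":
        raise ValueError(f"Not an ai:// URL: {ai_url}")
    bucket, path_prefix = secrets[parsed.netloc]
    # Join prefix and path, dropping empty segments and stray slashes
    parts = [p.strip("/") for p in (path_prefix, parsed.path) if p.strip("/")]
    return f"s3://{bucket}/" + "/".join(parts)
```

With the values used in this blog, `resolve_ai_url("ai://aribot-secret/model", {"aribot-secret": ("<S3 BUCKET NAME>", "aribot")})` gives the same folder that Step 3 uploaded to, `s3://<S3 BUCKET NAME>/aribot/model`.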

Step 6

Set up the serving code to be containerized with Docker. Note that the serving code provided here is written for the Aribot model.

Download each of the following files and save them all in the same folder on your local computer. These files are packaged into a Docker image, which SAP AI Core later uses for the deployment.

- Dockerfile

- requirements.txt

- tf_template.py

- serve.py
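serve.py is not reproduced in this blog. The sketch below is not the author's file. It only illustrates, in plain Python, the request and response shape that Step 10 relies on. The request is a JSON body with a base64 string under `imgData`, and the reply carries `Prediction` and `Probability`. The function names are hypothetical. In a real server, the probabilities would come from the Keras model's output for the decoded image.

```python
import base64


def decode_image_payload(payload):
    """Return the raw image bytes from a request body of the form {"imgData": "<base64>"}."""
    return base64.b64decode(payload["imgData"])


def format_prediction(probabilities, class_names):
    """Pick the most likely class and shape the reply like the example output in Step 10."""
    if len(probabilities) != len(class_names):
        raise ValueError("Expected one probability per class name")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return {"Prediction": class_names[best], "Probability": str(probabilities[best])}
```

Keeping decoding and formatting separate from the web framework makes them easy to test before building the Docker image.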

Next, log in to your Docker account from a terminal. This command requires Docker Desktop to be set up on your computer.

docker login <YOUR_DOCKER_REGISTRY> -u <YOUR_DOCKER_USERNAME>

Navigate to the folder containing the downloaded files, then build the Docker image from them and push it to your registry.

docker build -t docker.io/<YOUR_DOCKER_USERNAME>/aribot:0.0.1 .

docker push docker.io/<YOUR_DOCKER_USERNAME>/aribot:0.0.1

Step 7

Create a workflow to serve your model. Save the following YAML file in the aribot folder of the GitHub repository that is connected to SAP AI Core. This folder is the path you will pass when creating the application in Step 8.

apiVersion: ai.sap.com/v1alpha1
kind: ServingTemplate
metadata:
  name: aribot-classifier-1 # Enter a unique name here
  annotations:
    scenarios.ai.sap.com/description: "Aribot image classification"
    scenarios.ai.sap.com/name: "aribot-image-clf"
    executables.ai.sap.com/description: "Aribot TensorFlow GPU Serving executable"
    executables.ai.sap.com/name: "aribot-serve-executable"
  labels:
    scenarios.ai.sap.com/id: "aribot-clf"
    ai.sap.com/version: "1.0.0"
spec:
  inputs:
    artifacts:
      - name: modelArtifact
  template:
    apiVersion: "serving.kubeflow.org/v1beta1"
    metadata:
      labels: |
        ai.sap.com/resourcePlan: starter
    spec: |
      predictor:
        imagePullSecrets:
          - name: docker-registry-secret
        containers:
        - name: kfserving-container
          image: "docker.io/<YOUR_DOCKER_USERNAME>/aribot:0.0.1"
          ports:
            - containerPort: 9001
              protocol: TCP
          env:
            - name: STORAGE_URI
              value: "{{inputs.artifacts.modelArtifact}}"

- Under ai.sap.com/resourcePlan, you can choose a GPU resource plan such as infer.s instead of starter to run the deployment on a GPU node. The available resource plans are listed in the SAP AI Core documentation.

- Replace docker-registry-secret with the name of your Docker registry secret. You can create and use multiple Docker registry secrets in SAP AI Core.

- Under containers, set image to your Docker image URL.

Step 8

Sync the workflow with SAP AI Core by creating an application with the SAP AI Core SDK. Run the following code in your Jupyter notebook, replacing the GitHub repository URL with your own. The path parameter is the folder in that repository that contains the serving template from Step 7.

response = ai_core_client.applications.create(
    application_name="aribot-clf-app-1",
    revision="HEAD",
    repository_url="https://github.com/<GITHUB_USERNAME>/aicore-pipelines",
    path="aribot"
)
print(response.__dict__)

You should then see the following response: ‘Application has been successfully created’

We shall now verify that the workflow has synced between SAP AI Core and GitHub. Run the following code repeatedly until the output shows:

- ‘healthy_status’: ‘Healthy’,

- ‘sync_status’: ‘Synced’

response = ai_core_client.applications.get_status(application_name='aribot-clf-app-1')
print(response.__dict__)
print('*' * 80)
print(response.sync_ressources_status[0].__dict__)
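Instead of rerunning the cell by hand, you can poll until both values are reached. This is a minimal sketch. It assumes only that the status object has the `healthy_status` and `sync_status` attributes printed above. The helper name `wait_until_synced` and the timeout and interval defaults are hypothetical choices.

```python
import time


def wait_until_synced(get_status, timeout=300, interval=10, sleep=time.sleep):
    """Poll get_status() until healthy_status is 'Healthy' and sync_status is 'Synced'.

    `get_status` is any zero-argument callable returning an object with
    `healthy_status` and `sync_status` attributes. Raises TimeoutError after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status.healthy_status == "Healthy" and status.sync_status == "Synced":
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"Not synced after {timeout}s: {status.healthy_status}/{status.sync_status}"
            )
        sleep(interval)  # wait before asking SAP AI Core again
```

In the notebook, this could be called as `wait_until_synced(lambda: ai_core_client.applications.get_status(application_name='aribot-clf-app-1'))`.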

Step 9

After your workflow has synced, launch SAP AI Launchpad and log in to your SAP AI Core account. We shall now create a configuration for deploying our model.

Create a new configuration by heading to Configuration > Create. Ensure that the correct resource group (aribot) is selected.

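Next, select the scenario, version and executable for the configuration. These correspond to the scenarios.ai.sap.com/id, ai.sap.com/version and metadata.name values in the serving template from Step 7.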

Next, choose your registered model (aribot-model) as the artifact for modelArtifact. Then select Review > Create, followed by Create Deployment.

Once the deployment has been created, wait for its status to show Running, then copy the deployment URL and the deployment ID from its details.

Step 10

We shall now make a prediction on a test image. Copy the following code:

import base64

import requests

img_filename = './raw_images/IMG_5694.JPG'  # test images are stored in the raw_images folder on your local machine

def get_img_byte_str():
    """Return the image file as a base64-encoded string."""
    with open(img_filename, "rb") as fh:  # "rb": read the image as raw bytes
        return base64.b64encode(fh.read()).decode()  # base64 text can be sent in a JSON body

base64_data = get_img_byte_str()

# Deployment URL from SAP AI Launchpad, with the serving endpoint appended
deployment_url = "<INSERT_YOUR_DEPLOYMENT_URL>" + "/v1/predict"

# Prepare the input for inference
test_input = {'imgData': base64_data}

headers = {"Authorization": ai_core_client.rest_client.get_token(),
           'ai-resource-group': "aribot",
           "Content-Type": "application/json"}

response = requests.post(deployment_url, headers=headers, json=test_input)
print('Inference result:', response.content)
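To reuse this request outside the notebook, it helps to separate request construction from the HTTP call and to parse the JSON reply instead of printing raw bytes. This sketch is built from the code above: the /v1/predict route, the aribot resource group and the imgData and Prediction/Probability field names. The helper names `build_predict_request` and `parse_prediction` are hypothetical.

```python
import base64
import json


def build_predict_request(deployment_url, token, image_bytes, resource_group="aribot"):
    """Return (url, headers, body) for a POST to the deployment's /v1/predict route.

    `deployment_url` is the URL copied from SAP AI Launchpad, without /v1/predict.
    """
    url = deployment_url.rstrip("/") + "/v1/predict"
    headers = {
        "Authorization": token,
        "ai-resource-group": resource_group,
        "Content-Type": "application/json",
    }
    body = {"imgData": base64.b64encode(image_bytes).decode("ascii")}
    return url, headers, body


def parse_prediction(content):
    """Turn a reply like b'{"Prediction": "...", "Probability": "1.0"}' into (label, float)."""
    result = json.loads(content)  # json.loads accepts bytes as well as str
    return result["Prediction"], float(result["Probability"])
```

The request would then be sent with `requests.post(url, headers=headers, json=body)`, and `parse_prediction(response.content)` returns the label and probability as Python values.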

You should then see your predictions. For example, for this blog, we get the following output:

Inference result: b'{"Prediction": "Adapter Pk2", "Probability": "1.0"}'

Conclusion

You have reached the end of the tutorial, congratulations! I hope you were able to run through all the code blocks successfully. Next, you can follow the next tutorial on how to use the deployment URL with SAP AppGyver to use this model in a mobile application. If you have any questions or comments, feel free to post them in the comments section below! To learn more about this topic, check out the following links:

If you liked this blog, follow my profile as I'll be posting more in the future!
