Building deep learning model within SAP BTP

Dear readers,

This blog series comes from the Meter Reading project, in which we explored the possibilities for running ML models in a web browser. This long journey taught us many lessons about deep learning and deployment in web browsers. As a beginner in computer vision, I would like to share what I learned from this project. Special thanks to our project members: aditi.arora16, vriddhishetty, gunteralbrecht, anoop.mittal, Roopa Prabhu Nagavara, namagupta, and S, Deepak Chethan.

In the previous post, AI on mobile: Powering your field force – Part 2 of 5, anoop.mittal gave a high-level overview of the basic design principles.

So how can we extract digits from a captured image 📷?

We can build the deep learning model in SAP Data Intelligence and write it to SAP HANA Cloud. Building the digit recognition model takes the five steps described below. Are you ready to start the meter reading journey with SAP BTP?

Understand Scenarios

In this post, we build a deep learning model for the meter reading scenario on SAP BTP.

Automatic Meter Reading (AMR) is a technology that automatically collects consumption and status data from water meters or energy metering devices (gas, electric).

AMR typically takes a two-step approach: object detection followed by object recognition. The detection step locates the region of interest (ROI) that contains the digits, and many studies have adopted YOLO, a CNN-based object detection system, for this step.

In this scenario, we developed a front-end application with a bounded region that captures the region of interest (ROI) directly, so we decided to address only the object recognition step. For object recognition, we used deep learning, a subset of machine learning based on neural networks, within the SAP BTP ecosystem.

Check Requirements

    python==3.7.0
    tensorflow==2.5.0
    opencv-python==4.5.5.62
    numpy==1.19.5
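Because version mismatches caused many of the errors in this project, it can help to compare the pinned versions against the environment before running the pipeline. The following is only a sketch in plain Python: the helper names `parse_pin` and `find_mismatches` and the `installed` mapping are hypothetical, and in practice the installed versions could come from the output of `pip freeze`.

```python
import sys

PINS = ["tensorflow==2.5.0", "opencv-python==4.5.5.62", "numpy==1.19.5"]


def parse_pin(pin):
    """Split 'name==version' into (name, version)."""
    name, _, version = pin.partition("==")
    return name.strip(), version.strip()


def find_mismatches(pins, installed):
    """Return {name: (wanted, found)} for every pin that is not satisfied."""
    mismatches = {}
    for name, wanted in map(parse_pin, pins):
        found = installed.get(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


# Hypothetical environment used for illustration only
installed = {"tensorflow": "2.5.0", "opencv-python": "4.5.5.62", "numpy": "1.21.0"}

print("Python", ".".join(map(str, sys.version_info[:3])))
print(find_mismatches(PINS, installed))
```

With the hypothetical environment above, only numpy is reported, because its installed version differs from the pin.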

    import os
    import numpy as np
    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import layers
    from tensorflow.keras.layers import LSTM  # python==3.7: mandatory (python==3.8.12: not mandatory)
    from PIL import ImageFont, ImageOps, Image
    import json
    import cv2
    import random
    from hdfs import InsecureClient
    import io
    from io import BytesIO
    # Converting to TFLite
    from tensorflow import lite

Create Training Pipeline

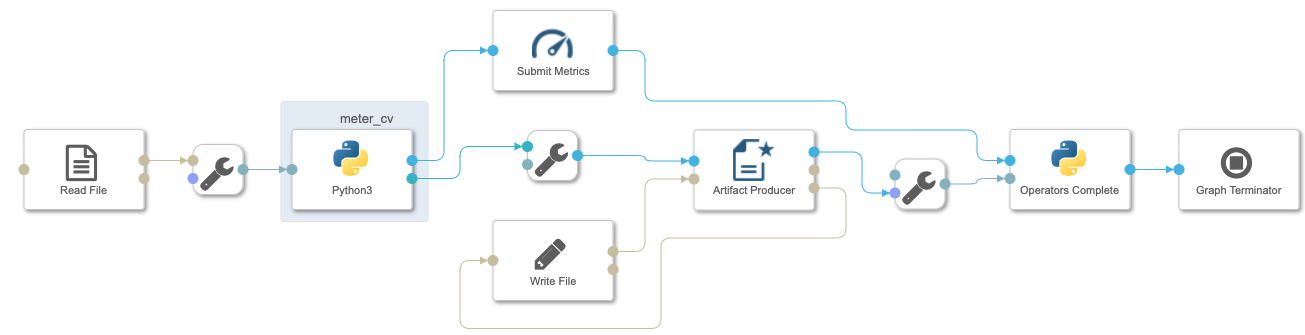

SAP Data Intelligence

We used SAP Data Intelligence for the training pipeline, starting from its Python Producer template.

Training pipeline

Prepare data

For data preparation, we created annotated images that match what the mobile application captures (see the example of the dataset). We stored our image datasets in the data lake in SAP Data Intelligence.

Example of dataset

Split data

In SAP Data Intelligence, we split the dataset into training and validation sets.

    def split_data(images, labels, train_size=0.9, shuffle=True):
        # 1. Get the total size of the dataset
        size = len(images)
        # 2. Make an indices array and shuffle it, if required
        indices = np.arange(size)
        if shuffle:
            np.random.shuffle(indices)
        # 3. Get the size of training samples
        train_samples = int(size * train_size)
        # 4. Split data into training and validation sets
        x_train, y_train = images[indices[:train_samples]], labels[indices[:train_samples]]
        x_valid, y_valid = images[indices[train_samples:]], labels[indices[train_samples:]]
        return x_train, x_valid, y_train, y_valid

Preprocessing

In SAP Data Intelligence, we get each image path from the data lake, so the image has to be read through the HDFS client (client.read) instead of being opened from the local file system. This is different from local Python code!

    # Connect to the data lake in SAP Data Intelligence
    client = InsecureClient('http://datalake:50070')
    directory = '/shared/ml/images/'

For image preprocessing, we decode and resize the image, convert the dtype, and adjust the image brightness, contrast and JPEG quality before training as follows:

    def encode_single_sample(img_path, label):
        # NOTE: tf.get_static_value only returns a value for eager or constant tensors
        img_path = tf.get_static_value(img_path).decode('utf-8')
        label = tf.get_static_value(label).decode('utf-8')
        # 1. Read the raw image bytes from the data lake
        with client.read(img_path) as reader:
            img_bytes = reader.read()
        # 2. Decode the PNG bytes into a 3-channel (RGB) tensor
        img = tf.image.decode_png(img_bytes, channels=3)
        # 3. Convert to float32 in [0, 1] range
        img = tf.image.convert_image_dtype(img, tf.float32)
        # 4. Resize to the desired size (img_height=50, img_width=350 in this scenario)
        img = tf.image.resize(img, [img_height, img_width])
        # 4.1 Increase brightness
        img = tf.image.adjust_brightness(img, delta=0.1)
        # 4.2 Randomly increase contrast by a factor between 1.2 and 1.5
        img = tf.image.random_contrast(img, 1.2, 1.5)
        # 4.3 Re-encode at JPEG quality 75
        img = tf.image.adjust_jpeg_quality(img, 75)
        # 5. Transpose the image because we want the time
        # dimension to correspond to the width of the image.
        img = tf.transpose(img, perm=[1, 0, 2])
        # 6. Map the characters in label to numbers
        label = char_to_num(tf.strings.unicode_split(label, input_encoding="UTF-8"))
        # 7. Return a dict as our model is expecting two inputs
        return {"image": img, "label": label}

Train dataset

We train in batches of 16 and use tf.data.Dataset for an efficient input pipeline.

    batch_size = 16
    train_dataset = tf.data.Dataset.from_tensor_slices(
        (np.array(dataset_train['image']), np.array(dataset_train['label']))
    )
    train_dataset = (
        train_dataset.map(
            encode_single_sample, num_parallel_calls=tf.data.experimental.AUTOTUNE
        )
        .batch(batch_size)
        .prefetch(buffer_size=tf.data.experimental.AUTOTUNE)
    )

Build Model

When capturing the images, recognizing the sequence of digits is important. We use a Convolutional Recurrent Neural Network (CRNN) to build the deep learning model. CRNNs are network architectures specifically designed to recognize sequence-like objects in images, which makes them suitable for our AMR scenario. Compared to conventional CNN- and RNN-based methods, a CRNN achieves remarkable performance with a smaller model and without a predefined lexicon.

CRNN architecture (see References)

Instead of a predefined dictionary, we use the maximum label length, that is, the longest digit sequence in the meter reading images, so this approach produces predictions of varying lengths.

    # Maximum length of any label (digit sequence) in the dataset
    max_length = max([len(label) for label in train_labels])
    # Create characters
    characters = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']
    # Mapping characters to integers
    char_to_num = layers.experimental.preprocessing.StringLookup(
        vocabulary=list(characters), num_oov_indices=0, mask_token=None
    )
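To make the variable-length point concrete: the network emits one class distribution per time step, and CTC decoding turns that fixed-length sequence into a shorter digit string by merging repeated classes and dropping the blank class. The following is a minimal, framework-free sketch of greedy CTC decoding, not the project's actual decoder. It assumes the blank is the extra, last class (index 10), which is the convention of the Keras CTC functions; `greedy_ctc_decode`, `one_hot` and the toy scores are hypothetical.

```python
CHARACTERS = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]
BLANK = len(CHARACTERS)  # assumed blank index: the extra, last class


def greedy_ctc_decode(scores, blank=BLANK):
    """Decode a list of per-time-step class scores into a digit string."""
    # 1. Pick the most likely class at every time step
    best_path = [max(range(len(step)), key=step.__getitem__) for step in scores]
    # 2. Merge consecutive repeats and 3. drop blanks
    decoded = []
    previous = None
    for index in best_path:
        if index != previous and index != blank:
            decoded.append(CHARACTERS[index])
        previous = index
    return "".join(decoded)


def one_hot(index, num_classes=BLANK + 1):
    """Toy score vector that puts all probability on one class."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


# Eight time steps that spell "0 0 _ 0 4 4 _ 2" (where _ is the blank)
toy_scores = [one_hot(i) for i in [0, 0, 10, 0, 4, 4, 10, 2]]
print(greedy_ctc_decode(toy_scores))  # prints 0042
```

Eight time steps collapse to the four-digit reading 0042. The blank between the first two zeros is what lets a repeated digit survive the merge step.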

We define the input image as a tensor of size 350 × 50 (width × height) with 3 channels.

    Model: "model_v1"
    __________________________________________________________________________________________________
    Layer (type)                     Output Shape          Param #   Connected to
    ==================================================================================================
    image (InputLayer)               [(None, 350, 50, 3)]  0
    Conv1 (Conv2D)                   (None, 350, 50, 32)   896       image[0][0]
    pool1 (MaxPooling2D)             (None, 175, 25, 32)   0         Conv1[0][0]
    Conv2 (Conv2D)                   (None, 175, 25, 64)   18496     pool1[0][0]
    pool2 (MaxPooling2D)             (None, 87, 12, 64)    0         Conv2[0][0]
    reshape (Reshape)                (None, 87, 768)       0         pool2[0][0]
    dense1 (Dense)                   (None, 87, 64)        49216     reshape[0][0]
    dropout_4 (Dropout)              (None, 87, 64)        0         dense1[0][0]
    bidirectional_8 (Bidirectional)  (None, 87, 256)       197632    dropout_4[0][0]
    bidirectional_9 (Bidirectional)  (None, 87, 128)       164352    bidirectional_8[0][0]
    label (InputLayer)               [(None, None)]        0
    dense2 (Dense)                   (None, 87, 11)        1419      bidirectional_9[0][0]
    ctc_loss (CTCLayer)              (None, 87, 11)        0         label[0][0]
                                                                     dense2[0][0]
    ==================================================================================================
    Total params: 432,011
    Trainable params: 432,011
    Non-trainable params: 0
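The shapes and parameter counts in this summary can be reproduced by hand, which is a quick sanity check when changing the input size. The sketch below is plain arithmetic. It assumes 3×3 convolution kernels with "same" padding, 2×2 max pooling, and LSTM layers with 128 and 64 units; these hyperparameters are not stated in the post, but they are consistent with the numbers shown. The eleventh output class of dense2 is presumably the CTC blank.

```python
def conv2d_params(kernel, in_channels, filters):
    # kernel*kernel weights per input channel per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_features, units):
    # One weight per input feature per unit, plus one bias per unit
    return in_features * units + units


def bilstm_params(in_features, units):
    # 4 gates, each with input weights, recurrent weights and a bias; two directions
    return 2 * 4 * (in_features + units + 1) * units


width, height = 350, 50
# Two 2x2 max-pooling layers halve each spatial dimension twice (floor division)
time_steps = width // 2 // 2          # 87
features = (height // 2 // 2) * 64    # 12 * 64 = 768

params = {
    "Conv1": conv2d_params(3, 3, 32),
    "Conv2": conv2d_params(3, 32, 64),
    "dense1": dense_params(features, 64),
    "bidirectional_8": bilstm_params(64, 128),
    "bidirectional_9": bilstm_params(2 * 128, 64),
    "dense2": dense_params(2 * 64, 11),  # 10 digits + 1 extra class
}
total = sum(params.values())
print(time_steps, features, total)  # prints 87 768 432011
```

The 87 time steps are the upper bound on how many characters the CTC output can represent for one image.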

Training model

    # Get the model
    model = build_model()
    # Get the prediction model by extracting layers till the output layer
    prediction_model = keras.models.Model(
        model.get_layer(name="image").input, model.get_layer(name="dense2").output
    )

Convert to TFLite

We convert the model to TFLite so that it can be integrated with the PWA app.

    # Convert to TFLite
    converter = lite.TFLiteConverter.from_keras_model(prediction_model)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.experimental_new_converter = True
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,
        tf.lite.OpsSet.SELECT_TF_OPS,
    ]
    tfmodel = converter.convert()

Save model

This step saves the artifact to the SAP Data Intelligence data lake. We send the model blob to the output port, and the Artifact Producer operator uses it to persist the model and create an artifact ID. An interesting lesson learned was that the TFLite model is already a bytes object, so we don't need to convert it.

    metrics_dict = {"kpi1": "1"}
    # send the metrics to the output port - Submit Metrics operator will use this to persist the metrics
    api.send("metrics", api.Message(metrics_dict))
    # create & send the model blob to the output port
    model_blob = tfmodel
    # model_blob = bytes(tfmodel, 'utf-8')  # error
    api.send("modelBlob", model_blob)

Write to HANA

To read the SAP Data Intelligence artifact, we need a pipeline that writes it to SAP HANA.

Create consumer pipeline in SAP Data Intelligence

To create the pipeline that writes to HANA, we use the Python Consumer template in SAP Data Intelligence.

Writing to HANA pipeline

Lessons learned

✅ Check the data type

Checking the data type is really important. Pay attention to the data types, because each library supports different ones.
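In this project the lesson showed up when saving the model: the TFLite converter already returns a bytes object, so wrapping it in bytes(..., 'utf-8') fails. A small guard like the following sketch can catch such mismatches early. The helper names `as_model_blob` and `looks_like_tflite` are hypothetical, and the toy payload only imitates a TFLite file, which carries the FlatBuffers file identifier TFL3 at byte offset 4.

```python
def as_model_blob(model):
    """Return the model unchanged if it is already binary, else fail loudly."""
    if isinstance(model, (bytes, bytearray)):
        return bytes(model)
    raise TypeError(f"expected bytes, got {type(model).__name__}")


def looks_like_tflite(blob):
    # TFLite FlatBuffers files store the identifier "TFL3" at bytes 4..8
    return len(blob) >= 8 and blob[4:8] == b"TFL3"


# Toy stand-in for the converter output (not a real model)
fake_model = b"\x1c\x00\x00\x00TFL3" + b"\x00" * 16

blob = as_model_blob(fake_model)
print(type(blob).__name__, looks_like_tflite(blob))

# Re-encoding bytes as if they were text raises a TypeError
try:
    bytes(fake_model, "utf-8")
except TypeError as error:
    print("TypeError:", error)
```

Passing a str to `as_model_blob` raises immediately, which is easier to debug than a failure later in the artifact pipeline.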

✅ Check the requirements

I struggled with many different types of errors, so please check your Python and TensorFlow versions to minimize them.

    import tensorflow as tf
    print(tf.__version__)

✅ Collaboration

We tried to find a suitable approach for running inference with a TFLite model in the web browser using C++ and a WebAssembly backend. Discovering the appropriate architecture with new and innovative technologies was an amazing collaboration, and I learned to work with colleagues from other areas.

Blog series

In AI on mobile: Powering your field force – Part 4 of 5, aditi.arora16 will explain how to load a TensorFlow Lite machine learning model in C++ and run inference in the web browser with the help of a WebAssembly backend.

- In Part 1, vriddhishetty describes the context of the meter reading projects

- In Part 2, anoop.mittal describes the solution backend, particularly how the service was created with CAP & Spring Boot

- In Part 3, lsm1401 describes the intuition and steps behind the creation of the ML model that will be referenced by the backend at run time

- In Part 4, aditi.arora16 describes how she created the WebAssembly binary and how she loaded the model in the browser for inference

- In Part 5, namagupta describes how he built the front end that stitches all working parts together, particularly how the PWA app was built with Angular

References

Shi, B., Bai, X., & Yao, C. (2016). An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE transactions on pattern analysis and machine intelligence, 39(11), 2298-2304.

Laroca, R., Barroso, V., Diniz, M. A., Gonçalves, G. R., Schwartz, W. R., & Menotti, D. (2019). Convolutional neural networks for automatic meter reading. Journal of Electronic Imaging, 28(1), 013023.

Salomon, G., Laroca, R., & Menotti, D. (2020, July). Deep learning for image-based automatic dial meter reading: Dataset and baselines. In 2020 International Joint Conference on Neural Networks (IJCNN) (pp. 1-8). IEEE.

https://blogs.sap.com/2022/03/16/computer-vision-with-sap-data-intelligence/

https://en.wikipedia.org/wiki/Automatic_meter_reading

https://levity.ai/blog/difference-machine-learning-deep-learning

https://keras.io/examples/vision/handwriting_recognition/
