Technology Blogs by SAP

Product and Topic Expert

05-20-2022 3:18 AM

SAP Cloud Integration (CPI) provides a Kafka adapter, but the adapter currently does not support connecting to an on-premise Kafka cluster through SAP Cloud Connector; see SAP Note 316484 for details. There are currently two options to work around this:

1. Expose the on-premise Kafka to the public internet, so that the CPI Kafka adapter can connect to it directly.

2. Build a REST API proxy for Kafka, so that CPI can produce and consume messages on the on-premise Kafka through the CPI HTTP adapter with the help of SAP Cloud Connector.

In this blog I want to investigate option 2. Open-source Kafka REST proxies already exist, but some customers may have concerns about using them. So in this series I will develop a Kafka REST API with Node.js that can be called from CPI through SAP Cloud Connector. In this Part I, I prepare the Kafka environment.
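To make option 2 more concrete, here is a minimal sketch of the core of such a proxy: an HTTP handler that turns a POST body into a kafkajs `producer.send()` call. The route `POST /topics/<name>`, the JSON body shape (`{ value, key }` or `{ records: [...] }`) and the helper names `toSendPayload` and `makeHandler` are hypothetical choices for illustration, not the implementation built in Part II. The handler only needs an object with an async `send()` method, so it can be tried without a running broker.

```javascript
// Hypothetical REST-to-Kafka bridge (sketch). The route and body format are
// illustrative assumptions, not an existing API.

/**
 * Turn a parsed JSON body into the payload kafkajs producer.send() expects:
 * { topic, messages: [{ key?, value }] }.
 * Accepts { value, key? } or { records: [{ value, key? }, ...] }.
 */
function toSendPayload(topic, body) {
  if (typeof topic !== 'string' || topic.length === 0) {
    throw new Error('topic is required')
  }
  const records = body && Array.isArray(body.records) ? body.records : [body]
  const messages = records.map((r, i) => {
    if (!r || r.value === undefined) {
      throw new Error(`record ${i} has no value`)
    }
    // Send strings as-is; serialize any other JSON value
    const value = typeof r.value === 'string' ? r.value : JSON.stringify(r.value)
    return r.key === undefined ? { value } : { key: String(r.key), value }
  })
  return { topic, messages }
}

// Write a JSON response with the given status code
function reply(res, status, obj) {
  res.writeHead(status, { 'Content-Type': 'application/json' })
  res.end(JSON.stringify(obj))
}

/**
 * Build a request handler for POST /topics/<name>.
 * `producer` is assumed to be a connected kafkajs producer, or any object
 * with an async send(payload) method.
 */
function makeHandler(producer) {
  return (req, res) => {
    const match = /^\/topics\/([^/?]+)$/.exec(req.url || '')
    if (req.method !== 'POST' || !match) {
      return reply(res, 404, { error: 'not found' })
    }
    let raw = ''
    req.setEncoding('utf8')
    req.on('data', (chunk) => { raw += chunk })
    req.on('end', async () => {
      let payload
      try {
        payload = toSendPayload(decodeURIComponent(match[1]), JSON.parse(raw))
      } catch (err) {
        return reply(res, 400, { error: err.message }) // bad input
      }
      try {
        reply(res, 200, await producer.send(payload)) // kafkajs resolves to record metadata
      } catch (err) {
        reply(res, 502, { error: err.message }) // broker-side failure
      }
    })
  }
}

module.exports = { toSendPayload, makeHandler }
```

To serve it, one would call `await producer.connect()` and then pass the handler to Node's `http.createServer(makeHandler(producer))`; that HTTP port is what would be exposed to CPI through SAP Cloud Connector. Separating the pure `toSendPayload` from the HTTP plumbing keeps the mapping easy to test on its own.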

To build the scenario, I first use Docker (already installed on my laptop) to run a Kafka service locally. The steps are as follows.

Step 1: Pull the Docker images for ZooKeeper and Kafka

```bash
docker pull wurstmeister/zookeeper
docker pull wurstmeister/kafka
```

Step 2: Create and start the ZooKeeper container

```bash
docker run -d --name zookeeper -p 2181:2181 wurstmeister/zookeeper
```

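Step 3: Create and start the Kafka container and link it to the ZooKeeper container from Step 2. `KAFKA_ADVERTISED_HOST_NAME=localhost` and `KAFKA_ADVERTISED_PORT=9092` make the broker advertise `localhost:9092`, which matches the port published with `--publish 9092:9092`, so clients on the laptop can connect through `localhost:9092`.

```bash
docker run -d --name kafka --publish 9092:9092 --link zookeeper \
  --env KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
  --env KAFKA_ADVERTISED_HOST_NAME=localhost \
  --env KAFKA_ADVERTISED_PORT=9092 \
  wurstmeister/kafka
```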

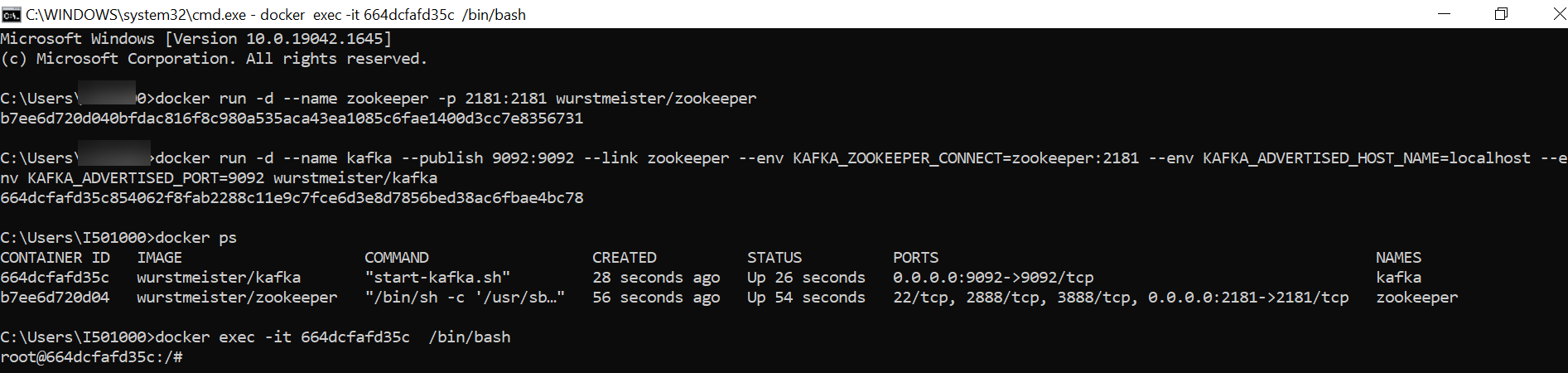
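Before entering the container, one may want to confirm from the host that the port published in Step 3 accepts connections. The Node.js sketch below uses only the built-in `net` module; the helper names `isPortOpen` and `waitForPort` are hypothetical. It opens a plain TCP connection and does not speak the Kafka protocol, so a positive result only means something is listening on the port, not that the broker is fully ready.

```javascript
// Reachability check for the broker port published in Step 3 (sketch).
// It only opens a TCP connection and does not speak the Kafka protocol.
const net = require('net')

/**
 * Resolve to true if a TCP connection to host:port succeeds within
 * timeoutMs, otherwise false. Never rejects.
 */
function isPortOpen(host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port })
    const finish = (ok) => {
      socket.destroy()
      resolve(ok)
    }
    socket.setTimeout(timeoutMs, () => finish(false)) // no answer in time
    socket.once('connect', () => finish(true))        // something is listening
    socket.once('error', () => finish(false))         // e.g. ECONNREFUSED
  })
}

/** Poll isPortOpen until it succeeds or deadlineMs has elapsed. */
async function waitForPort(host, port, { deadlineMs = 30000, intervalMs = 1000 } = {}) {
  const start = Date.now()
  while (Date.now() - start < deadlineMs) {
    if (await isPortOpen(host, port)) return true
    await new Promise((r) => setTimeout(r, intervalMs))
  }
  return false
}

module.exports = { isPortOpen, waitForPort }
```

For example, `waitForPort('localhost', 9092).then((ok) => console.log(ok ? 'port open' : 'not reachable'))` would poll for up to 30 seconds while the container starts.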

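Step 4: Enter the Kafka container. `docker ps` lists the running containers; then open a shell in the container by the name given in Step 3 (the container ID shown by `docker ps` works as well).

```bash
docker ps
docker exec -it kafka /bin/bash
```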

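Step 5: Create the topic dblab01 and check that it exists (inside the Kafka container)

```bash
kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 1 --partitions 1 --topic dblab01
kafka-topics.sh --list --zookeeper zookeeper:2181
```

On Kafka 3.0 and later, `kafka-topics.sh` no longer accepts `--zookeeper`; use `--bootstrap-server localhost:9092` instead.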
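The same topic can also be created from Node.js with the kafkajs admin client (`kafka.admin()`, `listTopics()`, `createTopics()`). The sketch below is an alternative to the CLI, not part of the original walkthrough; the helper name `ensureTopic` is hypothetical, and it takes the admin client as a parameter so it can be tried with a stub. Unlike `kafka-topics.sh --create`, it does nothing when the topic already exists.

```javascript
// Hypothetical helper: idempotently create a topic with the kafkajs admin
// client instead of kafka-topics.sh. `admin` is assumed to come from
// new Kafka({ ... }).admin(); any object with the same async methods works.
async function ensureTopic(admin, topic, { numPartitions = 1, replicationFactor = 1 } = {}) {
  await admin.connect()
  try {
    const existing = await admin.listTopics() // array of topic names
    if (existing.includes(topic)) {
      return false // already there, nothing to create
    }
    // kafkajs resolves createTopics to true if the topic was created and
    // false if it already existed (e.g. created concurrently)
    return await admin.createTopics({
      topics: [{ topic, numPartitions, replicationFactor }],
      waitForLeaders: true,
    })
  } finally {
    await admin.disconnect() // always release the admin connection
  }
}

module.exports = { ensureTopic }
```

With the broker from Step 3 running, a call such as `ensureTopic(new Kafka({ clientId: 'my-app', brokers: ['localhost:9092'] }).admin(), 'dblab01')` would mirror the `--create` command above.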

Step 6: Start a console producer and produce data (inside the Kafka container). Each line you type is sent to dblab01 as one message.

```bash
kafka-console-producer.sh --broker-list localhost:9092 --topic dblab01
```
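A rough kafkajs counterpart to the console producer is sketched below. `linesToMessages` and `produceLines` are hypothetical helper names, `producer` is assumed to be a connected kafkajs producer, and skipping blank lines is a choice of this sketch rather than documented console-producer behaviour.

```javascript
// Sketch of a kafkajs counterpart to kafka-console-producer.sh: every
// non-blank input line becomes one message. Helper names are hypothetical;
// `producer` is assumed to be a connected kafkajs producer (or any object
// with an async send({ topic, messages }) method).
function linesToMessages(text) {
  return text
    .split(/\r?\n/)                     // handle both LF and CRLF input
    .filter((line) => line.length > 0)  // this sketch skips blank lines
    .map((line) => ({ value: line }))
}

async function produceLines(producer, topic, text) {
  const messages = linesToMessages(text)
  if (messages.length === 0) return [] // nothing to send
  // one send() call carries the whole batch of lines
  return producer.send({ topic, messages })
}

module.exports = { linesToMessages, produceLines }
```

Sending all lines in one `send()` call batches them into a single produce request, whereas the console tool sends as you type.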

Step 7: Start a console consumer and consume the data (inside the Kafka container)

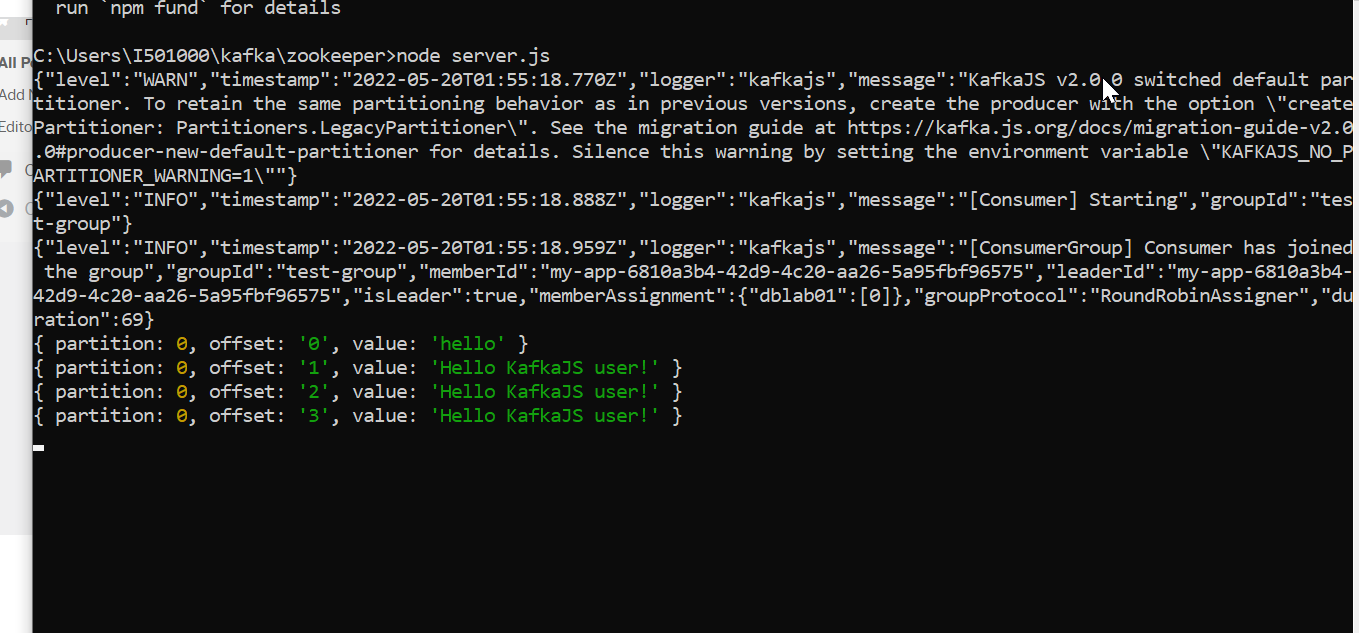

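Step 8: Run server.js to test Kafka locally from the laptop.

The following is the code. It produces a test message to dblab01 every second and prints every message it consumes from the same topic.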

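```javascript
// server.js: produce a test message to dblab01 every second and
// print every message consumed from the same topic.
const { Kafka } = require('kafkajs')

const kafka = new Kafka({
  clientId: 'my-app',
  brokers: ['localhost:9092'],
  requestTimeout: 25000,
  connectionTimeout: 30000,
  authenticationTimeout: 30000,
  retry: {
    initialRetryTime: 3000,
    retries: 0 // do not retry failed requests
  }
})

const producer = kafka.producer()
const consumer = kafka.consumer({ groupId: 'test-group' })

const run = async () => {
  // Connect the producer once instead of on every send
  await producer.connect()

  // Consuming: read dblab01 from the earliest offset
  await consumer.connect()
  await consumer.subscribe({ topic: 'dblab01', fromBeginning: true })
  await consumer.run({
    eachMessage: async ({ topic, partition, message }) => {
      // await new Promise(r => setTimeout(r, 3000)) // optionally simulate slow processing
      console.log({
        topic,
        partition,
        offset: message.offset,
        // value is null for tombstone messages
        value: message.value ? message.value.toString() : null,
      })
    },
  })

  // Producing: send one test message per second
  setInterval(async () => {
    try {
      await producer.send({
        topic: 'dblab01',
        messages: [{ value: 'Hello KafkaJS user!' }],
      })
    } catch (e) {
      console.error('send failed:', e.message)
    }
  }, 1000)
}

run().catch((e) => {
  console.error(e)
  process.exit(1)
})

// Disconnect cleanly on Ctrl+C
process.on('SIGINT', async () => {
  await Promise.allSettled([producer.disconnect(), consumer.disconnect()])
  process.exit(0)
})
```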

Install kafkajs and start the script:

```bash
npm install kafkajs
node server.js
```

To be continued in Part II.

Best regards!

Jacky Liu
