- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- SAP HANA Cloud: Federation template

Technology Blogs by SAP

Learn how to extend and personalize SAP applications. Follow the SAP technology blog for insights into SAP BTP, ABAP, SAP Analytics Cloud, SAP HANA, and more.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Employee

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

03-25-2022

10:08 AM

Please have a look into SAP NOTE 3319855 - HDI Container Architecture & Design Considerations and SAP NOTE 3319833 - Timeout situations & long deployment runtime due to high number of HDI-related ob... for further guidance w.r.t. HDI/GIT Architecture.

In this blogpost (which is part of a series linked here ) I would like to provide a simple and scalable approach for HDI container design under the assumption of a sap hana cloud data mart/lake scenario where multiple remote systems are to be connected to using different replication interfaces.

In order to have the frame of these concepts as crisp and clear as possible i’ll focus on the essentials only.

Teaser Outcome:

Figure 1 HDI - Architecture with namespaces

Prerequisite knowledge:

If you need to dig into the basics first please consider reading this:

Additional sources:

Below is a meta-level conceptualization of the applied approach.

Figure 2 HDI meta concept for remote source system setups

All objects deployed inside an HDI container will fall into the respective database schema <sid_schema>, this is indicated by the dashed lines in the picture above.

We will use dedicated .hdbmigrationtable artifacts to provide the database tables.

In our example we used SDI (HANA Adapter) for the Shangri-la datafoundation and SDA/RTR for the Joermungandr data foundation. Replication tasks can create various objects and we will use some of them.

Virtual tables objects will be created by the replication task, which we will use to read the data from the remote system. There is no need to create virtual tables on your own, except for testing purposes. And Tasks will be used to perform the initial load of the table.

The datalake-reporting container will use synonyms to access the tables inside the datafoundation HDI containers. The data will be exposed to upstream consumers using calculation views.

In essence everytime you add a remote system you repeat the pattern applied to the datafoundations Shangri-la and Joermungandr as indicated here by the bottom right box.

Figure 3 Scalable parts of the architecture

Where your Datafoundation <name>-hdi is a placeholder for the name of your remote system.

Figure 4 meta-level file overview

In total you will need the following steps to create this example:

You will need a privileged user in the remote system to create users and add privileges

For this example we create a technical user to read access data the remote system.

This is purely optional in case you want to fill the tables with some random data you can use the code below which wraps the internal function SERIES_GENERATE_INTEGER()

<

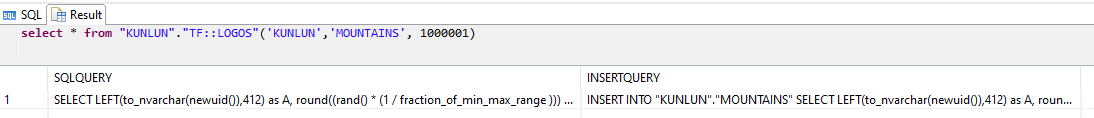

The function "KUNLUN"."TF::LOGOS", accepts 3, parameters:

"KUNLUN "."TF::LOGOS"(schema_name, table_name, data_rows) and will provide you two results:

sqlQuery: SELECT query to generate the data (you can check if the data fits your purpose before inserting it)

insertSqlQuery: INSERT statement with the above sqlQuery. e.g.

You will need a privileged user in the remote system to create users and add privileges

Same as before we will create roles, privileges and data to consume.

Difference compared to the SDI case you’ll notice that we don’t need to grant the TRIGGER privilege to the remote role.

To fill the table with dummy data i used the TF::LOGOS function from before.

In the target data lake EDEN we also have to do some preparations in order to read the data inside the HDI containers.

Use the technical user to connect to the remote system.

Execute in system with admin user.

Figure 5 Remote Source JOERMUNGANDR_SDA in database explorer

After we have created the remote sources in the system and tested them to be working we will go ahead and create a user-provided-service(ups) instance which is able to provide access to these remote systems by consuming the service.

This service is used to grant the container it connects to the privileges necessary for remote source handling.

We need a user for the user-provided-service which will be called EDEN_REMOTE_GRANTOR

and assign him the remote source privileges with grant option

Inside BTP you need to create the UPS inside the space where the hdi-container will exist.

You need to go into your Subaccount > Instances > Click on create and select “User-Provided Service Instance”

Make sure to provide the user eden_remote_grantor in the wizard.

A template for this exercise looks like this, change the host and password according to your environment.

The HostString can be obtained from inside BTP or the SAP HANA Cloud Central:

Figure 6 Obtain HostString from BTP - SQL Endpoint

Figure 7 Obtain HostString from SAP HANA Cloud Central

In the next chapters I’ll explain the most important parts of one of the datafoundation containers.

This will start with the overview of the file/folder structure (File Skeleton) and go over the different concepts that this implies like

┣ 📂db

┃ ┣ 📂cfg

┃ ┃ ┣ 📜.hdiconfig

┃ ┃ ┗ 📜remote.hdbgrants

┃ ┣ 📂src

┃ ┃ ┣ 📂auth

┃ ┃ ┃ ┣ 📜Read.hdbrole

┃ ┃ ┃ ┗ 📜Read_GRANTABLE.hdbrole

┃ ┃ ┣ 📂data

┃ ┃ ┃ ┗ 📜TG_KUNLUN_MOUNTAINS.hdbmigrationtable

┃ ┃ ┣ 📂defaults

┃ ┃ ┣ 📂replication

┃ ┃ ┃ ┗ 📜RT_KUNLUN_MOUNTAINS.hdbreptask

┃ ┃ ┣ 📜.hdiconfig

┃ ┃ ┗ 📜.hdinamespace

┃ ┣ 📜.env

┃ ┣ 📜.gitignore

┃ ┣ 📜package-lock.json

┃ ┗ 📜package.json

┣ 📜.gitignore

┗ 📜mta.yaml

.hdinamespace file in /src/ folder:

Given our file structure as mentioned in the file skeleton this will lead to the following namespaces created

Before that we have three namespaces that are specific to the “layer” we are operating in:

Provide files to self-service the roles necessary for remote source management to the container.

Provide a READ access role to access the tables inside this container.

Provide a description of the database tables.

In HDI we can choose between two separate artifacts for this.

For this exercise we opted for the .hdbmigrationtable artifact.

Our main reason for this is the option to adapt the table structure without implicitly dropping the data.

If during the development of our project we see that some changes are necessary later on to the structure of the table, we can do so without having to copy/reload the table, which can be costly for huge tables.

In addition to that we have explicit versioning of database table changes.

Official documentation for .hdbmigrationtable (perma-link):

https://help.sap.com/viewer/c2cc2e43458d4abda6788049c58143dc/LATEST/en-US/52d1f5acfa754a7887e2122664...

Here we define the replication tasks used to move data from the remote systems into our target system.

First you’ll have to connect a .hdbreptask to a remote source. Once that is successful you need to select the table(s) you want to replicate.

Important!If you put multiple remote source objects defined inside one .hdbreptask file then ALL the actions defined in the reptasks will be executed upon deployment.

In case you have defined target tables to be truncated or dropped and recreated this happens for all tables inside the .hdbreptask. Even if you only change one remote source object configuration as there is no delta-mechanism available to detect and deploy only the changed remote source object configuration.

A .hdbreptask file when deployed will, depending on the options in the reptask, generate multiple runtime objects, , in this container we used SDI Replication with HANA Adapter:

In our scenario we defined the following options:

No special partition or projection options

Target Properties

Targe Table: we choose the target table provided by the .hdbmigrationtable object

Drop Target Table on activation: False

Generate Target Table on activation: False

Truncate Table on execution: True

For more details please have a look into the SDI&SDQ Modeling Guide for SAP Business Application Studio

https://help.sap.com/products/HANA_SMART_DATA_INTEGRATION/cc7ebd3f344a4cdda20966a7617f52d8/b72a6833d...?

As you have seen this template for adding remote source is lightweight easy to manage as we have achieved the following:

Starting the data transfer is simply done by executing the TASK's generated by the .hdbreptask file.

Once the task is successfully completed, initial load is done and if configured - real time replication will start, visible in the remote subscription monitor.

The EDEN-reporting project is containing the calculation views & synonyms which will access all of the data in the previously created data foundation by simply connection to the HDI container and consuming the provided READ roles.

📦db

┣ 📂cfg

┃ ┣ 📂joermungandr

┃ ┃ ┣ 📜joermungandr.hdbgrants

┃ ┃ ┗ 📜joermungandr.hdbsynonymconfig

┃ ┣ 📂shangri-la

┃ ┃ ┣ 📜shangri-la.hdbgrants

┃ ┃ ┣ 📜shangri-la.hdbsynonymconfig

┃ ┗ 📜.hdiconfig

┣ 📂src

┃ ┣ 📂auth

┃ ┃ ┗ 📜Read.hdbrole

┃ ┣ 📂synonym

┃ ┃ ┣ 📜.hdinamespace

┃ ┃ ┣ 📜joermungandr.hdbsynonym

┃ ┃ ┗ 📜shangri-la.hdbsynonym

┃ ┣ 📂view

┃ ┃ ┗ 📂baseview

┃ ┣ 📜.hdiconfig

┃ ┗ 📜.hdinamespace

┣ 📜.env

┣ 📜.gitignore

┣ 📜package-lock.json

┗ 📜package.json

.hdinamespace file in /src/ folder:

Given our file&folder structure as mentioned in the file skeleton this will lead to the following namespaces created

Before that we have two namespaces that are specific to the “layer” we are operating in:

In each of the data foundation HDI containers we provided roles to self-service the READ access to the tables, now we will make use of them.

You need to be connected to the service in order for this to work.

db/src/cfg/shangri-la/ shangri-la.hdbgrants

In the provided .hdbsynonymconfig we make use of the feature to abstract from a concrete schema name by using the schema.configure key. During deyployment the schema will be replaced by the schema defined by the service connected.

db/src/cfg/shangri-la/ shangri-la.hdbsynonymconfig

Provide a READ access role to access all calculation views inside and below the view folder

The usage of the wildcard pattern (%) ensures that we don’t need to adapt the roles whenever we add new views in the package. During deployment the roles will be adapted automatically as the wildcards are evaluated.

In order to access the tables in the data foundation, which are outside of the reporting HDI container, we need to use synonyms.

db/src/synonyms/ shangri-la.hdbsynonym

For this example we created a calculation view in the baseview folder which propagates the columns of the tables TG_KUNLUN_MOUNTAINS.

It serves the purpose to distinguish easily between calculation views which expose the table as is (baseviews) and the additional calculation views which add additional logic on top.

In case your business users want to consume the raw tables creating these baseviews also has the benefit that you do not give direct access to the data foundation layer to upstream consumers (e.g. reporting tools) but rather through the reporting layer.

One additional view will be created in the db/src/view/ folder which will perform a simple aggregation.

In the end we will have two views.

And as you can see the namespace clearly distinguishes them.

The roles are dynamically extended by usage of the wildcard syntax (%) during deployment

Conclusion

Initially i teased the following outcome

And this was achieved.

If you have feedback & questions please let me know in the comments what would be helpful to you.

Feel free to follow me in case you want further updates on SAP HANA Cloud / HDI / Performance optimization / sap hana modelling topics.

Thanks and Regards,

Yves

Introduction

In this blogpost (which is part of a series linked here ) I would like to provide a simple and scalable approach for HDI container design under the assumption of a sap hana cloud data mart/lake scenario where multiple remote systems are to be connected to using different replication interfaces.

In order to have the frame of these concepts as crisp and clear as possible i’ll focus on the essentials only.

Teaser Outcome:

- Clear distinction between persistence and modelling space

- If possible all runtime objects have a HDI design-time counterpart and are HDI managed

- Each remote system is managed in a separate HDI container (with virtual tables, replication tasks and target tables).

This clear separation enables you to divide and conquer the complexity of the project. - Only one user-provided-service necessary to access the data

Figure 1 HDI - Architecture with namespaces

Prerequisite knowledge:

If you need to dig into the basics first please consider reading this:

- Information about the SDI/DPAgent installation

- Official SAP HELP : Install the Data Provisioning Agent

- Information about SDA, virtual fabric tables (limitations, advantages)

- Supported Remote Sources:

https://launchpad.support.sap.com/#/notes/2600176

Additional sources:

- https://developers.sap.com/group.hana-cloud-extend-2-data-replication.html

https://developers.sap.com/tutorials/hana-cloud-mission-extend-05.html - https://developers.sap.com/tutorials/hana-cloud-mission-extend-08.html

- https://developers.sap.com/group.hana-cloud-extend-2-data-replication.html

Proposed solution

Below is a meta-level conceptualization of the applied approach.

Figure 2 HDI meta concept for remote source system setups

All objects deployed inside an HDI container will fall into the respective database schema <sid_schema>, this is indicated by the dashed lines in the picture above.

We will use dedicated .hdbmigrationtable artifacts to provide the database tables.

In our example we used SDI (HANA Adapter) for the Shangri-la datafoundation and SDA/RTR for the Joermungandr data foundation. Replication tasks can create various objects and we will use some of them.

Virtual tables objects will be created by the replication task, which we will use to read the data from the remote system. There is no need to create virtual tables on your own, except for testing purposes. And Tasks will be used to perform the initial load of the table.

The datalake-reporting container will use synonyms to access the tables inside the datafoundation HDI containers. The data will be exposed to upstream consumers using calculation views.

In essence everytime you add a remote system you repeat the pattern applied to the datafoundations Shangri-la and Joermungandr as indicated here by the bottom right box.

Figure 3 Scalable parts of the architecture

Where your Datafoundation <name>-hdi is a placeholder for the name of your remote system.

Figure 4 meta-level file overview

Implementation

Build Kit

In total you will need the following steps to create this example:

- Privileged user in the datalake enviroment / outside HDI container to create & assign user/privileges/roles

- Remote system connection

- Technical User which is allowed to SELECT and TRIGGER (SDI) on the remote schemas

- Assign Roles & Privileges for SDI

- SDI DPAgent running on remote system, installation guide or https://developers.sap.com/tutorials/hana-cloud-mission-extend-06.html

- Add Agent on to Cloud System

- Remote Source

- Role with privileges on Remote Source

- User-Provided-Service which gets the Roles assigned

- HDI container consumes UPS which is granting the container rights to change the remote source

- Test connection

- Technical User which is allowed to SELECT and TRIGGER (SDI) on the remote schemas

- HDI container per remote source

- Folder structure skeleton

- Edit mta.yaml to be able to consume UPS to access remote source

- Add remote.hdbgrants to define what roles are assigned to #OO and application_user (_RT?)

- Create data structure (.hdbmigrationtables)

- Fill using replication tasks

- Grant READ role on tables to consumers

- Changing .default_access_role to add ALTER privilege for SDI Reptask operations by container

- Adding the _RT user inside DBX as a database connection

- HDI container for upstream modelling artifacts (dl-reporting)

- Consuming data from each of the underlying HDI containers (cfg-grants)

- Creation of synonyms per remote source container

- Creation of calculation views on top of synonyms

- Creation of roles to allow read access on dl-reporting objects

Preparations in the remote source system Shangri-la

You will need a privileged user in the remote system to create users and add privileges

Create a schema with demo tables

CREATE SCHEMA "KUNLUN";

CREATE COLUMN TABLE "KUNLUN"."MOUNTAINS"(

"A" NVARCHAR(412) NOT NULL,

"B" DECIMAL(38) NOT NULL,

"C" NVARCHAR(6) NOT NULL,

"D" NVARCHAR(412) NOT NULL,

"E" NVARCHAR(10),

"TIME_1" TIMESTAMP,

"TIME_2" TIMESTAMP);Create technical user to connect to remote system

For this example we create a technical user to read access data the remote system.

-- [EXECUTE IN Shangri-la] Create roles & user in Shangri-la system to access Shangri-la data remotely from EDEN data lake

CREATE USER JAMES_HILTON PASSWORD ######;

CREATE ROLE REMOTE_SHANGRILA_SDI;

GRANT SELECT, TRIGGER ON SCHEMA "KUNLUN" TO REMOTE_SHANGRILA_SDI;

GRANT REMOTE_SHANGRILA_SDI TO JAMES_HILTON;Quickly generate data (optional)

CREATE ROLE KUNLUN_DEVELOPER;

GRANT SELECT,INSERT, DELETE, CREATE ANY, ALTER, DROP ON SCHEMA "KUNLUN" TO KUNLUN_DEVELOPER;

GRANT KUNLUN_DEVELOPER TO JAMES_HILTON;This is purely optional in case you want to fill the tables with some random data you can use the code below which wraps the internal function SERIES_GENERATE_INTEGER()

<

drop function "KUNLUN"."TF::LOGOS";create FUNCTION "KUNLUN"."TF::LOGOS" ( pin_schema_name NVARCHAR(256), pin_table_name NVARCHAR(256), pin_tablesize INTEGER ) RETURNS TABLE ( sqlQuery NVARCHAR(5000), insertQuery NVARCHAR(5000) ) LANGUAGE SQLSCRIPT SQL SECURITY INVOKER AS

BEGIN

DECLARE l_icount INTEGER = 0;

DECLARE lc_tablemeta_count INTEGER = 0; DECLARE sqlStringPrefix NVARCHAR(5000)= 'SELECT ';--'SELECT GENERATED_PERIOD_START as INTERNAL_ID, ';

DECLARE sqlStringSuffix NVARCHAR(256) = ' from SERIES_GENERATE_INTEGER(1,1,'||:pin_tablesize|| ')';

DECLARE lv_data_type_name NVARCHAR(256) = '';

DECLARE lv_column_name NVARCHAR(256) = '';

DECLARE lv_length INTEGER = 0;

DECLARE lt_mytable TABLE (sqlQuery NVARCHAR(5000));

lt_mytable = select '' as sqlQuery from dummy;

lt_table_meta = select "POSITION", column_name, data_type_name, "LENGTH", scale from "SYS"."TABLE_COLUMNS" where schema_name = :pin_schema_name and table_name = :pin_table_name and column_name not like '%$%' order by "POSITION";

select count(*) into lc_tablemeta_count from :lt_table_meta ;

FOR l_icount IN 1..:lc_tablemeta_count DO

select data_type_name into lv_data_type_name from :lt_table_meta where position = :l_icount;

select "COLUMN_NAME" into lv_column_name from :lt_table_meta where position = :l_icount;

select "LENGTH" into lv_length from :lt_table_meta where position = :l_icount;

-- handle data generation for datatype here

IF lv_data_type_name = 'NVARCHAR'

THEN

lt_mytable = select concat(sqlQuery, 'LEFT(to_nvarchar(newuid())'||','|| lv_length ||')' || ' as ' || lv_column_name || ', ') as sqlQuery from :lt_mytable;

ELSEIF lv_data_type_name = 'DECIMAL'

THEN

lt_mytable = select concat(sqlQuery,'round((rand() * (1 / fraction_of_min_max_range )))'|| ' as ' || lv_column_name || ', ') as sqlQuery from :lt_mytable;

ELSEIF lv_data_type_name = 'SECONDDATE'

THEN

lt_mytable = select concat(sqlQuery,'add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 )'|| ' as ' || lv_column_name || ', ') as sqlQuery from :lt_mytable;

ELSEIF lv_data_type_name = 'TIMESTAMP'

THEN

lt_mytable = select concat(sqlQuery,' add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 ) '|| ' as ' || lv_column_name || ', ') as sqlQuery from :lt_mytable;

END IF;

-- handle data generation for datatype here - END

END FOR;

-- left(sqlQuery, length(sqlQuery)-1) removed the trailing , at the end.

return

select CONCAT(CONCAT(:sqlStringPrefix, left(sqlQuery, length(sqlQuery)-2 )), :sqlStringSuffix) as sqlQuery,

'INSERT INTO "' || :pin_schema_name ||'"."'|| :pin_table_name || '" ' || CONCAT(CONCAT(:sqlStringPrefix, left(sqlQuery, length(sqlQuery)-2 )), :sqlStringSuffix) as insertQuery

from :lt_mytable;

END;The function "KUNLUN"."TF::LOGOS", accepts 3, parameters:

"KUNLUN "."TF::LOGOS"(schema_name, table_name, data_rows) and will provide you two results:

select * from "KUNLUN"."TF::LOGOS"('KUNLUN','MOUNTAINS', 1000001);

sqlQuery: SELECT query to generate the data (you can check if the data fits your purpose before inserting it)

insertSqlQuery: INSERT statement with the above sqlQuery. e.g.

-- query to generate data

SELECT LEFT(to_nvarchar(newuid()),412) as A, round((rand() * (1 / fraction_of_min_max_range ))) as B, LEFT(to_nvarchar(newuid()),6) as C, LEFT(to_nvarchar(newuid()),412) as D, LEFT(to_nvarchar(newuid()),10) as E, add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 ) as TIME_1, add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 ) as TIME_2 from SERIES_GENERATE_INTEGER(1,1,1000001);-- write generated data into target table

INSERT INTO "KUNLUN"."MOUNTAINS" SELECT LEFT(to_nvarchar(newuid()),412) as A, round((rand() * (1 / fraction_of_min_max_range ))) as B, LEFT(to_nvarchar(newuid()),6) as C, LEFT(to_nvarchar(newuid()),412) as D, LEFT(to_nvarchar(newuid()),10) as E, add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 ) as TIME_1, add_seconds(now(),rand() * (1 / fraction_of_min_max_range )*10 ) as TIME_2 from SERIES_GENERATE_INTEGER(1,1,1000001);-- check for generated data in the target table

SELECT * FROM "KUNLUN"."MOUNTAINS";

Preparations in the remote source system Joermungandr

You will need a privileged user in the remote system to create users and add privileges

Same as before we will create roles, privileges and data to consume.

Difference compared to the SDI case you’ll notice that we don’t need to grant the TRIGGER privilege to the remote role.

CREATE USER LOKI PASSWORD ######;

CREATE SCHEMA "MIDGARD";

-- Create Remote Roles to access Joermungandr

CREATE ROLE REMOTE_JOERMUNGANDR_SDA;

GRANT SELECT ON SCHEMA "MIDGARD" TO REMOTE_JOERMUNGANDR_SDA;

GRANT REMOTE_JOERMUNGANDR_SDA TO LOKI;

-- Roles necessary for schema schanges on MIDGARD / creation of TF::LOGOS

CREATE ROLE MIDGARD_DEVELOPER;

GRANT SELECT,INSERT, DELETE, CREATE ANY, ALTER, DROP ON SCHEMA "MIDGARD" TO MIDGARD_DEVELOPER;

GRANT MIDGARD_DEVELOPER TO LOKI;

CREATE COLUMN TABLE "MIDGARD"."SERPENT"(

"A" NVARCHAR(412) NOT NULL,

"B" DECIMAL(38) NOT NULL,

"C" NVARCHAR(6) NOT NULL,

"D" NVARCHAR(412) NOT NULL,

"E" NVARCHAR(10),

"TIME_1" TIMESTAMP,

"TIME_2" TIMESTAMP);To fill the table with dummy data i used the TF::LOGOS function from before.

Preparations in the target system (EDEN)

In the target data lake EDEN we also have to do some preparations in order to read the data inside the HDI containers.

- Creation of remote sources

- Creation of a user-provided-service (EDEN-remote-source-ups) which will grant access to both remote system data

Create the Remote Source in EDEN to access remote system Shangri-la

Use the technical user to connect to the remote system.

Create the Remote Source in EDEN to access remote source system Joermungandr

Execute in system with admin user.

DROP REMOTE SOURCE JOERMUNGANDR_SDA;

CREATE REMOTE SOURCE JOERMUNGANDR_SDA ADAPTER "hanaodbc"

CONFIGURATION 'ServerNode=<virtual_host_name>:<portnumber>;use_haas_socks_proxy=true;scc_location_id=scc_location_id'

WITH CREDENTIAL TYPE 'PASSWORD' USING 'user=LOKI;password=<password>';Figure 5 Remote Source JOERMUNGANDR_SDA in database explorer

After we have created the remote sources in the system and tested them to be working we will go ahead and create a user-provided-service(ups) instance which is able to provide access to these remote systems by consuming the service.

User-Provided-Service creation (EDEN-remote-source-ups)

This service is used to grant the container it connects to the privileges necessary for remote source handling.

User & privileges for remote-source-ups (EDEN-remote-source-ups)

We need a user for the user-provided-service which will be called EDEN_REMOTE_GRANTOR

and assign him the remote source privileges with grant option

CREATE USER EDEN_REMOTE_GRANTOR PASSWORD "ups_user_password";

create role eden_remote_read;

-- Create remote source roles

-- 1. For SDI remote source

CREATE ROLE SHANGRILA_REMOTE_SOURCE;

CREATE ROLE SHANGRILA_REMOTE_SOURCE_GRANTABLE;

-- grant privilege to roles

GRANT CREATE REMOTE SUBSCRIPTION, CREATE VIRTUAL TABLE, PROCESS REMOTE SUBSCRIPTION EXCEPTION, ALTER ON REMOTE SOURCE "Shangri-la_SDI" TO SHANGRILA_REMOTE_SOURCE;

GRANT CREATE REMOTE SUBSCRIPTION, CREATE VIRTUAL TABLE, PROCESS REMOTE SUBSCRIPTION EXCEPTION, ALTER ON REMOTE SOURCE "Shangri-la_SDI" TO SHANGRILA_REMOTE_SOURCE_GRANTABLE WITH GRANT OPTION;

-- grant role to eden-ups-user

grant SHANGRILA_REMOTE_SOURCE to eden_remote_read with admin option;

grant SHANGRILA_REMOTE_SOURCE_GRANTABLE to eden_remote_read with admin option;

-- 2. for SDA remote source

CREATE ROLE JOERMUNGANDR_REMOTE_SOURCE;

CREATE ROLE JOERMUNGANDR_REMOTE_SOURCE_GRANTABLE;

-- grant privilege to roles

GRANT CREATE REMOTE SUBSCRIPTION, CREATE VIRTUAL TABLE, PROCESS REMOTE SUBSCRIPTION EXCEPTION, ALTER ON REMOTE SOURCE "JOERMUNGANDR_SDA" TO JOERMUNGANDR_REMOTE_SOURCE;

GRANT CREATE REMOTE SUBSCRIPTION, CREATE VIRTUAL TABLE, PROCESS REMOTE SUBSCRIPTION EXCEPTION, ALTER ON REMOTE SOURCE "JOERMUNGANDR_SDA" TO JOERMUNGANDR_REMOTE_SOURCE_GRANTABLE WITH GRANT OPTION;

-- grant role to eden-ups-user

grant JOERMUNGANDR_REMOTE_SOURCE to eden_remote_read with admin option;

grant JOERMUNGANDR_REMOTE_SOURCE_GRANTABLE to eden_remote_read with admin option;Creation of the user-provided-service in BTP

Inside BTP you need to create the UPS inside the space where the hdi-container will exist.

You need to go into your Subaccount > Instances > Click on create and select “User-Provided Service Instance”

Make sure to provide the user eden_remote_grantor in the wizard.

A template for this exercise looks like this, change the host and password according to your environment.

{

"host": "your-host-string",

"password": "ups-user-password",

"port": "443",

"tags": [

"hana"

],

"user": "eden_remote_grantor"

}

Obtaining HostString

The HostString can be obtained from inside BTP or the SAP HANA Cloud Central:

Figure 6 Obtain HostString from BTP - SQL Endpoint

Figure 7 Obtain HostString from SAP HANA Cloud Central

SAP Business Application Studio - HANA projects

Shangri-la Project

In the next chapters I’ll explain the most important parts of one of the datafoundation containers.

This will start with the overview of the file/folder structure (File Skeleton) and go over the different concepts that this implies like

- Namespaces

- Tables & Replication Tasks to fill them

- Consumed Privileges

- Provided Privileges

- yaml (container name and ups consumption)

File Skeleton

┣ 📂db

┃ ┣ 📂cfg

┃ ┃ ┣ 📜.hdiconfig

┃ ┃ ┗ 📜remote.hdbgrants

┃ ┣ 📂src

┃ ┃ ┣ 📂auth

┃ ┃ ┃ ┣ 📜Read.hdbrole

┃ ┃ ┃ ┗ 📜Read_GRANTABLE.hdbrole

┃ ┃ ┣ 📂data

┃ ┃ ┃ ┗ 📜TG_KUNLUN_MOUNTAINS.hdbmigrationtable

┃ ┃ ┣ 📂defaults

┃ ┃ ┣ 📂replication

┃ ┃ ┃ ┗ 📜RT_KUNLUN_MOUNTAINS.hdbreptask

┃ ┃ ┣ 📜.hdiconfig

┃ ┃ ┗ 📜.hdinamespace

┃ ┣ 📜.env

┃ ┣ 📜.gitignore

┃ ┣ 📜package-lock.json

┃ ┗ 📜package.json

┣ 📜.gitignore

┗ 📜mta.yaml

Namespaces defined

.hdinamespace file in /src/ folder:

{

"name": "dl.df.shangri-la",

"subfolder": "append"

}Given our file structure as mentioned in the file skeleton this will lead to the following namespaces created

- df.shangri-la.auth

- df.shangri-la.data

- df.shangri-la.replication

Before that we have three namespaces that are specific to the “layer” we are operating in:

- (Datalake)

- df. (Data foundation layer)

- df.shangri-la. (remote system namespace)

Container configuration folder

Provide files to self-service the roles necessary for remote source management to the container.

Authorizations (db/src/auth/)

Provide a READ access role to access the tables inside this container.

Database Tables (db/src/data/)

Provide a description of the database tables.

In HDI we can choose between two separate artifacts for this.

- .hdbtable

- .hdbmigrationtable

For this exercise we opted for the .hdbmigrationtable artifact.

Our main reason for this is the option to adapt the table structure without implicitly dropping the data.

If during the development of our project we see that some changes are necessary later on to the structure of the table, we can do so without having to copy/reload the table, which can be costly for huge tables.

In addition to that we have explicit versioning of database table changes.

Official documentation for .hdbmigrationtable (perma-link):

https://help.sap.com/viewer/c2cc2e43458d4abda6788049c58143dc/LATEST/en-US/52d1f5acfa754a7887e2122664...

Replication Tasks (db/src/replication/)

Here we define the replication tasks used to move data from the remote systems into our target system.

First you’ll have to connect a .hdbreptask to a remote source. Once that is successful you need to select the table(s) you want to replicate.

Important!If you put multiple remote source objects defined inside one .hdbreptask file then ALL the actions defined in the reptasks will be executed upon deployment.

In case you have defined target tables to be truncated or dropped and recreated this happens for all tables inside the .hdbreptask. Even if you only change one remote source object configuration as there is no delta-mechanism available to detect and deploy only the changed remote source object configuration.

A .hdbreptask file when deployed will, depending on the options in the reptask, generate multiple runtime objects, , in this container we used SDI Replication with HANA Adapter:

- Virtual table to read from the remote table

- TASK artifact performing the initial load (+ stored procedures starting the task execution)

- Remote Subscription handling the real-time replication aspect (+ stored procedures starting the remote subscription)

- Trigger/shadow/queue tables in the remote system

- Target Table (when GENERATE TARGET TABLE is flagged as true in the .hdbreptask)

In our scenario we defined the following options:

| Replication Behavior: | Initial + Realtime |

Virtual Table Prefix: | VT_ |

No special partition or projection options

Target Properties

Targe Table: we choose the target table provided by the .hdbmigrationtable object

Drop Target Table on activation: False

Generate Target Table on activation: False

Truncate Table on execution: True

For more details please have a look into the SDI&SDQ Modeling Guide for SAP Business Application Studio

https://help.sap.com/products/HANA_SMART_DATA_INTEGRATION/cc7ebd3f344a4cdda20966a7617f52d8/b72a6833d...?

Shangri-la MTA.yaml

_schema-version: "2.1"

ID: shangri-la

version: 0.0.1

modules:

- name: shangri_la-data-db

type: hdb

path: db

requires:

- name: shangri_la-data-hdi

properties:

TARGET_CONTAINER: ~{hdi-container-name}

- name: EDEN-remote-source-ups

resources:

- name: shangri_la-data-hdi

type: com.sap.xs.hdi-container

parameters:

config:

schema: SHANGRI_LA_DATA_FOUNDATION

properties:

hdi-container-name: ${service-name}

- name: EDEN-remote-source-ups

type: org.cloudfoundry.existing-serviceFinal words on the data foundation containers

As you have seen this template for adding remote source is lightweight easy to manage as we have achieved the following:

- Remote system data can be managed separately

- One user-provided-service can be used across all remote sources

- Follows the divide and conquer pattern to split complexity into smaller, more digestible parts

Move data from Shangri-la to EDEN

Starting the data transfer is simply done by executing the TASK's generated by the .hdbreptask file.

Once the task is successfully completed, initial load is done and if configured - real time replication will start, visible in the remote subscription monitor.

EDEN-Reporting Project

The EDEN-reporting project is containing the calculation views & synonyms which will access all of the data in the previously created data foundation by simply connection to the HDI container and consuming the provided READ roles.

File Skeleton

📦db

┣ 📂cfg

┃ ┣ 📂joermungandr

┃ ┃ ┣ 📜joermungandr.hdbgrants

┃ ┃ ┗ 📜joermungandr.hdbsynonymconfig

┃ ┣ 📂shangri-la

┃ ┃ ┣ 📜shangri-la.hdbgrants

┃ ┃ ┣ 📜shangri-la.hdbsynonymconfig

┃ ┗ 📜.hdiconfig

┣ 📂src

┃ ┣ 📂auth

┃ ┃ ┗ 📜Read.hdbrole

┃ ┣ 📂synonym

┃ ┃ ┣ 📜.hdinamespace

┃ ┃ ┣ 📜joermungandr.hdbsynonym

┃ ┃ ┗ 📜shangri-la.hdbsynonym

┃ ┣ 📂view

┃ ┃ ┗ 📂baseview

┃ ┣ 📜.hdiconfig

┃ ┗ 📜.hdinamespace

┣ 📜.env

┣ 📜.gitignore

┣ 📜package-lock.json

┗ 📜package.json

Namespaces defined

.hdinamespace file in /src/ folder:

{

"name": "dl.reporting",

"subfolder": "append"

}Given our file&folder structure as mentioned in the file skeleton this will lead to the following namespaces created

- reporting.auth

- reporting.synonym

- reporting.view

- reporting.view.baseview

Before that we have two namespaces that are specific to the “layer” we are operating in:

- (Datalake)

- reporting. (Reporting Layer)

Container configuration folder

In each of the data foundation HDI containers we provided roles to self-service the READ access to the tables, now we will make use of them.

You need to be connected to the service in order for this to work.

db/src/cfg/shangri-la/ shangri-la.hdbgrants

{

"shangri_la-data-hdi": {

"object_owner": {

"schema_roles": [ "dl.df.shangri-la.auth::Read#" ]

},

"application_user": {

"schema_roles": [ "dl.df.shangri-la.auth::Read" ]

}

}

}In the provided .hdbsynonymconfig we make use of the feature to abstract from a concrete schema name by using the schema.configure key. During deyployment the schema will be replaced by the schema defined by the service connected.

db/src/cfg/shangri-la/ shangri-la.hdbsynonymconfig

{

"SHANGRI_LA_DATA_FOUNDATION.TG_KUNLUN_MOUNTAINS": {

"target": {

"object": "dl.df.shangri-la.data::TG_KUNLUN_MOUNTAINS",

"schema.configure": "shangri_la-data-hdi/schema"

}

}

}Authorizations (db/src/auth/)

Provide a READ access role to access all calculation views inside and below the view folder

{

"SHANGRI_LA_DATA_FOUNDATION.TG_KUNLUN_MOUNTAINS": {

"target": {

"object": "dl.df.shangri-la.data::TG_KUNLUN_MOUNTAINS",

"schema.configure": "shangri_la-data-hdi/schema"

}

}

}The usage of the wildcard pattern (%) ensures that we don’t need to adapt the roles whenever we add new views in the package. During deployment the roles will be adapted automatically as the wildcards are evaluated.

Synonyms (db/src/synonym/)

In order to access the tables in the data foundation, which are outside of the reporting HDI container, we need to use synonyms.

db/src/synonyms/ shangri-la.hdbsynonym

{

"SHANGRI_LA_DATA_FOUNDATION.TG_KUNLUN_MOUNTAINS": {}

}Calculation Views (db/src/view/ & db/src/view/baseview/)

For this example we created a calculation view in the baseview folder which propagates the columns of the tables TG_KUNLUN_MOUNTAINS.

It serves the purpose to distinguish easily between calculation views which expose the table as is (baseviews) and the additional calculation views which add additional logic on top.

In case your business users want to consume the raw tables creating these baseviews also has the benefit that you do not give direct access to the data foundation layer to upstream consumers (e.g. reporting tools) but rather through the reporting layer.

One additional view will be created in the db/src/view/ folder which will perform a simple aggregation.

In the end we will have two views.

And as you can see the namespace clearly distinguishes them.

The roles are dynamically extended by usage of the wildcard syntax (%) during deployment

select * from roles where role_schema_name = 'EDEN_REPORTING';

select SCHEMA_NAME, OBJECT_NAME, "PRIVILEGE" from "SYS"."GRANTED_PRIVILEGES" where GRANTEE_SCHEMA_NAME = 'EDEN_REPORTING' and GRANTEE = 'dl.reporting.auth::Read'Eden-Reporting MTA.yaml

_schema-version: "2.1"

ID: eden-reporting

version: 0.0.1

modules:

- name: eden-reporting-db

type: hdb

path: db

requires:

- name: eden-reporting-hdi

properties:

TARGET_CONTAINER: ~{hdi-container-name}

- name: shangri_la-data-hdi

- name: joermungandr-data-hdi

resources:

- name: eden-reporting-hdi

type: com.sap.xs.hdi-container

parameters:

config:

schema: EDEN_REPORTING

properties:

hdi-container-name: ${service-name}

- name: shangri_la-data-hdi

type: org.cloudfoundry.existing-service

- name: joermungandr-data-hdi

type: org.cloudfoundry.existing-serviceConclusion

Initially i teased the following outcome

- Clear distinction between persistence (Shangri-la, Joermungandr) and modelling space (EDEN)

- If possible all runtime objects have a HDI design-time counterpart and are HDI managed

- Each remote system is managed in a separate HDI container (with virtual tables, replication tasks and target tables).

- Only one user-provided-service necessary to access the data

And this was achieved.

If you have feedback & questions please let me know in the comments what would be helpful to you.

Feel free to follow me in case you want further updates on SAP HANA Cloud / HDI / Performance optimization / sap hana modelling topics.

Thanks and Regards,

Yves

- SAP Managed Tags:

- SAP HANA Cloud, SAP HANA database

Labels:

4 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,661 -

Business Trends

87 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

64 -

Expert

1 -

Expert Insights

178 -

Expert Insights

273 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

Kafka

1 -

Life at SAP

784 -

Life at SAP

11 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

327 -

Replication Flow

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,886 -

Technology Updates

405 -

Workload Fluctuations

1

Related Content

- explore the business continuity recovery sap solutions on AWS DRS in Technology Blogs by Members

- Unlocking Full-Stack Potential using SAP build code - Part 1 in Technology Blogs by Members

- Consuming CAPM Application's OData service into SAP Fiori Application in Business Application Studio in Technology Blogs by Members

- Deep dive into Q4 2023, What’s New in SAP Cloud ALM for Implementation Blog Series in Technology Blogs by SAP

- Harnessing the Power of SAP HANA Cloud Vector Engine for Context-Aware LLM Architecture in Technology Blogs by SAP

Top kudoed authors

| User | Count |

|---|---|

| 13 | |

| 10 | |

| 10 | |

| 7 | |

| 7 | |

| 6 | |

| 5 | |

| 5 | |

| 5 | |

| 4 |