Connect Cloud Integration with Azure Event Hubs

former_member23

01-19-2022

4:51 PM

SAP Integration Suite comes with SAP Event Mesh, which is a broker for event notification handling that allows applications to trigger and react to asynchronous business events.

However, it might be the case that you require to use different event brokers in your landscape.

Microsoft Cloud Azure contains the service Event Hubs, which is a simple, trusted and scalable ingestion service for streaming millions of events per second from any source to their pipelines reacting to business events.

In this blog post we will see how we can send events from Cloud Integration to Event Hubs and how to consume them. Event Hubs allow this by means of AMQP, Apache Kafka and HTTP protocols. Each one of these need different configurations and even different credentials in Event Hubs. More information on supported protocols might be found in Exchange events between consumers and producers.

You can get an Azure free account in following URL https://azure.microsoft.com/en-us/free/, which is enough for testing the following scenarios.

For sending events to Azure Event Hubs with the Cloud Integration AMQP adapter, you need a namespace and an event hub. As namespace you can use any pricing tier, even basic (more information on Azure Event Hubs pricing tiers on Event Hubs pricing).

In the following site Create an event hub using Azure portal, you find how to create a Resource Group, a Namespace and an Event Hub.

For getting the credentials, you need to go in the namespace to the shared access policies. There you can use the existing one or create any additional. In the policy, copy the connection string-primary or secondary key.

Taking for example the following connection string, you get the user (SharedAccessKeyName) and the password (SharedAccessKey) as follows:

With this information you can create a User Credentials artifact in Security Material in Cloud Integration.

Next, comes the integration flow to send the events from Cloud Integration to Azure Event Hubs. For sake of simplicity, I show you a simple integration flow triggered by a timer and where a sample payload is hardcoded as a json in a content modifier. Also, a header Content-Type = application/json is set in the content modifier.

In the AMQP adapter you must set as Host the host name you see in the overview of the namespace (also to be found in the connection string). Port must be 5671, Connection with TLS --> true and Authentication --> SASL. As Credential Name use the one created above.

As Destination Type select Queue and give the name of the event hub as Destination Name (also found in the namespace overview).

Once you deploy the integration flow, you should see a completed message.

In the namespace or in the event hub logs you will see the incoming messages or events.

Update 2nd March 2023: The sender AMQP adapter does not support Consumer Groups, just queues. However Azure Event Hubs requires to put the Consumer Group as queue name. As a result the integration flow consumes again and again the messages from Event Hubs. According to this, we can state that consumption of events from Azure Event Hubs is not supported through AMQP adapter. The following section is just for connection test purposes.

In this chapter, you will see how to consume the events sent to the event hub in the previous chapter. For that, use an integration flow with a sender AMQP adapter. You must set as Host the host name you see in the overview of the namespace. Port must be 5671, Connection with TLS --> true and Authentication --> SASL. As Credential Name use the one created above in the previous chapter.

As queue name use the following pattern:

In the event hub overview, you will find the partition count (2 in the example) and the consumer group name ($Default is the default consumer group).

After deploying the integration flow, you should be able to see the messages generated for the consumed events.

In the event hub logs you see also the outgoing events in red. The incoming events generated in the previous chapter are colored in blue.

To send messages to Azure Event Hubs with the Kafka adapter, you need a namespace with at least a pricing tier standard, to have the Kafka surface enabled (more information on Azure Event Hubs pricing tiers on Event Hubs pricing). You can create an event hub in the namespace or it will be created automatically when sending events to a particular event hub, if not already created.

Here you need to also get the shared access policies from the namespace.

With the following connection string you get like this the user (constant $ConnectionString) and password (the whole endpoint):

So, you can create a User Credentials artifact in Security Material in Cloud Integration.

Like in the previous integration flow, you see here a sample one. In the Kafka adapter use the host name you get in the namespace overview as Host and 9093 as port. Authentication must be SASL, Connect with TLS true and SASL Mechanism PLAIN. Use the credential you created before.

As topic enter the event hub name where you want to send your events.

Once deployed, you will see a completed message in the message monitor.

In the namespace and event hub logs you should be able to see your incoming events.

To consume the events sent to the event hub in the previous chapter, you use an integration flow with a sender Kafka adapter. You must set as Host the host name you see in the overview of the namespace. Port must be 9093, Connection with TLS --> true, Authentication --> SASL and SASL Mechanism --> true. As Credential Name use the one created above in the previous chapter.

In the processing tab enter the event hub name as topic.

Once the integration flow is deployed, you will be able to see the messages generated from the consumed events.

You will see also the incoming events in red in the event hub logs.

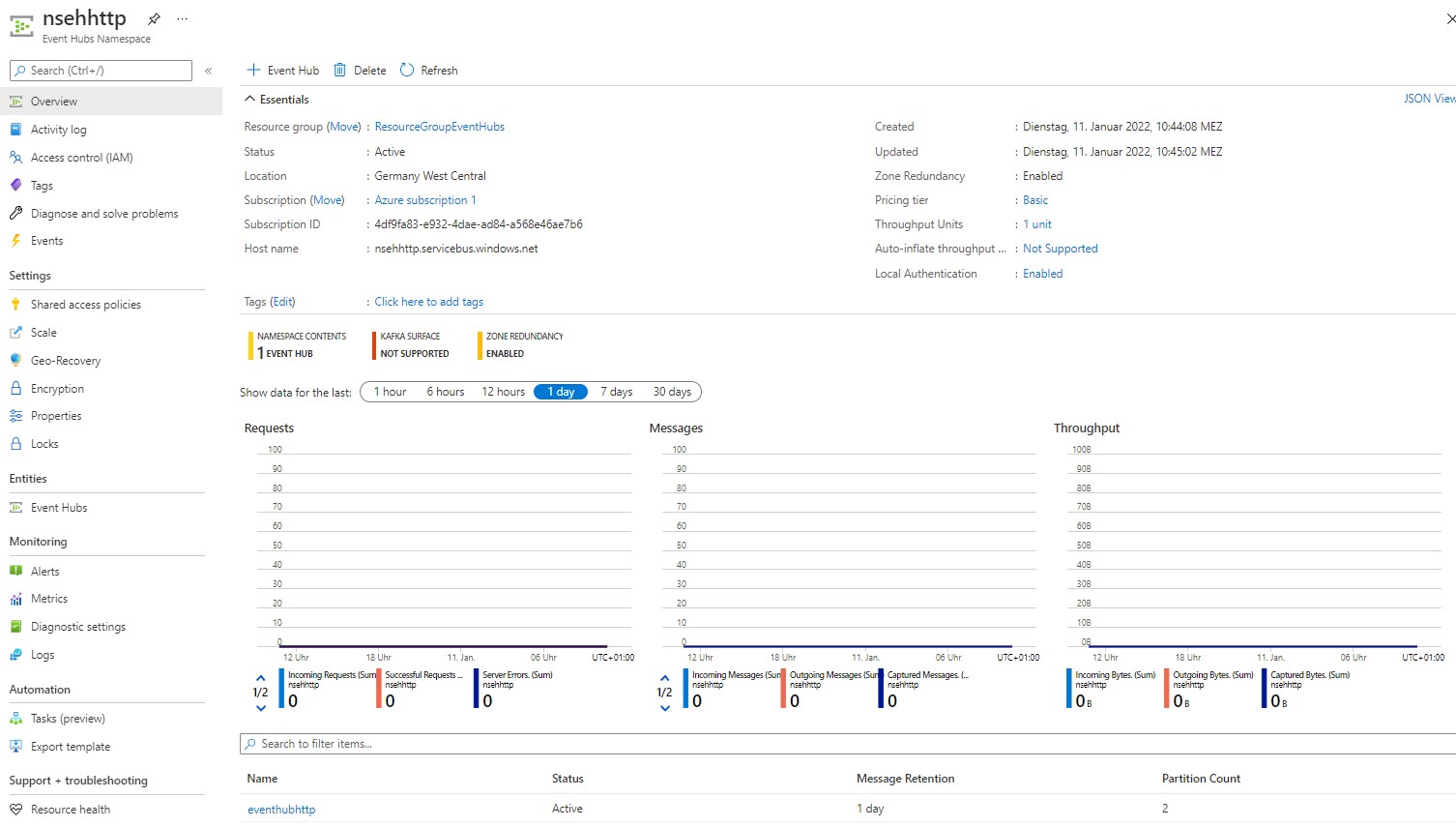

Sending events to Azure Event Hubs with the Cloud Integration Http adapter requires again a namespace and an event hub. As namespace you can use any pricing tier, even basic.

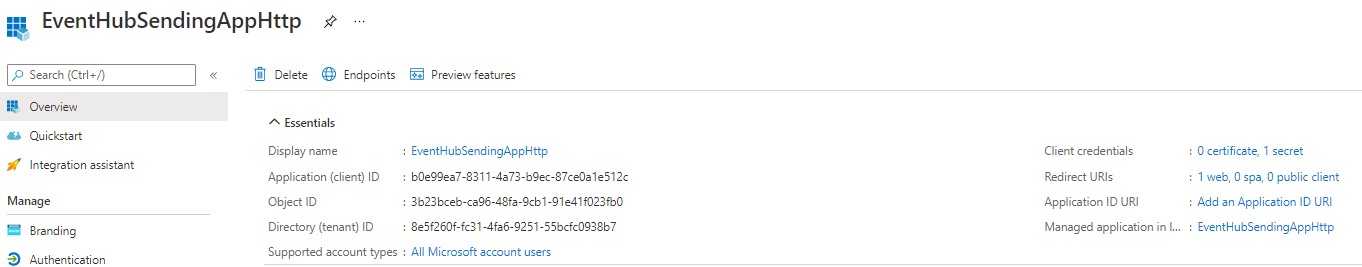

Here you need, as additional step, to register an application, which will act as OAuth2 client. For that, go to Azure Active Directory --> App registrations and add a new application. From the overview, you need to write down application ID and tenant ID, which will be used in the security material.

In the Authentication section of the application, you need to give also a Web Redirect URI. Use the following url:

In the Certificates & secrets section, add a new client secret and write it down, as it will be used also in the security material.

In the API permissions section, add the API Microsoft.EventHubs to grant access to it.

As last step in Azure, you need to assign roles for sending events to Event Hubs to the application acting as OAuth2 client and to the user account whose resources the application wants to get access to (the user account where the namespace was created). This is done in Event Hubs--><your namespace>-->Access control (IAM)-->Role assignments. You need to assign there the role Azure Event Hubs Data Sender.

Next you can create the security material in Cloud Integration. In that case you need an OAuth2 Authorization Code. You obtained the needed information in the previous steps.

Once saved, you must still authorize the artifact.

You will get a success message and your artifact will be showed with status Deployed.

Again, you can use the following integration flow to send events through http adapter. In the http adapter use the host name you get in the namespace overview as Address. As Authorization select None as it is handled in a previous step.

Before the adapter call, add a groovy script to get the access token from the OAuth2 Authorization Code you created above and add it as authorization header.

Once the integration flow is deployed, you should see a successful message in the monitor.

Also, you should be able to see the incoming messages in the Event Hub.

Consumption of events from Azure Event Hubs is not supported through Https adapter.

In this blog post you have seen three possibilities to connect your Cloud Integration tenant with Azure Event Hubs to send and consume events or messages.

However, you might need to use a different event broker in your landscape.

Microsoft Azure offers Event Hubs, a simple, trusted, and scalable ingestion service that streams millions of events per second from any source into pipelines that react to business events.

In this blog post we will see how to send events from Cloud Integration to Event Hubs and how to consume them. Event Hubs supports this through the AMQP, Apache Kafka, and HTTP protocols. Each of these needs a different configuration and even different credentials in Event Hubs. More information on the supported protocols can be found in Exchange events between consumers and producers.

You can get a free Azure account at https://azure.microsoft.com/en-us/free/, which is enough for testing the following scenarios.

Pushing events through AMQP Adapter

To send events to Azure Event Hubs with the Cloud Integration AMQP adapter, you need a namespace and an event hub. The namespace can use any pricing tier, even Basic (for more information on the pricing tiers, see Event Hubs pricing).

The page Create an event hub using Azure portal describes how to create a resource group, a namespace, and an event hub.

Event Hub Namespace

To get the credentials, open the shared access policies of the namespace. There you can use the existing policy or create an additional one. In the policy, copy the connection string for the primary or the secondary key.

Shared access policies

Taking the following connection string as an example, you get the user (SharedAccessKeyName) and the password (SharedAccessKey) as follows:

- Endpoint=sb://nsehamqp.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=**********************************************

- User: RootManageSharedAccessKey

- Password: **********************************************
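The mapping above can be sketched in a few lines of Python. This is only an illustration of how the connection string splits into the two values; the function names are hypothetical and not part of Cloud Integration or Azure.

```python
def parse_connection_string(connection_string: str) -> dict:
    """Split an Event Hubs connection string into its key/value parts."""
    parts = {}
    for segment in connection_string.strip().split(";"):
        if not segment:
            continue
        # Split on the first "=" only, because Base64 keys often end in "=" padding.
        key, _, value = segment.partition("=")
        parts[key] = value
    return parts


def amqp_user_credentials(connection_string: str) -> tuple:
    """Return (user, password) as entered in the User Credentials artifact."""
    parts = parse_connection_string(connection_string)
    return parts["SharedAccessKeyName"], parts["SharedAccessKey"]
```

Splitting on the first "=" only matters here, because the shared access key itself may contain "=" characters.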

With this information you can create a User Credentials artifact in Security Material in Cloud Integration.

User Credentials

Next comes the integration flow that sends the events from Cloud Integration to Azure Event Hubs. For the sake of simplicity, I use a simple integration flow that is triggered by a timer and hardcodes a sample JSON payload in a content modifier. The content modifier also sets the header Content-Type = application/json.

In the AMQP adapter, set Host to the host name shown in the overview of the namespace (it is also part of the connection string). Set Port to 5671, Connect with TLS to true, and Authentication to SASL. As Credential Name, use the artifact created above.

Integration Flow

As Destination Type, select Queue, and enter the name of the event hub as Destination Name (also found in the namespace overview).

AMQP adapter
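As a compact summary of the receiver AMQP adapter settings, the following sketch derives the host from the connection string and collects the values named in the text. The dictionary keys simply mirror the adapter field labels, and the function name and the credential name in the usage note are hypothetical.

```python
from urllib.parse import urlparse


def amqp_adapter_settings(connection_string: str, event_hub: str, credential_name: str) -> dict:
    """Collect the receiver AMQP adapter values described in the text."""
    # Find the "Endpoint=sb://<namespace>.servicebus.windows.net/" segment.
    endpoint = next(
        segment.split("=", 1)[1]
        for segment in connection_string.split(";")
        if segment.startswith("Endpoint=")
    )
    return {
        "Host": urlparse(endpoint).hostname,  # host name of the namespace
        "Port": 5671,                         # AMQP over TLS
        "Connect with TLS": True,
        "Authentication": "SASL",
        "Credential Name": credential_name,
        "Destination Type": "Queue",
        "Destination Name": event_hub,
    }
```

For example, `amqp_adapter_settings(connection_string, "myhub", "EventHubsAmqp")` would return the values to enter in the adapter for an event hub named myhub.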

Once you deploy the integration flow, you should see a completed message.

Message Monitor

In the namespace or in the event hub logs you will see the incoming messages or events.

Event Hub Logs

Consuming events through AMQP Adapter

Update 2 March 2023: The sender AMQP adapter does not support consumer groups, only queues. However, Azure Event Hubs requires the consumer group to be part of the queue name. As a result, the integration flow consumes the same messages from Event Hubs again and again. Consumption of events from Azure Event Hubs is therefore not supported through the AMQP adapter. The following section is for connection test purposes only.

In this chapter, you will see how to consume the events sent to the event hub in the previous chapter. For that, use an integration flow with a sender AMQP adapter. Set Host to the host name shown in the overview of the namespace, Port to 5671, Connect with TLS to true, and Authentication to SASL. As Credential Name, use the artifact created in the previous chapter.

Integration Flow

As queue name use the following pattern:

- <event_hub_name>/ConsumerGroups/<consumer_group_name>/Partitions/<Partition_number>

AMQP adapter
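To make the queue name pattern concrete, here is a small sketch that expands it for every partition of an event hub. It assumes that partitions are numbered from 0 up to the partition count minus one, which is how Event Hubs identifies them; the function name and the event hub name are hypothetical.

```python
def consumer_queue_names(event_hub: str, consumer_group: str, partition_count: int) -> list:
    """Build one queue name per partition, following the pattern above."""
    return [
        f"{event_hub}/ConsumerGroups/{consumer_group}/Partitions/{partition}"
        for partition in range(partition_count)
    ]


# Example: an event hub with two partitions and the default consumer group.
for name in consumer_queue_names("myhub", "$Default", 2):
    print(name)
```

Each sender AMQP adapter reads from exactly one of these names, so an event hub with two partitions needs two queue names to cover all events.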

In the event hub overview, you will find the partition count (2 in the example) and the consumer group name ($Default is the default consumer group).

Event Hub

After deploying the integration flow, you should be able to see the messages generated for the consumed events.

Message Monitor

In the event hub logs you also see the outgoing events in red. The incoming events generated in the previous chapter are shown in blue.

Event Hub Logs

Pushing events through Kafka Adapter

To send messages to Azure Event Hubs with the Kafka adapter, you need a namespace in at least the Standard pricing tier, so that the Kafka surface is enabled (for more information on the pricing tiers, see Event Hubs pricing). You can create an event hub in the namespace yourself; if it does not exist yet, it is created automatically when you send events to it.

Event Hub Namespace

Here, too, you need the shared access policies of the namespace.

Access Policies

From the following connection string you get the user (the constant $ConnectionString) and the password (the whole connection string) like this:

- Endpoint=sb://nsehkafka.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=**********************************************

- User: $ConnectionString

- Password: Endpoint=sb://nsehkafka.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=**********************************************
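In contrast to the AMQP case, the Kafka credentials are not extracted from the connection string: the user is a fixed literal and the password is the string itself. A minimal sketch, with a hypothetical function name and a basic sanity check added for illustration:

```python
REQUIRED_KEYS = ("Endpoint", "SharedAccessKeyName", "SharedAccessKey")


def kafka_user_credentials(connection_string: str) -> tuple:
    """Return (user, password) for the Kafka User Credentials artifact."""
    connection_string = connection_string.strip()
    present = {segment.partition("=")[0] for segment in connection_string.split(";")}
    missing = [key for key in REQUIRED_KEYS if key not in present]
    if missing:
        raise ValueError(f"connection string lacks: {', '.join(missing)}")
    # The user is always the literal "$ConnectionString";
    # the password is the complete connection string.
    return "$ConnectionString", connection_string
```

The check only guards against pasting a truncated string; it does not validate the key itself.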

With this information you can create a User Credentials artifact in Security Material in Cloud Integration.

User Credential

As in the previous integration flow, a sample flow is shown here. In the Kafka adapter, use the host name from the namespace overview as Host and 9093 as port. Set Authentication to SASL, Connect with TLS to true, and SASL Mechanism to PLAIN. Use the credential you created before.

Integration Flow

As topic, enter the name of the event hub to which you want to send your events.

Kafka adapter
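For readers who want to verify the connection outside Cloud Integration, the adapter settings correspond roughly to the following Kafka client properties. The sketch uses librdkafka-style property names (as accepted by clients such as confluent-kafka), which is an assumption and not something the adapter exposes; the function name is hypothetical.

```python
def event_hubs_kafka_properties(namespace_host: str, connection_string: str) -> dict:
    """Map the Kafka adapter settings to librdkafka-style client properties."""
    return {
        "bootstrap.servers": f"{namespace_host}:9093",  # Host and port 9093
        "security.protocol": "SASL_SSL",                # SASL with TLS
        "sasl.mechanism": "PLAIN",                      # SASL Mechanism PLAIN
        "sasl.username": "$ConnectionString",           # constant user name
        "sasl.password": connection_string,             # whole connection string
    }
```

The topic is not part of these properties; as in the adapter, it is the event hub name and is passed when producing or subscribing.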

Once deployed, you will see a completed message in the message monitor.

Message Monitor

In the namespace and event hub logs you should be able to see your incoming events.

Event Hub Logs

Consuming events through Kafka Adapter

To consume the events sent to the event hub in the previous chapter, use an integration flow with a sender Kafka adapter. Set Host to the host name shown in the overview of the namespace, Port to 9093, Connect with TLS to true, Authentication to SASL, and SASL Mechanism to PLAIN. As Credential Name, use the artifact created in the previous chapter.

Integration Flow

In the processing tab enter the event hub name as topic.

Kafka adapter

Once the integration flow is deployed, you will be able to see the messages generated from the consumed events.

Message Monitor

You will also see the outgoing events in red in the event hub logs.

Event Hub Logs

Pushing events through HTTP Adapter

Sending events to Azure Event Hubs with the Cloud Integration HTTP adapter again requires a namespace and an event hub. The namespace can use any pricing tier, even Basic.

Event Hub Namespace

Here you need an additional step: register an application that will act as the OAuth2 client. To do so, go to Azure Active Directory --> App registrations and add a new application. From the overview, note the application ID and the tenant ID, which will be used in the security material.

App registrations

In the Authentication section of the application, you also need to provide a Web Redirect URI. Use the following URL:

- https://<your cloud integration tenant management node>/itspaces/odata/api/v1/OAuthTokenFromCode

Redirect URI

In the Certificates & secrets section, add a new client secret and note it, as it will also be used in the security material.

Certificates and secrets

In the API permissions section, add the API Microsoft.EventHubs to grant access to it.

API permissions

As the last step in Azure, assign the role for sending events to Event Hubs both to the application acting as the OAuth2 client and to the user account whose resources the application wants to access (the user account under which the namespace was created). You do this under Event Hubs --> <your namespace> --> Access control (IAM) --> Role assignments, where you assign the role Azure Event Hubs Data Sender.

Role assignments

Next, create the security material in Cloud Integration. In this case you need an OAuth2 Authorization Code artifact. You obtained the required information in the previous steps.

- Tenant id: 8e5f260f-fc31-4fa6-9251-55bcfc0938b7

- Client id: b0e99ea7-8311-4a73-b9ec-87ce0a1e512c

- Client secret: *************************************

- Authorization URL: https://login.microsoftonline.com/<Tenant id>/oauth2/v2.0/authorize

- Token Service URL: https://login.microsoftonline.com/<Tenant id>/oauth2/v2.0/token

- User Name: user account where the namespace was created

- Scope: https://eventhubs.azure.net/.default

OAuth2 Authorization Code
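Both URLs differ only in the tenant ID and the final path segment, so they can be derived mechanically. The following sketch shows this; the function name and dictionary keys are hypothetical, while the URL layout and the scope are the ones listed above.

```python
def azure_oauth2_settings(tenant_id: str) -> dict:
    """Derive the OAuth2 URLs for the security material from the tenant ID."""
    base = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0"
    return {
        "authorization_url": f"{base}/authorize",
        "token_service_url": f"{base}/token",
        # Scope for Event Hubs as used in the artifact.
        "scope": "https://eventhubs.azure.net/.default",
    }


settings = azure_oauth2_settings("8e5f260f-fc31-4fa6-9251-55bcfc0938b7")
print(settings["authorization_url"])
print(settings["token_service_url"])
```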

Once saved, you must still authorize the artifact.

Authorize OAuth2 Authorization Code

You will get a success message and your artifact will be shown with status Deployed.

OAuth2 Authorization Object deployed

Again, you can use the following integration flow to send events through the HTTP adapter. In the HTTP adapter, use the host name from the namespace overview as Address. As Authorization, select None, because the authorization header is set in a previous step.

Integration Flow

Before the adapter call, add a Groovy script that gets the access token from the OAuth2 Authorization Code artifact created above and adds it as the Authorization header.

Groovy script
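The effect of the script and the adapter call together can be illustrated outside Cloud Integration with a short Python sketch. It only builds the request and does not send it. Two things are assumptions that the post does not state: the access token is passed in as a plain string (in the integration flow it comes from the deployed OAuth2 Authorization Code artifact), and the `/<event hub>/messages` path follows the "Send event" operation of the Event Hubs REST API. The function name is hypothetical.

```python
import json
import urllib.request


def build_send_request(namespace_host: str, event_hub: str,
                       access_token: str, event: dict) -> urllib.request.Request:
    """Build (but do not send) an HTTP request that posts one event."""
    # Assumption: REST "Send event" path; not stated in the post.
    url = f"https://{namespace_host}/{event_hub}/messages"
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # This is the header the script adds before the adapter call.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the event, provided the token is valid and the role assignment described above is in place.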

Once the integration flow is deployed, you should see a successful message in the monitor.

Message Monitor

Also, you should be able to see the incoming messages in the Event Hub.

Event Hub Logs

Consuming events through HTTP Adapter

Consumption of events from Azure Event Hubs is not supported through the HTTP adapter.

Summary

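In this blog post you have seen three ways to connect your Cloud Integration tenant with Azure Event Hubs: the AMQP, Kafka, and HTTP adapters. All three can send events, but only the Kafka adapter supports consuming them; the AMQP adapter consumes the same messages repeatedly, and the HTTP adapter cannot consume at all.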

