Intelligent Lookup FAQ


12-21-2021

8:00 AM

Frequently Asked Questions

- What problem is Intelligent Lookup trying to solve?

- How does Intelligent Lookup solve the data harmonization problem?

- What is a pairing column? How do I choose it?

- What about those different work area tabs - matched, in review, multiple, unmatched?

- What's the difference between "Add Rule for Multiple Matches" and "Add Rule for Unmatched Records"?

- How does Intelligent Lookup deal with changing data?

- What about slowly changing or time-dependent dimensions?

- Where can I learn more? What resources exist?

What problem is Intelligent Lookup trying to solve?

Intelligent Lookup lets users harmonize datasets that share no common identifier. This is often the case when two datasets stem from different origins, like internal vs. external data or data provider A vs. data provider B. A typical example is a supplier trying to leverage sales data received from a retailer that sells its products. Very often, the retailer will not reliably share the supplier's own product ID back, but will use its own, proprietary product ID instead. Even if it does share it back, this might not be true in 100% of the cases, leaving the supplier no choice but to use other columns like product description, product category, attributes, price etc. to map the sales data back to its own product master.

Another typical case is that of region data, where special characters, abbreviations and place names in different languages make the data integrator's life pretty difficult.

In their blog post & video, SAP partner Nagarro ES go over a case of web orders that customers place either as guests or as existing customers. Orders thus might include a customer ID, but many guest orders can be traced back to known customers: they did order as guests, but their name, address or email helps identify them. This gives the retailer a 360-degree view of customer behaviour despite incomplete order data.

In this video, the SAP Data Marketplace team tries to assess the influence of tourism on a country's CO2 footprint by harmonizing a Data Marketplace asset on CO2 emissions with one on tourism turnover. Unluckily, spelling differences between country names like "Democratic Republic of Congo" and "CONGO, DEMOCRATIC REPUBLIC" prevent them from using a standard database join. Luckily for them, Intelligent Lookup takes no more than 5 minutes to solve the case.
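The country-name mismatch above is easy to reproduce. The following minimal Python sketch shows that a plain equality comparison (what a standard join does) finds no match, while a simple, hypothetical `normalize` helper that upper-cases, strips punctuation, drops filler words and sorts the remaining tokens maps both spellings to the same value. This token-sort heuristic only illustrates matching "semantically rather than technically"; it is not Intelligent Lookup's fuzzy algorithm.

```python
import re

# Filler words ignored when comparing names (an assumption for this sketch).
STOPWORDS = {"OF", "THE"}

def normalize(name: str) -> str:
    """Upper-case, keep ASCII letter runs only, drop filler words, sort tokens."""
    tokens = re.findall(r"[A-Z]+", name.upper())
    return " ".join(sorted(t for t in tokens if t not in STOPWORDS))

co2_country = "Democratic Republic of Congo"
tourism_country = "CONGO, DEMOCRATIC REPUBLIC"

# A standard equality join compares the raw strings and finds no match.
print(co2_country == tourism_country)                        # False
# After normalization both sides reduce to the same token string.
print(normalize(co2_country) == normalize(tourism_country))  # True
print(normalize(co2_country))                                # CONGO DEMOCRATIC REPUBLIC
```

A heuristic like this breaks quickly on abbreviations or non-ASCII place names, which is exactly where configurable exact and fuzzy rules come in.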

We foresee a lot of such mix & match problems, particularly when combining SAP Data Marketplace assets with SAP source data from S/4, BW, Ariba, Concur etc., simply because data governance is handled differently in different sources. This is why Intelligent Lookup is of great help in the context of SAP Data Marketplace, but subject matter experts know plenty of cases in other domains, too, where data matches semantically but not technically.

How does Intelligent Lookup solve the data harmonization problem?

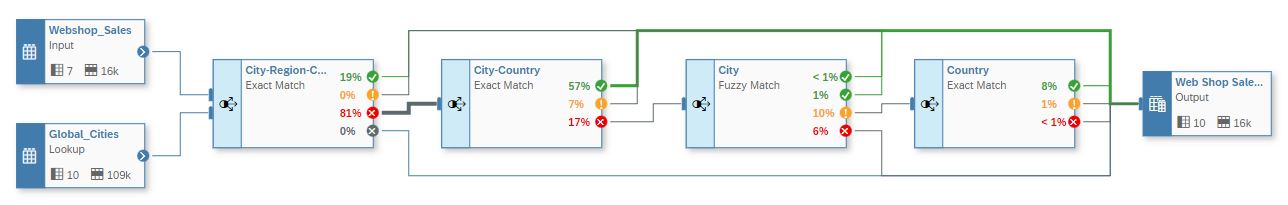

Intelligent Lookup addresses the matching problem through a series of user-designed matching rules that can be chained to arbitrary length. Each rule performs either an exact comparison of field values or a fuzzy comparison of string values. Rules can be chained such that insufficient or failed matches from one rule are piped into another rule that tries a different strategy.

In the example of this blog and highlighted in this video, the user tries to find the geo coordinates & continent for the cities in which web orders have been placed. Four rules that look at city, region and country, both exactly and fuzzily, reduce a problem that looked enormous (81% failed matches) to a small & manageable one (<2% failed; some match candidates to review & confirm) in a short amount of time.
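The chaining idea can be sketched in a few lines of Python. This is a hypothetical illustration, not Intelligent Lookup's implementation: rules are plain functions that return candidate lookup keys, an exact rule on the city name runs first, and only the records it leaves without any candidate are piped into a fuzzy rule. Python's `difflib.SequenceMatcher` ratio and the 0.8 threshold stand in for the product's own fuzzy scoring, and the `LOOKUP` data is made up.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, Iterable, List

# Hypothetical lookup entity: location key -> attributes.
LOOKUP: Dict[str, Dict[str, str]] = {
    "MUC": {"city": "München", "country": "DE"},
    "CGN": {"city": "Köln", "country": "DE"},
    "PAR": {"city": "Paris", "country": "FR"},
}

Rule = Callable[[Dict, Iterable[str]], List[str]]

def exact_city_rule(record: Dict, keys: Iterable[str]) -> List[str]:
    """Exact comparison of the city field."""
    return [k for k in keys if LOOKUP[k]["city"] == record["city"]]

def fuzzy_city_rule(record: Dict, keys: Iterable[str], threshold: float = 0.8) -> List[str]:
    """Fuzzy comparison of the city string (difflib ratio as a stand-in score)."""
    def score(k: str) -> float:
        return SequenceMatcher(None, record["city"].lower(), LOOKUP[k]["city"].lower()).ratio()
    return [k for k in keys if score(k) >= threshold]

def run_chain(records: List[Dict], rules: List[Rule]) -> Dict[int, List[str]]:
    """Apply rules in order; records without any candidate flow into the next rule."""
    results: Dict[int, List[str]] = {}
    pending = list(records)
    for rule in rules:
        still_unmatched = []
        for rec in pending:
            candidates = rule(rec, LOOKUP.keys())
            if candidates:
                results[rec["id"]] = candidates
            else:
                still_unmatched.append(rec)
        pending = still_unmatched
    for rec in pending:  # still unmatched after the last rule
        results[rec["id"]] = []
    return results

orders = [
    {"id": 1, "city": "Paris"},
    {"id": 2, "city": "Munchen"},
    {"id": 3, "city": "Springfield"},
]
print(run_chain(orders, [exact_city_rule, fuzzy_city_rule]))
# {1: ['PAR'], 2: ['MUC'], 3: []}
```

Order 1 is found by the exact rule, order 2 only by the fuzzy rule ("Munchen" vs. "München"), and order 3 stays unmatched, which is where a further rule or a manual decision would come in.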

Users can make decisions on individual cases in a work area that presents concrete match proposals and was designed primarily with end-user efficiency in mind.

Matches made by rule or through human decision-making are persisted in a "pairing table". This table represents the missing link between the two tables, i.e. it contains the identifiers & their match status that users were so dearly missing originally when they only had those two datasets. The pairing table is Intelligent Lookup's secret sauce and is ultimately what is being used at runtime when data is being requested from an Intelligent Lookup's output view. That output view is essentially a three-table join over the input table, the pairing table & the lookup table. Depending on configuration, the records currently in status multiple match & unmatched are either included or not. Including them is the default, but since no single lookup record is identified for them, no value can be determined for the requested return columns, which are therefore left NULL.

The basic relationship for the output is depicted in the join diagram above, though the inner workings of Intelligent Lookup are somewhat more complex to e.g. leverage match status & default value for the return column values.
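As a rough mental model of that output view, the sketch below behaves like a left outer join of input, pairing table and lookup table. All table contents, column names and the `include_unresolved`/`default` parameters are hypothetical. As a simplification, only a pairing in status matched fills the return column; records with several candidate pairings or with no pairing at all get NULL (`None`) or the default value, or can be excluded entirely.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical input entity: sales transactions with the retailer's product ID.
INPUT: List[Dict] = [
    {"txn": 1, "retailer_id": "R-100", "qty": 5},
    {"txn": 2, "retailer_id": "R-200", "qty": 2},
    {"txn": 3, "retailer_id": "R-100", "qty": 1},
    {"txn": 4, "retailer_id": "R-300", "qty": 7},
]

# Hypothetical pairing table: (pairing value, lookup key) -> match status.
PAIRING: Dict[Tuple[str, str], str] = {
    ("R-100", "SUP-1"): "matched",
    ("R-200", "SUP-2"): "multiple",
    ("R-200", "SUP-3"): "multiple",
}

# Hypothetical lookup entity with one return column, "category".
LOOKUP: Dict[str, Dict[str, str]] = {
    "SUP-1": {"category": "Snacks"},
    "SUP-2": {"category": "Drinks"},
    "SUP-3": {"category": "Dairy"},
}

def output_view(include_unresolved: bool = True,
                default: Optional[str] = None) -> List[Dict]:
    rows = []
    for rec in INPUT:
        # Join input -> pairing table on the pairing column (retailer_id).
        matched = [key for (value, key), status in PAIRING.items()
                   if value == rec["retailer_id"] and status == "matched"]
        if len(matched) == 1:
            # Join pairing table -> lookup entity to fetch the return column.
            rows.append({**rec, "category": LOOKUP[matched[0]]["category"]})
        elif include_unresolved:
            # No single matched lookup record: return column stays NULL/default.
            rows.append({**rec, "category": default})
    return rows

print([r["category"] for r in output_view()])                     # ['Snacks', None, 'Snacks', None]
print([r["txn"] for r in output_view(include_unresolved=False)])  # [1, 3]
```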

In essence, Intelligent Lookup is therefore a clever way to design & fill this pairing table. Once a match has been made, its value is stored in the pairing table and subsequently used without consulting the rules themselves anymore. This captures the business knowledge that users put into designing the rules & taking match decisions, honors the time-investment made by the user and thus creates a reusable data asset over time.
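The reuse behaviour boils down to memoization per pairing value. In this hypothetical sketch, `rule` stands in for the whole rule graph: it is consulted only the first time a pairing value is seen, and every later record with the same value reuses the persisted result. A manual decision is stored the same way. The class and method names are illustrative only.

```python
from typing import Callable, Dict

class PairingStore:
    """Hypothetical sketch: persist one result per pairing value and reuse it."""

    def __init__(self, rule: Callable[[str], str]):
        self.rule = rule                    # stands in for the rule graph
        self.pairings: Dict[str, str] = {}  # pairing value -> lookup key
        self.rule_calls = 0

    def match(self, pairing_value: str, lookup_key: str) -> None:
        """Manual decision by a user; persisted like a rule result."""
        self.pairings[pairing_value] = lookup_key

    def resolve(self, pairing_value: str) -> str:
        if pairing_value not in self.pairings:
            # Only unseen pairing values trigger the rules.
            self.rule_calls += 1
            self.pairings[pairing_value] = self.rule(pairing_value)
        return self.pairings[pairing_value]


# Toy rule: derive the supplier ID from the retailer ID (purely illustrative).
store = PairingStore(rule=lambda retailer_id: "SUP-" + retailer_id.split("-")[1])
store.match("R-300", "SUP-7")  # a manual decision made earlier

transactions = ["R-100", "R-200", "R-100", "R-300", "R-100"]
print([store.resolve(t) for t in transactions])
# ['SUP-100', 'SUP-200', 'SUP-100', 'SUP-7', 'SUP-100']
print(store.rule_calls)  # 2
```

Five transactions cost only two rule evaluations; the manual decision for R-300 is never overridden by the rule.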

What is a pairing column? How do I choose it?

A pairing column can be thought of in different ways:

- In a purely technical sense, it is the foreign key in the input entity that uniquely matches the key of the lookup entity.

Take the above-mentioned example of a supplier receiving point-of-sale data on product sales from a retailer and trying to match it to the supplier's product master. Once the supplier has figured out which retailer product ID matches which supplier product ID, the problem is solved for ANY sales transaction that contains the respective retailer product ID. Therefore, the retailer product ID serves as a foreign key to the supplier product ID.

- In more semantic terms, the pairing column is that column of the input dataset that uniquely identifies a record in the lookup dataset. So for any pairing value (i.e. value of the pairing column) the following is true: there is exactly one object in the lookup entity that matches that value (though finding out which one it is might be difficult; this is what the matching rules are for).

- From an efficiency standpoint, the pairing column is the granularity of reuse.

In the above example: once the system has made the link between the retailer product ID and the supplier product ID, it will use it for ALL sales transactions that come its way. No need to run any rule again, no need to ask the user for input: once that link has been established, it is there to stay (unless the user unmatches the two again, for whatever reason).

- From a deployment standpoint, the pairing column is an essential part of the definition of the pairing table. By choosing the pairing column of the input entity as well as the lookup entity itself, the user essentially designs the pairing table that we discussed above. The pairing table has the following shape:

- Its first key is the pairing column

- Its second key is the key field(s) of the lookup entity

- Its other fields are the match status and some housekeeping fields that are not relevant here
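To make this key structure concrete, here is a hypothetical Python model of the pairing table. Keying rows by (pairing value, lookup key) follows the shape described above; that this composite key is what lets one pairing value carry several candidate rows, as in a multiple match, is our reading rather than documented product internals. Status strings and class names are assumptions, and housekeeping fields are omitted.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

@dataclass
class PairingTable:
    """Hypothetical model of the pairing table's key structure."""
    pairing_column: str  # name of the chosen pairing column in the input entity
    # (pairing value, lookup key) -> match status
    rows: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def upsert(self, pairing_value: Optional[str], lookup_key: str, status: str) -> None:
        if pairing_value is None:
            # The pairing value is part of the primary key, so it cannot be NULL.
            raise ValueError("pairing value must not be NULL")
        self.rows[(pairing_value, lookup_key)] = status

    def pairings_for(self, pairing_value: str) -> Dict[str, str]:
        """All lookup keys (with status) recorded for one pairing value."""
        return {k: s for (p, k), s in self.rows.items() if p == pairing_value}


table = PairingTable(pairing_column="retailer_id")
table.upsert("R-100", "SUP-1", "matched")
table.upsert("R-200", "SUP-2", "multiple")
table.upsert("R-200", "SUP-3", "multiple")
print(table.pairings_for("R-200"))  # {'SUP-2': 'multiple', 'SUP-3': 'multiple'}

# Choosing a different pairing column means a differently keyed table:
# none of the existing rows carry over.
redesigned = PairingTable(pairing_column="gtin")
print(len(redesigned.rows))  # 0
```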

This is also why changing the pairing column deletes all mappings: it essentially changes the entire persistence of all matches! The rules, which themselves are just the agents for finding these matches, can stay entirely as they are. But the matches themselves get lost because the table that stores them is being redesigned.

The pairing column must not be confused with the match columns: the match columns are rule-specific choices for finding matches. These matches are links between a value of the pairing column and a value of the lookup key, together with a match status. Once found, they are persisted in the pairing table.

The following ground rules apply for choosing the pairing column:

- If in doubt, pick the key of the input table. This is never wrong, and you can revisit your choice later in the process.

- Once you find yourself making the same decision again and again within the same context (e.g. 15 different transactions for the same product with the same retailer product ID & product text, matched 15 independent times to the same target supplier product), you might want to reconsider your choice of pairing column and ask yourself: if I knew this field of the input entity, would it be enough to uniquely identify a record in the lookup entity? If the answer is yes, this is your pairing column.
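The question at the end of that rule of thumb amounts to a functional-dependency check, which you can run against matches you have already made. The hypothetical sketch below tests, for each candidate column, whether every value maps to exactly one lookup key and how many match decisions that column would require. Passing the check on the rows seen so far is evidence, not proof, that the column is a valid pairing column; all data and function names are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set

def is_unique_determinant(rows: Iterable[Dict[str, str]], column: str,
                          target: str = "lookup_key") -> bool:
    """True if, in the observed rows, each value of `column` maps to one lookup key."""
    targets: Dict[str, Set[str]] = defaultdict(set)
    for row in rows:
        targets[row[column]].add(row[target])
    return all(len(keys) == 1 for keys in targets.values())

def decisions_needed(rows: Iterable[Dict[str, str]], column: str) -> int:
    """Each distinct pairing value needs one match decision."""
    return len({row[column] for row in rows})


# Hypothetical, already-matched transactions.
matched: List[Dict[str, str]] = [
    {"txn": "T1", "retailer_id": "R-100", "text": "Cola", "lookup_key": "SUP-1"},
    {"txn": "T2", "retailer_id": "R-100", "text": "Cola", "lookup_key": "SUP-1"},
    {"txn": "T3", "retailer_id": "R-100", "text": "Cola", "lookup_key": "SUP-1"},
    {"txn": "T4", "retailer_id": "R-200", "text": "Cola", "lookup_key": "SUP-2"},
]

for col in ("txn", "retailer_id", "text"):
    print(col, is_unique_determinant(matched, col), decisions_needed(matched, col))
# txn True 4
# retailer_id True 2
# text False 1
```

The input key (`txn`) always passes but needs one decision per transaction; `retailer_id` also passes with fewer decisions, so it is the better pairing column; the product text fails because one text maps to two supplier products.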

Some final notes before closing this topic:

- Pairing columns must not contain NULL values, since this violates a key constraint on the pairing table (and what would you match NULL to, anyway?). If you absolutely want to use this column nonetheless, build a view on the input dataset with a calculated column that is identical to your proposed pairing column but never NULL, and use that new column as an alternative pairing column.

- Intelligent Lookup supports only a single pairing column today, though we plan to improve on this front in the future. If you need multiple pairing columns, build a view on the input entity and create a calculated column that concatenates all participating columns. In the example of the launch blog & video, I created a unique LocationID and used it as pairing column purely for educational purposes. In reality, I would have concatenated the city, region & country columns into a separate column and used that as a proxy pairing column instead.
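Both workarounds amount to a calculated column. The hypothetical Python function below mimics what such a calculated column could compute for the city/region/country case: NULL parts are replaced by empty strings, so the result is never NULL, and the parts are joined with a separator. In the view itself you would express this with the SQL functions available there; the function name and the `|` separator are assumptions.

```python
from typing import Optional

def proxy_pairing_value(*parts: Optional[str], sep: str = "|") -> str:
    """Concatenate several columns into one pairing value that is never NULL.

    NULL parts become empty strings (similar to COALESCE(col, '')); the
    separator keeps e.g. ("AB", "C") and ("A", "BC") from colliding.
    """
    return sep.join("" if p is None else p for p in parts)


print(proxy_pairing_value("Springfield", "IL", "US"))  # Springfield|IL|US
print(proxy_pairing_value("Paris", None, "FR"))        # Paris||FR
```

Pick a separator that cannot occur in the data; otherwise two different column combinations could still produce the same pairing value.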

What about those different work area tabs - matched, in review, multiple, unmatched?

Intelligent Lookup uses rules to triage records into 4 separate buckets that reflect how confident the system is in the results and how it intends to treat them in the output. To that end, the system applies each rule to all relevant records to determine their respective match bucket:

- Matched: Matched records are those for which the system is 100% confident that the matching is correct. They are either created automatically by a rule or manually by a user pressing the Confirm button in the review work area or the Match button in the work areas for multiple matches or unmatched records.

- Review: These records require inspection by the user because the system is quite sure, but not yet positive, that the proposed match is correct. They are the result of a fuzzy rule where the fuzzy algorithm found exactly one lookup record whose fuzzy score lies between the fuzzy thresholds set in the rule properties.

- Multiple: These are records for which the system found more than one lookup record for which the rule conditions held. The user needs to decide which of the records is correct, or can add another rule to have the system narrow down this list of match candidates even further. As long as no decision has been made, the system cannot determine a "correct" value for the return columns and thus sets them to NULL (or to the default value specified in the output properties).

- Unmatched: These are records for which the rule could not find a single lookup record for which the rule conditions held. The user would typically define a subsequent, alternative rule to find matches. Alternatively, she can browse the lookup records through filtering & sorting and match records manually.
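How a single fuzzy rule could sort records into these buckets is sketched below. The thresholds (0.75 and 0.95), the use of `difflib.SequenceMatcher` as the fuzzy score, and the assumption that a single candidate at or above the upper threshold is matched automatically are all illustrative choices, not Intelligent Lookup's documented behaviour.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple

# Hypothetical lookup entity: customer key -> surname.
LOOKUP: Dict[str, str] = {"C1": "Miller", "C2": "Miler", "C3": "Myller", "C4": "Smith"}

def triage(value: str, lookup: Dict[str, str],
           lower: float = 0.75, upper: float = 0.95) -> Tuple[str, List[str]]:
    """Assign one input value to a bucket with a fuzzy rule (illustrative thresholds)."""
    scores = {key: SequenceMatcher(None, value.lower(), text.lower()).ratio()
              for key, text in lookup.items()}
    # A lookup record is a candidate if its score reaches the lower threshold.
    candidates = sorted(key for key, score in scores.items() if score >= lower)
    if not candidates:
        return "unmatched", []
    if len(candidates) > 1:
        return "multiple", candidates
    key = candidates[0]
    # Single candidate: matched if confident enough, otherwise up for review.
    return ("matched" if scores[key] >= upper else "review"), [key]

for name in ("Smith", "Smyth", "Miller", "Johnson"):
    print(name, triage(name, LOOKUP))
# Smith ('matched', ['C4'])
# Smyth ('review', ['C4'])
# Miller ('multiple', ['C1', 'C2', 'C3'])
# Johnson ('unmatched', [])
```

Note that "Miller" lands in the multiple bucket even though one candidate is an exact hit: the rule only knows that several records satisfy its condition.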

What's the difference between "Add Rule for Multiple Matches" and "Add Rule for Unmatched Records"?

As written above, buckets for multiple matches and unmatched records are very different:

- a) multiple matches mean that the system found several alternative lookup records for which a rule holds (e.g. a rule based on a fuzzy comparison of surnames might yield Miller, Miler & Myller)

- b) unmatched records, on the other hand, mean that the system did not find a single lookup record for which the rule conditions held

In case a), the issue is about deciding between probable, good match candidates, whereas in case b) the issue is about finding that needle in the haystack in the first place.

For a), users could in principle work through all those match candidates manually and make those decisions. To be more efficient, they might want to employ yet another rule that limits the number of match candidates and thus makes deciding easier. If they "Add Rule for Multiple Matches", that rule will ONLY look at the match candidates and check whether they conform to the requirements of the second rule. None of the other lookup records will be considered! In the best case, the list of match candidates is reduced to a single record, which can then be set to "matched", and the case is closed. If more than one match candidate also conforms to the subsequent rule, those candidates make up the (hopefully shorter) new list of match candidates in the multiple matches tab. If none of the match candidates conforms to the new rule, the final match status is unmatched and the user needs to start from scratch, which is warranted because none of the match candidates was good enough to hold up to the second rule. Because of this reducing nature, we sometimes also talk about a "refine" rule: it refines the proposed list of match candidates with the goal of shortening it. Logically, it forms an AND condition with its predecessor rule.

For b), the issue is about finding matches, or at least match candidates, in the first place. Users who "Add Rule for Unmatched Records" therefore need to come up with a strategy for what to compare between the input record and the lookup records to suggest a match. All records in the lookup entity are potential candidates. Because this rule is entirely independent of its predecessor, we sometimes also talk about a "new" rule. Logically, it forms an OR condition with its predecessor rule.

The Intelligent Lookup UI distinguishes between both rule types by introducing the terms input scope and lookup scope.

- The input scope consists of the records from the preceding rule that "flow" into the new rule. Semantically, these are the records from the input dataset that the preceding rule flagged either as multiple matches or as unmatched. It is not possible to pipe both buckets into the same subsequent rule.

- The lookup scope consists of the records in the lookup entity that the subsequent rule considers as potential matches at all.

- For "Add Rule for Multiple Matches", the follow-up rule only looks at the match candidates and no further; these therefore constitute the lookup scope. Note that the lookup scope differs for each and every pairing value: while input record Miller fuzzily matches Miller, Miler & Myller, input record Smith fuzzily matches Smit & Smith. The follow-up rule looks at each set independently when it is its turn.

- For "Add Rule for Unmatched Records", the follow-up rule does not make any assumptions and takes all lookup records into consideration.
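The difference between the two lookup scopes can be sketched with a single helper. In this hypothetical example, a preceding fuzzy surname rule has left input record Miller with three candidates; a follow-up rule requiring the same city is then applied once as a refine rule (scope limited to those candidates, an AND with the preceding rule) and once as a new rule (scope is the whole lookup entity). Data and names are made up.

```python
from typing import Callable, Dict, List, Optional

# A rule condition: (input record, lookup record) -> does the condition hold?
Rule = Callable[[Dict[str, str], Dict[str, str]], bool]

LOOKUP: Dict[str, Dict[str, str]] = {
    "C1": {"name": "Miller", "city": "Berlin"},
    "C2": {"name": "Miler", "city": "Hamburg"},
    "C3": {"name": "Myller", "city": "Berlin"},
    "C4": {"name": "Mueller", "city": "Berlin"},
}

def apply_rule(record: Dict[str, str], rule: Rule,
               scope: Optional[List[str]] = None) -> List[str]:
    """Return the lookup keys in scope for which `rule` holds.

    scope=None -> "new" rule for unmatched records: every lookup record.
    scope=list -> "refine" rule for multiple matches: only earlier candidates.
    """
    keys = list(LOOKUP) if scope is None else scope
    return [k for k in keys if rule(record, LOOKUP[k])]

def same_city(rec: Dict[str, str], lookup_rec: Dict[str, str]) -> bool:
    return rec["city"] == lookup_rec["city"]

order = {"name": "Miller", "city": "Berlin"}
candidates = ["C1", "C2", "C3"]  # left over from a preceding fuzzy surname rule

print(apply_rule(order, same_city, scope=candidates))  # ['C1', 'C3']
print(apply_rule(order, same_city))                    # ['C1', 'C3', 'C4']
```

The refine rule narrows three candidates down to two, which would still land in the multiple matches tab; the new rule ignores the earlier result and also proposes C4, a record the surname rule had not considered.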

While this might sound opaque at first, the concept is really straightforward. If you still have questions around this, this blog post & video show a worked end-to-end example that uses both types of rules and should make things a lot clearer.

How does Intelligent Lookup deal with changing data?

Intelligent Lookup deals with changing data by reusing existing matches where available and falling back to its rule graph where matches don't exist yet. This will be the topic of a separate blog post, but here is the short answer:

Imagine a simple Intelligent Lookup graph that takes 330 input records and triages them into 100 matched records, 50 records in review, 80 records in status multiple match and 100 records unmatched.

What happens now, when 150 new records are loaded into the input dataset? What will the output view contain? In essence, this is really simple because for each and every record of the updated table, the system asks itself the following questions:

- What is the value of the record's pairing column?

- Check whether the pairing table contains a pairing for that pairing value.

- If a pairing exists for this value, use it as it is, i.e.

  - if the pairing is in status matched, the record is matched

  - if the pairing is in status review, put the record in status review

  - if the pairing is in status multiple match, put the record in status multiple match

  - if the pairing is in status unmatched, put the record in status unmatched

- If no pairing exists yet, the record is in status unprocessed. Such records are shown in grey in the first rule of the canvas and in its work area. As with unmatched records, no value can be found for the return columns of unprocessed records.
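The decision tree above is essentially a lookup with a fallback. The hypothetical sketch below assigns each incoming record the status of the pairing stored for its pairing value, or "unprocessed" if none exists, without running any rule. It simplifies the pairing table to one status per pairing value; the data is made up.

```python
from collections import Counter
from typing import Dict, Iterable

def record_status(pairing_value: str, pairings: Dict[str, str]) -> str:
    """Status of an input record, derived only from the persisted pairings."""
    return pairings.get(pairing_value, "unprocessed")

def bucket_counts(pairing_values: Iterable[str], pairings: Dict[str, str]) -> Counter:
    return Counter(record_status(v, pairings) for v in pairing_values)

# Hypothetical persisted pairings: pairing value -> status.
pairings = {"R-100": "matched", "R-200": "review", "R-300": "multiple", "R-400": "unmatched"}

# A newly loaded batch of transactions, identified by retailer product ID.
new_load = ["R-100", "R-100", "R-300", "R-400", "R-500"]
print(bucket_counts(new_load, pairings))
# Counter({'matched': 2, 'multiple': 1, 'unmatched': 1, 'unprocessed': 1})
```

Only R-500 has never been seen before, so it is the only record that would need another run of the rules.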

Note that this decision tree is evaluated in the output view of the Intelligent Lookup itself; in particular, it does not require the Intelligent Lookup to be run. In the above-mentioned sales example, this means that when new sales transactions arrive, all transactions on known & matched retailer product IDs are automatically matched to their respective supplier product ID. The same is true for multiple matches or unmatched products that are still being worked on: any new transaction on the same products is counted into the respective bucket and becomes itself a multiple match or an unmatched record. Only genuinely new, never-encountered retailer product IDs end up in the unprocessed bucket and require a new execution of the Intelligent Lookup. If we are lucky and the rules are well designed, those rules will automatically identify the respective supplier product and match it. Whatever the outcome of the rules for a specific product, eventually all IDs, including the new ones, will end up in one of our famous buckets: matched, in review, multiple match or unmatched.

What about slowly changing or time-dependent dimensions?

Intelligent Lookup today persists all the matches it can find and automatically uses these at data retrieval time. While we do envisage a concept for time-dependent dimensions and fully acknowledge its importance in practice, users can already today make clever use of Intelligent Lookup's existing features to handle time dependency:

- Delete matches: Users can delete existing matches at any time, thus cleansing the pairing table. Running the rule graph again creates and persists all matches that can be made automatically. If the rules are designed well and the data is well-behaved, only a few manual match decisions need to be made, so you get back to a proper state quickly, even on new data.

- Add time as an additional pairing column: If the semantics of a pairing value change over time (e.g. because retailers reuse the same GTIN for a new, different product), then the GTIN is obviously not a valid pairing column in and of itself; only together with the load time or some other data does the GTIN uniquely identify a given lookup record (in the supplier's product master). That combination is then the better pairing column: concatenate the original pairing column (e.g. GTIN) and a meaningful temporal column (e.g. the load date) via a new calculated column in a view that wraps the input entity, and use that new column as the pairing column instead.

Where can I learn more? What resources exist?

This blog post is your one-stop shop for all relevant resources on Intelligent Lookup.
