Disclaimer

This blog was written before SAP Data Warehouse Cloud was transformed into SAP Datasphere. The content below on the Public Data Marketplace use cases remains valid but has been significantly enhanced with the Private Data Sharing and Internal Data Marketplace scenarios that are described in this follow-up blog.

Abstract

This blog post is about a strategic feature of SAP Data Warehouse Cloud: the Data Marketplace. It helps data providers and data consumers exchange data in clicks, not projects, and thereby heavily reduces the data integration effort that currently costs time, budget, and motivation in analytics projects. It addresses use cases ranging from external data integration and harmonization to cross-company data collaboration between business partners that use SAP Data Warehouse Cloud. For the time being, commercial transactions remain outside of the Data Marketplace; the embedded license management supports a BYOL (bring your own license) scenario to onboard existing Data Provider-Data Consumer relationships as well as new ones established on the SAP Store or via the Data Provider's own sales channel.

The Data Marketplace serves as a strategic element of SAP Data Warehouse Cloud to ease the consumption of external data in order to combine it with internal (SAP) data.

This blog provides an overview of the Data Marketplace and shows the core steps of the data consumer and data provider processes, with links to detailed blogs.

Table of Contents:

- Data Marketplace Value Proposition

- Data Marketplace End-to-End Demo

- Data Marketplace Setup Overview

- End-to-End Data Marketplace Processes

- Data Marketplace Use Cases

- Data Provider and Data Product Portfolio

- Data Marketplace Roadmap

- Get Started

Disclaimer Information: In this blog post, certain topics that are foreseen as part of the product concept but not yet communicated on the roadmap are marked with "in the product vision".

1. Data Marketplace Value Proposition

1.1 Data Consumer - Access in Clicks, Not Projects.

From the realization of outside-in use cases to the creation of a holistic data platform or data warehouse, including external data is more important than ever. What do we mean by external data? Here are some examples and use cases:

- COVID-19 numbers that an HR department needs to understand the impact on its office locations

- Stock data of competitors to put internal performance data into a market perspective

- Company master data and KPIs to assess how much of the market is already addressed or to measure market attractiveness when planning a market expansion

- ESG data to understand the risks and opportunities arising from the sustainability setup of your ecosystem

- Internal data from business partners who want to share it with you as part of a business process, e.g. point-of-sale data for promotion evaluation

External Data is sourced from Commercial and Public Data Providers as well as from a Business Partner to harmonize with internal data and perspectives.

In a nutshell, by external data we mean all data that you cannot extract from your company's internal applications but that you access through an external party, which can be a commercial data provider, an open data source, or a company with which data is traded as part of a business process.

While this data is super helpful, especially in times of market disruption through innovation, pandemics, or changes in the economic climate where historical data does not help you project the future, integrating it can be super cumbersome and is today often the hurdle for data-driven innovation and cross-company collaboration. Why is this the case?

- Flat Files Chaos: External data is available in the form of flat files, whose ingestion is cumbersome and, for ongoing updates, impractical, ungoverned, and expensive

- API Skills: External data is often provided via an API, but the business department lacks the necessary skills, so the integration requires an expensive project and support from IT

- Export Struggles: External data is available in a dashboard from which it can be exported, but during import, challenges such as data type definitions create a headache

- Real-Time Setup: External data is available as a real-time query where only selected elements are required, and the technical means to provide them are lacking

- No Experience: External data, especially from business partners, needs to be exchanged, but no platform exists to exchange it in a scalable fashion, so it is most often exchanged via mail attachments

Integration challenges leave the data management and data consumption potential on the table

In a nutshell, with the current setup, the work of integrating external data at enterprise grade lies with the data consumer, and an IT project is almost always required. In reality, this leads to the use case not being realized and its potential remaining unaddressed.

With the Data Marketplace, this changes completely for the data consumer in three steps:

CONSUME. The data consumer can load external data into his SAP Data Warehouse Cloud in a few clicks without doing any integration work. All of the integration work is done by the Data Marketplace (once the Data Provider has onboarded his views). This holds true for the initial load as well as for updates published by the data provider. As a consequence, the Data Marketplace allows you to standardize the inbound flow of external data, whereas today a patchwork of different setups within a company makes it impossible to scale.

COMBINE. Once the data is integrated via the Data Marketplace, you benefit from the entire data management functionality of SAP Data Warehouse Cloud to cleanse, harmonize, and prepare the data for consumption. One feature to call out explicitly is the Intelligent Lookup, introduced in the linked blog by Jan Fetzer. It helps you bring together datasets that lack a single, technical JOIN condition, which is almost always the case with external data. Ultimately, you can then easily consume the data with SAP tools such as SAP Analytics Cloud, SAP HANA Cloud, or SAP Data Intelligence, or with third-party tools such as Jupyter Notebook, Power BI, etc.

CONTROL. Finally, by being tightly embedded into the SAP Data Warehouse Cloud platform, the Data Marketplace allows you to set up an infrastructure with which you can manage your data inbound processes at scale, e.g. by leveraging spaces. Within the Data Marketplace, you benefit from access control mechanisms that manage who can access which Data Product. This way, a provider can represent his existing contracts as a digital twin and onboard his existing customer relationships, while new ones can be created via the SAP Store.

Answers to further questions on the Data Consumer perspective can be found in Chapter 5 below or in our FAQ.

1.2 Data Provider - Easily deploy data for SAP customers

In order to realize the above-described data consumer perspective, the data providers obviously play a crucial role. But why should they care?

- User Visibility. With the growing number of potentially relevant data sources, the actual users of a data solution often do not even know about the data offerings in the market. As a data provider, you need to be visible to the end users who are pivotal in deciding which data to acquire and how.

- Share of Customer Wallet. Data Providers that have contracts with their clients always need to find an individual solution to make the data accessible within an SAP Data & Analytics infrastructure. The budgets required for data integration efforts, in-house or by consultants, endanger a potential deal or decrease its potential size.

- Adoption Risk. More and more data products are sold in a subscription or pay-per-use model. Consequently, a complex integration & usage experience endangers the adoption and customer relationship.

- Customer Journey Friction. With an inefficient data delivery mechanism, it is difficult to position and deliver additional offerings. This makes a land-and-expand approach difficult, even though most trust is built on small scenarios that prove their value and are then expanded into other units.

- Commercial Friction: With several players involved in the external data value chain, as a data provider, you need to choose your battles wisely.

How does the Data Marketplace for SAP Data Warehouse Cloud help?

- Visibility into SAP customer base: The Data Marketplace is provided as a free in-app solution within SAP's strategic public cloud data platform - SAP Data Warehouse Cloud. With the Listing in the Data Marketplace, you reach SAP's customers that look for promising data products that help them solve their problems.

- Standardized Deployment Option: With the Data Marketplace, there is a clear deployment option where the provider benefits from standardized processes that establish the same experience across data providers for the customer.

- Adoption Insurance: With the Data Marketplace, the provider's customer gets access to the data in a few clicks with the right semantics in place. This ensures a great first experience as well as ongoing usage through a process that realizes seamless consumption of data updates (without an IT project required).

- Marketing-Mix Modeling & Delivery: With the listing process in the Data Sharing Cockpit, you can efficiently design Data Products that align with your GTM strategy while keeping operating costs minimal. This way, you can start the individual customer journey with a small listing that you extend over time, all configurable via the UI.

- Flexible Data Product Licensing Options: On the one hand, you can bring your own licenses and represent them in the License Management, which means SAP takes no brokerage fee for executing data exchange via the Data Marketplace. On the other hand, to support data providers in the commercial process, options to list on the SAP Store or via the partner Datarade will be offered.

Further questions on the Data Provider perspective are answered in the end-to-end processes below or in the Provider FAQ on the SAP Help Page right here.

2. Data Marketplace End-to-End Demo

The best way to understand the value proposition of the Data Marketplace is to see it in action.

Feel free to take a look at the following 25-minute end-to-end demo video, in which two data products are loaded and an additional one is listed and delivered. In addition, the Intelligent Lookup is shown to demonstrate how native SAP Data Warehouse Cloud functionality helps you work with the acquired data in an SAP context.

3. Data Marketplace Setup Overview

To truly understand the benefits of the Data Marketplace, as a data consumer or data provider, it is important to know how it operates. This chapter gives an overview of the setup, while the following chapter provides more context on the processes.

The Data Marketplace connects all SAP Data Warehouse Cloud tenants via a central catalog, while the data delivery is orchestrated in a decentralized fashion.

First of all, all participants in the Data Marketplace need access to an SAP Data Warehouse Cloud tenant. For companies that only want to use SAP Data Warehouse Cloud to offer and deliver data in the Data Marketplace and do not want to use it internally as a data warehouse solution, a special offer is available for €830 per month, which can be requested by contacting datamarketplace@sap.com.

As a Data Provider, you use the Data Sharing Cockpit to list one or multiple SAP Data Warehouse Cloud artifacts as a so-called Data Product. For this Data Product, you can manage access via the License Management and updates via the Publishing Management. Based on this definition, a hidden "Data Product Space" is created in the Data Provider's SAP Data Warehouse Cloud Tenant.

When a Data Consumer now discovers such a Data Product that he wants to load, he needs a license key to activate it (unless it is a free product) and can select the target SAP Data Warehouse Cloud Space in which he wants to consume the Data Product. Based on this selection, the Data Marketplace now creates a database connection between the generated "Data Product Space" and the selected target space.

Subsequently, the defined artifacts are created automatically and the data is replicated. Every time the provider makes a data update available, a new replication is triggered. Federated access is currently being investigated; replication was prioritized because it mirrors the current process of flat-file delivery, in which a physical copy is shipped as part of the data contract.

This approach scales especially well if multiple customers consume the same product (or products based on the same data), as the Data Provider only needs to connect, list, publish, and update the product once, while all data consumers that have subscribed to the product benefit immediately (or on demand, if they choose to control the update flow manually).

Consequently, the Data Marketplace connects all SAP Data Warehouse Cloud customers (currently within the same landscape, e.g. EU10, US10, etc.) in a matter of clicks, without an IT project required.

4. End-to-End Data Marketplace Processes

In this chapter, you get a high-level walkthrough of the main data marketplace processes.

Further step-by-step guidance and field-level explanations can be found in the more detailed blogs.

0. Data Provider - Connect & Prepare Data for Listing

In order to make data available on the Data Marketplace, you need a deployed graphical or SQL view in your SAP Data Warehouse Cloud populated with the data that you want to ship. Based on the origin and complexity of your data product, different onboarding mechanisms are possible. In a nutshell, you can distinguish between the following 5 archetypes:

- The easiest but most manual option is to create a local table inside SAP Data Warehouse Cloud and use the Data Editor to enter data via the UI. This might be useful for small helper tables or if you need a certain party to submit a few entries in an integrated fashion, e.g. to enter reference data for which individualized data products shall be delivered.

- Upload local CSV files via the Desktop Upload functionality of SAP Data Warehouse Cloud. Once the Table is created and initially published, you can also add new data - incrementally or as a full load. This is a simple setup but also requires manual effort to realize.

- The third option might be the most common one - connecting a cloud storage provider such as AWS, Google Cloud, or Microsoft Azure and loading files (CSV, XLSX, PARQUET, etc.) via the Data Flow functionality of SAP Data Warehouse Cloud. This is especially handy as you can schedule data flows and conduct ETL processes such as cleansing the data or adjusting data types.

- The fourth option is to connect a database to SAP Data Warehouse Cloud. This can be interesting because this connection type allows a federated setup: the data is not replicated to the Data Provider's DWC tenant, but live access is established instead. Consequently, it is the provider's responsibility to keep the setup robust so that the source system can be reached whenever a data load is triggered via the Data Marketplace. That is why snapshotting is possible at view level.

- Last but not least, you can create a so-called Open SQL schema or database user in the Space Management of SAP Data Warehouse Cloud, which provides you with the credentials to connect via the HANA DB client. This allows you to write data into SAP Data Warehouse Cloud with third-party tools and then create views on the ingested tables. PM colleague Jan Fetzer wrote a nice blog on various ways to connect with Python.
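To make the Open SQL schema route a little more concrete, here is a minimal sketch, assuming a DB-API 2.0 connection to the schema. SAP's `hdbcli` Python client provides such a connection via `dbapi.connect(address=..., port=..., user=..., password=...)` with the database user credentials from Space Management; in the sketch, Python's built-in `sqlite3` stands in so it runs without a tenant. The helper `load_rows` and the table `COVID_CASES` are hypothetical and not part of any SAP API.

```python
import sqlite3
from typing import Iterable, Sequence


def load_rows(conn, table: str, columns: Sequence[str], rows: Iterable[Sequence]) -> int:
    """Insert rows into `table` through any DB-API 2.0 connection and commit.

    Hypothetical helper. Table and column names are interpolated into the SQL,
    so they must come from trusted configuration; values are bound as "?"
    parameters (qmark style, accepted by sqlite3 and, as assumed here, hdbcli).
    """
    rows = list(rows)
    column_list = ", ".join(columns)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({column_list}) VALUES ({placeholders})"
    cursor = conn.cursor()
    try:
        cursor.executemany(sql, rows)
        conn.commit()
    finally:
        cursor.close()
    return len(rows)


if __name__ == "__main__":
    # sqlite3 stands in for an hdbcli connection to the Open SQL schema.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE COVID_CASES (REGION TEXT, DAY TEXT, CASES INTEGER)")
    inserted = load_rows(
        conn,
        "COVID_CASES",
        ["REGION", "DAY", "CASES"],
        [("BW", "2022-01-01", 120), ("BY", "2022-01-01", 95)],
    )
    print(f"inserted {inserted} rows")
```

After such a load, a graphical or SQL view on top of the ingested table is what you would go on to list in the Data Marketplace.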

On top of the ingested data, you can then use the standard SAP Data Warehouse Cloud functionality to create the artifacts that you want to list in the Data Marketplace. If you want to do that in a scalable and programmatic fashion for many objects, have a look at our CLI, explained in a great blog by Jascha Kanngiesser.

From Manual Entry to Federated Access to a database - all types of SAP Data Warehouse Cloud connectivity are available for you to connect data for sharing with the Data Sharing Cockpit

In a nutshell, multiple data onboarding options are available, and you can find a full list of supported source systems right here.

1. Data Provider - Create Data Provider Profile

Once the views that you want to list in the Data Marketplace are created, the processes in the Data Sharing Cockpit start. You need to create a Data Provider Profile or assign your user to an existing one via an activation key. With the Data Provider Profile, you can describe your area of expertise, connect your LinkedIn page, and tag the Industries, Data Categories, and Regions that you serve with your data. In addition, you maintain the contact details to help curious data consumers reach out.

With the Data Provider Profile, you set the frame for all further activity in the Data Sharing Cockpit

2. Data Provider - Create Data Product Listing

Data Product Listing is the process of defining the marketing mix of your data offering. For interested data consumers, you can provide all "4Ps" as you would call it in economics: Product, Price, Promotion, Placement.

It starts with descriptive information. You can maintain a free text that describes the product, its use case, its sources, etc. With the same taxonomy as in the Data Provider profile, you can tag your product with the applicable Data Category, SAP Application, Industry, and Country to optimize the search experience and likelihood to be found. In addition, you can maintain images as well as data documentation such as a metadata catalog or KPI definition document.

Another very important asset is the sample data representation. This is currently achieved with a JSON upload; later, it will be realized with a filter on the actual data set. One or multiple samples can be maintained, ideally one for each artifact that is part of the data product, each with a maximum of 1000 records.
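A provider preparing the JSON sample upload might cap each sample at the 1000-record limit before serializing it. The sketch below assumes the upload is a JSON array of objects (one object per record, keyed by column name); the exact format the Data Sharing Cockpit expects is not described here, so treat the layout, the helper name `build_sample_json`, and the artifact names as assumptions.

```python
import json
from typing import Any, Dict, List

MAX_SAMPLE_RECORDS = 1000  # per-sample limit stated for the listing


def build_sample_json(records: List[Dict[str, Any]], limit: int = MAX_SAMPLE_RECORDS) -> str:
    """Serialize the first `limit` records as a JSON array of objects.

    The array-of-objects layout is an assumption about the upload format.
    Values json cannot encode natively (e.g. dates) are converted with str().
    """
    if not 0 < limit <= MAX_SAMPLE_RECORDS:
        raise ValueError(f"limit must be between 1 and {MAX_SAMPLE_RECORDS}")
    return json.dumps(records[:limit], ensure_ascii=False, default=str)


if __name__ == "__main__":
    # One sample per artifact of the data product (artifact names are hypothetical).
    view_rows = {
        "GDP_VIEW": [{"COUNTRY": "DE", "YEAR": 2021, "VALUE": 1.0}],
        "COUNTRY_MD": [{"COUNTRY": "DE", "NAME": "Germany"}],
    }
    samples = {artifact: build_sample_json(rows) for artifact, rows in view_rows.items()}
    print(samples["COUNTRY_MD"])
```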

A further key setting is the maintenance of the delivery information. You can decide between the following data shipment types:

- Direct Delivery: Data is shipped via the Data Marketplace into the space that is selected in the Load Dialogue. The prerequisite is that the Provider has connected his assets to his Data Provider tenant. There are then two delivery pattern options:

- One-Time: The nature of the data does not require updates or refreshes, so no publishing management is required.

- Full: Data updates are expected or planned and can be managed with the Publishing Management. Each delivery represents a full load of the existing data.

- Open SQL Delivery: The provider is able to push the data into an Open SQL schema that the Data Consumer creates. Consequently, the Data Provider does not need to onboard his data to a DWC tenant first. This makes sense especially for highly tailored products or to validate demand before investing in the Direct Delivery setup.

- External Delivery: The data product is listed in the Data Marketplace, but the delivery is handled outside of it, e.g. via flat-file delivery, cloud storage access, or dashboard access. In this setup, it is fully the provider's responsibility to deliver the data to each individual consumer and each consumer's responsibility to integrate the data themselves.

Furthermore, commercial information can be maintained. In general, the Data Marketplace runs on a "Bring your own License" setup: you can represent your existing license in the Data Marketplace to authorize access. This can be a license that a provider sells via his own sales channels or via SAP's channel, the SAP Store. Alternatively, you can set your product to "Free of Charge", which consequently does not require a license key to access it. For licensed products, you can then maintain price information as well as terms and conditions.

Last but not least, and most importantly, you select the artifacts that you want to ship in an automated fashion (for direct delivery data products). In the simplest case, this is one entire view that you ship as it is. At the same time, one data product can ship multiple views at once, e.g. transactional and master data views that can be joined on the consumer side. Furthermore, you can use data filters or column selection to base multiple data products on the same base view to fit your portfolio and GTM strategy.

Once you have defined these Data Product listing aspects, you are ready to list your product on the Data Marketplace. The entire process can be done in a few minutes if the Data Provider knows exactly what he wants to list and deliver.
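The idea of basing several data products on one view can be pictured as a row filter plus a column selection applied to the same base rows. The sketch below is a hypothetical in-memory model (`DataProductDefinition` is not an SAP API) meant only to show how one base view can back, for example, a regional product and a reduced-column product.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Sequence

Row = Dict[str, Any]


@dataclass
class DataProductDefinition:
    """Hypothetical model of a data product defined on top of a shared base view."""

    name: str
    columns: Optional[Sequence[str]] = None  # None ships all columns
    row_filter: Callable[[Row], bool] = lambda row: True  # default ships all rows

    def apply(self, base_view: List[Row]) -> List[Row]:
        """Return the rows and columns this product would ship."""
        selected = [row for row in base_view if self.row_filter(row)]
        if self.columns is None:
            return [dict(row) for row in selected]
        return [{col: row[col] for col in self.columns} for row in selected]


if __name__ == "__main__":
    base_view = [
        {"COUNTRY": "DE", "YEAR": 2021, "REVENUE": 10, "COST": 4},
        {"COUNTRY": "US", "YEAR": 2021, "REVENUE": 20, "COST": 9},
    ]
    # Two products on the same base view: one filtered by region, one with fewer columns.
    europe = DataProductDefinition("Europe", row_filter=lambda r: r["COUNTRY"] in {"DE", "FR"})
    revenue_only = DataProductDefinition("Revenue Lite", columns=["COUNTRY", "REVENUE"])
    print(europe.apply(base_view))
    print(revenue_only.apply(base_view))
```

Keeping the filter and the column list separate from the base view is what lets the provider maintain the data once while shaping several offerings from it.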

The next two steps are only required for specific Data Product setups:

- 3. Creating Releases in the Publishing Management is required for Data Products with Delivery Type "Full". Only once a data release has been defined can you set the Data Product status to "Listed".

- 4. Creating Licenses is only required for commercial data products where access shall only be granted to users who have a contractual right to it. This can be a commercial product that customers have to pay for or a free-of-charge product that should only be accessible with the data provider's explicit permission via a license.
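Summarizing which provider steps apply to which setup, a small decision function might look like the following. It is a hypothetical sketch of the rules stated in this chapter (data preparation only for Direct Delivery, releases only for "Full", licenses only for license-protected products), not a Data Sharing Cockpit feature; the enum and function names are made up.

```python
from enum import Enum
from typing import List


class Delivery(Enum):
    """Hypothetical enum of the shipment types described in step 2."""

    DIRECT_ONE_TIME = "Direct Delivery / One-Time"
    DIRECT_FULL = "Direct Delivery / Full"
    OPEN_SQL = "Open SQL Delivery"
    EXTERNAL = "External Delivery"


def provider_steps(delivery: Delivery, license_protected: bool) -> List[str]:
    """List the provider-side steps that apply to a data product setup."""
    steps = []
    # Only Direct Delivery requires the data to be connected to the provider tenant.
    if delivery in (Delivery.DIRECT_ONE_TIME, Delivery.DIRECT_FULL):
        steps.append("0. Connect & Prepare Data for Listing")
    steps += ["1. Create Data Provider Profile", "2. Create Data Product Listing"]
    # A defined release is needed before a "Full" product can be set to "Listed".
    if delivery is Delivery.DIRECT_FULL:
        steps.append("3. Create Releases")
    # Commercial products, or free products restricted to licensed users.
    if license_protected:
        steps.append("4. Create Licenses")
    return steps


if __name__ == "__main__":
    for step in provider_steps(Delivery.DIRECT_FULL, license_protected=True):
        print(step)
```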

3. Data Provider - Create Releases

Most Data Products are updated, whether frequently or infrequently. This is where non-integrated setups create effort for both data providers and data consumers: Data Providers need to create new data slices, and data consumers need to find ways to ingest the new incoming data. Reducing the friction of releasing and consuming data updates was one of the biggest motivations to build the Data Marketplace. This is why the Data Sharing Cockpit includes a dedicated module for it, the Publishing Management.

For each Data Product, the Data Provider can easily define a new release that informs the Data Marketplace that new content is available. Data Consumers who have activated the Data Product immediately receive the new data into their existing artifacts without any intervention. At the same time, a "Manual Update" mode is available on the consumer side that allows the data consumer to decide when the new data shall be ingested. The Data Provider also has the option to lock a data product while he updates his view, to make sure no inconsistent data is shipped to customers in the middle of an update.

In the first release of the Data Marketplace, a new data release always provides the full data set in the provider's view(s) to be transferred. In the product vision, you will be able to define and ship incremental releases as well.
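The release flow described above can be modeled in a few lines: each release stores a full snapshot of the provider's view, consumers in "Immediate" mode receive it right away, consumers in "Manual" mode see their copy as outdated until they trigger the update, and a locked product rejects new releases. This is a hypothetical toy model (class and method names are invented for illustration), not how the Data Marketplace is implemented.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class UpdateMode(Enum):
    IMMEDIATE = "Immediate"
    MANUAL = "Manual"


@dataclass
class Subscription:
    """A consumer space that has activated the data product (hypothetical)."""

    space: str
    mode: UpdateMode
    installed_release: int = 0
    rows: List[Dict] = field(default_factory=list)

    def is_outdated(self, latest_release: int) -> bool:
        return self.installed_release < latest_release


@dataclass
class PublishedProduct:
    """Hypothetical model of a data product with delivery pattern "Full"."""

    name: str
    locked: bool = False
    releases: List[List[Dict]] = field(default_factory=list)
    subscriptions: List[Subscription] = field(default_factory=list)

    def create_release(self, view_rows: List[Dict]) -> int:
        if self.locked:
            raise RuntimeError(f"{self.name} is locked while its view is being updated")
        # Every release is a full copy of the view, not an increment.
        self.releases.append([dict(row) for row in view_rows])
        for sub in self.subscriptions:
            if sub.mode is UpdateMode.IMMEDIATE:
                self.deliver(sub)
        return len(self.releases)

    def deliver(self, sub: Subscription) -> None:
        """Full load: replace the consumer's copy with the latest release."""
        if not self.releases:
            raise RuntimeError(f"{self.name} has no release yet")
        sub.rows = [dict(row) for row in self.releases[-1]]
        sub.installed_release = len(self.releases)
```

Because each release replaces the consumer's copy entirely, a consumer who skips several releases in "Manual" mode still ends up with a consistent data set after a single update.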

4. Data Provider - Create Licenses

Finally, the Data Provider can set up licenses that make the commercial setup actionable. For this purpose, the Data Sharing Cockpit contains the License Management module. A license entitles one or multiple users to access one or multiple data products for a limited or unlimited time. In addition, a domain check can be set up so that only users with a certain mail domain, e.g. @sap.com, can activate a specific license. For a given license, the data provider can generate as many activation keys as he likes and send them to the data consumer, who can enter the license on the specific product page or in the "My Licenses" section.

There are four major licensing models that you can execute with the License Management:

- Product Licenses: You set up a license that only contains one product and has no customer-specific settings. Each customer that buys the product license gets an activation key and can use the product.

- Product Group License: You set up a license that contains multiple data products that are licensed together and the license also has no customer-specific settings. Each customer that buys the product group license gets access to all products that are maintained in the license. The Data Provider can add new Data Products to the license at any time.

- Contract License: In contrast to the Product and Product Group Licenses, a contract license is always tied to a specific customer contract. You can maintain e.g. the Contract ID in the reference as well as the contract dates in the validity. All products that are part of the contract are maintained in the license, and only the users of the specific customer get an activation key.

- Trial License: As you can restrict the validity and product scope, you can use the License Management to set up a trial license that gives access to a product for a limited time or to selected products of a product group. In the product vision, you will be able to set a trial option in the data product, and the data consumer will be able to sign up for trial access in a self-service manner while the system generates the trial license in the background.

As the Data Products and the License Management are decoupled, any combination is possible. In the product vision, you will also be able to set a data filter within the license to restrict data access via the license to a certain data scope.
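The entitlement rules of this step (product scope, validity period, optional mail-domain check, activation keys) can be sketched as a single check. This is a hypothetical illustration; the `License` class, its fields, and the key format are assumptions, not the License Management's actual data model.

```python
import secrets
from dataclasses import dataclass, field
from datetime import date
from typing import Optional, Set


@dataclass
class License:
    """Hypothetical license record: which products, for how long, for whom."""

    products: Set[str]
    valid_from: date
    valid_to: Optional[date] = None  # None = unlimited validity
    allowed_domain: Optional[str] = None  # e.g. "sap.com"; None = no domain check
    activation_keys: Set[str] = field(default_factory=set)

    def generate_key(self) -> str:
        """Create a new random activation key (the key format is an assumption)."""
        key = secrets.token_hex(8)
        self.activation_keys.add(key)
        return key

    def permits(self, key: str, email: str, product: str, today: date) -> bool:
        """True if `email` may activate `product` with `key` on `today`."""
        if key not in self.activation_keys or product not in self.products:
            return False
        if today < self.valid_from or (self.valid_to is not None and today > self.valid_to):
            return False
        if self.allowed_domain is not None:
            domain = email.rpartition("@")[2].lower()
            if domain != self.allowed_domain.lower():
                return False
        return True
```

Under this model, the four licensing models differ only in configuration: a product license lists one product, a product group license several, a contract license the contract's products and dates, and a trial license a short validity window.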

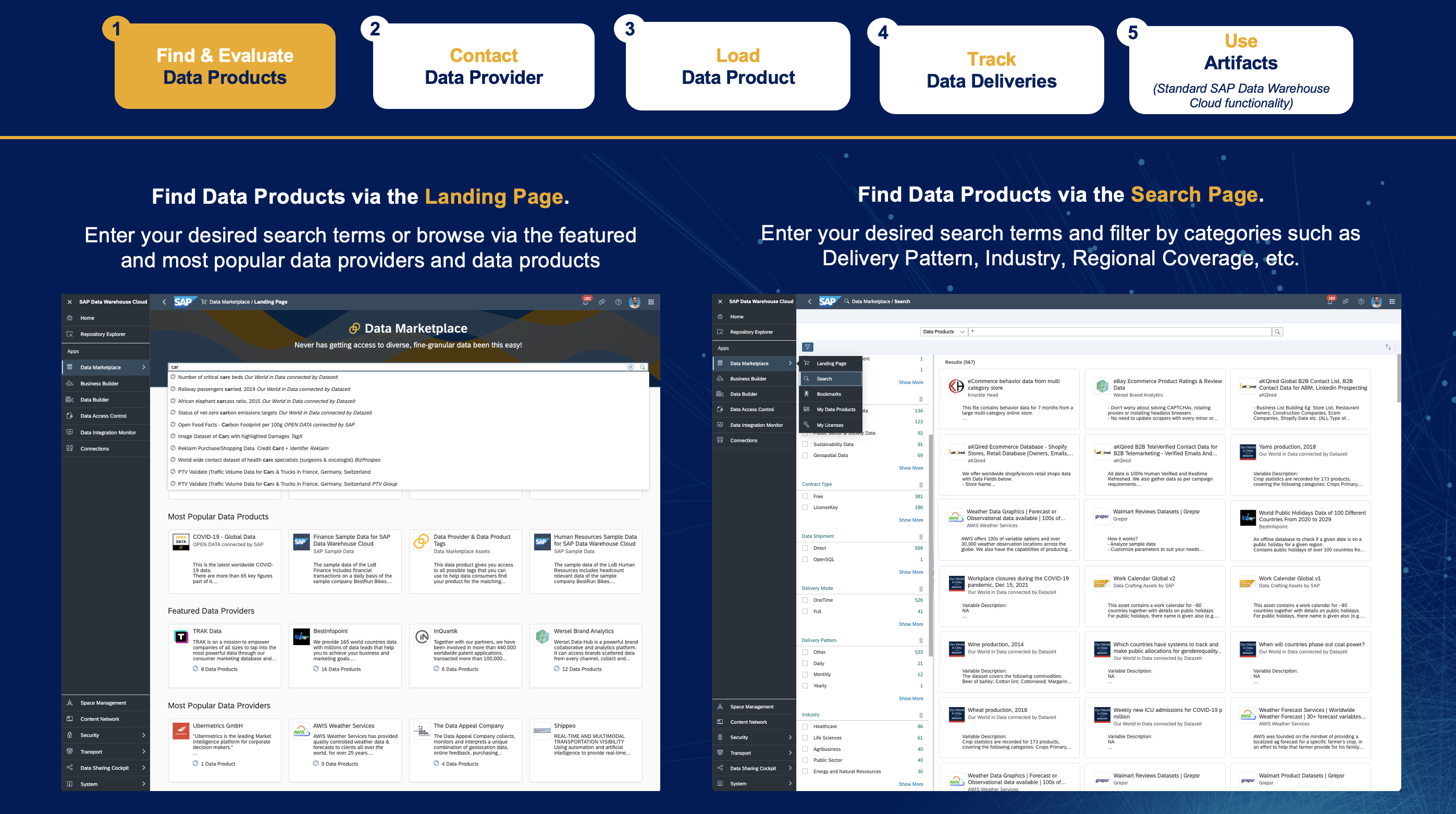

Data Consumer - 1. Find & Evaluate Data Products

On the Data Consumer side, the process starts with finding the right products with the right content for the right use case, at the right price and in the right form. To find and evaluate them, the user can start from the landing page, which always shows the most popular products and providers by number of views. In addition, SAP maintains a featured area where specific data products and data providers are highlighted for a limited period of time.

In addition to this curation, the Data Marketplace offers a search that, on the one hand, allows you to search within the titles and descriptions of Data Products and Data Providers. On the other hand, you can use filters such as Data Category, Industry, Price, Delivery Pattern, etc. to browse or narrow down a given result set.

Once a data product has been identified, the Data Product page allows the data consumer to evaluate the product based on the listing details, i.e. all fields described in Data Provider step 2, "Create Data Product Listing". Currently, the sample data can only be downloaded to the desktop as an Excel file; in the product vision, the user will be able to load it into his space and add the productive data later on.
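Conceptually, this kind of discovery combines a free-text match, facet filters, and a popularity ordering. The sketch below is a hypothetical in-memory version (naive case-insensitive substring matching, results sorted by view count); the Data Marketplace's actual search logic is not described here, and the `Listing` and `search` names are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Listing:
    """Hypothetical catalog entry with free text, a view counter, and facet tags."""

    title: str
    description: str
    views: int
    facets: Dict[str, Set[str]] = field(default_factory=dict)  # e.g. {"Industry": {"Retail"}}


def search(catalog: List[Listing], text: str = "",
           filters: Optional[Dict[str, Set[str]]] = None) -> List[Listing]:
    """Case-insensitive text match plus facet filters, most viewed first."""
    needle = text.casefold()
    filters = filters or {}
    hits = []
    for item in catalog:
        if needle and needle not in item.title.casefold() and needle not in item.description.casefold():
            continue
        # Each requested facet must share at least one value with the listing.
        if all(item.facets.get(name, set()) & wanted for name, wanted in filters.items()):
            hits.append(item)
    return sorted(hits, key=lambda item: item.views, reverse=True)
```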

Data Consumer - 2. Contact Data Provider

In many cases, the Data Product listing does not answer all of the user's questions. There are three cases in particular where this almost always happens:

- External or Open SQL Delivery Request: In scenarios where the data is not pre-integrated via the Data Sharing Cockpit, the data consumer and provider need to get in touch to align on the form of data delivery.

- Pricing Inquiry: Oftentimes, prices are not fixed per data set or even per data product; instead, the provider has more complex pricing that requires input on the data consumer's scenario.

- Individualization Request: A data product is described in general terms, while the data consumer expects an individualized data product, e.g. one that contains only records for reference data that he specifies (and delivers). Furthermore, customers request individual KPIs based on their company's calculations.

To interact, the Data Consumer can request pricing and delivery information for products that are listed with pricing "On Request" or with Delivery Type "External Delivery". This generates a mail in the data consumer's mail client with a template and the data product information prefilled to facilitate the interaction between data provider and data consumer. In addition, the user can contact the data provider with the "Mail" button in the top right corner of the data product page.

In Q2/22, a "Shop Link" is on the product roadmap for the Data Marketplace. This will allow the Data Consumer to go directly to the Data Provider's shop or to the SAP Store listing where a license can be obtained.
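The prefilled mail can be pictured as a `mailto:` link whose subject and body are percent-encoded so that any mail client opens a ready-to-send draft. The template wording, the helper name `inquiry_mailto`, and the addresses and URL in the example are invented for illustration; the Data Marketplace's actual template is not documented here.

```python
from urllib.parse import quote


def inquiry_mailto(provider_email: str, product_name: str, product_url: str, topic: str) -> str:
    """Build a mailto: link with a prefilled subject and body (hypothetical template)."""
    subject = f"Data Marketplace inquiry: {topic} for {product_name}"
    body = (
        "Hello,\n\n"
        f'I am interested in the data product "{product_name}" ({product_url}).\n'
        f"Could you please share details on {topic.lower()}?\n\n"
        "Best regards"
    )
    # safe="" percent-encodes every reserved character, including "&" and "=".
    return f"mailto:{provider_email}?subject={quote(subject, safe='')}&body={quote(body, safe='')}"


if __name__ == "__main__":
    print(inquiry_mailto("data@provider.example", "GDP by Country",
                         "https://marketplace.example/products/123", "Pricing"))
```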

Data Consumer - 3. Load Data Products

As a next step, the Data Consumer can load the Data Product in his space of choice.

- For free Data Products, only the space needs to be selected.

- For commercial Data Products, the license key needs to be entered as well.

This can be done in the Load Dialogue or in the "My Licenses" UI, as a license key can also unlock multiple Data Products at once.

In addition, the Update Type can be selected for Data Products of type "Full". The default, and initially delivered, value is "Manual", which means that the Data Consumer can manage when a new data delivery is imported into the system via the Delivery Tracking (explained in the next step). The second option is "Immediate" (planned to be rolled out in a later SAP Data Warehouse Cloud wave), which means that the import is triggered as soon as the Data Provider creates a release. In the product vision, a scheduled mode shall be offered as well, e.g. to trigger an import every day at 8am.

After the space has been selected, the Data Marketplace creates all artifacts, sets up the connection, and takes a snapshot of the data each time a load is triggered. If your use case requires real-time data access instead of a replicated data transfer, contact datamarketplace@sap.com.

There is some important information on current restrictions that are being worked on and shall disappear over time:

- A Data Product can currently be installed into a given space only once. Consequently, a space cannot be selected if the product has already been installed into it.

- The load fails if artifacts with the same technical names as the artifacts in the Data Product already exist in the space. To avoid this, load the Data Product into an empty space. The technical naming concept is currently being reworked to avoid this issue.
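Until these restrictions are lifted, a consumer could check a target space before loading. The sketch below encodes the rules from this step (license key for commercial products, one installation per space, no clashing technical names) against hypothetical in-memory inputs; the Data Marketplace performs its own validation, and `ProductToLoad` and `precheck_load` are invented names.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class ProductToLoad:
    """Hypothetical description of a data product the consumer wants to load."""

    name: str
    artifacts: Set[str]  # technical names of the views/tables shipped with the product
    free: bool


def precheck_load(product: ProductToLoad, space_objects: Set[str],
                  installed_products: Set[str], license_key: Optional[str]) -> List[str]:
    """Return human-readable problems; an empty list means the load may proceed."""
    problems = []
    if not product.free and not license_key:
        problems.append("a license key is required for commercial data products")
    if product.name in installed_products:
        problems.append(f"{product.name} is already installed in this space")
    clashes = sorted(product.artifacts & space_objects)
    if clashes:
        problems.append("technical name conflict: " + ", ".join(clashes))
    return problems


if __name__ == "__main__":
    gdp = ProductToLoad("GDP", {"GDP_VIEW", "COUNTRY_MD"}, free=False)
    print(precheck_load(gdp, space_objects={"COUNTRY_MD"}, installed_products=set(), license_key="KEY-1"))
```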

Data Consumer - 4. Track Data Deliveries

For enterprise-grade management of the external data inbound flow, the fourth step is highly important. In the "My Data Products" area, reachable via a submenu of the Data Marketplace in the left-hand SAP Data Warehouse Cloud navigation, a Data Consumer finds all this information, processes, and actions in one place.

The first perspective offered by the Data Marketplace is the My Products overview, which shows all Data Product activations per space in one central place, including Status, Delivery Mode, etc. This is also where you can deactivate products, which erases the installed data products from the space.

The second perspective offered by the Data Marketplace is the Updates section. The user can monitor and manage the update flow of all activated data products per space. Based on the Status, different actions are possible:

- With Re-initialize, you can reload the data product, especially if the delivery has the Status “Failed” or if you want to retrieve the newest set of data independent of the publishing process.

- With the Update button, especially when the Status is “Outdated” because a new data release is available, you can trigger the new load. In Update Mode “Immediate”, this happens automatically.

The third perspective offered by the Data Marketplace is the Delivery Tracking section. The main table is sorted by Last Update Date which means the newest load is on top. In the detail view, you can see all releases that are part of the Data Product and can drill down further to see the artifacts that have been shipped with the release and KPIs like duration, number of records, etc.
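The Updates and Delivery Tracking perspectives boil down to two simple rules, sketched below under assumptions: which actions a status offers, and how deliveries are ordered (newest first). Offering "Re-initialize" for every status and "Update" only for outdated products in "Manual" mode is an interpretation of the text (in "Immediate" mode the update happens automatically); `available_actions`, `Delivery`, and `tracking_view` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


def available_actions(status: str, update_mode: str) -> List[str]:
    """Actions offered in the Updates section (interpretation, not the product logic)."""
    actions = ["Re-initialize"]  # reload, e.g. after "Failed" or to fetch the newest data
    if status == "Outdated" and update_mode == "Manual":
        actions.append("Update")  # in "Immediate" mode the update runs automatically
    return actions


@dataclass
class Delivery:
    """One entry of the Delivery Tracking table (hypothetical fields)."""

    product: str
    release: int
    last_update: datetime
    records: int
    duration_s: float


def tracking_view(deliveries: List[Delivery]) -> List[Delivery]:
    """Sort by last update date so that the newest load is on top."""
    return sorted(deliveries, key=lambda d: d.last_update, reverse=True)
```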

Data Consumer - 5. Use Data Product's artifacts

The artifacts are deployed directly into the selected SAP Data Warehouse Cloud Space, which makes them accessible to the Data Modelling persona. The user can now:

- use the graphical or SQL-based view modeling capabilities

- harmonize data with the Intelligent Lookup

- access the data at HANA DB level for complex calculations, e.g. ML/AI, the spatial engine, etc.

- enrich data semantically with the Business Layer

- share the data with other Spaces

- report on the data with SAP Analytics Cloud

- open the data for 3rd Party Analytics or Data Management tools via the Database User

5. Data Marketplace Use Cases

Many people who are new to external data and data sharing are curious about which use cases this cross-tenant data exchange technology can cover. In the following, I would like to give an overview of the possible use case archetypes. For concrete examples, check the Data Provider and Data Product Portfolio chapter below.

- Outside-in Analytics: Most SAP Data Warehouse Cloud customers focus on internal (SAP) data. With the Data Marketplace, you can start to add an outside-in perspective, e.g. by adding Benchmark Data or Market Data to your SAP S/4HANA Sales & Distribution Data.

- Holistic Planning & ML/AI: In today's dynamic times, it is increasingly difficult to plan and predict your business outcomes solely by extrapolating internal data. This is where the Data Marketplace helps with external data such as macroeconomic and microeconomic data as well as environmental and geospatial data. Concrete examples are assessing future markets based on GDP, future suppliers based on ESG risk factors, or future locations of e-charging stations based on movement data.

- Cross-Company Data Collaboration: The Data Marketplace and the Data Sharing Cockpit are not only for commercial and public datasets; they can be used for B2B data exchange as well. Anyone can list Data Products to exchange data with other SAP Data Warehouse Cloud users. Due to the tight connectivity with SAP source systems, this is especially interesting when the participants want to exchange SAP data. This way, e.g., retailers can share data with manufacturers, manufacturers with suppliers, or construction companies with the many stakeholders in a building project. In the cross-company data collaboration use case, bi-directional sharing capabilities are key. Check out the demo below or the step-by-step blog on how a retailer and an e-charging station data provider exchange data bi-directionally.

- Internal Data Marketplace: Interestingly, in large multinational companies the same basic requirements for data sharing hold true as in cross-company data sharing. If you share data with another unit or subsidiary, you want to present the data product transparently and govern access to it. Consequently, the functionality of the Data Marketplace can be used for internal data exchange as well. While contracts and payment flows are the basis for license management in external data cases, internal orders and departmental missions take that role here.

- Faster Data Modelling with Data Crafting Assets: This last use case is rather special and is mentioned because it was a lesson learned from using SAP Data Warehouse Cloud in concrete use cases. Often, you miss those little helper tables that make your modelling life easier, e.g. when one view identifies countries by two-digit ISO codes and the other by country name, or when date information does not have the same granularity. This is where SAP lists data crafting assets that help users with such data modelling challenges, and we would welcome other consulting companies sharing their crafting assets as well.
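To make the data crafting idea concrete, the following Python sketch shows how a small helper table bridges the country mismatch described above: one dataset keyed by two-digit ISO codes, another keyed by country name. The helper table contents, the sample figures, and the function `join_on_country` are hypothetical; in SAP Data Warehouse Cloud you would model the same join graphically or in SQL.

```python
# Hypothetical helper table: ISO 3166-1 alpha-2 code -> country name.
COUNTRY_HELPER = {
    "DE": "Germany",
    "FR": "France",
    "US": "United States",
}


def join_on_country(
    sales_by_code: dict[str, float], metric_by_name: dict[str, float]
) -> list[tuple[str, str, float, float]]:
    """Join two datasets that identify countries differently, via the helper table."""
    rows = []
    for code, revenue in sorted(sales_by_code.items()):
        name = COUNTRY_HELPER.get(code)
        # Skip codes the helper table does not cover and countries missing in the second dataset.
        if name is None or name not in metric_by_name:
            continue
        rows.append((code, name, revenue, metric_by_name[name]))
    return rows


# Illustrative sample values only, not real figures.
sales = {"DE": 120.0, "FR": 80.0, "XX": 5.0}
metric = {"Germany": 1.0, "France": 2.0}
print(join_on_country(sales, metric))
# [('DE', 'Germany', 120.0, 1.0), ('FR', 'France', 80.0, 2.0)]
```

Rows whose code is unknown to the helper table (here `XX`) are dropped, which is the typical inner-join behavior you would also get in a graphical view.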

With the roadmap item Context Management, the Data Marketplace will gain substantial new functionality and processes to support these use cases. By defining contexts, users will be able to manage the visibility of their Data Products and thereby realize both private B2B data exchanges and internal data marketplaces.

In Context Management, the context owner can specify the type of context. The Data Marketplace provides five context types, which differ, depending on the use case, in who can see and list data products.

6. Data Provider & Data Product Portfolio

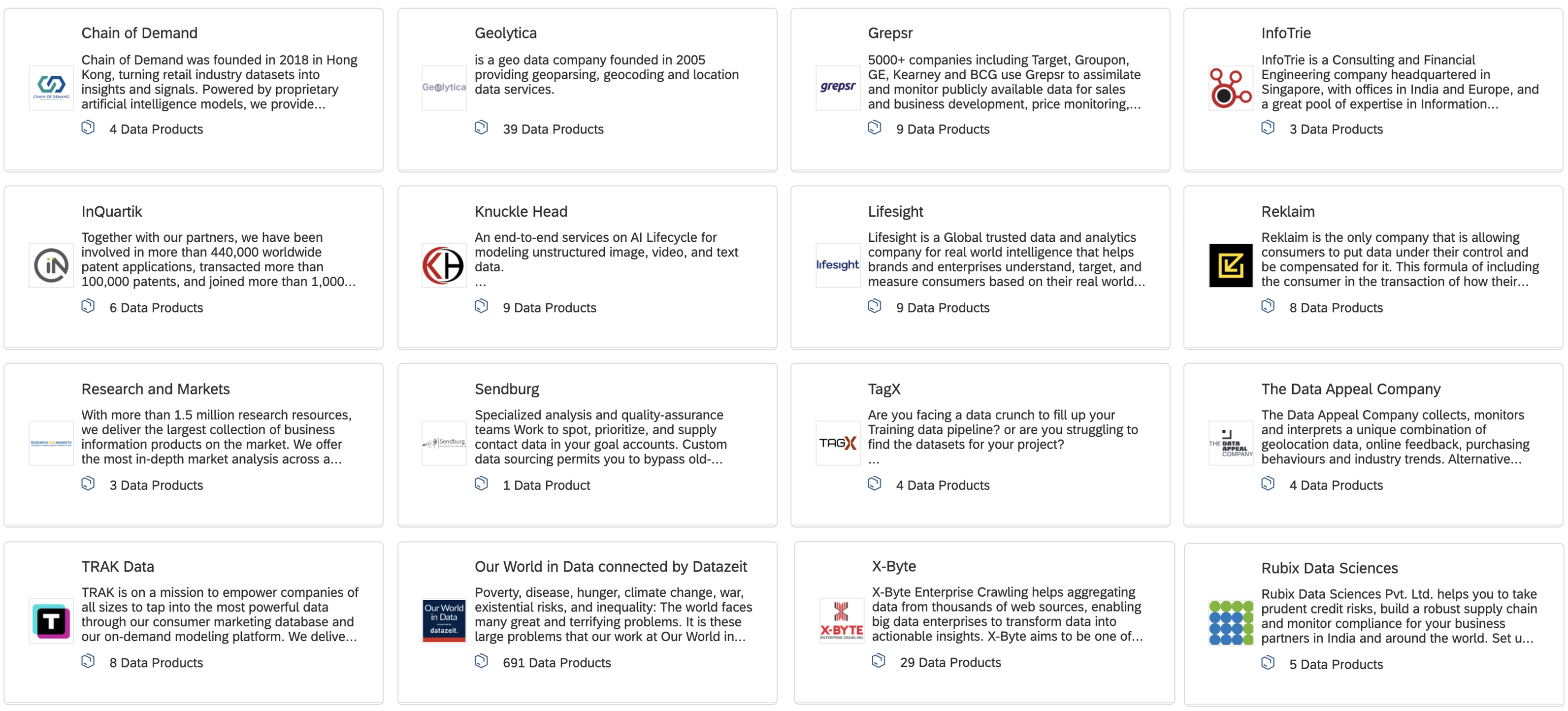

The portfolio of data providers and their listed data products evolves constantly. Especially through the cooperation with Datarade, we are able to onboard the Data Providers our customers need.

Please find the 126 Data Providers with 2,368 Data Products listed as of September 2022 below, or just go into your SAP Data Warehouse Cloud system and check them out.

SAP also lists open data from public sources under Creative Commons licenses for specific use cases, which any SAP Data Warehouse Cloud customer can load into their spaces as well.

Stay tuned for a dedicated blog that inspires how you can use such open data products for your data challenges.

8. Get Started

If you want to become a Data Provider on the Data Marketplace, reach out to datamarketplace@sap.com. There are different ways to get your listing in front of SAP customers, depending on your data assets and go-to-market (GTM) strategy.

As a Data Consumer, you can load data with any kind of access to SAP Data Warehouse Cloud. If you are not yet a customer, just check out our trial offer of up to 90 days right here.

----


SAP Managed Tags: SAP Analytics Cloud, SAP Datasphere, SAP Business Technology Platform
