S/4HANA Upgrade 1809 to 2020 – SDMI Lessons Learne...

Enterprise Resource Planning Blogs by Members


nanda_kumar21

Active Contributor


12-07-2021

8:21 PM

Introduction

We recently upgraded from S/4HANA 1809 FPS02 to 2020 FPS01, and in this blog I want to share the errors we hit with Silent Data Migration (SDMI) during this upgrade and how we troubleshot them.

I highly recommend reading Part 1 to familiarize yourself with SDMI during downtime before continuing with the rest of this blog.

Offline SDMI during SUM Downtime

Problem Statement 1

During the very first upgrade in the sandbox (a copy of production), the SUM upgrade phase MAIN_NEWBAS/PARRUN_SILENT_DATA_MIGR ran for about an hour and ended with the following error:

Checks after phase MAIN_NEWBAS/PARRUN_SILENT_DATA_MIGR were negative

Analysis

The log file SDMI_SUM_PROC_<client>.<SID> mentioned in the SUM screen is the starting point:

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "133.621.311" on "10.05.2021" at "09:36:18"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "42"

A2EEUPGBA 502 "CL_SDM_SDBIL_VBRP" client "100" finished with error on "10.05.2021" at "09:59:31"

A4 EUPGBA 506 "CL_SDM_SDBIL_VBRP": Records to be processed at end of migration: "122.281.311"

A2EEUPGBA 509 SDM stops all migrations due to a migration error

A4 EUPGBA 508 See SAP note 2725109 describes how to analyse error during SDM Migration

A4 EUPGBA 513 "CL_SDM_SDBIL_VBRP": Troubleshooting: run SLG1 in client "100" with:

A4 EUPGBA 514 - object = SDMI_MIGRATION

A4 EUPGBA 515 - External ID = "CL_SDM_SDBIL_VBRP*"

A4 EUPGBA 026 === End of process "Creating SDM User and starting SDM Migrations" log messages ===

A4 EUPGBA 001 -------------------------------------------------------------------------

A4 EUPGBA 002 " "

A4 EUPGBA 005 Report name ...: "R_SILENT_DATA_MIGR_SUM"

A4 EUPGBA 007 Start time ....: "10.05.2021" "09:04:06"

A4 EUPGBA 008 End time ......: "10.05.2021" "09:59:31"

SAP Note 2725109 essentially describes the same steps that are already in the log.

Since the system is still in downtime, you first need to unlock it with the SUM unlocksys command (refer to the upgrade guide).

Then go to SLG1 in the client where the SDMI error occurred and display the logs for object SDMI_MIGRATION, with the External ID set to the migration class name followed by * (CL_SDM_SDBIL_VBRP* in our case). The log showed:

SDM CL_SDM_SDBIL_VBRP registered for parallel execution (100%)

Starting at 10.05.2021 09:04:23

- on host dummyhost work process 28

Dispatcher Timeout: "PackSel 4363-4378" waited 300 sec for collector

Ending at 10.05.2021 09:59:31

- on host dummyhost work process 54

Records to be processed at first migration run: 142281311

Records to be processed at start of migration run: 142281311

Records to be processed at end of migration run: 122281311

We could not find any documentation that helped with this error, so we raised an SAP incident under component BC-CCM-SDM.

According to SAP Support, the root cause was that SDMI uses the parallelization framework (PFW), which started many parallel sessions, each requesting 100% of all DIA work processes. This overloaded the Resource Allocator and eventually caused PFW itself to time out.

If you have read the FAQ KBA, you may wonder why we did not simply use the options it describes for configuring the parallelization. That is not possible when SDMI runs during the downtime of an upgrade from 1809 to 2020: S/4HANA 1809 does not have the SDMI implementation, so nothing can be configured as a "prerequisite" before the upgrade. During the downtime, the SDMI phase starts automatically once the previous phase completes and runs with the default value, i.e. 100% Max. Parallel Processes per migration.

Solution

The only way to fix this problem was to implement SAP Notes 3049806 and 3060006 while we were still in the same phase with the system unlocked, followed by configuring transaction SDM_MON in the affected client (client 100 in our case):

- Select "Config Options" from the menu
- Select the following parameters:
  - client = 100
  - Migration Class Name = *
  - Select Config Parameter = Max. Parallel Processes of a migration
  - Input parameter value = 80%
- Execute [F8]

SDM_MON config

Problem Statement 2

NOTE: At the time of writing this blog, there is still no SAP Note for this problem.

For subsequent systems, we set a breakpoint at phase MAIN_NEWBAS/XPRAS_AIMMRG and implemented the notes, but when we tried to save the configuration in SDM_MON, we got the following error:

No Authority to configure SDM Settings

Analysis

This was bizarre, because we tried both DDIC and individual users with the SAP_ALL profile and kept getting the authorization error. We found nothing related to this error in SAP Notes or in the SAP Community.

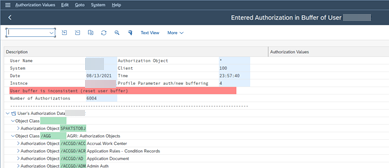

SU53 showed nothing, but we hit the jackpot in SU56: the user buffer was inconsistent. My guess is that, because we were still in the middle of the downtime, the user master part of the upgrade was probably incomplete.

SU56 - Inconsistent User Buffer

Solution

Reset the buffer in SU56 via Authorization Values > Reset User Buffer (Client), then refresh.

We were able to complete the configuration and move forward.

SU56 - Reset User Buffer (client)

Problem Statement 3

With SUM moving forward again, we started monitoring the SDMI process.

While SDMI is running, no logs are written to the /SUM/abap/logs folder. To monitor progress, watch the file SDMI_SUM_PROC_<client>.<SID> in /SUM/abap/tmp instead. Note that this file is only updated every 30 minutes.
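If you would rather not scan the file by eye every 30 minutes, the progress lines can be pulled out with a short script. This is a minimal sketch, assuming the lines look exactly like the EUPGBA 505/507 messages quoted in this blog; `parse_sdmi_progress` and `Sample` are hypothetical names, and other SUM versions may phrase these messages differently.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

# Patterns based on the EUPGBA 505/507 lines quoted in this blog (assumption:
# other SUM versions may word them differently).
RE_REMAINING = re.compile(
    r'EUPGBA 505 "(?P<cls>[^"]+)": Records still to be processed: '
    r'"(?P<recs>[\d.]+)" on "(?P<date>\d{2}\.\d{2}\.\d{4})" at "(?P<time>\d{2}:\d{2}:\d{2})"'
)
RE_TASKS = re.compile(r'EUPGBA 507 "(?P<cls>[^"]+)": Number of active tasks: "(?P<tasks>\d+)"')


@dataclass
class Sample:
    migration_class: str
    timestamp: datetime
    remaining: int
    active_tasks: Optional[int] = None


def parse_sdmi_progress(log_text: str) -> List[Sample]:
    """Extract progress samples from the text of SDMI_SUM_PROC_<client>.<SID>."""
    samples: List[Sample] = []
    for line in log_text.splitlines():
        m = RE_REMAINING.search(line)
        if m:
            samples.append(Sample(
                migration_class=m["cls"],
                timestamp=datetime.strptime(f'{m["date"]} {m["time"]}', "%d.%m.%Y %H:%M:%S"),
                remaining=int(m["recs"].replace(".", "")),  # "." is the thousands separator
            ))
            continue
        m = RE_TASKS.search(line)
        if m:
            # Attach the task count to the most recent sample of the same class.
            for s in reversed(samples):
                if s.migration_class == m["cls"]:
                    s.active_tasks = int(m["tasks"])
                    break
    return samples


if __name__ == "__main__":
    excerpt = "\n".join([
        'A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "129.958.732" on "03.06.2021" at "21:19:24"',
        'A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"',
    ])
    for s in parse_sdmi_progress(excerpt):
        print(s.migration_class, s.timestamp, s.remaining, s.active_tasks)
```

For a live run, read /SUM/abap/tmp/SDMI_SUM_PROC_<client>.<SID> (with your own client and SID) and pass its contents to the function.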

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "129.958.732" on "03.06.2021" at "21:19:24"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "114.118.732" on "03.06.2021" at "21:49:27"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "106.158.732" on "03.06.2021" at "22:19:28"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "98.658.732" on "03.06.2021" at "22:49:29"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "88.748.732" on "03.06.2021" at "23:19:31"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

A4 EUPGBA 505 "CL_SDM_SDBIL_VBRP": Records still to be processed: "81.558.732" on "03.06.2021" at "23:49:32"

A4 EUPGBA 507 "CL_SDM_SDBIL_VBRP": Number of active tasks: "34"

As you can see, performance degraded significantly over time: the number of records processed per 30-minute interval nearly halved from the first interval (about 15.8 million) to the second (about 8.0 million).
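To put numbers on the degradation, the per-interval throughput can be computed from consecutive "Records still to be processed" values. A minimal sketch using the six values from the log excerpt above; the ETA helper is a naive assumption that the latest interval's rate holds, which, as this run shows, it often does not.

```python
from typing import List


def records_per_interval(remaining: List[int]) -> List[int]:
    """Records processed between consecutive samples (one sample every 30 minutes)."""
    return [before - after for before, after in zip(remaining, remaining[1:])]


def hours_left(remaining_now: int, recent_rate_per_interval: int, interval_hours: float = 0.5) -> float:
    """Naive ETA: assumes the most recent per-interval throughput stays constant."""
    if recent_rate_per_interval <= 0:
        raise ValueError("no progress in the last interval, cannot estimate")
    return remaining_now / recent_rate_per_interval * interval_hours


if __name__ == "__main__":
    # "Records still to be processed" values from the log excerpt above.
    remaining = [129_958_732, 114_118_732, 106_158_732, 98_658_732, 88_748_732, 81_558_732]
    deltas = records_per_interval(remaining)
    print(deltas)  # [15840000, 7960000, 7500000, 9910000, 7190000]
    print(f"naive ETA: {hours_left(remaining[-1], deltas[-1]):.1f} h")  # naive ETA: 5.7 h
```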

The total runtime was more than 8 hours. Throughput dropped more often than it recovered, and the highest number of records processed in a single interval came right before the last set of records.

SUM SDMI Initial run for class CL_SDM_SDBIL_VBRP

Analysis

The SDMI_SUM_PROC_<client>.<SID> log shows that PFW was using only 34 work processes. The application server had 60 work processes, so 80% would be 48. Apparently the 80% configured earlier to solve Problem 1 is only an upper limit: PFW decides, based on internal criteria, how many processes it needs up to that limit. So the parallelization setting was not what caused the performance to degrade.
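The arithmetic behind the percentage setting is simple, but it helps to keep the cap and the actual usage apart. A minimal sketch, assuming the percentage is applied to the application server's work processes and rounded down; the exact rounding SDM/PFW uses is an assumption we did not verify, and `max_parallel_cap` is a hypothetical helper name.

```python
import math


def max_parallel_cap(total_wp: int, max_parallel_pct: float) -> int:
    """Upper limit on parallel processes for one migration.

    Assumption: floor(total * pct / 100), never below 1. The real rounding
    rule used by SDM/PFW may differ.
    """
    return max(1, math.floor(total_wp * max_parallel_pct / 100))


if __name__ == "__main__":
    total_wp = 60        # work processes on our application server
    observed_tasks = 34  # "Number of active tasks" reported in SDMI_SUM_PROC_<client>.<SID>
    cap_80 = max_parallel_cap(total_wp, 80)  # setting used to solve Problem 1
    cap_10 = max_parallel_cap(total_wp, 10)  # setting SAP recommended later
    print(cap_80, cap_10)                    # 48 6
    print(f"PFW used {observed_tasks} of {cap_80} allowed")
```

The cap only bounds what PFW may request; within it, PFW chose 34 tasks on its own.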

A quick look at the HANA database showed that threads were waiting on locks.

HANA DB Thread Status

We reported this to SAP Support, because the SDMI classes are part of the standard delivery.

SAP responded that 30+ UPDATE statements were executing simultaneously on the same column of the same unpartitioned table. These parallel changes generate a lock (called BTree GuardContainer) on the delta part of the column table.

The UPDATE statements include a FROM clause on view ZZS4_1_VBRP, so they read through that view and acquire a shared lock on the delta part of table VBRP. As a result, other UPDATE statements cannot get the exclusive lock on the same delta part. This hurts the overall performance of these updates, because from time to time some of the parallel statements have to wait for the BTree GuardContainer lock, and their threads sit in the "Exclusive Lock Enter" state.
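A rough way to see why adding parallel UPDATEs stops helping is a critical-section bound in the spirit of Amdahl's law. This is a toy model, not how HANA schedules BTree GuardContainer locks: it assumes each UPDATE spends `parallel_work` time units on work that can overlap and `locked_work` units holding one exclusive lock shared by all statements. `makespan_lower_bound` is a hypothetical name and the numbers are purely illustrative, not measured.

```python
def makespan_lower_bound(updates: int, parallel_work: float, locked_work: float, workers: int) -> float:
    """Lower bound on the time needed to finish `updates` statements.

    Toy model (assumption): the total work cannot finish faster than when it
    is spread evenly over all workers, and the locked parts of all statements
    can never overlap each other, so they run one after another.
    """
    spread = updates * (parallel_work + locked_work) / workers
    serialized = updates * locked_work
    return max(spread, serialized)


if __name__ == "__main__":
    # Illustrative only: 1 of every 5 time units of an update runs under the shared lock.
    for workers in (1, 5, 6, 34):
        print(workers, makespan_lower_bound(1000, parallel_work=4.0, locked_work=1.0, workers=workers))
```

With these made-up numbers, nothing beyond (4 + 1) / 1 = 5 workers shortens the run. The model does not even count the time threads spend waiting in the Exclusive Lock Enter state, which in practice can make a higher process count slower rather than merely no faster.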

Solution

While partitioning sounds like a potential solution, there is no guarantee that the parallel updates would work on different partitions.

So the only solution was to reduce the number of parallel update processes.

Following SAP's recommendation, we reduced Max. Parallel Processes to 10% (10% of 60 work processes = 6 work processes).

Actual SDMI runtimes

We repeated this four more times before the production run and tried values between 5 and 6, but the results were mostly consistent. Due to project constraints we could not experiment much with different configurations, but if you have the opportunity, I highly encourage you to do so in order to land the right downtime duration for your business.

The production run performed slightly better than the others because of hardware differences.

The difference in data volume between the runs was negligible, except for the first QA run.

The first QA run (blue line in the chart below) had 10M fewer records than the other runs and still took longer to process, despite having the highest peaks (80% Max. Parallel Processes), because of the lock wait problem described above.

Downtime SDMI Actuals

Summary

If you are upgrading from 1809 to 2020, or doing a similar jump upgrade:

- Implement the relevant notes before the upgrade, but more importantly, implement SAP Notes 3049806 and 3060006 during SUM phase MAIN_NEWBAS/XPRAS_AIMMRG.

- If you get authorization errors when saving the SDM_MON configuration during SUM phase MAIN_NEWBAS/XPRAS_AIMMRG, check SU56 and reset the user buffer if it is inconsistent.

- Experiment with the parallelization configuration in SDM_MON to find the optimal downtime for your business.

One would think that should be it, but no: we had issues with online SDMI after the upgrade as well. Check Part 3 for details.

Part 1 - https://blogs.sap.com/2021/12/06/s-4hana-upgrade-1809-to-2020-sdmi-lessons-learned-part-1/

Part 3 - https://blogs.sap.com/2021/12/07/s-4hana-upgrade-1809-to-2020-sdmi-lessons-learned-part-3/

Regards

Nanda


